This article was published as a part of the Data Science Blogathon

Overview

We are living in a digital world today. From the beginning of the day till we say ‘Good Night’ to our loved ones we consume loads of data either in form of visuals, music/audio, web, text, and many more sources.

Today we will explore one of these sources of data and see if we can gain information out of it. We will use ‘Text’ data which is available in abundance thanks to reviews, feedback, articles, and many other data collection/publishing ways.

We will try to see if we can capture ‘sentiment’ from a given text, but first, we will preprocess the given ‘Text’ data and make it structured since it is unstructured in row form. We need to do make text data into structured format because most machine learning algorithms work with structured data.

In this article, we will use publicly available data from ‘Kaggle’. Please use the below link to get the data.

This is going to be a classification exercise since this dataset consists of movie reviews of users labelled as either positive or negative.

Sentiment Classification

The dataset that we just discussed contains movie reviews. Each review is either labelled as positive or negative. The dataset contains the ‘text’ and ‘sentiment’ fields. These fields are separated by the ‘tab’ character. See below for details:

1. text:- Sentence that describes the review.

2. sentiment:- 1 or 0. 1 represents positive review and 0 represents negative review.

Now we will discuss the complete process of ‘sentiment classification’. Below will be the flow of the project.

- Loading The dataset

- Exploring Dataset

- Text Pre-Processing

- Build a model for sentiment classification

- Split dataset

- Make the prediction on test case

- Finding model Accuracy

Loading the Dataset

Loading the data using panda’s read_csv() method is done as follows:

import pandas as pd

import numpy as np

import warnings

warnings.filterwarnings('ignore')

train_data = pd.read_csv('sentiment_train.csv')

train_data.head(5)

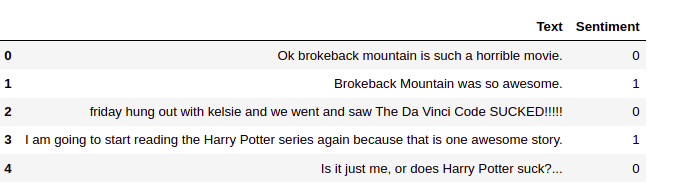

The first five records of loaded data are shown in the table below.

Above table few of the texts may have been truncated while getting output as the default column width is limited. This can be changed by setting the max_colwidth parameter to increase the width size.

Each record or example in the column sentence is called a document. Use the following code to print the first five positive sentiment documents.

pd.set_option('max_colwidth',1800)

train_data[train_data.label == 1][0:5]

The first five positive sentiments. sentiments value of 1 denotes the positive sentiments

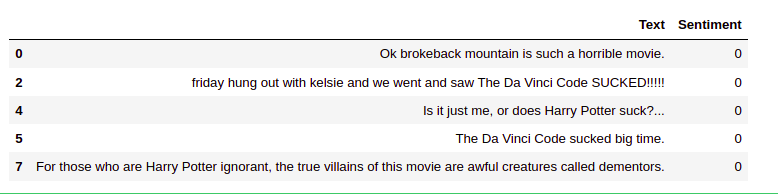

To print the first five negative sentiment documents use:

train_data[train_data.Sentiment == 1][0:5]

The first five negative sentiments. The sentiments value of 0 denotes the negative sentiments.

In the next section, we will be discussing exploratory data analysis on the text data.

Exploration of the DataSet

Exploratory data analysis can be carried out by counting the number of comments, positive comments, negative comments, etc. For example, we can check how many reviews are available in the dataset? Are the positive and negative sentiment reviews well represented in the dataset? Printing metadata of the dataframe using info() method.

train_data.info()

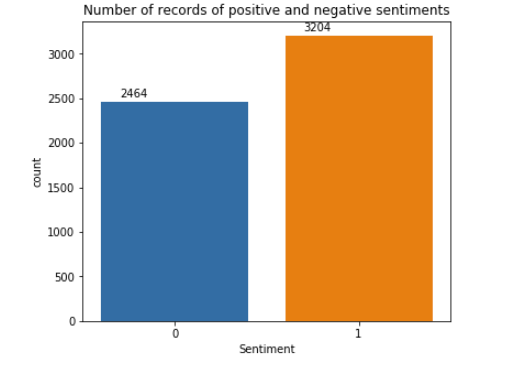

From the output, we can infer that there are 5668 records available in the dataset. we create a count plot to compare the number of positive and negative sentiments.

import matplotlib.pyplot as plt

import seaborn as sn

%matplotlib inline

plt.figure(figsize=(6,5))

plt.title("Number of records of positive and negative sentiments")

plot = sn.countplot(x = 'Sentiment', data=train_data)

for p in plot.patches:

plot.annotate(p.get_height(),(p.get_x()+0.1 ,p.get_height()+50))

From the figure, we can infer that that is a total of 5668 records in the dataset. Out of 5668 records, 2464 records belong to negative sentiments and 3204 records belong to positive sentiments. Thus positive and negative sentiment documents have fairly equal representation in the dataset.

Before building the model, text data needs preprocessing for feature extraction. The following section explains step-by-step text preprocessing techniques.

Text Pre-Processing

This section will focus on how to do preprocessing on text data. Which function have to be used to get better formate of the dataset which can apply the model on that text dataset. we have a few techniques to do this process. This article only will discuss using creating count vectors. You can follow my other article for some other preprocessing techniques apply to the text datasets.

Click here:- https://www.geeksforgeeks.org/blog/2021/08/text-preprocessing-techniques-for-performing-sentiment-analysis/#h2_3

All vectorizer classes take a list of stop words as a parameter and remove the stop words while building the dictionary or feature set. And these words will not appear in the count vector representing the documents. we will create new count vectors bypassing the stop words list.

count_vectorizer = CountVectorizer(stop_words= my_stop_words, max_features= 1000)

feature_vector = count_vectorizer.fit(train_data.Text)

train_ds_features = count_vectorizer.transform(train_data.Text)

features = feature_vector.get_feature_names()

features_counts = np.sum(train_ds_features.toarray(), axis = 0)

features_counts = pd.DataFrame(dict(features = features, counts = features_counts))

features_counts.sort_values("counts", ascending= False)[0:15]

It can be noted that the stop words have been removed. But we also notice another problem. Many appear in multiple forms. For example, love and love. The vectorizer treats the two words as separated words and hence -creates two separated features. But if a word has a similar meaning in all its forms, we can use only the root word as a feature. Stemming and lemmatization are two popular techniques that are used to convert the words into root words.

1. Stemming: This removes the difference between the inflected form of a word to reduce each word to its root form. This is done by mostly chopping off the end of words. One problem with streaming is that chopping words may result in words that are not part of the vocabulary. PorterStemmer and LancasterStemmer are two popular algorithms for streaming, which have rules on how to chop off a word.

2. Lemmatization:- This takes the morphological analysis of the words into consideration. It uses a language dictionary to convert the words to the root word. For example, stemming would fail to the difference between man and men, while lemmatization can bring these words to their original from man.

from nltk.stem.snowball import PorterStemmer

stemmer = PorterStemmer()

analyzer = CountVectorizer().build_analyzer()

def stemmed_words(doc):

stemmed_words = [stemmer.stem(w) for w in analyzer(doc)]

non_stop_words = [word for word in stemmed_words if not in my_stop_words]

return non_stop_words

CountVectorizer takes a custom analyzer for streaming and stops word removal, before creating count vectors. So, the custom function stemmed_words() is passed as an analyzer.

count_vectorizer = CountVectorizer(stop_words= my_stop_words, max_features= 1000)

feature_vector = count_vectorizer.fit(train_data.Text)

train_ds_features = count_vectorizer.transform(train_data.Text)

features = feature_vector.get_feature_names()

features_counts = np.sum(train_ds_features.toarray(), axis = 0)

features_counts = pd.DataFrame(dict(features = features, counts = features_counts))

features_counts.sort_values("counts", ascending= False)[0:15]

Print the first 15 words and their count in descending order.

It can be noted that the words love, loved, awesome have all been stemmed from the root words.

Once preprocessing is done then move forward to build the model.

Build Model for sentiment classification

We will build different models to classify sentiments.

1. Naive-Bayes classifier

2. TF-IDF VECTORIZER

Now we will discuss one by one and also will see the comparison. Let’s start

First will discuss the Naive Bayes classifier

Naive-Bayes Model For Sentiment Classification

Naive-Bayes classifier is widely used in Natural language processing and proved to give better results. It works on the concept of Baye’s theorem.

Assume that we would like to predict whether the probability of a document is positive given that the document contains a word awesome. This can be computed if the probability of the word awesome appearing in a document given that it is positive sentiment is multiplied by the probability of the document being positive.

P(doc = +ve | word = awesome) = P(word = awesome | doc = +ve) * P(doc = +ve)

The posterior probability of the sentiment is computed from the prior probabilities of all the words it contains. The assumption is that the occurrences of the words in a document are considered independent and they do not influence each other. So, if the document contains N-words and words represented as w1, w2, w3………wn then

P(doc = +ve | word = w1, w2, w3………wn) =

sklearn.naive_bayes provides a class BernoulliNB which is a Naive -Bayes classifier for multivariate BernoulliNB models. BernoulliNB is designed for binary features, which is the case here.

The steps involved in using the Naive-Bayes model for sentiment classification are as follows:

- Split the dataset into train and validation sets,

- Build the Naive-Bayes model,

- Find model accuracy.

we will discuss these in the following subsections.

Split the dataset into train and validation sets

Split the dataset into a 70:30 ratio for creating training and test datasets using the following code.

from sklearn.model_selection import train_test_split

train_x, test_x, train_y, test_y = train_test_split(train_ds_features, train_data.Sentiment,

test_size = 0.3, random_state = 42)

Build Naive-Bayes Model

Build Naive-Bayes model using the training set.

from sklearn.naive_bayes import BernoulliNB nb_clf = BernoulliNB() nb_clf.fit(train_x.toarray(), train_y)

Make a prediction on Test case

The predicted class will be the one that has the higher probability based on Naive-Baye’s Probability calculation. Predict the sentiments of the test dataset using predict() method.

test_ds_predicted = nb_clf.predict(test_x.toarray())

Finding Model Accuracy

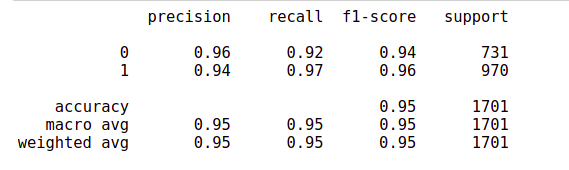

Let us print the classification report.

from sklearn import metrics print(metrics.classification_report(test_y,test_ds_predicted))

The Model is classifying with very high accuracy. Both average precision and recall is about 98% for identifying positive and negative sentiment documents. Let us draw the confusion matrix.

cm = metrics.confusion_matrix(test_y, test_ds_predicted) sn.heatmap(cm, annot=True, fmt = '.2f')

In the confusion matrix, the rows represent the actual number of positive and negative documents in the test set, whereas the columns represent what the model has predicted. Label 1 means positive sentiment and label 0 means negative sentiment.

As per the model prediction, that there are only 13 instances that are wrongly classified as a negative sentiment document and there are only 26 negative sentiment documents classified wrongly as positive sentiment documents. Rest all have been classified correctly.

The next section will discuss TD-IFD Vectorizer Model.

TF-IDF VECTORIZER

TfidfVectorizer is used to create both TF Vectorizer and TF-IDF Vectorizer. It Takes a parameter to use _idf to create TF-IDF vectors. If use _idf set to false, it will create only TF vectors and if it is set to True, it will create TF-IDF vectors.

from sklearn.feature_extraction.text import TfidfVectorizer tfidf_vectorizer = TfidfVectorizer(analyzer = stemmed_words, max_features = 1000) feature_vector = tfidf_vectorizer.fit(train_data.Text) train_ds_features = tfidf_vectorizer.transform(train_data.Text) features = feature_vector.get_feature_names()

Tf-IDF is continuous values and these continuous values associated with each class can be assumed to be distributed according to Gaussian distribution. So Gaussian Naive-Bayes can be used to classify these documents. We will GaussianNB, which implements the Gaussian Naive_bayes algorithm for classification.

from sklearn.naive_bayes import GaussianNB

train_x, test_x, train_y, test_y = train_test_split(train_ds_features, train_data.Sentiment,

test_size = 0.3, random_state = 42)

nb_clf = GaussianNB() nb_clf.fit(train_x.toarray(), train_y)

test_ds_predicted = nb_clf.predict(test_x.toarray()) print(metrics.classification_report(test_y,test_ds_predicted))

cm = metrics.confusion_matrix(test_y, test_ds_predicted) sn.heatmap(cm, annot=True, fmt = '.2f')

The precision and recall seem to be pretty much the same. The accuracy is very high in this example as the dataset is clean and carefully curated. But it may not be the case in the real world.

Conclusion

In this article, Text data is unstructured data and needs extensive preprocessing before applying models. Naive-Bayes classification Models are the most widely used algorithm for classifying texts. The next article will discuss some challenges of text analytics using few techniques such as using N-Grams.

About the Author

Hi, I am Kajal Kumari. I have completed my Master’s from IIT(ISM) Dhanbad in Computer Science & Engineering. As of now, I am working as Machine Learning Engineer in Hyderabad. You can also check out few other blogs that I have written here.

The media shown in this article are not owned by Analytics Vidhya and are used at the Author’s discretion.