This was originally posted on the Silicon Valley Data Science blog.

Options for Developing with Deep Learning

Modern artificial intelligence makes many benefits available to business, bringing cognitive abilities to machines at scale. As a field of computer science, AI is moving at an unprecedented rate: the time you must wait for a research result in an academic paper to translate into production-ready code can now be measured in mere months. However, with this velocity comes a corresponding level of confusion for newcomers to the field. As well as developing familiarity with AI techniques, practitioners must choose their technology platforms wisely. This post surveys today’s foremost options for AI in the form of deep learning, examining each toolkit’s primary advantages as well as their respective industry supporters.

Machine learning and deep learning

Contemporary AI workloads can be divided into two classes: machine learning and deep learning. The first of these classes, which accounts for the overwhelming majority of current use, is machine learning. It encompasses the most common algorithms used by data scientists: linear models, k-means clustering, decision trees, and so on. Though we now talk of them as part of AI, these are the techniques data scientists have been using for a long time.

The second class of AI workload has received considerably more attention and hype in the last two years: a specialization of one machine learning technique, neural networks, known as deep learning. Deep learning is fueling the interest in AI, or “cognitive” technologies, with applications such as image recognition, voice recognition, automatic game-playing, and self-driving cars as well as other autonomous vehicles. Typically, these applications require vast amounts of data to feed and train complex neural networks.

Options for machine learning

Toolkits to handle this first class of workloads are integrated into every statistics package in common use. Commercial offerings include SAS, SPSS, and MATLAB. Common open-source tools include R and Python; the big data platforms Apache Spark and Hadoop also have their own toolkits for parallel machine learning (Spark’s MLlib and Apache Mahout). Currently, Python is emerging as the most popular programming language for data science in industry, thanks to projects such as scikit-learn and Anaconda.
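To make this first class of workload concrete, here is a minimal sketch of k-means clustering, one of the algorithms named earlier, written in plain Python. It is illustrative only: the function names `nearest` and `kmeans`, the sample points, and the choice to seed the centroids with the first `k` points are all assumptions made for this example. In practice you would reach for a library implementation such as the one in scikit-learn rather than writing your own.

```python
def nearest(point, centroids):
    """Return the index of the centroid closest to point (squared Euclidean distance)."""
    distances = [sum((a - b) ** 2 for a, b in zip(point, c)) for c in centroids]
    return distances.index(min(distances))


def kmeans(points, k, max_iterations=100):
    """Cluster points into k groups by alternating assignment and update steps."""
    # Assumption for this sketch: seed the centroids with the first k points.
    centroids = [tuple(p) for p in points[:k]]
    labels = []
    for _ in range(max_iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [nearest(p, centroids) for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for j in range(k):
            members = [p for p, label in zip(points, labels) if label == j]
            if members:
                mean = tuple(sum(col) / len(members) for col in zip(*members))
                new_centroids.append(mean)
            else:
                new_centroids.append(centroids[j])  # leave an empty cluster in place
        if new_centroids == centroids:
            break  # converged: no centroid moved
        centroids = new_centroids
    return centroids, labels


# Two visibly separate groups of made-up 2-D points.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
centroids, labels = kmeans(points, k=2)
print(labels)
print(centroids)
```

The whole algorithm fits in a couple of dozen lines and needs no special hardware, which is part of why this class of technique is so widely deployed.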

Options for deep learning

The landscape of deep learning toolkits is evolving rapidly. Both academia and data giants, such as Google, Baidu, and Facebook, have been investing in deep learning for years, and as a result there are multiple strong alternatives, leaving the newcomer with many choices. Here’s a rundown of the main contenders, each of which has different strengths and its own ecosystem.

- TensorFlow: from Google, and the highest profile at the time of writing. It’s a “second generation” deep learning library, built by those experienced with earlier frameworks. TensorFlow is very accessible from Python, and includes the TensorBoard tool, which lends a strong advantage in debugging and inspecting networks. The XLA compilation tool optimizes the execution of models, and TensorFlow Mobile brings machine learning support to low-powered mobile devices.

- MXNet: has high profile adoption, spearheaded by Amazon Web Services, and is integrated with many programming languages. MXNet has been accepted into the Apache Incubator, which puts it on track to eventually become a top-level Apache project.

- Deeplearning4j: a commercially supported deep learning framework with strong performance in a Java environment, making it attractive for enterprise applications.

- Torch: a powerful framework in use at places such as Facebook and Twitter, but written in Lua, with less first-class support for other programming languages.

- PyTorch: a descendant of Torch that “puts Python first,” PyTorch brings Torch into the popular Python ecosystem of data science. Released in 2017, it claims Facebook and Twitter among its backers. PyTorch supports dynamic computational graphs, something not currently available in TensorFlow, and provides a smoother development flow than non-dynamic alternatives.

- CNTK: Microsoft’s offering in the deep learning space, with Python and C++ APIs (Java is also available experimentally).

- Caffe: brings an emphasis on computer vision applications. Core language is C++, with a Python interface.

- Theano: one of the oldest deep learning frameworks, written in Python. Used broadly in academia, but isn’t that well suited to production use.
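The dynamic computational graphs mentioned in the PyTorch entry can be illustrated with a toy example. The sketch below is not PyTorch code and makes no claim about how PyTorch is implemented: the `Value` class and the `model` function are hypothetical names invented for this illustration. It shows the general define-by-run idea, in which the graph is recorded while ordinary Python code executes, so loops and conditionals can change its shape on every call.

```python
class Value:
    """A scalar that records the operations applied to it as they run."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self.parents = parents
        self.backward_fn = None  # pushes this node's gradient to its parents

    def __add__(self, other):
        out = Value(self.data + other.data, (self, other))

        def backward_fn():
            self.grad += out.grad
            other.grad += out.grad

        out.backward_fn = backward_fn
        return out

    def __mul__(self, other):
        out = Value(self.data * other.data, (self, other))

        def backward_fn():
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out.backward_fn = backward_fn
        return out

    def backward(self):
        """Order the recorded graph topologically, then apply the chain rule."""
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node.backward_fn is not None:
                node.backward_fn()


def model(x, w):
    # Plain Python control flow decides the graph's shape: the number of
    # multiplications recorded depends on the input value.
    y = x
    while y.data < 10.0:
        y = y * w
    return y


x, w = Value(1.5), Value(2.0)
y = model(x, w)   # records three multiplications: y = x * w * w * w
y.backward()
print(y.data, x.grad, w.grad)
```

Calling `model(Value(6.0), Value(2.0))` instead would record a single multiplication, so the same code produces a different graph. This flexibility is what makes the define-by-run style convenient for debugging and for models whose structure depends on their input.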

Many of these deep learning frameworks operate at a lower level than everyday developers would like, and so have higher-level libraries on top of them that make them friendlier to use. The most important of these is Keras, a Python library that supports the creation of deep learning applications that can run on any of TensorFlow, Theano, CNTK, or Deeplearning4j.

Apple’s entry into machine learning is also worth a mention. In contrast to the above toolkits, Apple provides only the execution framework for models. Developers must train their models with toolkits such as Caffe, Keras, or scikit-learn, and then convert them so apps can use them through Apple’s CoreML.

Where to start?

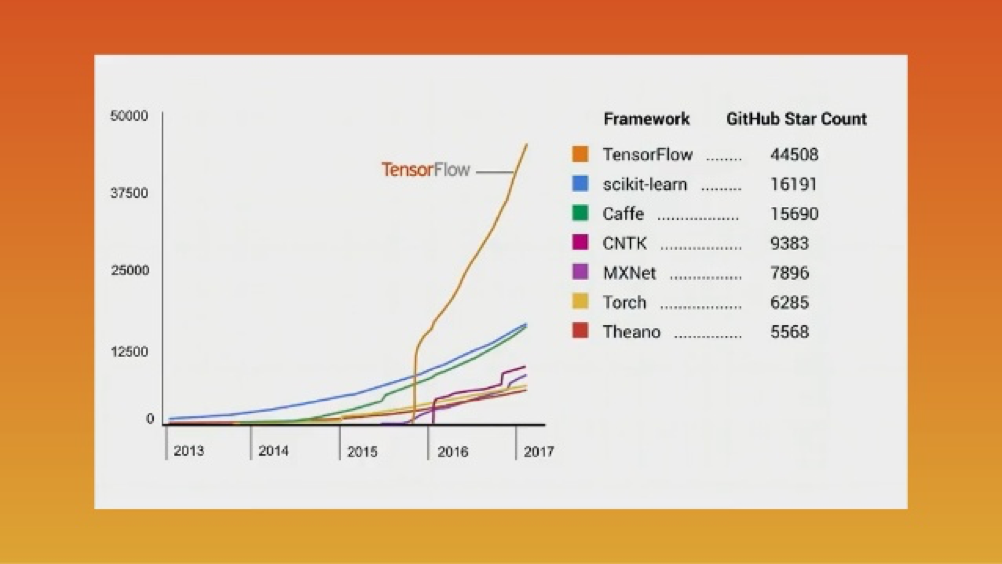

TensorFlow GitHub star count, Feb 2017. Source: GDG-Shanghai 2017 TensorFlow Summit.

If you have no specific reason to choose any of the alternatives, then the Keras and TensorFlow combination appears the strongest default choice going forward, on account of its developer experience, Google’s reputation in AI, and the importance of the Python ecosystem. TensorFlow’s rapid growth in popularity is likely to ensure it is compatible with the broadest range of data tools in the near term. For example, see Databricks’ recent announcement of TensorFlow and Keras support for deep learning in Spark.

However, Amazon Web Services’ strong backing of MXNet makes it one to continue watching, as it grows and migrates into an Apache project; and Microsoft’s strong foothold with Azure makes CNTK a significant effort.

Deep learning is a rapidly growing field, and each of the cloud providers sees pre-eminence in machine learning as a strategic goal. Fortunately, they are all choosing open source approaches, which supports accessibility and longevity for any projects that adopt their toolkits. Together with the increasing availability of deep-learning-focused compute resources in clouds and GPUs, this is great news for anyone wanting to start exploring deep learning.