This article was published as a part of the Data Science Blogathon

Introduction

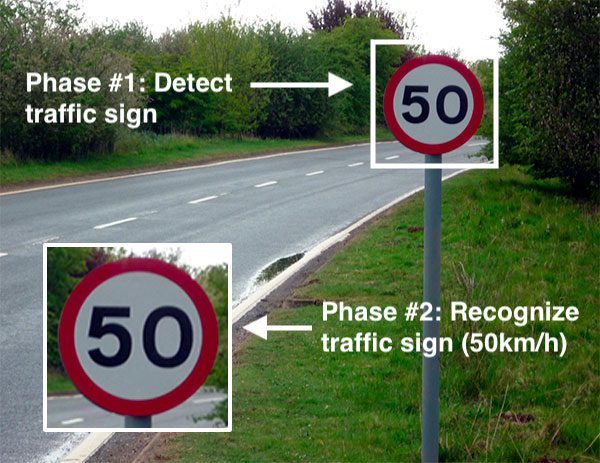

In this era of Artificial Intelligence, humans are becoming increasingly dependent on technology. Companies such as Google, Tesla, Uber, Ford, Audi, Toyota, Mercedes-Benz, and many more are working on automating vehicles and making autonomous (driverless) vehicles more accurate. You may already know about self-driving cars, in which the vehicle itself acts as the driver and needs no human guidance on the road. Concerns about safety are reasonable, since errors made by a machine could cause serious accidents. Researchers are therefore developing many algorithms to make these vehicles as safe and accurate as possible. One such algorithm is Traffic Sign Recognition, which we discuss in this blog.

When you drive, you see various traffic signs, such as traffic signals, turn left or right, speed limits, no passing for heavy vehicles, no entry, and children crossing. You need to follow them to drive safely. Autonomous vehicles likewise have to interpret these signs and act on them correctly. The task of recognizing which class a traffic sign belongs to is called traffic sign classification.

In this Deep Learning project, we will build a model that classifies the traffic signs in images into categories using a convolutional neural network (CNN) and the Keras library.

Image 1

Dataset for Traffic Sign Recognition

The image dataset consists of more than 50,000 images of various traffic signs (speed limits, crossings, traffic signals, etc.) divided into 43 different classes. The classes are imbalanced: some have very few images, while others have a large number. The download is relatively small, at around 314.36 MB. It contains two separate folders, train and test; the train folder has one subfolder per class, and each subfolder contains that class's images.

Image 2
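Because the classes are imbalanced, it can be worth checking the per-class counts yourself before training. The sketch below is not part of the original project; it assumes the train/<class id> folder layout described in this section, and count_images_per_class is a hypothetical helper name.

```python
import os
from collections import Counter


def count_images_per_class(train_dir, num_classes=43):
    """Return a Counter mapping class id -> number of files in train_dir/<class id>.

    Assumes one subfolder per class, named 0..num_classes-1.
    Missing subfolders are counted as 0.
    """
    counts = Counter()
    for class_id in range(num_classes):
        class_dir = os.path.join(train_dir, str(class_id))
        if os.path.isdir(class_dir):
            counts[class_id] = sum(
                1 for name in os.listdir(class_dir)
                if os.path.isfile(os.path.join(class_dir, name))
            )
        else:
            counts[class_id] = 0
    return counts


if __name__ == "__main__":
    counts = count_images_per_class(os.path.join(os.getcwd(), "train"))
    smallest = min(counts, key=counts.get)
    largest = max(counts, key=counts.get)
    print(f"Total images: {sum(counts.values())}")
    print(f"Smallest class: {smallest} ({counts[smallest]} images)")
    print(f"Largest class: {largest} ({counts[largest]} images)")
```

Run it from the folder that contains train to see how uneven the class sizes are.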

You can download the Kaggle dataset for this project from the link below.

Prerequisites for Traffic Sign Recognition

To understand this project, you should have some prior knowledge of deep learning libraries, the Python language, and CNNs. If you are not familiar with these topics, the following tutorials can help:

Deep Learning- https://www.geeksforgeeks.org/blog/2021/05/a-comprehensive-tutorial-on-deep-learning-part-1/

Python- https://www.geeksforgeeks.org/blog/2016/01/complete-tutorial-learn-data-science-python-scratch-2/

CNN- https://www.geeksforgeeks.org/blog/2018/12/guide-convolutional-neural-network-cnn/

Install all these packages to begin with the project:

```bash
pip install tensorflow keras scikit-learn matplotlib pandas pillow
```

Workflow

We will follow these four steps to build our traffic sign classification model:

- Dataset exploration

- CNN model building

- Model training and validation

- Model testing

Dataset exploration

Our 'train' folder contains 43 subfolders (numbered 0 to 42), and each subfolder represents a different class. We use the os module to iterate over all the images together with their class labels, and the PIL library to load each image's contents into an array.

```python
import os

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import tensorflow as tf
from PIL import Image
from sklearn.model_selection import train_test_split
from keras.utils import to_categorical
from keras.models import Sequential
from keras.layers import Conv2D, MaxPool2D, Dense, Flatten, Dropout

data = []
labels = []
classes = 43
cur_path = os.getcwd()

# Each subfolder train/0 ... train/42 holds the images of one class
for i in range(classes):
    path = os.path.join(cur_path, 'train', str(i))
    images = os.listdir(path)
    for a in images:
        try:
            # os.path.join works on every OS, unlike a hard-coded '\' separator;
            # convert('RGB') guarantees 3 color channels
            image = Image.open(os.path.join(path, a)).convert('RGB')
            image = image.resize((30, 30))
            data.append(np.array(image))
            labels.append(i)
        except Exception as e:
            print(f"Error loading image {a}: {e}")

# Convert the lists into NumPy arrays for Keras
data = np.array(data)
labels = np.array(labels)
```

We store every image and its label in lists, then convert the lists into NumPy arrays, because the model is fed NumPy arrays. The shape of data is (39209, 30, 30, 3): 39209 is the number of images, 30×30 is the image size in pixels, and 3 is the number of color channels (RGB).
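To see where the (N, 30, 30, 3) shape comes from, the following sketch repeats the resize-and-convert step on two synthetic images instead of real dataset files. The helper name to_model_array is illustrative only. Note that PIL's resize() takes (width, height), while the resulting NumPy array is indexed as (height, width, channels); for a square 30×30 target the two agree.

```python
import numpy as np
from PIL import Image


def to_model_array(img, size=(30, 30)):
    """Resize a PIL image and return it as a (height, width, 3) uint8 array."""
    return np.array(img.convert("RGB").resize(size))


# Two synthetic images of different sizes stand in for real traffic-sign photos.
images = [Image.new("RGB", (64, 48), color=(255, 0, 0)),
          Image.new("RGB", (35, 40), color=(0, 0, 255))]
data = np.array([to_model_array(img) for img in images])
print(data.shape)  # (2, 30, 30, 3): images, height, width, RGB channels
```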

```python
print(data.shape, labels.shape)

# Splitting the data into training and validation sets
X_t1, X_t2, y_t1, y_t2 = train_test_split(data, labels, test_size=0.2, random_state=42)
print(X_t1.shape, X_t2.shape, y_t1.shape, y_t2.shape)

# Converting the labels into one-hot encoding
y_t1 = to_categorical(y_t1, 43)
y_t2 = to_categorical(y_t2, 43)
```

Output
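One-hot encoding turns each integer label into a vector of 43 values with a single 1 at the label's index, which is the format the categorical_crossentropy loss expects. A minimal NumPy sketch of the same idea (one_hot is a hypothetical stand-in for to_categorical, not its actual implementation):

```python
import numpy as np


def one_hot(labels, num_classes):
    """Row i of the identity matrix is the one-hot vector for label i."""
    labels = np.asarray(labels, dtype=int)
    return np.eye(num_classes, dtype="float32")[labels]


y = one_hot([0, 2, 42], 43)
print(y.shape)        # (3, 43)
print(y[1].argmax())  # 2
```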

CNN model building

We build a CNN model to classify the images into their respective categories.

The architecture of our model is:

- Two Conv2D layers (filters=32, kernel_size=(5,5), activation="relu")

- MaxPool2D layer (pool_size=(2,2))

- Dropout layer (rate=0.25)

- Two Conv2D layers (filters=64, kernel_size=(3,3), activation="relu")

- MaxPool2D layer (pool_size=(2,2))

- Dropout layer (rate=0.25)

- Flatten layer

- Dense fully connected layer (256 nodes, activation="relu")

- Dropout layer (rate=0.5)

- Dense output layer (43 nodes, activation="softmax")

```python
# Building the model
model = Sequential()
model.add(Conv2D(filters=32, kernel_size=(5, 5), activation='relu', input_shape=X_t1.shape[1:]))
model.add(Conv2D(filters=32, kernel_size=(5, 5), activation='relu'))
model.add(MaxPool2D(pool_size=(2, 2)))
model.add(Dropout(rate=0.25))
model.add(Conv2D(filters=64, kernel_size=(3, 3), activation='relu'))
model.add(Conv2D(filters=64, kernel_size=(3, 3), activation='relu'))
model.add(MaxPool2D(pool_size=(2, 2)))
model.add(Dropout(rate=0.25))
model.add(Flatten())
model.add(Dense(256, activation='relu'))
model.add(Dropout(rate=0.5))
model.add(Dense(43, activation='softmax'))

# Compilation of the model
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
```
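The output shape of each layer follows from the architecture: with no padding, a 5×5 convolution shrinks each side by 4 pixels and a 3×3 convolution by 2, while 2×2 max pooling halves the side (rounding down). The plain-Python sketch below traces a 30×30×3 input through the layers and counts trainable parameters; Dropout layers are omitted because they neither change the shape nor add parameters. Assuming Keras's default 'valid' padding and stride settings, its total of 242,251 should agree with what model.summary() reports.

```python
def conv_out(size, kernel):
    """Side length after a 'valid' (unpadded) convolution with stride 1."""
    return size - kernel + 1


def pool_out(size, pool):
    """Side length after 'valid' max pooling whose stride equals the pool size."""
    return (size - pool) // pool + 1


def summarize(input_size=30, channels=3, num_classes=43):
    """Trace (layer, output shape, trainable params) through the CNN described above."""
    size, ch = input_size, channels
    rows = []
    spec = [("conv", 32, 5), ("conv", 32, 5), ("pool", 2),
            ("conv", 64, 3), ("conv", 64, 3), ("pool", 2)]
    for layer in spec:
        if layer[0] == "conv":
            _, filters, k = layer
            params = (k * k * ch + 1) * filters  # kernel weights plus one bias per filter
            size, ch = conv_out(size, k), filters
            rows.append(("Conv2D", (size, size, ch), params))
        else:
            size = pool_out(size, layer[1])
            rows.append(("MaxPool2D", (size, size, ch), 0))
    flat = size * size * ch
    rows.append(("Flatten", (flat,), 0))
    rows.append(("Dense", (256,), (flat + 1) * 256))
    rows.append(("Dense", (num_classes,), (256 + 1) * num_classes))
    return rows


for name, shape, params in summarize():
    print(f"{name:10s} {str(shape):14s} {params}")
print("Total params:", sum(p for _, _, p in summarize()))
```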

Model training and validation

To train our model, we call the model.fit() method once the model architecture has been built and compiled. Training with a batch size of 32 for 15 epochs, as in the code below, the model reached about 95% accuracy on the training set, and its accuracy had stabilized by the 15th epoch.

```python
eps = 15
anc = model.fit(X_t1, y_t1, batch_size=32, epochs=eps, validation_data=(X_t2, y_t2))
```

Output
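As a rough check on what model.fit() is doing: with test_size=0.2, train_test_split rounds the validation share up, so 39,209 images split into 31,367 for training and 7,842 for validation, and a batch size of 32 gives 981 weight updates per epoch (the last batch is partial). The arithmetic as a small sketch, where split_sizes is an illustrative helper rather than a library function:

```python
import math


def split_sizes(n_samples, test_size=0.2):
    """Train/validation sizes for a float test_size, rounding the test split up
    (the convention scikit-learn's train_test_split uses)."""
    n_test = math.ceil(test_size * n_samples)
    return n_samples - n_test, n_test


n_train, n_val = split_sizes(39209)
steps = math.ceil(n_train / 32)  # batch_size=32; the final batch is smaller
print(n_train, n_val, steps)  # 31367 7842 981
```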

```python
# Plotting graphs for accuracy (fit() stores per-epoch metrics in anc.history)
plt.figure(0)
plt.plot(anc.history['accuracy'], label='training accuracy')
plt.plot(anc.history['val_accuracy'], label='val accuracy')
plt.title('Accuracy')
plt.xlabel('epochs')
plt.ylabel('accuracy')
plt.legend()
plt.show()

# Plotting graphs for loss
plt.figure(1)
plt.plot(anc.history['loss'], label='training loss')
plt.plot(anc.history['val_loss'], label='val loss')
plt.title('Loss')
plt.xlabel('epochs')
plt.ylabel('loss')
plt.legend()
plt.show()
```

Output
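If you prefer to read the curves numerically, the dictionary in anc.history holds one value per epoch for each metric. The helpers below (hypothetical names, not part of Keras) report the epoch with the highest validation accuracy and the training/validation accuracy gap per epoch; a widening gap is a common sign of overfitting.

```python
def best_epoch(history, metric="val_accuracy"):
    """Return (epoch_number, value) where `metric` peaked; epochs are 1-based.

    `history` is a dict like anc.history after model.fit().
    """
    values = history[metric]
    idx = max(range(len(values)), key=values.__getitem__)
    return idx + 1, values[idx]


def gap_per_epoch(history):
    """Training accuracy minus validation accuracy for each epoch."""
    return [t - v for t, v in zip(history["accuracy"], history["val_accuracy"])]
```

After training, call best_epoch(anc.history) and gap_per_epoch(anc.history).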

Model testing

The dataset also provides test images together with a comma-separated file, Test.csv, which lists two things for each test image: its path and its class label (the Path and ClassId columns). We use the pandas library to read the image paths with their corresponding labels. Next, we resize each image to 30×30 pixels, the size the model was trained on, and collect the image data in a NumPy array. To measure how well the predicted labels match the actual ones, we import accuracy_score from sklearn.metrics. Finally, we call the Keras model.save() method to save the trained model.

```python
# Testing accuracy on the test dataset
from sklearn.metrics import accuracy_score

y_test = pd.read_csv('Test.csv')
labels = y_test["ClassId"].values
imgs = y_test["Path"].values

data = []
for img in imgs:
    image = Image.open(img).convert('RGB')
    image = image.resize((30, 30))
    data.append(np.array(image))
X_test = np.array(data)

# predict_classes() is gone from recent TensorFlow versions;
# take the index of the highest softmax probability instead
pred = np.argmax(model.predict(X_test), axis=1)

# Accuracy with the test data
print(accuracy_score(labels, pred))
```

Output

0.9532066508313539

```python
model.save('traffic_classifier.h5')  # save the trained model
```
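The test code selects each prediction with np.argmax because Sequential.predict_classes(), which older Keras code relies on, was removed in TensorFlow 2.6. The sketch below shows the same two steps on made-up probabilities for three classes rather than real model output: argmax picks the most probable class, and accuracy is the fraction of predictions that equal the true label, which is what accuracy_score computes.

```python
import numpy as np

# Hypothetical softmax outputs for 4 test images over 3 classes (the real model has 43).
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.3, 0.3, 0.4],
                  [0.6, 0.3, 0.1]])
true_labels = np.array([0, 1, 2, 1])

# argmax along axis 1 picks the most probable class for each image
pred = np.argmax(probs, axis=1)
accuracy = np.mean(pred == true_labels)  # fraction of correct predictions
print(pred, accuracy)  # [0 1 2 0] 0.75
```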

Full Source code

```python
import os

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt  # to plot accuracy and loss
import tensorflow as tf
from PIL import Image
from sklearn.model_selection import train_test_split  # to split training and validation data
from sklearn.metrics import accuracy_score
from keras.utils import to_categorical  # to one-hot encode the labels in y_t1 and y_t2
from keras.models import Sequential
from keras.layers import Conv2D, MaxPool2D, Dense, Flatten, Dropout  # to create the CNN

data = []
labels = []
classes = 43
cur_path = os.getcwd()

# Retrieving the images and their labels
for i in range(classes):
    path = os.path.join(cur_path, 'train', str(i))
    images = os.listdir(path)
    for a in images:
        try:
            image = Image.open(os.path.join(path, a)).convert('RGB')
            image = image.resize((30, 30))
            data.append(np.array(image))
            labels.append(i)
        except Exception as e:
            print(f"Error loading image {a}: {e}")

# Converting lists into numpy arrays
data = np.array(data)
labels = np.array(labels)
print(data.shape, labels.shape)

# Splitting the data into training and validation sets
X_t1, X_t2, y_t1, y_t2 = train_test_split(data, labels, test_size=0.2, random_state=42)
print(X_t1.shape, X_t2.shape, y_t1.shape, y_t2.shape)

# Converting the labels into one-hot encoding
y_t1 = to_categorical(y_t1, 43)
y_t2 = to_categorical(y_t2, 43)

# Building the model
model = Sequential()
model.add(Conv2D(filters=32, kernel_size=(5, 5), activation='relu', input_shape=X_t1.shape[1:]))
model.add(Conv2D(filters=32, kernel_size=(5, 5), activation='relu'))
model.add(MaxPool2D(pool_size=(2, 2)))
model.add(Dropout(rate=0.25))
model.add(Conv2D(filters=64, kernel_size=(3, 3), activation='relu'))
model.add(Conv2D(filters=64, kernel_size=(3, 3), activation='relu'))
model.add(MaxPool2D(pool_size=(2, 2)))
model.add(Dropout(rate=0.25))
model.add(Flatten())
model.add(Dense(256, activation='relu'))
model.add(Dropout(rate=0.5))
model.add(Dense(43, activation='softmax'))

# Compilation of the model
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

eps = 15
anc = model.fit(X_t1, y_t1, batch_size=32, epochs=eps, validation_data=(X_t2, y_t2))
model.save("my_model.h5")

# Plotting graphs for accuracy
plt.figure(0)
plt.plot(anc.history['accuracy'], label='training accuracy')
plt.plot(anc.history['val_accuracy'], label='val accuracy')
plt.title('Accuracy')
plt.xlabel('epochs')
plt.ylabel('accuracy')
plt.legend()
plt.show()

# Plotting graphs for loss
plt.figure(1)
plt.plot(anc.history['loss'], label='training loss')
plt.plot(anc.history['val_loss'], label='val loss')
plt.title('Loss')
plt.xlabel('epochs')
plt.ylabel('loss')
plt.legend()
plt.show()

# Testing accuracy on the test dataset
y_test = pd.read_csv('Test.csv')
labels = y_test["ClassId"].values
imgs = y_test["Path"].values

data = []
for img in imgs:
    image = Image.open(img).convert('RGB')
    image = image.resize((30, 30))
    data.append(np.array(image))
X_test = np.array(data)

# Index of the highest softmax probability = predicted class (0-42)
pred = np.argmax(model.predict(X_test), axis=1)

# Accuracy with the test data
print(accuracy_score(labels, pred))

model.save('traffic_classifier.h5')
```

GUI for Traffic Signs Classifier

We will use Tkinter, Python's standard GUI library, to build a graphical user interface (GUI) for our traffic sign recognizer. Create a separate Python file named "gui.py" for this purpose. First, we load our trained model 'traffic_classifier.h5' with Keras. Then we build a GUI with a button to upload an image and a "Classify Image" button that determines which class the image belongs to. The classify() function is called when that button is clicked. To predict the traffic sign, the input must have the same shape that was used during training, so classify() converts the image into an array of shape (1, 30, 30, 3). The model returns a probability for each of the 43 classes, and the index of the highest probability is the class number (0-42); the older model.predict_classes() method did this, but it has been removed from recent TensorFlow versions. Because the dictionary keys run from 1 to 43, we add 1 to the class number to look up the sign's name.

```python
import tkinter as tk
from tkinter import filedialog
from tkinter import *

import numpy
from PIL import ImageTk, Image

# Load the trained model to classify signs
from keras.models import load_model
model = load_model('traffic_classifier.h5')

# Dictionary to label all traffic sign classes (keys 1-43 = model outputs 0-42 plus 1)
classes = { 1:'Speed limit (20km/h)',
            2:'Speed limit (30km/h)',
            3:'Speed limit (50km/h)',
            4:'Speed limit (60km/h)',
            5:'Speed limit (70km/h)',
            6:'Speed limit (80km/h)',
            7:'End of speed limit (80km/h)',
            8:'Speed limit (100km/h)',
            9:'Speed limit (120km/h)',
            10:'No passing',
            11:'No passing veh over 3.5 tons',
            12:'Right-of-way at intersection',
            13:'Priority road',
            14:'Yield',
            15:'Stop',
            16:'No vehicles',
            17:'Veh > 3.5 tons prohibited',
            18:'No entry',
            19:'General caution',
            20:'Dangerous curve left',
            21:'Dangerous curve right',
            22:'Double curve',
            23:'Bumpy road',
            24:'Slippery road',
            25:'Road narrows on the right',
            26:'Road work',
            27:'Traffic signals',
            28:'Pedestrians',
            29:'Children crossing',
            30:'Bicycles crossing',
            31:'Beware of ice/snow',
            32:'Wild animals crossing',
            33:'End speed + passing limits',
            34:'Turn right ahead',
            35:'Turn left ahead',
            36:'Ahead only',
            37:'Go straight or right',
            38:'Go straight or left',
            39:'Keep right',
            40:'Keep left',
            41:'Roundabout mandatory',
            42:'End of no passing',
            43:'End no passing vehicle with a weight greater than 3.5 tons' }

# Initialise GUI
top = tk.Tk()
top.geometry('800x600')
top.title('Traffic sign classification')
top.configure(background='#CDCDCD')

label = Label(top, background='#CDCDCD', font=('arial', 15, 'bold'))
sign_image = Label(top)

def classify(file_path):
    # Preprocess as during training: RGB, 30x30, then a batch of one -> (1, 30, 30, 3)
    image = Image.open(file_path).convert('RGB')
    image = image.resize((30, 30))
    image = numpy.expand_dims(numpy.array(image), axis=0)
    # The model outputs class 0-42; the dictionary keys start at 1
    pred = int(numpy.argmax(model.predict(image), axis=1)[0])
    sign = classes[pred + 1]
    print(sign)
    label.configure(foreground='#011638', text=sign)

def show_classify_button(file_path):
    classify_b = Button(top, text="Classify Image", command=lambda: classify(file_path), padx=10, pady=5)
    classify_b.configure(background='#364156', foreground='white', font=('arial', 10, 'bold'))
    classify_b.place(relx=0.79, rely=0.46)

def upload_image():
    try:
        file_path = filedialog.askopenfilename()
        uploaded = Image.open(file_path)
        uploaded.thumbnail((int(top.winfo_width() / 2.25), int(top.winfo_height() / 2.25)))
        im = ImageTk.PhotoImage(uploaded)
        sign_image.configure(image=im)
        sign_image.image = im  # keep a reference so the image is not garbage-collected
        label.configure(text='')
        show_classify_button(file_path)
    except Exception:
        # e.g. the dialog was cancelled or the file is not an image
        pass

upload = Button(top, text="Upload an image", command=upload_image, padx=10, pady=5)
upload.configure(background='#364156', foreground='white', font=('arial', 10, 'bold'))
upload.pack(side=BOTTOM, pady=50)
sign_image.pack(side=BOTTOM, expand=True)
label.pack(side=BOTTOM, expand=True)

heading = Label(top, text="check traffic sign", pady=20, font=('arial', 20, 'bold'))
heading.configure(background='#CDCDCD', foreground='#364156')
heading.pack()

top.mainloop()
```

Output
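The two steps inside classify() that are easiest to get wrong are the preprocessing and the dictionary lookup. The sketch below isolates them on a synthetic RGBA image standing in for an uploaded PNG; preprocess_for_model and class_name are hypothetical helper names. Converting to RGB matters because PNG files can carry a fourth (alpha) channel, which would not match the model's 3-channel input.

```python
import numpy as np
from PIL import Image


def preprocess_for_model(img, size=(30, 30)):
    """Turn one PIL image into a batch of shape (1, 30, 30, 3) for model.predict()."""
    arr = np.array(img.convert("RGB").resize(size))  # (30, 30, 3); drops any alpha channel
    return np.expand_dims(arr, axis=0)               # (1, 30, 30, 3)


def class_name(pred_index, classes):
    """Map a 0-based model output index to the 1-based keys of the `classes` dict."""
    return classes[pred_index + 1]


# A synthetic RGBA image stands in for an uploaded PNG.
batch = preprocess_for_model(Image.new("RGBA", (120, 90)))
print(batch.shape)                   # (1, 30, 30, 3)
print(class_name(14, {15: "Stop"}))  # Stop
```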

Frequently Asked Questions

A. Traffic sign recognition utilizes computer vision technology, particularly image processing and machine learning techniques. Convolutional Neural Networks (CNNs) are commonly employed for feature extraction and classification. Preprocessing steps like image enhancement and segmentation are applied to improve sign detection. The trained model analyzes captured images or video frames to identify and interpret various traffic signs accurately.

A. Traffic sign recognition is a computer vision application that aims to automatically detect and interpret traffic signs from images or video streams captured by cameras on vehicles or in smart infrastructure. It helps drivers by providing real-time information about speed limits, stop signs, warnings, and other regulatory signs, enhancing road safety and reducing the likelihood of accidents.

Conclusion

In this article, we created a CNN model that identifies traffic signs and classifies them with about 95% accuracy on the test set. We also tracked how accuracy and loss changed during training. The GUI makes it easy to see how individual signs are classified into the 43 classes.

References

Image 1: https://www.pyimagesearch.com/2019/11/04/traffic-sign-classification-with-keras-and-deep-learning/

Image 2: https://steemit.com/programming/@kasperfred/looking-at-german-traffic-signs

The media shown in this article is not owned by Analytics Vidhya and are used at the Author’s discretion.