Selenium WebDriver is an open-source API that lets you interact with a browser the same way a real user would. WebDriver scripts can be written in several languages, e.g. Python, Java, and C#. Here we will use Python to scrape data from a table on a web page and store it as a CSV file. Since Google Chrome is the most popular browser, we will use it to keep things simple. To store the data, we will use the pandas library.

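Note: Make sure that ChromeDriver is installed on your system and that its version matches your installed Chrome. In Step 2 the location of the chromedriver executable is passed to Selenium explicitly. ChromeDriver can be downloaded from the official ChromeDriver site.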

First, we need to locate the elements of the table, which we will do with Selenium WebDriver. We will use XPath, since most elements on the web page can be identified by a unique XPath.
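Selenium evaluates XPath expressions against the live page in the browser, but the idea of pinpointing one table cell by its row and column index can be tried without a browser. The minimal sketch below uses Python's built-in `xml.etree.ElementTree`, which understands only a subset of XPath, on a small hypothetical table. The markup, the id `post-1`, and the helper `cell_text` are made up for illustration and are not part of Selenium.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed markup standing in for the real page.
PAGE = """<html><body>
  <div id="post-1">
    <table>
      <tbody>
        <tr><td>get</td><td>Loads a web page</td></tr>
        <tr><td>close</td><td>Closes the current window</td></tr>
      </tbody>
    </table>
  </div>
</body></html>"""

root = ET.fromstring(PAGE)

def cell_text(root, row, col):
    """Return the text of cell (row, col), or None if that cell does not exist."""
    # Same shape as the XPath used in this article: .../tbody/tr[row]/td[col].
    # Positions inside [n] predicates are 1-based, as in XPath.
    path = f".//*[@id='post-1']/table/tbody/tr[{row}]/td[{col}]"
    cell = root.find(path)
    return None if cell is None else cell.text

print(cell_text(root, 1, 1))  # get
print(cell_text(root, 2, 2))  # Closes the current window
print(cell_text(root, 3, 1))  # None: there is no third row
```

The last call returns `None` because there is no third row. In Selenium, `find_element()` raises `NoSuchElementException` in the same situation, which is what the scraping loop below uses to detect the end of the table.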

Stepwise implementation:

Step 1: Import the required modules.

Python3

```python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.common.exceptions import NoSuchElementException
import pandas as pd
```

Step 2: Initialise the web browser in a variable named driver, pass the location of your chromedriver file as executable_path, and navigate to the required URL.

Python3

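```python
from selenium.webdriver.chrome.service import Service

# Selenium 4 takes the driver location through a Service object; the old
# executable_path keyword of webdriver.Chrome was deprecated and has been removed.
driver = webdriver.Chrome(
    service=Service(executable_path='/usr/lib/chromium-browser/chromedriver'))

# Placeholder: replace with the URL of the page that contains the table
driver.get('<URL of the page containing the table>')
```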

Step 3: Set an implicit wait with implicitly_wait(), so that each element lookup keeps retrying for up to the given number of seconds while the page loads. Then maximize the window with maximize_window().

Python3

```python
# Element lookups retry for up to 10 seconds before raising NoSuchElementException
driver.implicitly_wait(10)
driver.maximize_window()
```
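To make the effect of `implicitly_wait(10)` concrete, here is a simplified pure-Python model of an implicit wait: the lookup is retried until it succeeds or the timeout runs out. This is not how Selenium implements it (the browser driver does the retrying), and the helper `find_with_implicit_wait`, the stand-in `flaky_lookup`, and the use of `LookupError` in place of `NoSuchElementException` are assumptions made for illustration.

```python
import time

def find_with_implicit_wait(lookup, timeout=10.0, poll_interval=0.5):
    """Simplified model of an implicit wait (not Selenium's actual code).

    `lookup` is a zero-argument function that returns the element, or raises
    LookupError (standing in for NoSuchElementException) if it is not there yet.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            return lookup()
        except LookupError:
            if time.monotonic() >= deadline:
                raise  # give up once the timeout has expired
            time.sleep(poll_interval)

# Hypothetical lookup that only succeeds on its third attempt,
# as if the table were still being rendered.
attempts = {"n": 0}

def flaky_lookup():
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise LookupError("not rendered yet")
    return "<td>get</td>"

result = find_with_implicit_wait(flaky_lookup, timeout=2, poll_interval=0.01)
print(result)  # <td>get</td>
```

One practical consequence: with an implicit wait of 10 seconds, the final lookup in the scraping loop, the one for a row that does not exist, takes about 10 seconds before `NoSuchElementException` is raised.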

Step 4: Find the pattern in the XPaths of the table rows: only the row index in tr[r] changes from one row to the next. In a loop, locate each cell with find_element(By.XPATH, ...) using this generalized XPath, read its text through the .text property, and stop when the lookup raises NoSuchElementException because there are no more rows.

Python3

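```python
r = 1          # XPath positions start at 1
templist = []  # one dict per table row

while True:
    try:
        # Only the row index r changes between rows; td[1] and td[2] are the columns
        row_xpath = f'//*[@id="post-427949"]/div[3]/table[2]/tbody/tr[{r}]'
        method = driver.find_element(By.XPATH, f'{row_xpath}/td[1]').text
        desc = driver.find_element(By.XPATH, f'{row_xpath}/td[2]').text
        templist.append({'Method': method, 'Description': desc})
        r += 1
    except NoSuchElementException:
        # Row r does not exist, so the whole table has been read
        break

# Build the DataFrame once, after all rows have been collected
df = pd.DataFrame(templist)
```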
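The loop above relies on an exception to detect the end of the table. An alternative, sketched below under the assumption that every data row keeps its cells in `<td>` elements, is to fetch all rows at once with `find_elements()`, which returns a list (possibly empty) instead of raising. The function name `scrape_table` and its `headers` parameter are hypothetical; the table XPath in the usage comment is the one from this article with the row part removed.

```python
# Selenium's By.XPATH constant is the string "xpath"; in real code, import
# By from selenium.webdriver.common.by and pass By.XPATH instead.
XPATH = "xpath"

def scrape_table(driver, table_xpath, headers):
    """Return one dict per <tr> in the table body, keyed by `headers`.

    `driver` is a Selenium WebDriver (or anything with the same
    find_elements(by, value) method); `table_xpath` locates the <table>.
    """
    records = []
    # find_elements returns an empty list instead of raising, so no
    # exception handling is needed to detect the end of the table.
    for row in driver.find_elements(XPATH, f"{table_xpath}/tbody/tr"):
        # "./td" is evaluated relative to the current row element.
        texts = [cell.text for cell in row.find_elements(XPATH, "./td")]
        if texts:  # skip rows without <td>, e.g. a header row of <th> cells
            records.append(dict(zip(headers, texts)))
    return records

# Usage with a real driver (after Steps 1 to 3):
# records = scrape_table(driver, '//*[@id="post-427949"]/div[3]/table[2]',
#                        ['Method', 'Description'])
# df = pd.DataFrame(records)
```

Note that with an implicit wait set, `find_elements()` also waits up to the timeout when it finds nothing, so a header row made only of `<th>` cells costs one full wait.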

Step 5: Export the DataFrame to a CSV file and quit the browser.

Python3

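```python
df.to_csv('table.csv')
# quit() closes every window and ends the WebDriver session;
# close() would only close the current window
driver.quit()
```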
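Step 5 uses pandas, but Python's standard `csv` module can write the same list of dictionaries directly. Here is a minimal sketch, assuming the rows have been collected into a list of dicts as in Step 4; the helper `write_records_csv`, the sample rows, and the file name `table_stdlib.csv` are hypothetical. Unlike `df.to_csv('table.csv')`, this writes no index column.

```python
import csv

def write_records_csv(records, path, fieldnames):
    """Write a list of dicts to a CSV file with a header row."""
    # newline="" is what the csv docs recommend; it avoids extra blank lines on Windows
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(records)

# Hypothetical sample rows in the same shape as templist from Step 4
rows = [
    {"Method": "get", "Description": "Loads a web page"},
    {"Method": "close", "Description": "Closes the current window"},
]
write_records_csv(rows, "table_stdlib.csv", ["Method", "Description"])
```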

Below is the complete implementation:

Python3

```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.common.exceptions import NoSuchElementException
import pandas as pd

# Start Chrome using the chromedriver at this path
driver = webdriver.Chrome(
    service=Service(executable_path='/usr/lib/chromium-browser/chromedriver'))
driver.implicitly_wait(10)
driver.maximize_window()

# Placeholder: replace with the URL of the page that contains the table
driver.get('<URL of the page containing the table>')

r = 1
templist = []

while True:
    try:
        row_xpath = f'//*[@id="post-427949"]/div[3]/table[2]/tbody/tr[{r}]'
        method = driver.find_element(By.XPATH, f'{row_xpath}/td[1]').text
        desc = driver.find_element(By.XPATH, f'{row_xpath}/td[2]').text
        templist.append({'Method': method, 'Description': desc})
        r += 1
    # if there is no more table data to scrape
    except NoSuchElementException:
        break

# saving the dataframe to a csv
df = pd.DataFrame(templist)
df.to_csv('table.csv')
driver.quit()
```

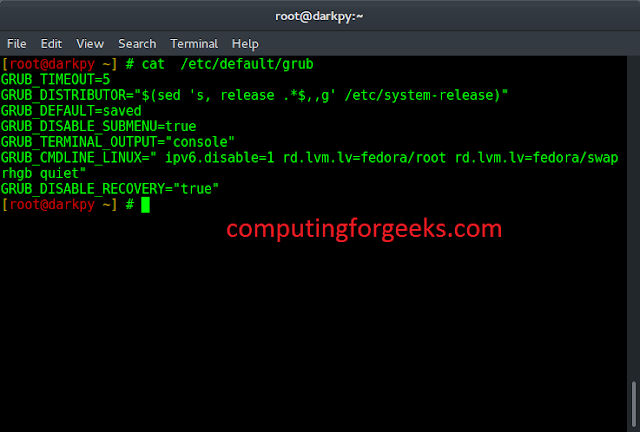

Output: