JPMorgan has unveiled its latest AI – DocLLM, an extension to large language models (LLMs) designed for comprehensive document understanding. In a bid to transform the landscape of generative pre-training, DocLLM goes beyond traditional models by incorporating spatial layout information. Thus, providing an efficient solution for processing visually complex documents.

Also Read: LLM in a Flash: Efficient Inference with Limited Memory

Unveiling DocLLM – The Game-Changer

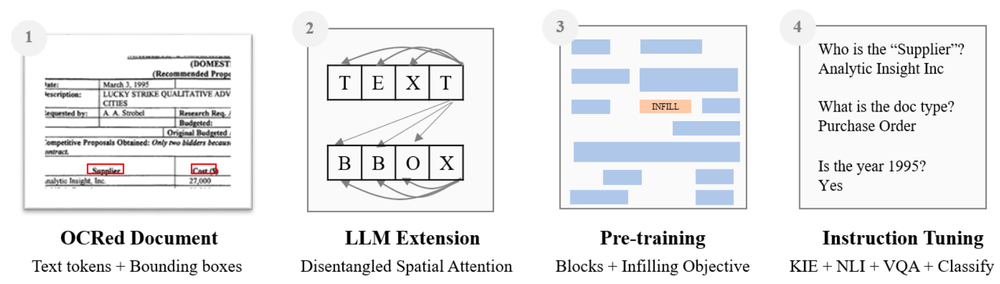

JPMorgan’s DocLLM is a transformer-based model that sets itself apart by strategically omitting expensive image encoders, focusing solely on bounding box information derived from optical character recognition (OCR). This unique approach enhances spatial layout comprehension without the need for complex vision encoders.

Also Read: Apple Secretly Launches Its First Open-Source LLM, Ferret

The Innovative Design of DocLLM

DocLLM introduces a disentangled spatial attention mechanism, extending the self-attention mechanism of standard transformers. By decomposing attention into disentangled matrices, the model captures cross-alignment between text and layout modalities. This innovative design allows DocLLM to represent alignments between content, position, and size of document fields, addressing the challenges posed by irregular layouts.

A Closer Look at DocLLM’s Pre-Training Objective

The pre-training objective of DocLLM stands out by focusing on infilling text segments. This approach, tailored for visually rich documents, effectively handles irregular layouts and mixed data types. The model’s ability to adapt to diverse document structures is demonstrated through its performance improvement ranging from 15% to 61% in comparison to other models.

Evaluations and Real-World Implications

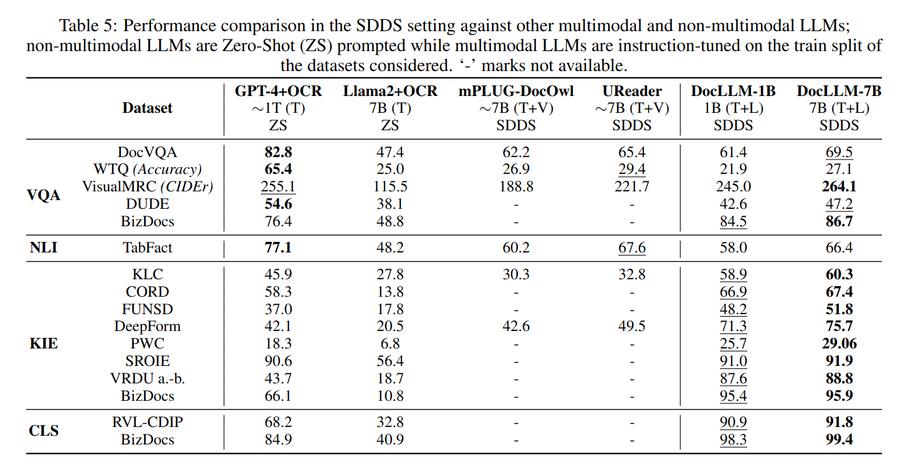

DocLLM underwent extensive evaluations, outperforming equivalent models on 14 out of 16 known datasets. It also showcased robust generalization to previously unseen datasets in 4 out of 5 settings. The model’s practical implications are substantial, offering automated document processing and analysis for businesses, particularly in financial institutions dealing with large volumes of diverse documents.

JPMorgan’s Vision for DocLLM

JPMorgan plans to further enhance DocLLM by incorporating vision-related features in a lightweight manner, and grow the model’s capabilities. This commitment to continuous improvement positions DocLLM as a pivotal tool for unlocking insights from a variety of documents & forms.

Also Read: India’s AI Leap: 6 LLMs that are Built in India

Our Say

JPMorgan’s introduction of DocLLM marks a significant leap in AI-driven document understanding. Its emphasis on spatial layout comprehension, coupled with a disentangled spatial attention mechanism, showcases the potential to revolutionize how large language models approach complex documents. As JPMorgan looks to the future with plans for additional enhancements, DocLLM remains a promising solution for businesses navigating the challenges of diverse document structures.