Overview

- Google AI has introduced TensorFlow 3D, a library designed to bring 3D deep learning capabilities into TensorFlow.

- The library can be used for state-of-the-art 3D semantic segmentation, 3D object detection, and 3D instance segmentation.

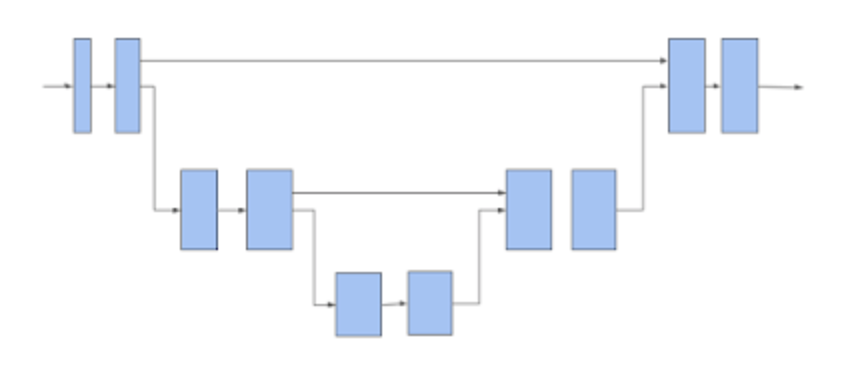

- This article walks through the 3D sparse voxel U-Net architecture, the backbone network that extracts features for the task of interest.

Introduction

Another day, another breakthrough in the field of deep learning!

The team at Google AI has open-sourced the new TensorFlow 3D library. TensorFlow 3D is an open-source framework built on top of TensorFlow 2 and Keras that makes it easy to construct, train, and deploy 3D object detection, 3D semantic segmentation, and 3D instance segmentation models. This release will make the domain of 3D scene understanding much easier for the community to tackle.

We have seen the rise of 3D sensors in recent years, from the Lidar and depth-sensing cameras in iPhones to 3D object detection for robotics and autonomous driving applications. But only a limited set of tools and resources can be applied to the 3D data these sensors produce.

To improve research in the field of computer vision, Google AI introduced TF 3D. It is a highly modular and efficient library designed to bring 3D scene understanding capabilities into TensorFlow. TF 3D provides a set of popular operations, loss functions, data processing tools, models, and metrics for training and deploying state-of-the-art 3D scene understanding models.

Architecture

TF 3D uses a 3D sparse voxel U-Net architecture. 3D data is inherently sparse, and applying a dense convolution directly to it wastes a lot of computation on empty space. In addition, after a traditional convolution the extracted features are no longer sparse. To address this, TF 3D uses submanifold sparse convolution, which is designed to process 3D sparse data more efficiently.
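To make the idea concrete, here is a minimal sketch of a submanifold sparse convolution in plain Python. It is not TF 3D's implementation: the function name `submanifold_sparse_conv3d`, the dictionary-based voxel storage, and the single-channel 3x3x3 kernel are all assumptions chosen for illustration. The point it shows is that outputs are computed only at voxels that were already occupied, so empty space costs nothing and the output stays as sparse as the input.

```python
from itertools import product


def submanifold_sparse_conv3d(active, weights, bias=0.0):
    """Toy single-channel 3x3x3 submanifold sparse convolution.

    active:  dict mapping (x, y, z) voxel coordinates to a float feature.
    weights: dict mapping (dx, dy, dz) offsets in {-1, 0, 1}^3 to a float.
    An output is produced only at coordinates that are already in `active`.
    """
    output = {}
    for (x, y, z) in active:
        total = bias
        for (dx, dy, dz), w in weights.items():
            neighbor = (x + dx, y + dy, z + dz)
            # Empty neighbors contribute nothing and are skipped entirely.
            if neighbor in active:
                total += w * active[neighbor]
        output[(x, y, z)] = total
    return output


# Hypothetical example: a kernel whose 27 weights are all 1.0.
weights = {offset: 1.0 for offset in product((-1, 0, 1), repeat=3)}
voxels = {(0, 0, 0): 1.0, (1, 0, 0): 2.0, (5, 5, 5): 4.0}
result = submanifold_sparse_conv3d(voxels, weights)
```

In this toy example the two adjacent voxels see each other's features, the isolated voxel sees only itself, and no new voxels are created around any of them.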

The U-Net network consists of three modules: an encoder, a bottleneck, and a decoder. Each module consists of a number of sparse convolution blocks, with possible pooling or un-pooling operations, and the network as a whole extracts a feature for each voxel. A voxel represents a value on a regular grid in three-dimensional space.

The U-Net figure can be described as:

- The horizontal arrows take in the voxel features and apply a submanifold sparse convolution to them.

- The arrow moving downwards performs a submanifold sparse pooling.

- The arrow moving upwards will gather back the pooled features, concatenate them with the features coming from the horizontal arrow, and perform a submanifold sparse convolution on the concatenated features.
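The downward and upward arrows can be sketched in plain Python as follows. This is an illustrative approximation, not TF 3D code: the helper names `sparse_max_pool` and `unpool_and_concat`, the stride of 2, and the choice of max as the pooling reduction are assumptions. The convolution that follows each step is omitted to keep the sketch short.

```python
def coarse_coord(coord, stride):
    """Map a fine voxel coordinate to the coarse cell that contains it."""
    return tuple(c // stride for c in coord)


def sparse_max_pool(active, stride=2):
    """Downward arrow: pool occupied voxels into a coarser sparse grid."""
    pooled = {}
    for coord, feature in active.items():
        cell = coarse_coord(coord, stride)
        if cell not in pooled or feature > pooled[cell]:
            pooled[cell] = feature
    return pooled


def unpool_and_concat(skip, pooled, stride=2):
    """Upward arrow: gather pooled features back to each fine voxel and
    concatenate them with the skip-connection feature at that voxel."""
    return {
        coord: (feature, pooled[coarse_coord(coord, stride)])
        for coord, feature in skip.items()
    }


voxels = {(0, 0, 0): 1.0, (1, 1, 0): 3.0, (4, 4, 4): 2.0}
pooled = sparse_max_pool(voxels)
merged = unpool_and_concat(voxels, pooled)
```

Only occupied cells ever appear in `pooled`, so the coarser levels of the network remain sparse as well.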

TF 3D uses this model as the backbone architecture for its 3D object detection, 3D semantic segmentation, and 3D instance segmentation models. The U-Net can be configured for the use case by changing the number of encoder-decoder layers, the number of convolutions in each layer, and the convolution filter sizes.

Here is the code from the official docs that creates the U-Net model shown in the diagram above:

    import tensorflow as tf
    from tf3d.layers import sparse_voxel_unet

    # One output head per task: 5 semantic classes and a 64-d instance embedding.
    task_names_to_num_output_channels = {'semantics': 5, 'embedding': 64}
    task_names_to_use_relu_last_conv = {'semantics': False, 'embedding': False}
    task_names_to_use_batch_norm_in_last_layer = {'semantics': False, 'embedding': False}

    unet = sparse_voxel_unet.SparseConvUNet(
        task_names_to_num_output_channels,
        task_names_to_use_relu_last_conv,
        task_names_to_use_batch_norm_in_last_layer,
        encoder_dimensions=((32, 48), (64, 80)),
        bottleneck_dimensions=(96, 96),
        decoder_dimensions=((80, 80), (64, 64)),
        network_pooling_segment_func=tf.math.unsorted_segment_max)

    # voxel_features, voxel_xyz_indices and num_valid_voxels are the voxelized
    # inputs; they are assumed to come from your data pipeline.
    outputs = unet(voxel_features, voxel_xyz_indices, num_valid_voxels)
    semantics = outputs['semantics']
    embedding = outputs['embedding']

Before diving into a high-level understanding of the different 3D scene understanding domains, let’s first understand the datasets. Currently, TF 3D supports the Waymo Open, ScanNet, and Rio datasets.

Each of the three datasets is provided at two levels:

- Frame: each entry contains data such as color and depth camera images, the point cloud, camera intrinsics, and ground-truth semantic and instance segmentation annotations.

- Scene: each entry contains point-cloud data of a whole scene and basic information about all frames in the scene.

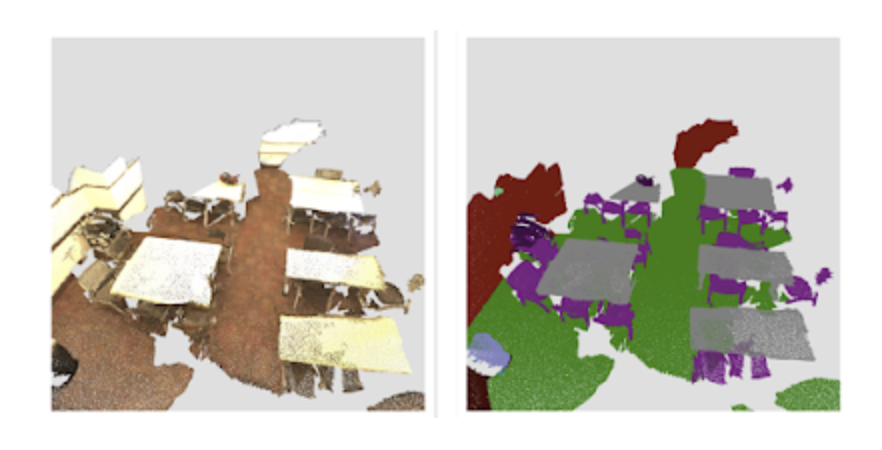

3D Semantic Segmentation

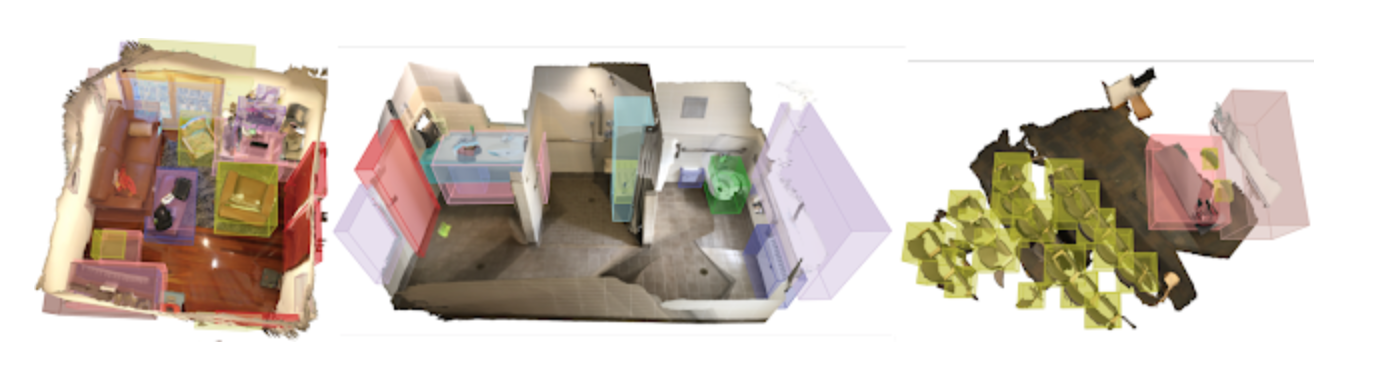

3D semantic segmentation divides 3D objects or scenes, represented as point clouds, into their constituent parts: each point in the input must be assigned a label. The TF 3D architecture uses a single output head to predict per-voxel semantic scores, which are then mapped back to the points to predict a semantic label per point. The U-Net uses submanifold sparse convolutional networks because they can process low-dimensional data living in a space of higher dimensionality.
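The step of mapping per-voxel scores back to points can be sketched like this. It is a simplified illustration, not the TF 3D pipeline: `voxel_index`, `labels_for_points`, the voxel size of 0.5, and the score values are hypothetical. Each point looks up the voxel it falls into and takes the highest-scoring class of that voxel as its label.

```python
import math


def voxel_index(point, voxel_size):
    """Integer grid coordinate of the voxel that contains `point`."""
    return tuple(math.floor(c / voxel_size) for c in point)


def labels_for_points(points, voxel_scores, voxel_size):
    """Assign each point the argmax class of the voxel it falls into."""
    labels = []
    for point in points:
        scores = voxel_scores[voxel_index(point, voxel_size)]
        labels.append(max(range(len(scores)), key=scores.__getitem__))
    return labels


# Hypothetical inputs: three points and per-voxel scores for three classes.
points = [(0.1, 0.2, 0.3), (0.4, 0.1, 0.2), (1.2, 0.1, 0.1)]
voxel_scores = {
    (0, 0, 0): [0.1, 2.0, -1.0],
    (2, 0, 0): [1.5, 0.2, 0.3],
}
point_labels = labels_for_points(points, voxel_scores, voxel_size=0.5)
```

The first two points share a voxel and therefore receive the same label, which is the price of predicting at voxel rather than point resolution.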

3D Instance Segmentation

The goal of 3D instance segmentation is to group together the voxels that belong to the same object. The model used by TF 3D predicts, for each voxel, an instance embedding vector as well as a semantic score (the same as described under 3D Semantic Segmentation). By default, TF 3D uses the U-Net as the backbone, with two output heads that predict the per-voxel semantic logits and the instance embedding. A greedy algorithm then picks one instance seed at a time and uses the distance between the voxel embeddings to group the voxels into segments.
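A minimal sketch of such a greedy grouping is shown below. The details are assumptions made for illustration: the function name `greedy_instance_grouping`, the rule of taking the first unassigned voxel as the next seed, and the fixed distance threshold are not taken from TF 3D, which may select seeds and thresholds differently.

```python
import math


def greedy_instance_grouping(embeddings, distance_threshold):
    """Group voxels by embedding distance, one seed at a time.

    embeddings: list of equal-length tuples, one per voxel.
    Returns a list with an instance id for every voxel.
    """
    instance_ids = [-1] * len(embeddings)
    next_id = 0
    for seed, seed_embedding in enumerate(embeddings):
        if instance_ids[seed] != -1:
            continue  # This voxel already belongs to an earlier instance.
        # Claim every unassigned voxel whose embedding is close to the seed.
        for i, embedding in enumerate(embeddings):
            if (instance_ids[i] == -1
                    and math.dist(embedding, seed_embedding) <= distance_threshold):
                instance_ids[i] = next_id
        next_id += 1
    return instance_ids


# Hypothetical 2-d embeddings forming two well-separated clusters.
embeddings = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 4.9), (0.0, 0.2)]
instance_ids = greedy_instance_grouping(embeddings, distance_threshold=0.5)
```

Because the seed always lies at distance zero from itself, every voxel ends up in exactly one instance.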

3D Object Detection

TF 3D proposes a single-stage 3D object detection method to predict the per-voxel size, center, and rotation matrices and the object semantic scores. A 3D sparse U-Net is used to extract features from each voxel. Then two blocks of sparse convolutions predict object properties per voxel. These features are then propagated back to the points and passed through a graph convolution module.

At training time, box prediction and classification losses are applied to the per-voxel predictions. At inference time, the per-voxel box predictions are reduced to a few accurate box proposals.

A Huber loss is applied to the distance between the predicted and the ground-truth box corners. Because the function that computes the eight 3D corners of a box from its size, center, and rotation matrix is differentiable, the loss propagates back to those predicted properties. Every voxel predicts a 3D bounding box, so a classification loss is also used: it labels the predictions that are accurate as positive and the others as negative.
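The corner-based loss can be illustrated with a short plain-Python sketch. This is not TF 3D's loss code: the helper names, the `(size, center, rotation)` box tuple, the corner ordering, the `delta` of 1.0, and averaging over the eight corners are assumptions. In a real model the same arithmetic would run on tensors so that gradients flow back to size, center, and rotation.

```python
import math


def box_corners(size, center, rotation):
    """Eight corners of a box from its size, center and 3x3 rotation matrix."""
    corners = []
    for sx in (-0.5, 0.5):
        for sy in (-0.5, 0.5):
            for sz in (-0.5, 0.5):
                local = (sx * size[0], sy * size[1], sz * size[2])
                # Rotate the local offset, then translate by the center.
                corners.append(tuple(
                    center[r] + sum(rotation[r][c] * local[c] for c in range(3))
                    for r in range(3)))
    return corners


def huber(x, delta=1.0):
    """Quadratic for small |x|, linear for large |x|."""
    a = abs(x)
    return 0.5 * a * a if a <= delta else delta * (a - 0.5 * delta)


def corner_huber_loss(predicted_box, target_box, delta=1.0):
    """Mean Huber loss over the distances between matching corners."""
    pairs = zip(box_corners(*predicted_box), box_corners(*target_box))
    return sum(huber(math.dist(p, t), delta) for p, t in pairs) / 8


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
target = ((2.0, 2.0, 2.0), (0.0, 0.0, 0.0), identity)
near = ((2.0, 2.0, 2.0), (0.5, 0.0, 0.0), identity)
far = ((2.0, 2.0, 2.0), (3.0, 0.0, 0.0), identity)
near_loss = corner_huber_loss(near, target)
far_loss = corner_huber_loss(far, target)
```

The nearby box falls in the quadratic region of the Huber loss and the distant one in the linear region, which keeps badly wrong predictions from dominating the gradient.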

This approach achieves state-of-the-art results on 3D object detection datasets for both indoor and outdoor scenes.

Ending notes

In recent years, we have seen Google harness machine learning through TensorFlow for many purposes. TensorFlow 3D opens up vast new opportunities to explore in the field of computer vision.

Experiments on the Waymo Open dataset show that TF 3D's sparse convolution implementation is around 20x faster than a well-designed implementation built with pre-existing TensorFlow operations. With the rapid growth of data-capturing sensors, we finally have the right resources and capabilities to handle that data and tackle newer challenges.

Computer vision just continues to amaze us! Go ahead, install the TensorFlow 3D library, and get started with it!

Did you find this article helpful? Do share your valuable feedback in the comments section below.