Overview

- We will discuss aggregation pipelines in MongoDB.

- We will see how to use the operator like the match, group, bucket, and facet.

Introduction

MongoDB is an unstructured database that stores data in the form of documents. Additionally, MongoDB is able to handle huge volumes of data very efficiently and is the most widely used NoSQL database as it offers rich query language and flexible and fast access to data.

In this article, we will see multiple examples of how to create the Aggregation Pipelines in a MongoDB database using PyMongo. Further, we will see how to use the operators like the match to filter the data, a bucket to create user-defined buckets on a field, facet to create multiple pipelines over a set of documents.

This is the 3rd article in the series of MongoDB articles. I have listed down the first 2 articles in the series below-

So if you are a complete beginner in MongoDB, I would recommend you go through that article first before we begin.

Table of Contents

- What is PyMongo?

- Installation Steps

- Insert the data into the databases

- What are Aggregation Pipelines?

- Match

- Group

- Bucket

- Facet

- End Notes

What is PyMongo?

PyMongo is a Python library that enables us to connect with MongoDB. Moreover, this is the most recommended way to work with MongoDB and Python.

Also, we have chosen Python to interact with MongoDB because it is one of the most commonly used and considerably powerful languages for data science. PyMongo allows us to retrieve the data with dictionary-like syntax.

In case you are a beginner in Python, I will recommend you to enroll in this free course: Introduction to Python

Now let us see how to create Aggregation Pipelines in a MongoDB Database Using PyMongo.

Installation Steps

Installing PyMongo is simple and straightforward. Here, I am assuming you already have Python 3 and MongoDB installed. The following command will help you to install PyMongo:

pip3 install pymongo

Insert the data into the databases

We will use the same data as we did in the last article. In case you already have it, you can skip to the next section.

-

Importing the libraries and connect to mongo client

Start the MongoDB server on your machine. I am assuming it is running file at localhost:27017.

Let’s start by importing some of the libraries that we are going to use. By default, MongoDB server runs at port 27017 on the local machine. Then, we will connect to the MongoDB client using the pymongo library.

Then get the database instance of the database sample_db. In case it doesn’t exist, MongoDB will create one for you.

-

Create the Collections from the JSON files

We will use the data from a meal delivery company that operates in multiple cities. Additionally, they have various fulfillment centers in these cities for dispatching meal orders to their customers. You can download the data and the code here.

- weekly_demand:

- id: unique ID for each document

- week: Week Number

- center_id: Unique ID for fulfillment center

- meal_id: Unique ID for Meal

- checkout_price: Final price including discount, taxes & delivery charges

- base_price: Base price of the meal

- emailer_for_promotion: Emailer sent for promotion of meal

- homepage_featured: Meal featured at the homepage

- num_orders: (Target) Orders Count

- weekly_demand:

-

Insert data into Collections

Now, the data we have is in the JSON format. Then we will get the instance of the collection, read the data file, and insert the data using the insert_many function.

https://gist.github.com/lakshay-arora/7a86d0bafe8b43d51e0dfcfd02b6f80a#file-collection_2-py

What are Aggregation Pipelines?

In aggregation operations, the data is processed and the computed results are returned. Here, the aggregation pipeline provides you a framework to aggregate data and is built on the concept of the data processing pipelines. The following is its syntax:

your_collection.aggregate( [ { <stage1> }, { <stage2> },.. ] )

The aggregation pipeline consists of multiple stages. Each stage in the pipeline transforms the documents as they pass through the pipeline. Lets a have a look at these stages-

-

Match

The match stage is generally used to filter the documents and it is mostly occurring at the beginning of the pipeline. It has the following syntax:

{ $match: { <query> } }

We should place the match stage as early as possible in the pipeline as it will filter the documents and the match operator will be able to take advantage of the indexes too.

In the following example, in the first stage, we will filter the documents where the center_id is 11 and in the second stage, we will count the number of documents left.

Let’s have a look at another example where in the first stage we will filter the documents, having the center_id as 11, and now from the filtered documents, we will find out the documents where the checkout price was greater than 130 and less than 140. In the final stage, we will compute the total number of documents left.

-

Group

The next aggregation operator is the group operator. In case you have worked with pandas, you might have seen we usually use groupby to find out the stats based on a particular field. For example, the average salary of employees department wise, the number of unique items sold by each store, etc.

The following is its syntax:

{ $group: { _id: <expression>, // Group By Expression <field1>: { <accumulator1> : <expression1> }, ... } }If you put the value of _id to be “None” or any constant value like 0, it will calculate the aggregated results on all the fields present in the document instead of grouping the data on any specific field. In the example below, we will find out all the documents where center_id is 11 in the first stage. And in the second stage, if we put _id equals 0 then it will consider all the documents and returns results.

Let’s have a look at the following code:

Also, in the same group operator, we can add different accumulators like $avg, $sum, $first, $last, and many more. Check out the complete list here on the MongoDB documentation.

Let’s have a look at the following example. It will calculate the total checkout price and the average checkout price of the center_id 11.

Let’s take another example where we group the data on the field homepage_featured. It will return us the different categories present in the specified field “homepage_featured”.

In the previous example, we just found out that there were 2 categories 0 and 1 in all the documents where center id is 11. Now, we will see the average checkout price for both these categories-

Then let’s see how to group in multiple fields. For example, you need to calculate the average salary of employees for each department and for each gender. In the “_id” object simply provide the fields in the order you want the data to be grouped.

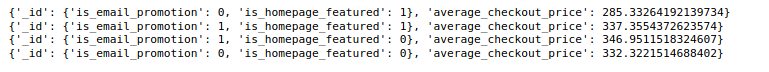

In the following example, firstly the documents with center_id 11 will be matched and for all the combination of email promotion and home page featured it will calculate the average check out page.

-

Bucket

The bucket operator will divide the documents into multiple groups and then do the operations. The following is its syntax:

{ $bucket: { groupBy: <expression>, boundaries: [ <lowerbound1>, <lowerbound2>, ... ], default: name_of_the_label, output: { <output1>: { <$accumulator expression> }, ... <outputN>: { <$accumulator expression> } } } }You need to provide the field on which you have groupby and the boundaries of your buckets and if in case any document doesn’t lie in any of the buckets you can also put that in the default bucket. Further, in the output object, you can define the stats that you want for each of your buckets.

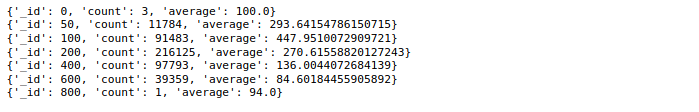

In the following example, 7 different buckets on the field checkout_price will be created and for each of them, we will calculate the total number of documents and the average number of orders.

-

Facet

It is one of the most important operators of the aggregation pipelines. It will allow you to create multiple aggregation pipelines on the same set of documents. Each pipeline in the facet will give its own output. The following is its syntax:

{ $facet: { <output_field_name_1>: [ <stage1>, <stage2>, ... ], <output_field_name_2>: [ <stage1>, <stage2>, ... ], ... } }

Each of the pipelines within facet will get the exact same set of documents. Each of the pipelines is completely independent of one another.

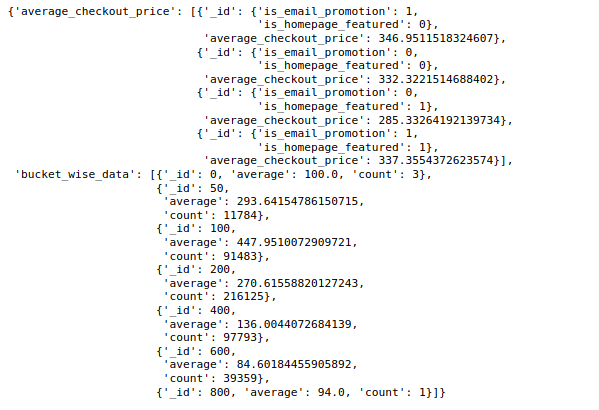

Here, in this example, we will add two aggregation pipelines in the facet. In the first pipeline, we will find out the average checkout price of each combination of email promotion and homepage featured. In the second pipeline, we will create buckets on the checkout price and find out the average number of orders in each of the buckets.

End Notes

To summarize, in this article, we learned how to create aggregation pipelines in a MongoDB database using PyMongo. Also, we understood how to apply various operators like the match, group, bucket, and facet.

In case you want to learn more about querying data, I recommend the following course – Structured Query Language (SQL) for Data Science

I encourage you to try things on your own and share your experiences in the comments section. Additionally, if you face any problem with any of the above concepts, feel free to ask me in the comments below.