Just in time for back-to-school season, Google is rolling out a set of new Gemini AI Mode features in Search designed to make studying, research, and project planning easier. The updates, announced today by Robby Stein, VP of Product for Google Search, expand AI Mode into new territory: handling course materials, helping students stay organized, and even explaining diagrams in real time through live video input.

High-utility AI Mode tools hitting the web interface

Upload images and PDFs on desktop

Until now, asking detailed questions about images in AI Mode was limited to Google’s mobile apps. That changes this week, as desktop users in the U.S. can now upload images — and soon, PDFs — directly into Search.

Google says the feature is aimed squarely at students. You could, for example, upload a photo of a page from your chemistry textbook or, once PDF support arrives, a psychology lecture PDF, and then ask AI Mode follow-up questions that go beyond the basic content. The system will cross-reference the file’s contents with relevant information from the web, offering an AI-generated explanation alongside prominent links for deeper research.

Support for other file types, including documents from Google Drive, is planned for later this year.

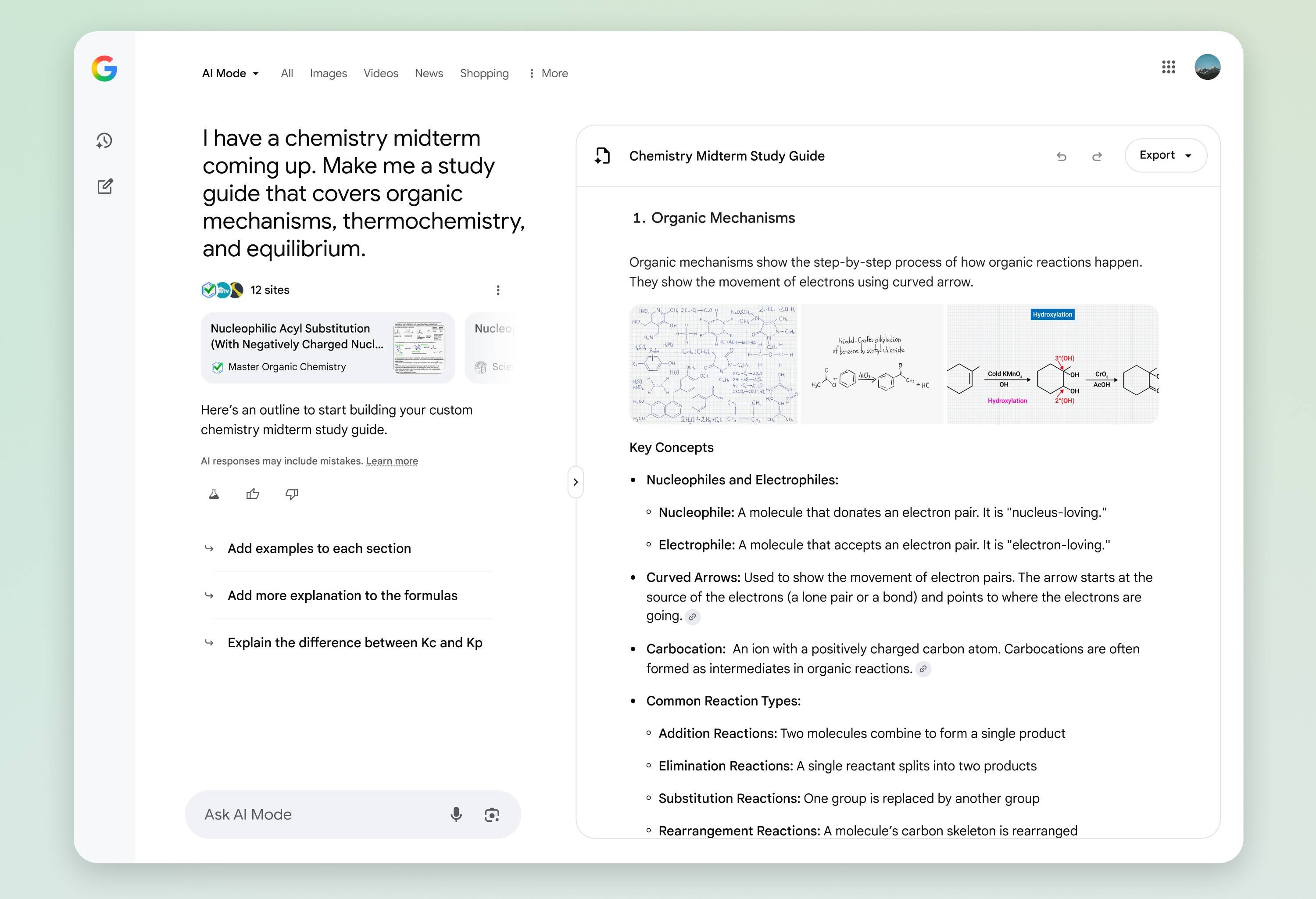

Canvas: an interactive planning panel

Another addition is Canvas, a new mode that lives in a dynamic side panel. Instead of spitting out a one-off AI response, Canvas lets you build and refine a plan across multiple sessions.

Say you’re building a study plan for finals: you can create a Canvas, ask Gemini to generate an outline, then refine it step by step. The panel updates in real time, and you’ll soon be able to upload your own notes or syllabi to customize the plan even further.

Google is pitching Canvas as useful beyond education — for trip planning, major projects, or anything that benefits from structured, iterative planning. Canvas is rolling out in the coming weeks for U.S. users enrolled in the AI Mode Labs experiment.

Search Live with video input

Google is also bringing in some of the tech it previewed earlier this year under “Project Astra.” Search Live lets you point your phone’s camera at a problem — say, a tricky geometry diagram or a confusing formula — and have a back-and-forth conversation with AI Mode about what you’re seeing.

Because Search Live works from a live camera feed rather than a still image, it can handle moving objects and multiple angles, and it ties directly into Google Lens. The feature starts rolling out this week on mobile in the U.S., again limited to AI Mode Labs testers.

Lens in Chrome gets smarter

Finally, Google is upgrading Lens in Chrome with AI Mode follow-ups. Lens has long been able to identify what’s on your screen, but now you can go deeper by clicking “AI Mode” at the top of the Lens results or tapping a new “Dive deeper” button in the AI Overview.

So if you’re staring at a diagram in an online PDF, you can ask Lens for an overview, then immediately start an AI conversation to better understand what’s in front of you.

The big picture for interactive research and study

Extracting real-world usefulness from the hype

Together, these features push AI Mode beyond being a novelty Q&A tool into something more like a study partner and productivity hub. The ability to upload course materials, build interactive study plans, and get live explanations in context could make Search a lot stickier for students heading into the school year.

Of course, most of these updates are limited to the U.S. and to users in Google’s AI Mode Labs program — so not everyone will see them right away. But the direction is clear: Google wants AI Mode to become the go-to tool not just for finding information, but for making sense of it.
