Summary

- Google removes pledge to avoid harmful AI applications, signaling a shift in stance.

- Google hints at AI’s role in national security and calls for public-private collaboration on AI that protects people.

- New guidelines maintain commitment to social responsibility and following international law in AI development.

Google has made a curious change to its public-facing AI principles. As spotted by The Washington Post (and picked up by The Verge), Google on Tuesday published an updated version of its AI development guidelines that removes references to the company’s prior commitment to avoid using AI in applications that “cause or are likely to cause overall harm,” including AI-enhanced weapons and surveillance tech.

In a blog post accompanying the company’s new guidelines, Google’s James Manyika and Demis Hassabis discuss the company’s rationale for recent changes. “There’s a global competition taking place for AI leadership within an increasingly complex geopolitical landscape,” the post reads. “We believe democracies should lead in AI development, guided by core values like freedom, equality, and respect for human rights.”

The bit about democracies leading the way in AI development seems like an oblique reference to China’s DeepSeek AI, which sent shock waves through the US stock market when it was made widely available in late January. The post goes on to say that the public and private sectors should collaborate “to create AI that protects people, promotes global growth, and supports national security.”

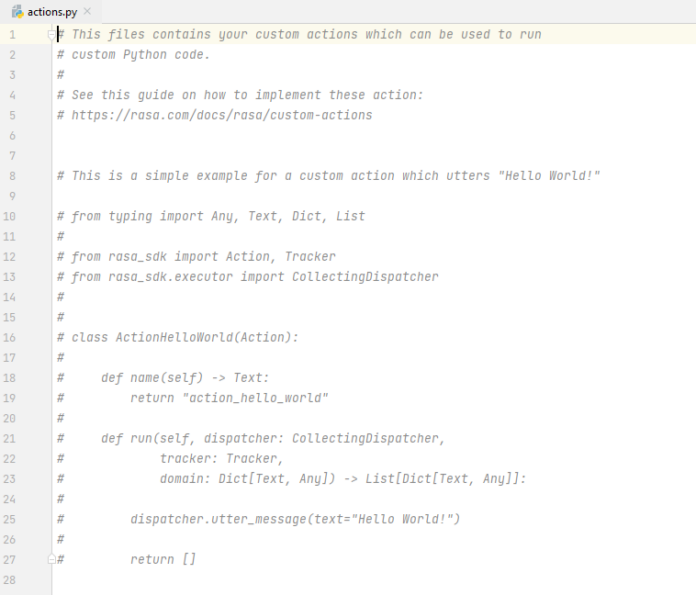

This section has been removed from Google’s AI Principles page.

It’s worth noting here that Google hasn’t said it intends to weaponize AI. But removing public pledges not to do so, in conjunction with a post from Google AI leaders that talks up AI’s future role in national security, sure makes it look like the company is more open to leveraging AI in potentially harmful ways than it used to be.

The Verge points out that Google has previously lent its AI expertise to military operations, despite its former promise not to create AI weapons. Google AI was used in a 2018 US military project to analyze drone footage, and a few years later, Google worked with Amazon to fulfill a $1.2 billion contract to provide cloud services to the Israeli government and military.

Google says it’s still committed to ‘social responsibility’ in AI

In Google’s blog post about recent changes to its AI principles, Manyika and Hassabis write that the company still considers it “an imperative to pursue AI responsibly throughout the development and deployment lifecycle.” The post also pledges that Google’s AI development will “stay consistent with widely accepted principles of international law and human rights.”

An archived version of Google’s previous AI principles remains available online.