Google is switching on a new kind of AI assistant, one that doesn’t just talk about doing things but actually clicks the buttons. The company’s Gemini 2.5 Computer Use model is now in public preview for developers through the Gemini API in Google AI Studio and Vertex AI. It lets agents navigate real websites the way a person would: open pages, fill out forms, pick from dropdowns, drag items, and keep going until the job is done.

The next evolution of today’s AI

A significant, actually functional upgrade on the way

Instead of relying on clean, structured APIs, Computer Use works in a loop. Your code sends the model the user’s request, a screenshot of the current screen, and a history of recent actions. Gemini analyzes the scene and replies with a function call such as “click,” “type,” or “scroll,” which your client code then executes. Your code then sends back a fresh screenshot and the current URL, and the cycle repeats until the task succeeds or a safety check stops it. It’s fairly mechanical, but effective: most consumer web interfaces weren’t built for bots, and this approach lets agents work behind logins, where public APIs often don’t exist.
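The loop described above can be sketched in a few lines of Python. This is a minimal, self-contained simulation, not Google’s SDK: `ScriptedModel`, `FakeBrowser`, `run_agent_loop`, and the `"done"` stop action are all hypothetical stand-ins, chosen so the control flow (observe, propose, execute, observe again) is visible without an API key or a real browser.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    """One proposed step, e.g. click/type/scroll; "done" is a hypothetical stop signal."""
    name: str
    args: dict = field(default_factory=dict)


@dataclass
class Observation:
    """What the client sends back each turn: a screenshot plus the current URL."""
    screenshot: bytes
    url: str


class ScriptedModel:
    """Hypothetical stand-in for the model: replays a fixed list of actions."""

    def __init__(self, script):
        self._script = list(script)

    def next_action(self, goal, observation, recent_actions):
        # A real model would reason over goal + screenshot + history here.
        return self._script.pop(0) if self._script else Action("done")


class FakeBrowser:
    """Hypothetical client-side executor that simulates a tiny website."""

    def __init__(self):
        self.url = "https://example.com/login"
        self.log = []

    def execute(self, action):
        self.log.append((action.name, action.args))
        # Clicking the submit button "navigates" to a new page.
        if action.name == "click" and action.args.get("target") == "submit":
            self.url = "https://example.com/dashboard"

    def observe(self):
        return Observation(screenshot=b"<png bytes>", url=self.url)


def run_agent_loop(goal, model, browser, max_steps=10, history_window=5):
    """Screenshot -> model proposes an action -> client executes -> repeat."""
    history = []
    observation = browser.observe()
    for _ in range(max_steps):
        action = model.next_action(goal, observation, history[-history_window:])
        if action.name == "done":
            return history, "done"
        browser.execute(action)
        history.append(action)
        observation = browser.observe()  # fresh screenshot and URL
    return history, "max_steps"


model = ScriptedModel([
    Action("type", {"target": "username", "text": "ada"}),
    Action("click", {"target": "submit"}),
])
browser = FakeBrowser()
history, status = run_agent_loop("Log in to the dashboard", model, browser)
print(status, browser.url)  # done https://example.com/dashboard
```

In a real integration, `next_action` would be a Gemini API call and `execute` would drive an actual browser; the `max_steps` cap is an assumed safeguard against runaway loops, not a documented requirement.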

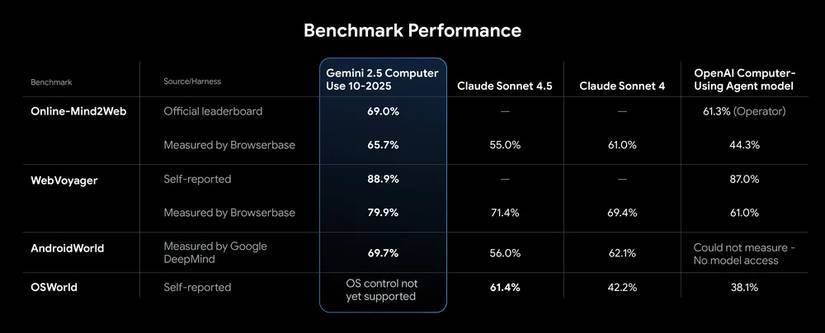

Google says the model is optimized for web browsers first, with promising early results on mobile UIs; desktop OS-level control isn’t the focus yet. On performance, Google reports that Gemini 2.5 Computer Use leads recent browser-control benchmarks such as Online-Mind2Web and WebVoyager, and does so with lower latency in Browserbase’s environment. That pairing of accuracy and speed matters if you’re trying to, say, navigate an account dashboard or book travel in real time. Google has also published additional evaluation details for anyone who wants to dig in.

Safety is treated like a seat belt, not an optional package. Each proposed action can be run through a per-step safety service before it executes, and developers can also require user confirmation for high-stakes moves like purchases or anything that could harm system integrity. You can further narrow which actions are allowed at all, which should help keep agents from clicking themselves into trouble. Still, Google stresses thorough testing before you ship.
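The allowlist and confirmation ideas can be illustrated as a client-side gate that runs before each step executes. This is a hypothetical sketch: `ALLOWED_ACTIONS`, `HIGH_STAKES_TARGETS`, `gate_action`, and `always_decline` are illustrative names, and the code does not model Google’s own per-step safety service, whose interface isn’t described here.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Action:
    name: str
    args: dict = field(default_factory=dict)


# Hypothetical policy: the only actions this agent may take at all.
ALLOWED_ACTIONS = {"click", "type", "scroll", "navigate"}
# Hypothetical high-stakes targets that require a human "yes" first.
HIGH_STAKES_TARGETS = {"buy_now", "place_order", "delete_account"}


def gate_action(action: Action, confirm: Callable[[Action], bool]) -> str:
    """Classify one proposed step as 'execute', 'blocked', or 'declined'."""
    if action.name not in ALLOWED_ACTIONS:
        return "blocked"  # outside the narrowed action space
    if action.args.get("target") in HIGH_STAKES_TARGETS:
        # Pause and ask the user before anything irreversible happens.
        return "execute" if confirm(action) else "declined"
    return "execute"


def always_decline(action: Action) -> bool:
    """Stand-in for a confirmation prompt where the user says no."""
    return False


print(gate_action(Action("click", {"target": "next_page"}), always_decline))    # execute
print(gate_action(Action("click", {"target": "place_order"}), always_decline))  # declined
print(gate_action(Action("drag_and_drop"), always_decline))                     # blocked
```

Checking the allowlist before the confirmation prompt means disallowed actions never reach the user at all. A real deployment would layer a gate like this on top of Google’s safety checks, not in place of them.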

If you want to kick the tires, Google points developers to a hosted demo via Browserbase, sample agent loops, and documentation for building locally with Playwright. And if parts of this feel familiar, that’s because versions of the model have already been working behind the scenes in efforts like Project Mariner, Firebase’s Testing Agent, and some of Search’s AI Mode features. Today’s news simply opens that door to outside developers.

With the preview now available, Gemini looks ready to graduate from an assistant that suggests to one that does. If your workflows live on the web, this could be the most interesting thing Google has shipped this year.