The human face and body are key entities in the task of fake content generation. When dealing with the human face in particular, a deepfake solution needs to detect not only the overall face in each image or video frame but also the eyes, mouth, and other features. The key feature sets used for this purpose are:

- Facial Action Coding System (FACS)

- 3D Morphable Model (3DMM)

- Facial landmarks

FACS and 3DMM-based features are highly accurate but computationally expensive, and they sometimes require human intervention (for example, FACS coding) for proper results. Facial landmarks are another feature set that is simple yet powerful, and several recent works use them to achieve state-of-the-art results.

This article is an excerpt from the book Generative AI with Python and TensorFlow 2 by Joseph Babcock and Raghav Bali. The book is a comprehensive resource that enables you to create and implement your own generative AI models through practical examples.

Facial landmarks are a list of important facial features, such as the nose, eyebrows, mouth, and corners of the eyes. The goal is to detect these key features using some form of regression model. There are several methods we can use to detect facial landmarks as features for the task of fake content generation. In this article, we will cover three of the most widely used ones: OpenCV, dlib, and MTCNN.

Facial landmark detection using OpenCV

OpenCV is a computer vision library aimed at handling real-time tasks. It is one of the most popular and widely used libraries, with wrappers available in several languages, Python included. It also comes with extensions and contrib packages, such as the ones for face detection, text manipulation, and image processing, which enhance its overall capabilities.

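Facial landmark detection can be performed using OpenCV in a few different ways. One of the ways is to leverage Haar cascade classifiers, which evaluate simple Haar-like rectangle features through a cascade of boosted classifiers for object detection (the combination of histograms of oriented gradients and an SVM is what dlib's default face detector uses). OpenCV also supports a DNN-based method of performing the same task.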
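To give a feel for what a Haar cascade evaluates, here is a minimal pure-Python sketch of a single two-rectangle Haar-like feature computed with an integral image. It is not OpenCV's implementation; the function names and the tiny 4x4 patch are illustrative assumptions. A real cascade combines many such features in stages.

```python
def integral_image(pixels):
    """Return a summed-area table with an extra leading row and column of zeros."""
    height, width = len(pixels), len(pixels[0])
    table = [[0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += pixels[y][x]
            table[y + 1][x + 1] = table[y][x + 1] + row_sum
    return table


def rect_sum(table, x, y, w, h):
    """Sum of the pixels in the rectangle with top-left corner (x, y) and size w x h."""
    return table[y + h][x + w] - table[y][x + w] - table[y + h][x] + table[y][x]


def two_rect_feature(table, x, y, w, h):
    """Left half minus right half of the window: responds to vertical edges."""
    half = w // 2
    left = rect_sum(table, x, y, half, h)
    right = rect_sum(table, x + half, y, half, h)
    return left - right


# Hypothetical 4x4 grayscale patch: bright on the left, dark on the right.
patch = [
    [200, 200, 10, 10],
    [200, 200, 10, 10],
    [200, 200, 10, 10],
    [200, 200, 10, 10],
]
table = integral_image(patch)
feature_value = two_rect_feature(table, 0, 0, 4, 4)
print(feature_value)  # 1520
```

Because every rectangle sum costs only four table lookups, a detector can evaluate a large number of these features per window cheaply, which is what makes the cascade approach suitable for real-time use.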

Facial landmark detection using dlib

Dlib is another cross-platform library that provides functionality broadly similar to that of OpenCV. The major advantage dlib offers over OpenCV is a list of pretrained detectors for faces as well as landmarks. Before we get into the implementation details, let’s learn a bit more about the landmark features.

Facial landmarks are granular details on a given face. Even though each face is unique, certain attributes help us to identify a given shape as a face. This precise list of common traits is codified into what is called the 68-coordinate or 68-point system. This point system was devised for annotating the iBUG-300W dataset, which forms the basis of a number of landmark detectors available through dlib. Each feature is given a specific index (out of 68) and has its own (x, y) coordinates. The 68 indices are indicated in Figure 1.

As depicted in the figure, each index corresponds to a specific coordinate, and a set of indices marks a facial landmark. For instance, indices 28-31 correspond to the nose bridge, and the detectors try to predict the coordinates for those indices.
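The grouping of indices into landmarks can be written down as a small lookup table. The sketch below assumes the commonly used layout of the 68-point scheme, with indices numbered from 1 as in the nose bridge example and left/right taken from the subject's point of view. The region names and helper functions are illustrative, not part of dlib. Note that dlib's `part(n)` call is zero-based, so the helper also converts the ranges.

```python
# 1-based, inclusive index ranges of the 68-point annotation scheme.
# Region names are illustrative labels, not dlib identifiers.
LANDMARK_REGIONS = {
    "jaw": (1, 17),
    "right_eyebrow": (18, 22),
    "left_eyebrow": (23, 27),
    "nose_bridge": (28, 31),
    "lower_nose": (32, 36),
    "right_eye": (37, 42),
    "left_eye": (43, 48),
    "outer_lip": (49, 60),
    "inner_lip": (61, 68),
}


def region_of(index):
    """Return the region name for a 1-based landmark index."""
    for name, (first, last) in LANDMARK_REGIONS.items():
        if first <= index <= last:
            return name
    raise ValueError(f"index {index} is outside the range 1-68")


def zero_based_indices(name):
    """Return the zero-based indices to pass to part(n) for a region."""
    first, last = LANDMARK_REGIONS[name]
    return list(range(first - 1, last))


print(region_of(28))                      # nose_bridge
print(zero_based_indices("nose_bridge"))  # [27, 28, 29, 30]
```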

Let’s now leverage this 68-coordinate system of facial landmarks to develop a short demo application for detecting facial features. We will make use of pretrained detectors from dlib and OpenCV to build this demo. The following snippet shows how a few lines of code can help us identify different facial landmarks easily:

    import cv2
    import dlib

    # load dlib's pretrained face detector and 68-point landmark predictor
    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor("shape_predictor_68_face_landmarks.dat")

    image = cv2.imread('nicolas_ref.png')
    # convert to grayscale
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    faces = detector(gray)

    # identify and mark features
    for face in faces:
        # bounding box of the detected face
        x1 = face.left()
        y1 = face.top()
        x2 = face.right()
        y2 = face.bottom()
        landmarks = predictor(gray, face)
        # dlib indexes the 68 points from 0 to 67
        for n in range(0, 68):
            x = landmarks.part(n).x
            y = landmarks.part(n).y
            cv2.circle(image, (x, y), 2, (255, 0, 0), -1)

The preceding code takes in an image of a face as input, converts it to grayscale, and marks the aforementioned 68 points onto the face using a dlib detector and predictor. Once these pieces are in place, we can execute the overall script, which pops open a video capture window. The video output is overlaid with facial landmarks, as shown in Figure 2.

As you can see, the pretrained facial landmark detector seems to be doing a great job. With a few lines of code, we were able to get specific facial features. In the later sections of the chapter, we will leverage these features for training our own deepfake architectures.
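Before the landmarks can serve as model inputs, they usually need to be pulled out of the predictor's result into plain numbers. The following is a minimal sketch of one way to do that, assuming an object that exposes `part(n).x` and `part(n).y` as dlib's shape result does. The helper names, the `FakeShape` stand-in, and the choice to normalize by the face bounding box are illustrative assumptions, not the book's method.

```python
from types import SimpleNamespace


def landmarks_to_points(shape, count=68):
    """Collect (x, y) tuples from an object exposing part(n).x and part(n).y."""
    return [(shape.part(n).x, shape.part(n).y) for n in range(count)]


def normalize_points(points, left, top, right, bottom):
    """Express points relative to the face box so features are position-independent."""
    width = max(right - left, 1)
    height = max(bottom - top, 1)
    return [((x - left) / width, (y - top) / height) for x, y in points]


class FakeShape:
    """Stand-in for a dlib shape so the sketch runs without a model file."""

    def __init__(self, points):
        self._points = points

    def part(self, n):
        x, y = self._points[n]
        return SimpleNamespace(x=x, y=y)


# Made-up coordinates purely for demonstration.
fake = FakeShape([(100 + n, 50 + 2 * n) for n in range(68)])
points = landmarks_to_points(fake)
features = normalize_points(points, left=100, top=50, right=200, bottom=250)
print(len(features), features[0])  # 68 (0.0, 0.0)
```

With real dlib objects, the same helpers would be called as `landmarks_to_points(landmarks)` and `normalize_points(points, face.left(), face.top(), face.right(), face.bottom())`.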

Facial landmark detection using MTCNN

There are several alternatives to OpenCV and dlib for face and facial landmark detection tasks. One of the most prominent and well-performing ones is MTCNN, short for Multi-Task Cascaded Convolutional Networks, developed by Zhang, Zhang et al. (https://kpzhang93.github.io/MTCNN_face_detection_alignment/).

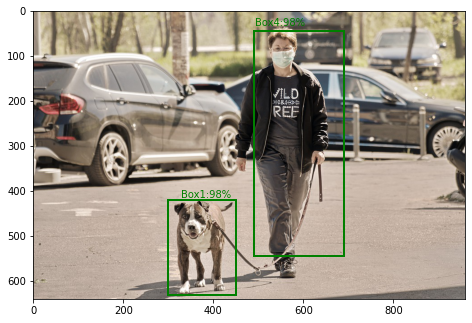

MTCNN is a complex deep learning architecture consisting of three cascaded networks called P-Net, R-Net, and O-Net. Without going into much detail, the setup first builds a pyramid of the input image, i.e. the input image is scaled to different resolutions. The Proposal-Net, or P-Net, then takes these as input and outputs several potential bounding boxes that might contain a face. With some pre-processing steps in between, the Refine-Net, or R-Net, then refines the results by narrowing them down to the most probable bounding boxes. The final output is generated by Output-Net, or O-Net. The O-Net outputs the final bounding boxes containing faces, along with landmark coordinates for the eyes, nose, and mouth.
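The image pyramid step can be sketched in a few lines. The function below computes the list of scale factors at which the input would be resized before being passed to P-Net, which operates on 12x12 windows. The default minimum face size of 20 pixels and the scale factor of 0.709 are values commonly seen in MTCNN implementations and are assumptions here, as is the function name.

```python
import math


def pyramid_scales(height, width, min_face_size=20, scale_factor=0.709, cell_size=12):
    """Return the scales at which to resize the image for the first stage.

    The first scale maps a face of min_face_size pixels onto one
    cell_size x cell_size window; each further scale shrinks the image by
    scale_factor until its shorter side no longer fits a single window.
    """
    scales = []
    scale = cell_size / min_face_size
    min_side = min(height, width) * scale
    while min_side >= cell_size:
        scales.append(scale)
        scale *= scale_factor
        min_side *= scale_factor
    return scales


# Example with a hypothetical 480x640 input image.
for s in pyramid_scales(480, 640):
    print(f"scale {s:.3f} -> {math.ceil(480 * s)} x {math.ceil(640 * s)}")
```

A smaller `min_face_size` produces more pyramid levels, which helps find small faces at the cost of more computation.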

Let us now try out this state-of-the-art architecture to identify faces and corresponding landmarks. Luckily for us, MTCNN is available as a pip package, which is straightforward to use. In the following code listing, we will build a utility function to leverage MTCNN for our required tasks:

    import cv2
    import matplotlib.pyplot as plt
    from matplotlib.patches import Circle, Rectangle
    from mtcnn import MTCNN

    def detect_faces(image, face_list):
        """Draw bounding boxes and landmark dots on a BGR image."""
        plt.imshow(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))
        ax = plt.gca()
        for face in face_list:
            # mark faces
            x, y, width, height = face['box']
            rect = Rectangle((x, y), width, height, fill=False, color='orange')
            ax.add_patch(rect)
            # mark landmark features
            for key, value in face['keypoints'].items():
                dot = Circle(value, radius=12, color='red')
                ax.add_patch(dot)
        plt.show()

    # instantiate the detector
    detector = MTCNN()
    # load sample image (OpenCV reads it in BGR channel order)
    image = cv2.imread('trump_ref.png')
    # detect face and facial landmarks; the detector expects RGB input
    faces = detector.detect_faces(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))
    # visualize results
    detect_faces(image, faces)

As highlighted in the code listing, the MTCNN detector returns two items of interest for each detected face: the bounding box for the face and the coordinates of five facial landmarks (the two eyes, the nose, and the two corners of the mouth). Using these outputs, we can use matplotlib to draw markers on the input image and visualize the predictions. Figure 3 depicts the sample output from this exercise.

As shown in the figure, MTCNN seems to have properly detected all the faces in the image along with the facial landmarks. With a few lines of code, we were able to use a state-of-the-art complex deep learning network to quickly generate the required outputs. As in the dlib/OpenCV exercise in the previous section, we can leverage MTCNN to identify key features that can be used as inputs for our fake content generation models.
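As a small illustration of turning these outputs into usable features, the sketch below filters detections by confidence and estimates the in-plane tilt of a face from its two eye keypoints. The dictionary layout mirrors the `box`, `confidence`, and `keypoints` entries returned by the mtcnn pip package. The sample values, the 0.9 threshold, and the helper names are made up for demonstration.

```python
import math


def confident_faces(faces, threshold=0.9):
    """Keep only detections whose confidence meets the threshold."""
    return [face for face in faces if face["confidence"] >= threshold]


def eye_roll_degrees(keypoints):
    """Angle of the line through both eyes, in degrees (0 means level eyes)."""
    left_x, left_y = keypoints["left_eye"]
    right_x, right_y = keypoints["right_eye"]
    return math.degrees(math.atan2(right_y - left_y, right_x - left_x))


# Hypothetical detections in the same shape as the detector's output.
sample_faces = [
    {
        "box": [10, 20, 100, 120],
        "confidence": 0.99,
        "keypoints": {
            "left_eye": (40, 60), "right_eye": (80, 60), "nose": (60, 85),
            "mouth_left": (45, 110), "mouth_right": (75, 110),
        },
    },
    {
        "box": [180, 30, 110, 130],
        "confidence": 0.55,
        "keypoints": {
            "left_eye": (210, 70), "right_eye": (250, 110), "nose": (225, 105),
            "mouth_left": (200, 125), "mouth_right": (235, 150),
        },
    },
]

for face in confident_faces(sample_faces):
    print(face["box"], eye_roll_degrees(face["keypoints"]))
```

An angle like this could be used to rotate faces to a common orientation before they are fed to a downstream model, though whether that step helps depends on the architecture being trained.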

About the authors

Joseph Babcock has spent more than a decade working with big data and AI in the e-commerce, digital streaming, and quantitative finance domains. Throughout his career, he has worked on recommender systems, petabyte-scale cloud data pipelines, A/B testing, causal inference, and time series analysis. He completed his PhD studies at Johns Hopkins University, applying machine learning to the field of drug discovery and genomics.

Raghav Bali is an author of multiple well-received books and a Senior Data Scientist at one of the world’s largest healthcare organizations. His work involves the research and development of enterprise-level solutions based on Machine Learning, Deep Learning, and Natural Language Processing for Healthcare and Insurance-related use cases. His previous experiences include working at Intel and American Express. Raghav has a master’s degree (gold medalist) from the International Institute of Information Technology, Bangalore.