Objective

- Understand what speech separation is and why we need it.

- Discuss the traditional methods of speech separation.

Table of contents

- What is speech separation?

- Why do we need speech separation?

- A Brief History of Traditional methods

- Voice Separation with an Unknown Number of Multiple Speakers

Note: All audio samples, videos, and images in the 4th section have been taken from Facebook Research here, here and here.

What is speech separation?

Let’s say we want to write a program to generate the lyrics of a song. As we know, this process involves Automatic Speech Recognition (ASR). But will it be able to recognize the speech properly? Even though state-of-the-art ASR methods handle clean speech well, they will still struggle to recognize the lyrics because of the background music.

But wouldn’t it be better if we could somehow separate the speech from the music? Then we would be recognizing the speech without any disturbance (in this case, the music) and ultimately get better results.

That is exactly what speech separation (more formally, audio source separation) is: decomposing a mixed input audio signal into the sources it originally came from. Speech separation is also called the cocktail party problem. The audio can contain background noise, music, speech by other speakers, or even a combination of these.

Note: The task of extracting a single target speech signal from a mixture of sounds is referred to as speech enhancement, while the extraction of multiple speech signals from a mixture is referred to as speech separation. I will use only the term ‘separation’ for the rest of the article.

Why do we need speech separation?

A practical application of speech separation is in hearing aids. Identifying and enhancing non-stationary target speech in various noisy environments, such as a cocktail party, is an important issue for real-time speech separation. As another case, say there are multiple speakers in an audio file, for example when news is being reported by several reporters at a crowded event.

As the reporters are standing close to each other, the recorded audio will have overlapping voices, making it difficult to understand. So it becomes crucial to be able to identify and separate the target speaker.

Since the start of the Information Age, voice information has played an important role in our day-to-day life through phone calls, voice messages, live news telecasts, etc., and thus speech separation plays an important role as well.

A Brief History of Traditional methods

The problem of separating two or more speakers in audio has been a long-standing challenge. In signal processing, for single-speaker audio, speech enhancement methods such as spectral subtraction and the Wiener filter are considered, which rely on an estimate of the noise power spectrum. Independent component analysis (ICA) based blind source separation and non-negative matrix factorization (NMF) are also popular methods.

But in recent times, deep learning techniques have achieved state-of-the-art results in speech separation. Notably, Conv-TasNet, a deep learning framework for end-to-end time-domain speech separation, has achieved very good results. Another deep learning model with good performance is SepFormer, an RNN-free Transformer-based neural network.

The current leading methodology is based on an overcomplete set of linear filters, and on separating the filter outputs at every time step using a mask for two speakers, or a multiplexer for more speakers.

Now let us move on to Facebook Research’s method of speech separation.

Voice Separation with an Unknown Number of Multiple Speakers

This paper by the Facebook research team was published on 1st September 2020 and appeared at the International Conference on Machine Learning (ICML 2020). SVoice (as named by the authors) is a supervised voice separation technique for the single-microphone (single-channel) setting, where the input is one recording containing mixed voices.

This method performs better than the previous state-of-the-art methods on several benchmarks, including speech with reverberation or noise. The datasets used are WHAM, WHAMR, WSJ0-2mix, WSJ0-3mix, WSJ0-4mix, and WSJ0-5mix. The model achieved a scale-invariant signal-to-noise ratio (SI-SNR) improvement of more than 1.5 decibels over the previous state-of-the-art models.
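Since SI-SNR is the headline metric, here is a minimal pure-Python sketch of how it is commonly computed: the estimate is projected onto the target, and whatever remains is treated as noise. The function name `si_snr`, the `eps` constant, and the toy signals are my own illustrative choices, not taken from the paper's code. The SI-SNR improvement reported above would be the score of the separated output minus the score of the unprocessed mixture.

```python
import math

def si_snr(estimate, target, eps=1e-8):
    """Scale-invariant signal-to-noise ratio in decibels (illustrative sketch)."""
    # Remove the mean so a constant offset does not affect the score.
    est_mean = sum(estimate) / len(estimate)
    tgt_mean = sum(target) / len(target)
    est = [e - est_mean for e in estimate]
    tgt = [t - tgt_mean for t in target]

    # Project the estimate onto the target.
    dot = sum(e * t for e, t in zip(est, tgt))
    tgt_energy = sum(t * t for t in tgt) + eps
    scale = dot / tgt_energy
    projection = [scale * t for t in tgt]

    # Whatever is left over is treated as noise.
    noise = [e - p for e, p in zip(est, projection)]
    signal_power = sum(p * p for p in projection)
    noise_power = sum(n * n for n in noise) + eps
    return 10 * math.log10(signal_power / noise_power + eps)

# Toy signals (assumed for illustration): a sine wave plus a small disturbance.
target = [math.sin(0.1 * n) for n in range(200)]
noisy = [t + 0.1 * math.cos(1.3 * n) for n, t in enumerate(target)]

score = si_snr(noisy, target)
score_rescaled = si_snr([2 * x for x in noisy], target)
print(score, score_rescaled)  # the two values agree up to numerical error
```

Rescaling the estimate leaves the score essentially unchanged, which is what "scale-invariant" refers to.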

To tackle the problem of separating speech from a source with an unknown number of speakers, the authors built a system by training separate models for two, three, four, and five speakers. In order to avoid biases that arise from the distribution of data and to promote solutions in which the separation models are not detached from the selection process, they used an activity detection algorithm, which computes the average power of each output channel and verifies that it is above a predefined threshold.

For example, if a model is trained for C speakers, we get C output channels, each of which is checked with the activity detection algorithm. If any channel contains no speech (silence), the model trained for C-1 speakers is tried instead. This process is repeated until there are no silent output channels, or until the 2-speaker model is reached with only one silent output channel.
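The selection procedure described above can be sketched roughly as follows. This is only an illustration under my own assumptions: `select_separation`, `average_power`, the threshold value, and the stub models built by `fake_model` are hypothetical names, and the real separation networks are replaced by stand-ins that simply return a fixed number of active and silent channels.

```python
def average_power(channel):
    """Mean squared amplitude of one output channel."""
    return sum(x * x for x in channel) / len(channel)

def select_separation(mixture, models, threshold=1e-3):
    """Try the largest model first and step down while channels are silent.

    `models` maps a speaker count C to a callable returning C channels.
    """
    for num_speakers in sorted(models, reverse=True):
        channels = models[num_speakers](mixture)
        silent = sum(average_power(ch) < threshold for ch in channels)
        # Accept when every channel carries speech; the 2-speaker model is
        # the last option, so it is accepted with at most one silent channel.
        if silent == 0 or (num_speakers == 2 and silent <= 1):
            return num_speakers, channels
    # Nothing passed the check: fall back to the smallest model.
    smallest = min(models)
    return smallest, models[smallest](mixture)

def fake_model(num_outputs, num_active):
    """Hypothetical stand-in for a trained separation network."""
    def run(mixture):
        active = [list(mixture) for _ in range(num_active)]
        silent = [[0.0] * len(mixture) for _ in range(num_outputs - num_active)]
        return active + silent
    return run

mixture = [0.5, -0.5, 0.25, -0.25]
# Pretend the recording really contains three speakers.
models = {c: fake_model(c, min(c, 3)) for c in (2, 3, 4, 5)}
count, channels = select_separation(mixture, models)
print(count)  # 3
```

The 5-speaker and 4-speaker stubs each produce at least one silent channel, so the loop steps down until the 3-speaker stub passes the check.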

As the number of speakers increases, mask-based techniques become limited because the mask needs to extract and suppress more information from the audio representation. Therefore, in this paper, the authors implemented a mask-free method.

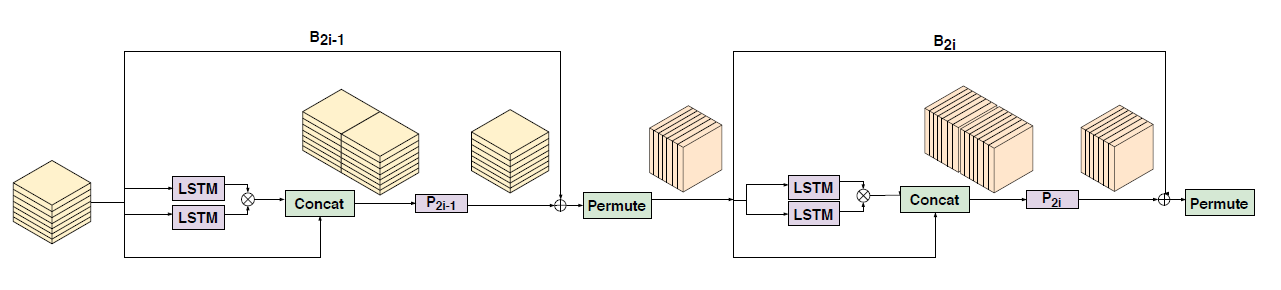

This method applies a sequence of RNNs to the audio. The loss is evaluated after each RNN, giving a compound loss that reflects the reconstruction quality after each layer. The RNNs are bi-directional. Each RNN block is built with a specific type of residual connection, where two RNNs run in parallel. The output of each layer is the concatenation of the element-wise product of the two RNN outputs with the layer input, which is carried over by a skip connection.

For more details on skip connections, check this article. For a detailed explanation of RNNs, refer here.

As part of processing, the method includes encoding and chunking the audio. It differs from other methods in that the RNNs use dual heads and the losses are also different.

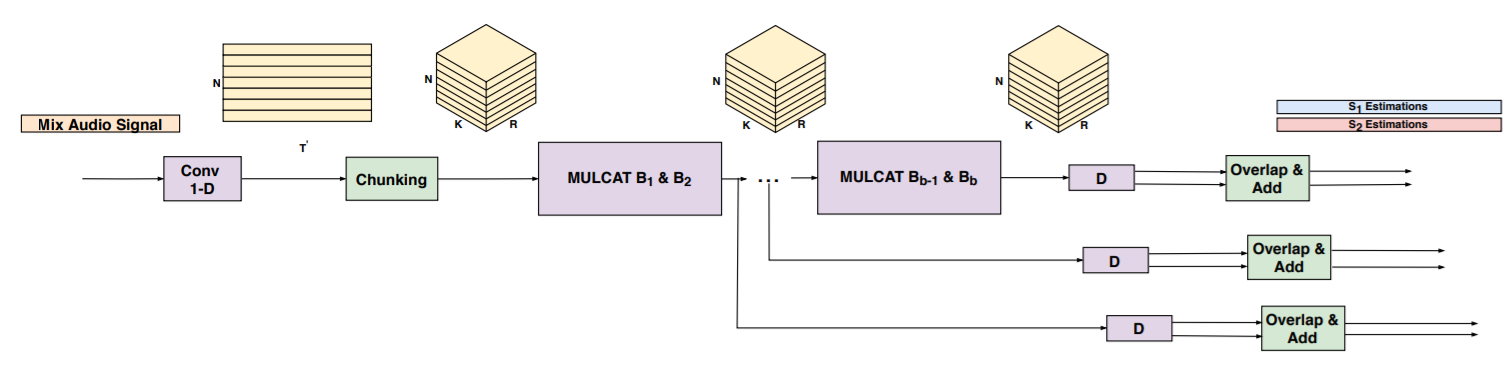

First, an encoder network, E, takes the mixture waveform as input and outputs an N-dimensional latent representation of the waveform. Specifically, E is a 1-D convolutional layer with a kernel size L and a stride of L/2 (L is the compression factor), followed by a ReLU non-linear activation function.
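To make the encoder step concrete, here is a small pure-Python sketch of a strided 1-D convolution followed by ReLU. The function name `encode`, the random filters, and the values L = 8 and N = 4 are illustrative assumptions; in the real model the filters are learned, and this sketch uses no padding.

```python
import random

def encode(waveform, filters, kernel_size):
    """1-D convolution with stride kernel_size // 2, followed by ReLU.

    `filters` is a list of N kernels, each of length kernel_size.
    Returns N rows, one per latent channel.
    """
    stride = kernel_size // 2
    num_frames = (len(waveform) - kernel_size) // stride + 1
    latent = []
    for kernel in filters:
        row = []
        for frame in range(num_frames):
            start = frame * stride
            window = waveform[start:start + kernel_size]
            value = sum(w * k for w, k in zip(window, kernel))
            row.append(max(0.0, value))  # ReLU keeps only non-negative values
        latent.append(row)
    return latent

random.seed(0)
L, N = 8, 4  # illustrative sizes, not the values used in the paper
filters = [[random.uniform(-1, 1) for _ in range(L)] for _ in range(N)]
waveform = [random.uniform(-1, 1) for _ in range(64)]
z = encode(waveform, filters, L)
print(len(z), len(z[0]))  # 4 15
```

With 64 samples, a kernel of 8, and a stride of 4, the encoder produces 15 frames for each of the 4 latent channels.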

The latent representation is then divided into overlapping chunks, and all chunks are concatenated along the singleton dimension to obtain a 3-D tensor.
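A rough sketch of the chunking step is shown below. It assumes a 50% overlap between neighbouring chunks and zero-padding at the end, both of which are my assumptions for illustration; the function name `chunk` and the toy latent are hypothetical as well. The result is indexed as channel, position inside the chunk, and chunk index.

```python
def chunk(latent, chunk_size):
    """Split an N x T latent into overlapping chunks, giving N x K x R.

    Assumes a step of chunk_size // 2 and zero-pads the end of each row.
    """
    step = chunk_size // 2
    length = len(latent[0])
    # Number of chunks needed to cover every frame (ceiling division).
    num_chunks = max(1, -(-(length - chunk_size) // step) + 1)
    padded_len = (num_chunks - 1) * step + chunk_size
    tensor = []
    for row in latent:
        padded = row + [0.0] * (padded_len - length)
        # tensor[n][k][r] is frame k of chunk r in channel n.
        tensor.append([[padded[r * step + k] for r in range(num_chunks)]
                       for k in range(chunk_size)])
    return tensor

# Toy latent with N = 2 channels and 15 frames.
latent = [[float(t) for t in range(15)], [float(-t) for t in range(15)]]
v = chunk(latent, chunk_size=4)
print(len(v), len(v[0]), len(v[0][0]))  # 2 4 7
```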

Next, the tensor is fed into the separation network Q, which consists of b RNN blocks. The odd blocks (1, 3, 5, …) apply the RNN along the time-dependent dimension of size R. The even blocks (2, 4, 6, …) are applied along the chunking dimension.

Intuitively, processing the second dimension yields a short-term representation, while processing the third dimension produces a long-term representation. After this, the method diverges from others by using MULCAT blocks.

MULCAT stands for multiply and concatenate. In the odd blocks, the 3-D tensor obtained from chunking is fed as input to two bi-directional LSTMs that operate along the second dimension. Their outputs are multiplied element-wise, and the result is concatenated with the original input along the third dimension. To obtain a tensor of the same size as the input, a linear projection along this dimension is applied. In the even blocks, the same operations take place along the chunking axis.
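The data flow of a MULCAT block can be sketched as below. To keep the example short and dependency-free, a toy tanh recurrence (`toy_birnn`) stands in for each bi-directional LSTM, so this only illustrates the multiply, concatenate, and project steps, not the real recurrent layers. All names, weights, and sizes are hypothetical, and the sketch assumes the concatenation happens on the feature axis of each step.

```python
import math
import random

def toy_birnn(sequence, weight):
    """Stand-in for a bi-directional LSTM: a simple tanh recurrence run
    forward and backward, with the two passes summed per step."""
    def run(steps):
        state = [0.0] * len(steps[0])
        outputs = []
        for x in steps:
            state = [math.tanh(weight * xi + 0.5 * si)
                     for xi, si in zip(x, state)]
            outputs.append(state)
        return outputs
    forward = run(sequence)
    backward = run(sequence[::-1])[::-1]
    return [[f + b for f, b in zip(fs, bs)]
            for fs, bs in zip(forward, backward)]

def mulcat(sequence, projection, weight_a=0.8, weight_b=-0.6):
    """Multiply two recurrent outputs, concatenate the input, project back."""
    out_a = toy_birnn(sequence, weight_a)
    out_b = toy_birnn(sequence, weight_b)
    result = []
    for x, a, b in zip(sequence, out_a, out_b):
        product = [ai * bi for ai, bi in zip(a, b)]  # multiply
        joined = product + x                         # concatenate: 2N values
        # Linear projection from 2N features back to N features.
        result.append([sum(w * v for w, v in zip(row, joined))
                       for row in projection])
    return result

random.seed(0)
N, steps = 3, 5  # illustrative sizes
sequence = [[random.uniform(-1, 1) for _ in range(N)] for _ in range(steps)]
projection = [[random.uniform(-1, 1) for _ in range(2 * N)] for _ in range(N)]
out = mulcat(sequence, projection)
print(len(out), len(out[0]))  # 5 3
```

The output has the same shape as the input, which is what allows several such blocks to be stacked one after another.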

The method employs a multi-scale loss, which requires the original audio to be reconstructed after each pair of blocks. A PReLU non-linearity is applied to the 3-D tensor. To transform the 3-D tensor back to audio, the overlap-and-add operator is applied to the R chunks. This operator, which inverts the chunking process, adds overlapping frames of the signal after offsetting them appropriately by a certain step size.
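The overlap-and-add operator is easy to illustrate on a single channel. The sketch below places each chunk at its offset and sums wherever chunks overlap; the function name `overlap_add` and the toy signal are my own illustrative choices.

```python
def overlap_add(chunks, step):
    """Rebuild a sequence from overlapping chunks by summing them in place."""
    chunk_size = len(chunks[0])
    total = (len(chunks) - 1) * step + chunk_size
    output = [0.0] * total
    for index, chunk in enumerate(chunks):
        offset = index * step  # where this chunk starts in the output
        for k, value in enumerate(chunk):
            output[offset + k] += value
    return output

signal = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
chunks = [signal[0:4], signal[2:6]]  # chunk size 4, step 2
rebuilt = overlap_add(chunks, step=2)
print(rebuilt)  # [1.0, 2.0, 6.0, 8.0, 5.0, 6.0]
```

Note that the two middle frames are covered by both chunks, so they come out summed in this toy example.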

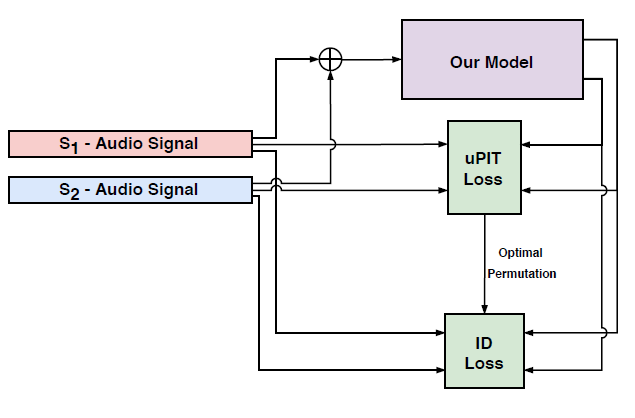

Coming to the speaker classification loss, a common problem is aligning the separated speech frames with the same output stream. Usually, the permutation invariant training (PIT) loss is applied independently to each input frame. The authors instead used utterance-level PIT (uPIT), which is applied to the whole sequence at once. This drastically improves performance in situations where the output is flipped between different streams.
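The idea of choosing one permutation for the whole sequence can be sketched like this. The example tries every assignment of output channels to reference sources and keeps the one with the lowest loss. A plain mean squared error stands in for the actual training objective here, and the names `upit_loss` and `mse` as well as the toy data are hypothetical.

```python
from itertools import permutations

def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def upit_loss(estimates, targets, loss_fn=mse):
    """Smallest mean loss over all assignments of outputs to sources.

    A single permutation is chosen for the entire utterance.
    """
    best_loss, best_perm = float("inf"), None
    for perm in permutations(range(len(targets))):
        loss = sum(loss_fn(estimates[i], targets[p])
                   for i, p in enumerate(perm))
        loss /= len(targets)
        if loss < best_loss:
            best_loss, best_perm = loss, perm
    return best_loss, best_perm

targets = [[1.0, 1.0, 1.0], [-1.0, -1.0, -1.0]]
estimates = [[-0.9, -1.1, -1.0], [1.0, 0.9, 1.1]]  # outputs are swapped
loss, perm = upit_loss(estimates, targets)
print(perm)  # (1, 0)
```

Because the search is exhaustive, its cost grows factorially with the number of speakers, which is manageable for the two to five speakers considered here.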

But still, the performance was not close to optimal. So the authors added a loss function that imposes a long-term dependency on the output. For this, they used a speaker recognition model that had been trained to identify the speakers in the training set, and minimized the L2 distance between the network embeddings of the predicted audio channel and the corresponding source.

Endnotes

This model works well for music separation as well. In songs, it becomes crucial to separate the singer’s voice from the background music. It also works well with noisy and reverberant audio. As the dataset used is not publicly licensed, the pre-trained model is not available. But one can find the code for the model architecture and the preprocessing in the authors’ official GitHub repository. A detailed explanation of the architecture, with the dimensions of each layer, is given in the paper.