This article was published as a part of the Data Science Blogathon.

Introduction

Machine Learning has become a buzzword in recent times. There are many reasons for the great excitement around machine learning. One of the important reasons is the wide range of applications where machine learning techniques have been implemented. There are hardly any areas of business or technology where the learning techniques haven’t played an important role. This is also the reason why Billions of dollars are being poured in by corporations and governments across the globe.

Another important reason for this newfound interest in the development of machine learning-based systems is the ease through which these systems can be implemented. This ease is because of the availability of a huge number of useful packages. Machine Learning packages like SciKit Learn, TensorFlow, and Keras, among others, have helped the developers in building sophisticated machine learning models without actually writing the code for the algorithms.

As a matter of fact corporations like Google have come out with frameworks, using which machine learning-based systems can be developed without writing a single line of code! DialogFlow is one such prime example where a conversational system can be developed without writing any code.

But…. Every coin has two sides..!

Even as on the one side machine learning techniques have found their way into most of the applications, on the other side different ways are being identified by the hackers and crackers to compromise these machine learning systems.

Threats to Machine Learning Systems

It is important to understand how machine learning techniques work to understand the security threats it might face. In machine learning, as the name suggests, the system tries to learn something and train itself with the help of examples. These examples are called the training datasets. Using these examples a machine learning system creates its own generalized rules for predictions.

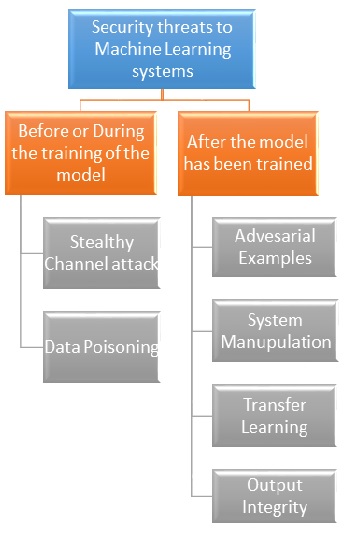

Now, the security threats to machine learning systems can be categorized into two types according to the learning model’s training status. These two categories are, Threats Before or during the training of the model and Threats After the machine learning model has been trained.

Threats to Machine Learning Systems: Before or During the Training of the Model

As previously mentioned, a machine learning system learns from the training data provided to it. Also, many factors determine the accuracy of a system but, generally, it can be said that the quality of a dataset provided to a learning system for training plays a very important role in determining the accuracy of a system. So, it can be said that data is a very important component in the development of a machine learning system.

Understanding the importance of the data in the development of machine learning systems, attackers have tried to target the training data itself. There are two ways in which the data destined to be used for the training of machine learning systems can be targeted.

1. Stealthy Channel Attack

As already mentioned the quality of the data plays a crucial role in developing a good machine learning model. So, the collection of good and relevant data is a very important task. For the development of a real-world application, data is collected from various sources. This is where an attacker can insert fraudulent and inaccurate data, thus compromising the machine learning system. So, even before a model has been created, by inserting

a very large chuck of fraudulent data the whole system can be compromised by the attacker, this is a stealthy channel attack. This is the reason why the

data collectors should be very diligent while collecting the data for machine learning systems.

2. Data Poisoning

One of the most highly studied and effective attacks on a machine learning system is data poisoning. As data plays such a crucial role in machine learning even a small deviation in the data can render the system useless. The attackers use this vulnerability and sensitivity of the machine learning systems and try to manipulate the data to be used for training.

Taking an example, suppose the model was to be given the data as

– “What is the capital of India?”, expected answer “New Delhi”.

Manipulated or poisoned data might look like

– “What is the capital of India?”, answer “New Mumbai” or

– “What is the capital of Australia”, answer “New Delhi”.

Both these cases illustrate the poisoning of the data. By changing just a single word the data can be poisoned.

Data poisoning directly affects two important aspects of data, data confidentiality, and data trustworthiness. Many a time the data used for training a system might contain confidential and sensitive information. By poisoning attack, the confidentiality of the data is lost. It is often believed that maintaining the confidentially of data is a challenging area of study by itself, the additional aspect of machine learning makes the task of securing the confidentiality of the data becomes that much more important.

Another important aspect affected by data poisoning is data trustworthiness. While developing a machine learning system the ML engineer must have confidence in the data being used. When data is collected from online sources or from sensors the risk of these things getting compromised or poisoned is very high. Hence, data poisoning affects the confidence of an engineer in the data itself.

Generally, data confidentiality and data trustworthiness have been treated separately as individual threats. But, it is believed that the source of this security threat is data poisoning. That is, the loss of confidence in the confidentially of the data and lack of trust in the data can be considered as a consequence of data poisoning. Hence, it has been considered under the data poisoning attack itself.

There are two specific attacks under data poisoning.

i. Label Flipping

In the Label Flipping attack, the data is poisoned by changing the labels in data. The training data provided to the machine learning model contains the expected output for the given input as well, in the case of supervised learning. If these expected outputs are of a distinct group then it is called labels. In label flipping these expected outputs are interchanged.

Consider the following example. The first table showcases the original data and the second table shows the data after label flipping.

Table 1: Original Data

| Cities | Country |

| Delhi | India |

| Mumbai | India |

| Bangalore | India |

| Sydney | Australia |

| Melbourne | Australia |

| Perth | Australia |

Table 2: Data after label flipping

| Cities | Country |

| Delhi | Australia |

| Mumbai | Australia |

| Bangalore | Australia |

| Sydney | India |

| Melbourne | India |

| Perth | India |

The table shows the cities (Input data) associated with its country (Label). In the original data, the association between the cities and country was correct and in the second table this has been altered, this is label flipping.

ii. Gradient Descent Attack

A machine learning model learns by trial and error method. In the first iteration itself, it is almost impossible for the model to start predicting the right answers. Generally, the model evaluates the predicted answers with the actual answers provided to it, and gradually over many iterations, it tries to descend towards the right answers by tweaking the constant variables. This general principle is called gradient descent. The gradient descent attack is undertaken while the model is being trained.

In gradient descent, the model keeps on performing the iterations by tweaking the constant variables until and unless it is convinced that this is the closest it is going to be to the actual answer.

Gradient descent attack can be performed in two ways. Firstly, the model can be pushed into an infinite loop of iterations by making it believe that it is still very far away from the actual answer. This is done by continuously changing the actual answer, because of which the model will get stuck in an infinite loop of iterations and the training of the model never attains completion.

The second way of attacking a machine learning model using gradient descent is making the model believe that it has completed the descent towards the right answer. That is, the model is falsely made to believe that the predicted answer is the actual answer. Because of this attack, the model is not properly trained, or in other words, it can be said that the model has been trained on inaccurate parameters. Due to this incomplete training, all the predicted answers of the model will be inaccurate. Thus, compromising the model.

Threats to Machine Learning Systems: After the Model has been Trained

While the focus of the attack before the model has been trained is on the training data, the focus of the attacks after the model has been trained is on the trained model itself. The different attacks on the trained model have been discussed here.

1. Adversarial Examples/Evasion Attack

Adversarial Examples or Evasion Attack is another important and highly studied security threat to machine learning systems. In this type of attack, the input data or the testing data is manipulated in such a way that it forces the machine learning system into predicting incorrect information. This compromises the integrity of the system and also the confidence in the system is also affected.

It has also been observed that a system that overfits the data is highly susceptible to evasion attacks. One of the important reason for this is, rather than have a generalized rule an overfit model has very specific rules for prediction which can be easily breached hence, it is more likely to predict incorrectly and easy to be attacked.

2. System Manipulation

A true machine learning system never ceases to lean, it keeps on learning and improving itself. The improvement is done by taking constant feedback from the environment. This is somewhat similar to the reinforcement models which take continuous feedback from a critic element. This constant feedback and self-improvement is an important quality of a good machine learning system.

This property of a good machine learning system can be misused by the attacker by steering the system in the wrong direction by providing falsified data as feedback to the system. So, over some time instead of improvement in the performance, the system performance degrades thus altering the behavior of the system and rendering the system useless.

3. Transfer learning Attack

Many times for quick production pre-trained machine learning models are used. One of the important reasons for using pre-trained models is, for certain applications that involve the requirement of a large amount of training data the training time is exponentially high. With computing resources still being a luxury, it makes sense to go for pre-trained models.

These pre-trained models are tweaked and fine-tuned according to the requirements and used. But, as the models have been pre-trained and there is no way to know if the model has been trained on the advertised dataset or not. This can be exploited by the attacker who might manipulate or replace a genuine model with a malicious model.

4. Output Integrity Attack

If an attacker can get in-between the model and interface responsible for displaying the results then manipulated results can be displayed. This type of attack is called the output integrity attack. Due to our lack of understanding of the actual internal working of a machine learning system theoretically, it becomes difficult to anticipate the actual result. Hence, when the output has been displayed by the system it is taken at face value. This naivety can be exploited by the attacker by compromising the integrity of the output.

These are some of the security threats to a machine learning system. In a gist, the different security threats to a machine learning system can be categorized as:

I. Before or During the Training of the Model

1. Stealthy Channel attack during raw data collection phase

2. Data Poisoning (data confidentiality and trust worthiness)

i. Label Flipping

ii. Gradient Descent Attack

II. After the Model has been Trained

1. Adversarial Examples/Evasion Attack (overfit data can be easily attacked)

2. System Manipulation (especially for reinforcement / models trying to lean perpetually)

3. Transfer learning

4. Output Integrity Attack

References

[1] Pulei Xiong, Scott Buffett, Shahrear Iqbal, Philippe Lamontagne, Mohammad Mamun, and Heather Molyneaux, “Towards a Robust and Trustworthy Machine Learning System Development”, National Research Council Canada, Canada, 2021. Available at: https://arxiv.org/pdf/2101.03042.pdf. Last Accessed: 22nd Jan, 2021

[2] Gary McGraw, Richie Bonett, Victor Shepardson, and Harold Figueroa, “The Top 10 Risks of Machine Learning Security”, IEEE Computer Society, Cybertrust, June 2020, pp. 57-61.

The media shown in this article are not owned by Analytics Vidhya and is used at the Author’s discretion.