This article went through a series of changes!

I was initially writing on a different topic (related to analytics). I had almost finished writing it. I had put in about 2 hours and written an average article. If I had made it live, it would have done OK! But something in me stopped me from making it live. I was just not satisfied with the output. The article didn’t convey how I am feeling about 2015 and how useful Analytics Vidhya could become for your analytics learning this year.

So, I put that article in the Trash and started rethinking which topic would do justice. This is what I ended up with: a series of articles and guides on my biggest learning in 2014, the scikit-learn (sklearn) library in Python. It was my biggest learning because it is now the tool I use for any machine learning project I work on.

Creating these articles would not only be immensely useful for readers of the blog but would also challenge me in writing about something I am still relatively new at. I would also love to hear from you on the same – what was your biggest learning in 2014 and would you want to share it with readers of this blog?

What is scikit-learn or sklearn?

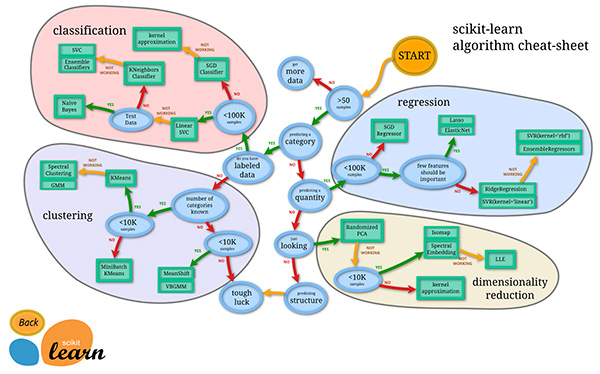

Scikit-learn is probably the most useful library for machine learning in Python. The sklearn library contains a lot of efficient tools for machine learning and statistical modeling including classification, regression, clustering and dimensionality reduction.
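As a rough illustration of that range, the sketch below runs one estimator from each of the four families on the bundled Iris data. The estimators and parameter values (`max_iter=1000`, `n_clusters=3`, `n_init=10`, `n_components=2`, `random_state=0`) are picked purely for the example and assume a reasonably recent scikit-learn release.

```python
from sklearn import datasets
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA
from sklearn.linear_model import LinearRegression, LogisticRegression

iris = datasets.load_iris()
X, y = iris.data, iris.target

# Classification: predict the species from the four measurements
clf = LogisticRegression(max_iter=1000).fit(X, y)
species_pred = clf.predict(X)

# Regression: predict petal width (last column) from the other three columns
reg = LinearRegression().fit(X[:, :3], X[:, 3])
width_pred = reg.predict(X[:, :3])

# Clustering: group the rows into three clusters without using the labels
km = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X)
cluster_labels = km.labels_

# Dimensionality reduction: project the four features onto two components
X_2d = PCA(n_components=2).fit_transform(X)

print(species_pred.shape, width_pred.shape, cluster_labels.shape, X_2d.shape)
```

Every estimator here is trained with the same `fit` call, which is a large part of why switching between algorithms in sklearn takes so little code.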

Please note that sklearn is meant for building machine learning models. It is not the right tool for reading, manipulating, or summarizing data; there are better libraries for that (e.g. NumPy, Pandas).

Components of scikit-learn:

Scikit-learn comes loaded with a lot of features. Here are a few of them to help you understand the spread:

- Supervised learning algorithms: Think of any supervised machine learning algorithm you might have heard about and there is a very high chance that it is part of scikit-learn. From generalized linear models (e.g. linear regression), Support Vector Machines (SVM), and decision trees to Bayesian methods, all of them are part of the scikit-learn toolbox. The spread of machine learning algorithms is one of the big reasons for the high usage of scikit-learn. I started using scikit-learn to solve supervised learning problems and would recommend the same to people new to scikit-learn or machine learning.

- Cross-validation: There are various methods to check the accuracy of supervised models on unseen data using sklearn.

- Unsupervised learning algorithms: Again, there is a large spread of algorithms on offer, from clustering, factor analysis, and principal component analysis to unsupervised neural networks.

- Various toy datasets: This came in handy while learning scikit-learn. I had learned SAS using various academic datasets (e.g. IRIS dataset, Boston House prices dataset). Having them handy while learning a new library helped a lot.

- Feature extraction: Scikit-learn provides tools for extracting features from images and text (e.g. bag of words).
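Two of the components listed above, cross-validation and feature extraction, can be sketched in a few lines. This is only a minimal illustration: the choice of 5 folds, the `max_iter=1000` setting, and the two example sentences are assumptions made for the demo, and the import path `sklearn.model_selection` assumes a recent scikit-learn release.

```python
from sklearn import datasets
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

# Cross-validation: 5-fold accuracy of a classifier on a toy dataset
iris = datasets.load_iris()
clf = LogisticRegression(max_iter=1000)
scores = cross_val_score(clf, iris.data, iris.target, cv=5)
print("Accuracy per fold:", scores)
print("Mean accuracy:", scores.mean())

# Feature extraction: bag-of-words counts for two made-up sentences
docs = ["sklearn builds models", "pandas reads data and sklearn builds models"]
vectorizer = CountVectorizer()
counts = vectorizer.fit_transform(docs)  # sparse matrix: one row per document
print(sorted(vectorizer.vocabulary_))    # the learned vocabulary
print(counts.toarray())                  # word counts per document
```

Each fold is scored on rows the model did not see during fitting, which is what makes the averaged score a fairer estimate than accuracy on the training data.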

Community / Organizations using scikit-learn:

One of the main reasons for using open source tools is the huge community behind them, and the same is true for sklearn. There are about 35 contributors to scikit-learn to date, the most notable being Andreas Mueller (P.S. Andy’s machine learning cheat sheet is one of the best visualizations to understand the spectrum of machine learning algorithms).

Organizations such as Evernote, Inria and AWeber are listed as users on the scikit-learn home page, but I truly believe that the actual usage is far wider.

In addition to these communities, there are various meetups across the globe. There was also a Kaggle knowledge contest, which finished recently but might still be one of the best places to start playing around with the library.

Quick Example:

Now that you understand the ecosystem at a high level, let me illustrate the use of sklearn with an example. The idea is to just illustrate the simplicity of usage of sklearn. We will have a look at various algorithms and best ways to use them in one of the articles which follow.

We will build a logistic regression model on the Iris dataset:

Step 1: Import the relevant libraries and read the dataset

[stextbox id = “grey”]

import numpy as np
import matplotlib.pyplot as plt
from sklearn import datasets
from sklearn import metrics
from sklearn.linear_model import LogisticRegression

[/stextbox]

We have imported all the libraries. Next, we read the dataset:

[stextbox id = “grey”]

dataset = datasets.load_iris()

[/stextbox]
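Before modeling, it can help to see what `load_iris()` actually returns. The short sketch below only reads attributes of the loaded object; nothing in it is specific to this article beyond the `dataset` variable name.

```python
from sklearn import datasets

dataset = datasets.load_iris()

print(dataset.data.shape)      # (150, 4): 150 flowers, 4 measurements each
print(dataset.feature_names)   # names of the four measurement columns
print(dataset.target_names)    # the three species
print(dataset.target[:5])      # class labels are stored as integers
```

`dataset.data` holds the features and `dataset.target` holds the labels, which is why those two attributes are passed to the model in the later steps.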

Step 2: Understand the dataset by looking at distributions and plots

I am skipping these steps for now. You can read this article, if you want to learn exploratory analysis.

Step 3: Build a logistic regression model on the dataset and make predictions

[stextbox id = “grey”]

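# Create the classifier and fit it on the full dataset
model = LogisticRegression()
model.fit(dataset.data, dataset.target)

# Predict on the same rows the model was trained on
expected = dataset.target
predicted = model.predict(dataset.data)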

[/stextbox]
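Once fitted, the same model can score a flower it has never seen. The sketch below is self-contained and uses a made-up measurement (`new_flower`, a hypothetical name and value) along with `max_iter=1000`, which is an assumption added to avoid convergence warnings on newer releases.

```python
from sklearn import datasets
from sklearn.linear_model import LogisticRegression

dataset = datasets.load_iris()
model = LogisticRegression(max_iter=1000)
model.fit(dataset.data, dataset.target)

# Hypothetical flower: sepal length, sepal width, petal length, petal width (cm)
new_flower = [[5.0, 3.4, 1.5, 0.2]]

label = model.predict(new_flower)[0]
probabilities = model.predict_proba(new_flower)[0]

print(dataset.target_names[label])  # predicted species name
print(probabilities)                # one probability per species
```

`predict` returns the integer class, so indexing `target_names` with it turns the result back into a readable species name.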

Step 4: Print the classification report and confusion matrix

[stextbox id = “grey”]

print(metrics.classification_report(expected, predicted))

print(metrics.confusion_matrix(expected, predicted))

[/stextbox]
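The report above is computed on the same rows the model was trained on, so it tends to look better than performance on new data would. A minimal sketch of checking the model on held-out rows follows; the 70/30 split, `random_state=0`, `stratify`, and `max_iter=1000` are illustrative assumptions rather than settings from this article.

```python
from sklearn import datasets, metrics
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

dataset = datasets.load_iris()

# Hold out 30% of the rows; stratify keeps the class balance in both parts
X_train, X_test, y_train, y_test = train_test_split(
    dataset.data, dataset.target,
    test_size=0.3, random_state=0, stratify=dataset.target,
)

model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)          # learn from the training rows only
predicted = model.predict(X_test)    # predict rows the model has not seen

print(metrics.accuracy_score(y_test, predicted))
print(metrics.confusion_matrix(y_test, predicted))
```

The confusion matrix now has 45 entries in total, one per held-out flower, and gives a more honest picture of how the model generalizes.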

End Notes:

This was an overview of one of the most powerful and versatile machine learning libraries in Python. It was also my biggest learning in 2014. What was your biggest learning in 2014? Please share it with the group through the comments below.

Are you excited about learning and using scikit-learn? If yes, stay tuned for the remaining articles in this series.

A quick reminder: If you have not checked out Analytics Vidhya Discuss yet, you should do it now. Users are joining in quickly – so take up that username you want before it gets picked up by someone else!