In artificial intelligence (AI), where breakthroughs are frequent, developers face the challenge of integrating powerful models into their applications. Monster API addresses this need by streamlining the fine-tuning and deployment of open-source models. As 2024 begins, the focus is on building on the advances of 2023 and overcoming the challenges that open-source models pose.

Navigating the AI Landscape

In a recent tweet, user Santiago highlighted the growing demand for expertise in Large Language Model (LLM) application development, Retrieval Augmented Generation (RAG) workflows, and open-source model optimization. Compared with closed-source models, open-source models offer privacy, flexibility, and transparency. However, Santiago also pointed out the challenges of tuning and deploying them.

Monster API – Bridging Gaps

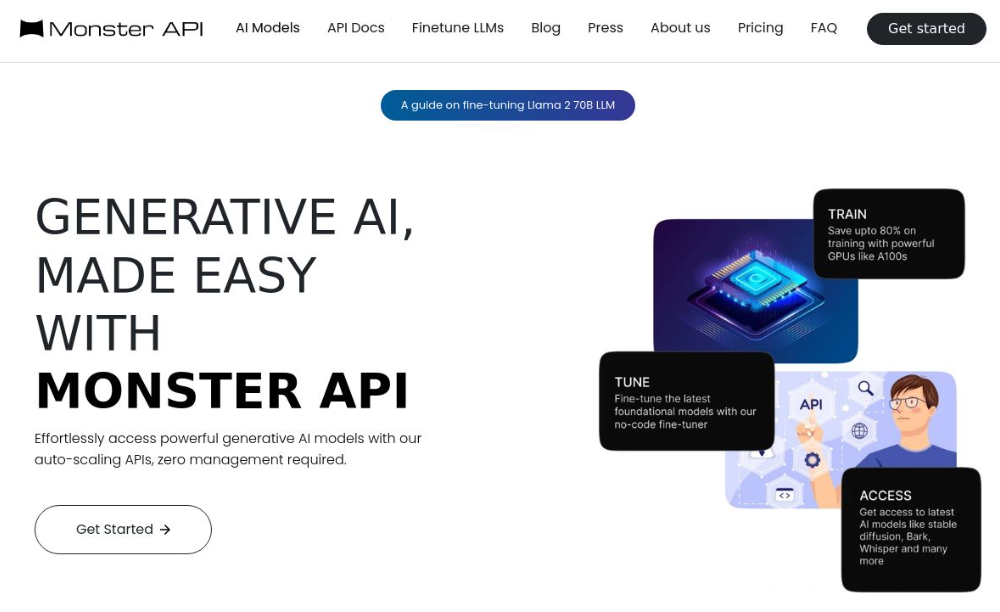

Monster API addresses the concerns raised by Santiago, providing a user-friendly platform for fine-tuning and deploying open-source models. This AI-focused computing infrastructure offers a one-click approach, optimizing and simplifying the entire process. Its integrated platform includes GPU infrastructure configuration, cost-effective API endpoints, and high-throughput models, making it an efficient choice for developers.

Exploring Monster API’s Capabilities

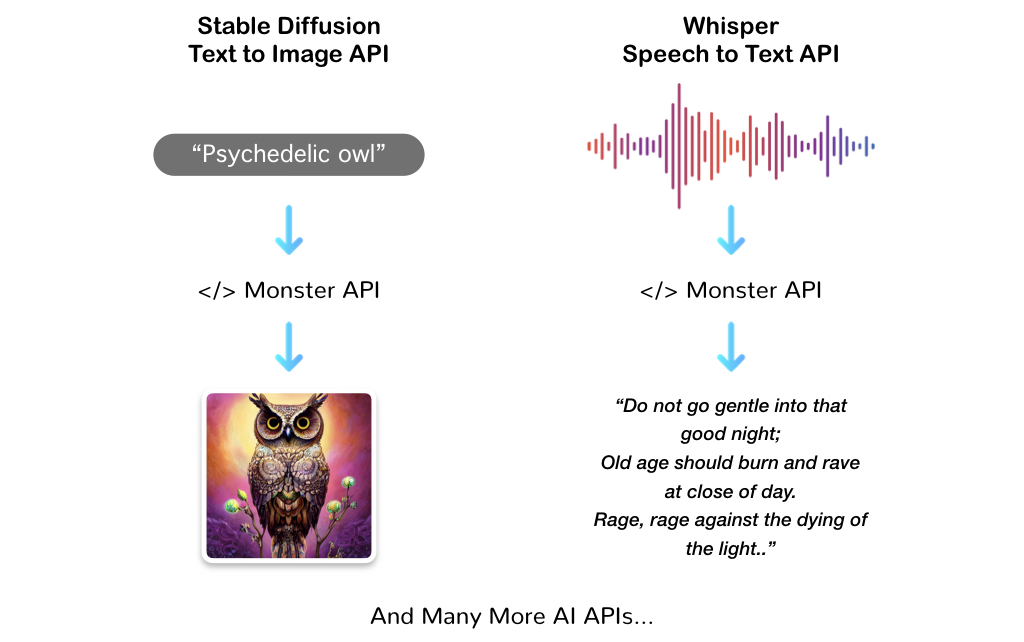

Monster API provides access to powerful generative AI models, supporting applications such as code generation, conversation completion, text-to-image generation, and speech-to-text transcription. Its REST API design allows quick integration into existing applications. Because it is a REST API, it can be called from cURL on the command line or from languages such as Python, Node.js, and PHP, so it fits into existing workflows.
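To make the REST integration concrete, here is a minimal Python sketch of how a client might prepare a JSON request for a hosted model. It is an illustration only: the base URL, the `/generate/{model}` path, the `prompt` field, the bearer-token header, and the model name are all assumptions made for this example and are not taken from Monster API's documentation, which should be consulted for the real endpoints and parameters.

```python
import json
import urllib.request

# Hypothetical values for illustration only; not Monster API's real endpoint or key format.
BASE_URL = "https://api.example.com/v1"
API_KEY = "YOUR_API_KEY"


def build_generation_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a hosted model."""
    # The payload shape ({"prompt": ...}) is an assumption for this sketch.
    payload = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url=f"{BASE_URL}/generate/{model}",
        data=payload,
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Only builds and inspects the request; call send(req) against a real endpoint.
    req = build_generation_request("example-text-model", "Write a haiku about GPUs.")
    print(req.get_method(), req.full_url)
```

Keeping request construction separate from sending means the same pattern carries over to cURL, Node.js, or PHP: each client only needs to set the URL, the headers, and a JSON body.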

Developer-Centric Innovation

Monster API stands out not only for its range of functionality but also for its developer-centric approach. The API prioritizes scalability, cost-efficiency, and fast integration. Developers can customize it to their needs, and prebuilt integrations reduce complexity. Monster API hosts state-of-the-art models such as DreamBooth, Whisper, Bark, Pix2Pix, and Stable Diffusion, making them accessible to developers at significant cost savings.

Our Say

In the rapidly evolving landscape of technology, Monster API emerges as a catalyst for innovation. It provides developers with access to powerful generative AI models and simplifies their integration. By doing so, it paves the way for creative breakthroughs without the burden of complex infrastructure management. As we look towards the future, Monster API stands as a testament to the fusion of versatility and ease of use in the realm of artificial intelligence.