Introduction

Machine learning is a field that empowers computers to learn from data and make intelligent decisions. It encompasses various concepts and techniques. One such concept is “stochastic,” which plays a crucial role in many machine learning algorithms and models. In this article, we will delve into the meaning of stochastic in machine learning, explore its applications, and understand its significance in optimizing learning processes.

Understanding Stochastic in Machine Learning

Stochastic, in the context of machine learning, describes algorithms and models that involve randomness or probability. This can mean modeling the data itself with probability distributions, or making random choices during learning, such as sampling which data points to use at each step. Treating uncertainty explicitly lets algorithms handle noisy or incomplete data effectively, and the randomness can help them adapt to changing environments and make robust predictions.

Stochastic Processes in Machine Learning

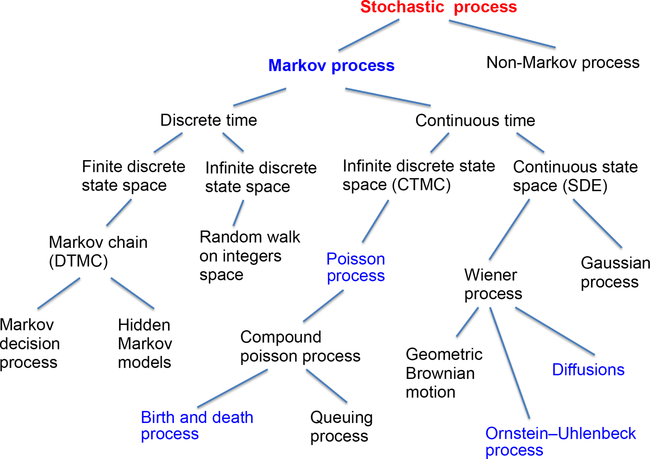

Stochastic processes are mathematical models that describe the evolution of random variables over time. They are widely used in machine learning to model and analyze various phenomena. These processes possess unique characteristics that make them suitable for capturing the inherent randomness in data.

Definition and Characteristics of Stochastic Processes

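A stochastic process is a collection of random variables indexed by time or another parameter. It provides a mathematical framework to describe the probabilistic behavior of a system evolving over time. Depending on the process, it may have properties such as stationarity (its statistical behavior does not change over time), independence (for example, independent increments), or the Markov property (the next state depends only on the current one). Which of these properties a process has determines the kinds of dependency patterns in data it can capture.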
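To make the definition concrete, here is a minimal Python sketch of one of the simplest stochastic processes, a simple random walk. The function name `random_walk` and its parameters are illustrative choices, not part of any library. The walk is indexed by the time step t, has independent increments, and is Markovian, but it is not stationary, since its spread grows with t.

```python
import random

def random_walk(n_steps, p_up=0.5, seed=None):
    """Simulate a simple random walk: X_0 = 0, X_{t+1} = X_t + 1 or X_t - 1.

    The next position depends only on the current one (Markov property),
    and each step is drawn independently of the previous steps.
    """
    rng = random.Random(seed)
    path = [0]
    for _ in range(n_steps):
        step = 1 if rng.random() < p_up else -1   # independent +/-1 increment
        path.append(path[-1] + step)
    return path

path = random_walk(10, seed=42)
print(path)
```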

Applications of Stochastic Processes in Machine Learning

Stochastic processes find applications in diverse areas of machine learning. They are helpful in time series analysis, where the goal is to predict future values based on past observations. They also play a crucial role in modeling and simulating complex systems, such as financial markets and biological processes, and in natural language processing, where, for example, n-gram language models treat text as a Markov chain over words.
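As a small time-series illustration, the sketch below assumes a first-order autoregressive model, AR(1), X_t = φX_{t-1} + ε_t with Gaussian noise ε_t. It simulates a series, estimates φ by least squares, and uses the fitted model for a one-step-ahead forecast. The helper names and the chosen values (φ = 0.8, 5,000 points) are hypothetical.

```python
import random

def simulate_ar1(phi, n, sigma=1.0, seed=None):
    """Simulate X_t = phi * X_{t-1} + eps_t with eps_t ~ N(0, sigma^2)."""
    rng = random.Random(seed)
    x = [0.0]
    for _ in range(n - 1):
        x.append(phi * x[-1] + rng.gauss(0.0, sigma))
    return x

def fit_ar1(x):
    """Least-squares estimate of phi for a zero-mean AR(1) (no intercept)."""
    num = sum(a * b for a, b in zip(x[1:], x[:-1]))
    den = sum(b * b for b in x[:-1])
    return num / den

series = simulate_ar1(phi=0.8, n=5000, seed=0)
phi_hat = fit_ar1(series)
forecast = phi_hat * series[-1]   # E[X_{t+1} | X_t] under the fitted model
print(round(phi_hat, 2), forecast)
```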

Stochastic Gradient Descent (SGD)

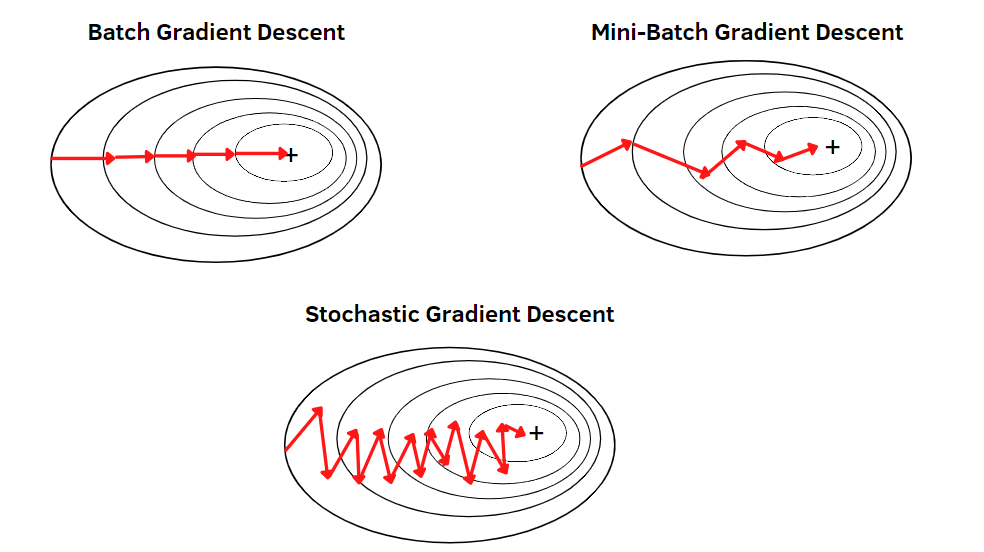

Stochastic Gradient Descent (SGD) is a popular optimization algorithm in machine learning. It is a variant of the traditional gradient descent algorithm that introduces randomness into the parameter updates. SGD is particularly useful when dealing with large datasets, as it allows for efficient and scalable optimization.

Overview of SGD

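In SGD, instead of computing the gradient over the entire dataset, the algorithm estimates it from a single randomly chosen example or, more commonly in practice, from a small random subset of the data called a mini-batch. Because the examples are drawn at random, each estimate is noisy but equals the full-data gradient on average. This random sampling is what makes the optimization stochastic, and it also lets SGD work through data as it arrives, which suits streaming or changing data. By iteratively updating the model parameters with these cheap gradient estimates, SGD moves toward a minimum of the loss: under standard assumptions and with a suitably decreasing learning rate, it converges to the optimum for convex problems, and it typically approaches a local minimum or other stationary point for non-convex problems such as training neural networks.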
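In symbols, one common way to write the mini-batch update is shown below. The notation is ours, not the article's: θ are the model parameters, η_t is the learning rate at step t, B_t is the randomly drawn mini-batch, and ℓ is the per-example loss.

$$
\theta_{t+1} = \theta_t - \eta_t \, \frac{1}{|B_t|} \sum_{i \in B_t} \nabla_\theta \, \ell(\theta_t;\, x_i, y_i)
$$

Full-batch gradient descent is the special case $B_t = \{1, \dots, N\}$, and single-example SGD is $|B_t| = 1$. The classic Robbins-Monro step-size conditions, $\sum_t \eta_t = \infty$ and $\sum_t \eta_t^2 < \infty$, are one standard way to make "a suitably decreasing learning rate" precise.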

Advantages and Disadvantages of SGD

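SGD offers several advantages over traditional (full-batch) gradient descent. Each update is much cheaper because it touches only a small part of the data, so SGD usually reaches a good solution faster in wall-clock time, needs less memory, and scales well to large datasets. However, its stochastic nature also has drawbacks. The noisy gradient estimates make the parameters fluctuate around a minimum rather than settle exactly on it, which can leave the final solution slightly suboptimal, and the learning rate (often together with a decay schedule) needs careful tuning to ensure convergence.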

Implementing SGD in Machine Learning Algorithms

SGD can be implemented in various machine learning algorithms, such as linear regression, logistic regression, and neural networks. In each case, the algorithm updates the model parameters based on the gradients computed from the mini-batches. This stochastic optimization technique enables the models to learn from massive datasets efficiently.
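The following is a minimal sketch, assuming a one-feature linear regression model y ≈ w·x + b trained on mean squared error with synthetic data. It shows the loop described above: shuffle the data each epoch, split it into mini-batches, and update the parameters from each mini-batch gradient. The function name and hyperparameters (learning rate, batch size, epochs) are illustrative, not tuned recommendations.

```python
import random

def sgd_linear_regression(xs, ys, lr=0.05, batch_size=8, epochs=200, seed=0):
    """Fit y ~ w*x + b by mini-batch SGD on mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    idx = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(idx)                          # new random order each epoch
        for start in range(0, len(idx), batch_size):
            batch = idx[start:start + batch_size]
            # Gradient of (1/|B|) * sum over the batch of (w*x + b - y)^2
            gw = gb = 0.0
            for i in batch:
                err = w * xs[i] + b - ys[i]
                gw += 2 * err * xs[i]
                gb += 2 * err
            w -= lr * gw / len(batch)
            b -= lr * gb / len(batch)
    return w, b

# Synthetic data: y = 3x + 2 plus Gaussian noise (illustrative only)
data_rng = random.Random(1)
xs = [data_rng.uniform(-1, 1) for _ in range(200)]
ys = [3 * x + 2 + data_rng.gauss(0, 0.1) for x in xs]
w, b = sgd_linear_regression(xs, ys)
print(round(w, 2), round(b, 2))
```

Because the learning rate is constant here, the parameters keep jittering slightly around the least-squares solution instead of settling exactly on it.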

Stochastic Models in Machine Learning

Stochastic models are probabilistic models that capture the uncertainty in data and make predictions based on probability distributions. They are widely used in machine learning to model complex systems and generate realistic samples.

Types of Stochastic Models in Machine Learning

Three widely used stochastic models in machine learning are Hidden Markov Models, Gaussian Mixture Models, and Bayesian Networks. These models incorporate randomness and uncertainty, allowing for more accurate representation and prediction of real-world phenomena.

Let’s now explore the applications of these models.

- Hidden Markov Models (HMMs)
  - Application: Speech recognition
  - Use: Modeling the probabilistic nature of speech patterns

- Gaussian Mixture Models (GMMs)
  - Application: Image and video processing
  - Use: Modeling the statistical properties of pixels

- Bayesian Networks
  - Application: Medical diagnosis
  - Use: Capturing dependencies between symptoms and diseases
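For the HMM case, a core computation is the probability of an observation sequence, which the forward algorithm obtains by summing over all hidden-state paths with dynamic programming. The sketch below uses a hypothetical two-state weather model with made-up probabilities, a toy stand-in rather than a speech recognizer; the function and variable names are illustrative.

```python
def forward(obs, start_p, trans_p, emit_p):
    """Return P(obs) under an HMM using the forward algorithm."""
    states = list(start_p)
    # alpha[s] = P(o_1, ..., o_t, hidden state at time t = s)
    alpha = {s: start_p[s] * emit_p[s][obs[0]] for s in states}
    for o in obs[1:]:
        alpha = {
            s: emit_p[s][o] * sum(alpha[r] * trans_p[r][s] for r in states)
            for s in states
        }
    return sum(alpha.values())

# Hypothetical two-state model (all numbers made up for illustration)
start_p = {"Rainy": 0.6, "Sunny": 0.4}
trans_p = {"Rainy": {"Rainy": 0.7, "Sunny": 0.3},
           "Sunny": {"Rainy": 0.4, "Sunny": 0.6}}
emit_p = {"Rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
          "Sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1}}

likelihood = forward(["walk", "shop", "clean"], start_p, trans_p, emit_p)
print(likelihood)   # ≈ 0.033612
```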

Stochastic Sampling Techniques

Stochastic sampling techniques are used to generate samples from complex probability distributions. These techniques play a vital role in tasks such as data generation, inference, and optimization.

Importance Sampling

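Importance sampling is a technique for estimating properties of a target distribution by sampling from a different, easier-to-sample proposal distribution and reweighting each sample by the ratio of the target density to the proposal density. This allows efficient estimation of expectations and probabilities, even when the target distribution is difficult to sample directly, provided the proposal covers the regions where the target has significant probability mass.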
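A minimal sketch, assuming the target is a standard normal distribution and the quantity of interest is the rare-event probability P(X > 3). Sampling the target directly would rarely produce values above 3, so the code draws from a proposal N(3, 1) and weights each sample by p(x)/q(x). The function names are hypothetical; `math.erfc` is used only to compute the exact answer for comparison.

```python
import math
import random

def normal_pdf(x, mu=0.0, sigma=1.0):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def tail_prob_is(threshold, n=100_000, seed=0):
    """Estimate P(X > threshold) for X ~ N(0, 1) by importance sampling.

    The proposal q = N(threshold, 1) puts about half its samples in the rare
    region; each sample is reweighted by p(x) / q(x) so the estimate is unbiased.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        x = rng.gauss(threshold, 1.0)                        # sample from q
        if x > threshold:
            total += normal_pdf(x) / normal_pdf(x, mu=threshold)  # weight p/q
    return total / n

estimate = tail_prob_is(3.0)
exact = 0.5 * math.erfc(3.0 / math.sqrt(2))   # exact tail probability
print(estimate, exact)
```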

Markov Chain Monte Carlo (MCMC)

MCMC is a class of algorithms for sampling from complex probability distributions. It constructs a Markov chain whose stationary distribution is the desired distribution, so after an initial burn-in period the states the chain visits can be used as (correlated) samples from it. MCMC methods, such as the Metropolis-Hastings algorithm and Gibbs sampling, are widely used in Bayesian inference and optimization.
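The Metropolis-Hastings idea can be sketched in a few lines, assuming a one-dimensional target whose log-density is known up to a constant and a symmetric Gaussian random-walk proposal (which makes the Hastings correction cancel). The function name, step size, and burn-in length are illustrative choices. The example target is an unnormalized N(3, 1) density, and the chain deliberately starts far away at 0 so that the burn-in matters.

```python
import math
import random

def metropolis_hastings(log_target, x0, n_samples, step=1.0, burn_in=1000, seed=0):
    """Random-walk Metropolis-Hastings sampler for a 1-D target.

    log_target only needs to be correct up to an additive constant,
    i.e. the target density may be unnormalized.
    """
    rng = random.Random(seed)
    x, logp = x0, log_target(x0)
    samples = []
    for i in range(burn_in + n_samples):
        proposal = x + rng.gauss(0.0, step)      # symmetric proposal, so q cancels
        logp_new = log_target(proposal)
        # Accept with probability min(1, p(proposal) / p(x))
        if rng.random() < math.exp(min(0.0, logp_new - logp)):
            x, logp = proposal, logp_new
        if i >= burn_in:                         # discard the burn-in states
            samples.append(x)
    return samples

# Unnormalized N(3, 1) target; the chain starts far away at 0
samples = metropolis_hastings(lambda x: -0.5 * (x - 3.0) ** 2, x0=0.0, n_samples=50_000)
mean = sum(samples) / len(samples)
var = sum((s - mean) ** 2 for s in samples) / len(samples)
print(round(mean, 2), round(var, 2))
```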

Stochastic Optimization Algorithms

Stochastic optimization algorithms use randomness to search for good solutions, which helps them explore large or irregular solution spaces, escape local optima, and cope with problems that involve uncertainty. Many of them mimic natural processes, such as annealing, evolution, and swarm behavior, to explore the solution space effectively.

Simulated Annealing

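Simulated annealing is an optimization algorithm inspired by the annealing process in metallurgy. At each step it proposes a random change to the current solution; improvements are always accepted, while worse solutions are accepted with a probability that shrinks as a “temperature” parameter falls. It starts at a high temperature, allowing broad random exploration of the solution space, and gradually lowers the temperature so that the search settles into a good solution. With a slow enough cooling schedule it can reach the global optimum, but in practice it usually returns a near-optimal solution. Simulated annealing is particularly useful for solving combinatorial optimization problems.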
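The acceptance rule is the heart of the method, so here is a minimal sketch. For brevity it minimizes a one-dimensional continuous test function (a 1-D Rastrigin function, with global minimum 0 at x = 0 and many local minima) rather than a combinatorial problem; the same loop applies to combinatorial problems once the Gaussian step is replaced by a discrete move such as swapping two items. The geometric cooling schedule, step size, and function names are assumptions.

```python
import math
import random

def rastrigin_1d(x):
    """Multimodal test function: global minimum f(0) = 0, local minima near integers."""
    return x * x - 10 * math.cos(2 * math.pi * x) + 10

def simulated_annealing(f, x0, t_start=10.0, t_end=1e-3, n_iter=20_000, step=0.5, seed=0):
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best_x, best_f = x, fx
    cooling = (t_end / t_start) ** (1.0 / n_iter)   # geometric temperature schedule
    t = t_start
    for _ in range(n_iter):
        cand = x + rng.gauss(0.0, step)             # random neighbouring solution
        fc = f(cand)
        delta = fc - fx
        # Always accept improvements; accept worse moves with probability exp(-delta / t)
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            x, fx = cand, fc
            if fx < best_f:                         # remember the best solution seen
                best_x, best_f = x, fx
        t *= cooling
    return best_x, best_f

best_x, best_f = simulated_annealing(rastrigin_1d, x0=4.0)
print(best_x, best_f)
```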

Genetic Algorithms

Genetic algorithms are optimization algorithms based on the process of natural selection and genetics. They maintain a population of candidate solutions and iteratively evolve them through selection, crossover, and mutation operations. Genetic algorithms are effective in solving complex optimization problems with large solution spaces.
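A minimal generational genetic algorithm can be sketched under these assumptions: candidates are fixed-length bit strings, selection is by 3-way tournament, crossover is one-point, and mutation flips each bit with probability 1/n. The toy objective is OneMax (maximize the number of 1 bits), whose optimum is the all-ones string. All names and settings are illustrative.

```python
import random

def genetic_algorithm(fitness, n_bits, pop_size=50, generations=100,
                      crossover_rate=0.9, mutation_rate=None, seed=0):
    """Maximize `fitness` over bit strings with a simple generational GA."""
    rng = random.Random(seed)
    if mutation_rate is None:
        mutation_rate = 1.0 / n_bits
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    best = max(pop, key=fitness)

    def tournament(k=3):
        # Selection: the fittest of k randomly chosen individuals
        return max(rng.sample(pop, k), key=fitness)

    for _ in range(generations):
        children = []
        while len(children) < pop_size:
            p1, p2 = tournament(), tournament()
            if rng.random() < crossover_rate:
                cut = rng.randrange(1, n_bits)           # one-point crossover
                c1, c2 = p1[:cut] + p2[cut:], p2[:cut] + p1[cut:]
            else:
                c1, c2 = p1[:], p2[:]
            for child in (c1, c2):
                # Mutation: flip each bit independently
                for i in range(n_bits):
                    if rng.random() < mutation_rate:
                        child[i] = 1 - child[i]
                children.append(child)
        pop = children[:pop_size]
        best = max(pop + [best], key=fitness)           # keep the best ever seen
    return best

best = genetic_algorithm(sum, n_bits=30)   # OneMax: fitness = number of 1 bits
print(sum(best), best)
```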

Particle Swarm Optimization

Particle swarm optimization is an optimization algorithm based on the collective behavior of bird flocks or fish schools. It maintains a population of particles that move through the solution space, guided by their own best position and the best position found by the swarm. Particle swarm optimization is best suited to continuous optimization problems.
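Here is a sketch of basic global-best PSO, assuming the common velocity update v ← w·v + c1·r1·(personal best − x) + c2·r2·(swarm best − x) with fresh random r1, r2 in [0, 1). The inertia and acceleration values (w = 0.7, c1 = c2 = 1.5), the sphere test function, and the function names are illustrative choices; for simplicity the code does not clamp particles to the initial bounds.

```python
import random

def pso(f, dim, n_particles=20, iters=200, bounds=(-5.0, 5.0),
        w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize f using basic (global-best) particle swarm optimization."""
    rng = random.Random(seed)
    lo, hi = bounds
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    pbest = [x[:] for x in xs]                      # each particle's best position
    pbest_f = [f(x) for x in xs]
    g = min(range(n_particles), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g][:], pbest_f[g]        # best position found by the swarm

    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vs[i][d] = (w * vs[i][d]
                            + c1 * r1 * (pbest[i][d] - xs[i][d])   # pull toward own best
                            + c2 * r2 * (gbest[d] - xs[i][d]))     # pull toward swarm best
                xs[i][d] += vs[i][d]
            fx = f(xs[i])
            if fx < pbest_f[i]:
                pbest[i], pbest_f[i] = xs[i][:], fx
                if fx < gbest_f:
                    gbest, gbest_f = xs[i][:], fx
    return gbest, gbest_f

def sphere(x):
    return sum(v * v for v in x)                    # minimum 0 at the origin

best_pos, best_val = pso(sphere, dim=2)
print(best_pos, best_val)
```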

Ant Colony Optimization

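Ant colony optimization is an optimization algorithm inspired by the foraging behavior of ants. It models the problem as a graph on which simulated ants build solutions step by step. Ants deposit pheromone on the edges they use, with shorter paths receiving more, while pheromone slowly evaporates; later ants prefer edges with stronger pheromone, so the colony gradually concentrates on good paths. Ant colony optimization is particularly useful for solving combinatorial optimization problems, such as the traveling salesman problem.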
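A compact sketch of the original Ant System variant applied to the traveling salesman problem, assuming each ant picks its next city with probability proportional to pheromone^α · (1/distance)^β, pheromone evaporates at rate ρ each iteration, and each ant deposits q/L on the edges of its tour of length L. The parameter values, function names, and the eight-city test instance (points on a unit circle, whose optimal tour is the octagon perimeter) are illustrative.

```python
import math
import random

def tour_length(tour, dist):
    return sum(dist[tour[i]][tour[(i + 1) % len(tour)]] for i in range(len(tour)))

def ant_colony_tsp(coords, n_ants=10, n_iter=50, alpha=1.0, beta=2.0,
                   rho=0.5, q=1.0, seed=0):
    """Basic Ant System for the symmetric traveling salesman problem."""
    rng = random.Random(seed)
    n = len(coords)
    dist = [[math.dist(a, b) for b in coords] for a in coords]
    tau = [[1.0] * n for _ in range(n)]             # pheromone on each edge
    best_tour, best_len = None, float("inf")

    for _ in range(n_iter):
        tours = []
        for _ in range(n_ants):
            tour = [rng.randrange(n)]
            unvisited = set(range(n)) - {tour[0]}
            while unvisited:
                i = tour[-1]
                cand = list(unvisited)
                # Edge attractiveness: pheromone^alpha * (1 / distance)^beta
                weights = [tau[i][j] ** alpha * (1.0 / dist[i][j]) ** beta for j in cand]
                j = rng.choices(cand, weights=weights)[0]
                tour.append(j)
                unvisited.remove(j)
            tours.append((tour, tour_length(tour, dist)))
        # Evaporation on every edge
        for i in range(n):
            for j in range(n):
                tau[i][j] *= (1.0 - rho)
        # Each ant deposits q / length on the edges of its tour
        for tour, length in tours:
            if length < best_len:
                best_tour, best_len = tour, length
            for k in range(n):
                a, b = tour[k], tour[(k + 1) % n]
                tau[a][b] += q / length
                tau[b][a] += q / length
    return best_tour, best_len

# Eight cities on a unit circle, listed in scrambled order; the optimal tour
# is the octagon perimeter, 8 * 2 * sin(pi / 8).
order = [0, 3, 6, 1, 4, 7, 2, 5]
cities = [(math.cos(2 * math.pi * k / 8), math.sin(2 * math.pi * k / 8)) for k in order]
tour, length = ant_colony_tsp(cities)
print(tour, round(length, 4))
```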

Stochasticity vs Determinism in Machine Learning

The choice between stochastic and deterministic approaches in machine learning depends on the problem at hand and the available data. Both approaches have their pros and cons, and their suitability varies across different scenarios.

Pros and Cons of Stochastic Approaches

Stochastic approaches, with their inherent randomness, allow for better adaptation to changing environments and noisy data. They can handle large-scale datasets efficiently and provide robust predictions. However, stochastic approaches may suffer from convergence issues and require careful tuning of hyperparameters.

Deterministic Approaches in Machine Learning

Deterministic approaches, on the other hand, produce the same result every time they are run on the same data, which makes them more stable and predictable. They are suitable for problems with clean, low-noise data and well-defined underlying patterns. Deterministic approaches, such as full-batch gradient descent, are useful in scenarios where reproducibility is crucial.
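The reproducibility contrast can be seen in a small sketch, assuming a one-parameter model y ≈ w·x fit by least squares on four made-up data points. Full-batch gradient descent uses every example at every step, so repeated runs give identical results; single-example SGD depends on which examples are drawn, although fixing the random seed makes a stochastic run repeatable as well. Function names and data are hypothetical.

```python
import random

def gradient(w, xs, ys):
    """Gradient of mean squared error for the model y ~ w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def full_batch_gd(xs, ys, lr=0.1, steps=100):
    w = 0.0
    for _ in range(steps):
        w -= lr * gradient(w, xs, ys)          # uses all data: no randomness
    return w

def sgd(xs, ys, lr=0.1, steps=100, seed=None):
    rng = random.Random(seed)
    w = 0.0
    for _ in range(steps):
        i = rng.randrange(len(xs))             # one randomly chosen example per step
        w -= lr * gradient(w, [xs[i]], [ys[i]])
    return w

xs = [0.5, 1.0, 1.5, 2.0]
ys = [1.1, 1.9, 3.2, 3.9]
print(full_batch_gd(xs, ys) == full_batch_gd(xs, ys))   # True: deterministic
print(sgd(xs, ys, seed=1) == sgd(xs, ys, seed=1))       # True: same seed, same run
print(sgd(xs, ys, seed=1), sgd(xs, ys, seed=2))         # different seeds usually differ
```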

Conclusion

Stochasticity plays a vital role in machine learning, enabling algorithms to handle uncertainty, adapt to changing environments, and make robust predictions. Stochastic processes, stochastic gradient descent, stochastic models, and stochastic optimization algorithms are essential components of the machine learning toolbox. By understanding and leveraging the power of stochasticity, we can unlock the full potential of machine learning in solving complex real-world problems.