This article was published as a part of the Data Science Blogathon.

Introduction

How many rows of sample data are required (in other words, how large should the training dataset be) to build a machine learning model that can predict fraudulent transactions in a credit card fraud detection dataset containing around 284,807 rows? You will be surprised to know that some 200 rows are better than 200,000 rows!! If you are not convinced, read on!

The concept of imbalanced datasets, and the techniques that can be applied to correct the imbalance so that a machine learning algorithm can predict without bias, is a major research area in data science (2). In this article, we shall revisit some of the fundamental concepts of imbalanced datasets, establish what can be termed an imbalanced dataset, and consider when we really need to apply imbalance techniques.

In this article, let us consider the adult income dataset, a public dataset available in the UCI Machine Learning Repository (1). It is widely quoted in the machine learning literature and is often used to introduce supervised machine learning algorithms for binary classification. The dataset contains 32,561 rows with 14 attributes of census data pertaining to adult income. The prediction task is to determine whether a person earns more than 50K a year. The rows labelled ‘>50K’ form the minority class, constituting 24% of the data.

Most of the literature on this publicly available dataset describes it as imbalanced. In fact, wherever I read about this dataset, it starts with the statement that it is an example of an imbalanced dataset. It is also one of the datasets treated as imbalanced in the research paper that introduced the SMOTE technique to machine learning (3).

Now, the question is: what is really meant by an imbalanced dataset? Is the Adult Income dataset really imbalanced to a degree that warrants applying imbalance techniques to correct it?

What is an Imbalanced Dataset?

We shall first look at some basic concepts of imbalanced data and its characteristics. Assume a dataset that contains 95% majority class and 5% minority class. If a classification algorithm treats the minority-class rows as noise and simply predicts the majority class for every row, it still achieves an accuracy of 95%. Yet the algorithm has failed completely to identify the minority class, which is normally the very objective of the problem being modelled. This is what can happen when we try to predict with a skewed dataset.

A model that always predicts the majority class achieves an accuracy equal to the proportion of the majority class. Hence, for classification problems, we should never rely on the default (plain) accuracy as a measure of the effectiveness of a model. A more suitable measure is balanced accuracy, the average of the recall obtained on each class, which is available in the scikit-learn library. Alternatives are measures like precision, recall, F1, and area under the ROC curve. Each of these has different interpretations and implications, and the problem at hand should be considered when choosing the relevant metric.
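To make this concrete, the short NumPy sketch below uses hypothetical labels (95 majority rows and 5 minority rows, as in the example above) and a "model" that always predicts the majority class. Balanced accuracy is computed by hand as the unweighted mean of the per-class recalls, which is what scikit-learn's `balanced_accuracy_score` reports for a binary problem.

```python
import numpy as np

# Hypothetical labels: 95 majority rows (class 0) and 5 minority rows (class 1)
y_true = np.array([0] * 95 + [1] * 5)

# A trivial "model" that ignores the minority class and always predicts class 0
y_pred = np.zeros_like(y_true)

# Plain accuracy: fraction of all rows predicted correctly
accuracy = np.mean(y_true == y_pred)

# Per-class recall: fraction of each class's rows predicted correctly
recalls = [np.mean(y_pred[y_true == c] == c) for c in (0, 1)]

# Balanced accuracy: unweighted mean of the per-class recalls
balanced_accuracy = np.mean(recalls)

print(f"accuracy={accuracy:.2f}, minority recall={recalls[1]:.2f}, "
      f"balanced accuracy={balanced_accuracy:.2f}")
# accuracy=0.95, minority recall=0.00, balanced accuracy=0.50
```

The 95% accuracy hides a minority recall of zero; balanced accuracy exposes it by falling to 50%, the score of a coin toss.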

In real-world problems, we will almost never get a dataset in which the minority class is as large as, or anywhere near, the majority class. In fact, in a truly imbalanced dataset, one that requires techniques to correct the imbalance, the minority class will be very small, somewhere around 1 to 5% of the data.

Let us now delve into the nature of the imbalanced datasets.

Characteristics of the Problem of Imbalanced Datasets

We shall briefly look into some important characteristics of the problem of imbalanced datasets.

1. Imbalance is the very nature of the problem at hand – it has nothing to do with lack of data

The first basic thing to understand is that imbalance is inherent in most classification and prediction problems. There is usually a large gap between the percentages of the majority and minority classes, because most real-world classification problems revolve around a minority class, constituting a very low percentage of the data, that needs to be predicted correctly.

Consider the example of fraud detection. Among thousands of transactions, there could be just one or two transactions that would be fraudulent, thereby leading to a very low and negligible percentage of rows that represent fraudulent transactions. Hence, the dataset is expected to contain a very low percentage of fraudulent transactions i.e. minority class.

2. Information sufficiency

In the example of fraud (or anomaly) detection, the rows of the majority class represent, through the relationships between the independent variables, the various ways of doing transactions (or types of transactions) that are normal. Given a set of features and their possible values, we can theoretically determine the various ways of doing normal transactions. Such a set would constitute the rows of the majority class that carry unique information about normal transactions.

Any further rows in the majority class would only add to its size without adding unique information that can be used to separate the two classes. Hence, under-sampling of the majority class works well ONLY when the rows removed carry such duplicate and superfluous information. If we cannot establish that and under-sample anyway, we may end up losing information that could be important for the model.
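A simple first check for superfluous majority rows is to count exact duplicates of the feature values within the majority class. The pandas sketch below uses a tiny hypothetical table (the columns `amount_band`, `channel` and `label` are made up for illustration); on real data, near-duplicates matter as well, so an exact-match check is only a first approximation.

```python
import pandas as pd

# Hypothetical transactions: label 0 = normal (majority), 1 = fraudulent (minority)
tx = pd.DataFrame({
    "amount_band": ["low", "low", "low", "mid", "mid", "high", "high"],
    "channel":     ["web", "web", "web", "pos", "pos", "web",  "atm"],
    "label":       [0,     0,     0,     0,     0,     1,      1],
})

features = ["amount_band", "channel"]
majority = tx[tx["label"] == 0]

# A majority row whose feature values repeat an earlier majority row adds no new information
dup_mask = majority.duplicated(subset=features)
print(f"{dup_mask.sum()} of {len(majority)} majority rows are exact duplicates")
# 3 of 5 majority rows are exact duplicates

# Keep only the unique majority rows, plus every minority row
deduped = pd.concat([majority[~dup_mask], tx[tx["label"] == 1]])
print(deduped["label"].value_counts().to_dict())
```

Dropping only such rows shrinks the majority class without discarding any distinct pattern of normal behaviour.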

Similarly, for the minority class, oversampling by duplicating minority rows adds no new information to the model. Whenever we over-sample or under-sample, the rows added or removed should reflect the real nature of the problem being modelled, for example new ways of committing fraudulent transactions.
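The point about duplication can be checked directly. The sketch below re-implements random over-sampling with replacement in NumPy (the idea behind imbalanced-learn's RandomOverSampler, written out here so the example is self-contained) on a hypothetical minority class of 5 rows: the sample grows tenfold, but the number of distinct rows cannot grow.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical minority class: 5 distinct rows with 3 features each
minority = rng.normal(size=(5, 3))

# Random over-sampling: draw row indices with replacement until we have 50 rows
idx = rng.choice(len(minority), size=50, replace=True)
oversampled = minority[idx]

n_unique_before = len(np.unique(minority, axis=0))
n_unique_after = len(np.unique(oversampled, axis=0))

# Ten times as many rows, but every one of them is a copy of an existing row
print(len(oversampled), n_unique_before, n_unique_after)
```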

Suppose that, based on domain knowledge, we determine that at least 5% of all transactions have historically been fraudulent, but in our dataset only 1% of the rows represent fraudulent samples of the minority class. Then our effort should be to acquire additional real-world data so that the data becomes complete and reflects the real nature of the problem. Hence, in this case too, we cannot resort to oversampling.
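The comparison with domain knowledge can be made a little more formal with a one-sample proportion test. The sketch below uses hypothetical figures matching the example (a domain prior of 5% fraud, and a dataset of 10,000 transactions of which 100, i.e. 1%, are fraudulent) and the normal approximation to the binomial distribution; the function name minority_share_z is made up for illustration.

```python
import math

def minority_share_z(n_minority: int, n_total: int, expected_share: float) -> float:
    """z-statistic comparing the observed minority share with a domain prior
    (normal approximation to the binomial; reasonable when n_total is large)."""
    observed = n_minority / n_total
    std_err = math.sqrt(expected_share * (1 - expected_share) / n_total)
    return (observed - expected_share) / std_err

# Hypothetical figures: 100 fraudulent rows out of 10,000 vs. a 5% domain prior
z = minority_share_z(100, 10_000, 0.05)
print(f"z = {z:.1f}")  # prints z = -18.4, far below -1.96
```

A z-value this far below -1.96 says the 1% share is very unlikely to be a sampling accident, which supports collecting more real fraud data rather than duplicating the rows we already have.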

If, however, we also determine that the existing 1% of fraudulent transactions (out of the typical 5%) already represents all possible ways of committing fraud, then we can oversample in order to make our classification algorithm work better.

If methods like SMOTE create synthetic samples that ignore the actual real-life patterns, they may not succeed in producing a model that mimics the real-world problem.
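For reference, SMOTE (3) creates each synthetic sample by picking a minority row, picking one of its k nearest minority-class neighbours, and placing a new point at a random position on the line segment between them. The NumPy sketch below shows only that core interpolation step; the function name smote_like and its parameters are illustrative, and in practice imbalanced-learn's SMOTE class is the usual choice. Because every new point lies between existing minority rows, it is only as realistic as the local geometry of the data allows, which is exactly the concern raised above.

```python
import numpy as np

def smote_like(X_min: np.ndarray, n_new: int, k: int = 5, seed: int = 0) -> np.ndarray:
    """Generate n_new synthetic rows by SMOTE-style interpolation between
    a randomly chosen minority row and one of its k nearest minority neighbours."""
    rng = np.random.default_rng(seed)
    # Pairwise Euclidean distances among minority rows
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)               # a row is not its own neighbour
    k = min(k, len(X_min) - 1)
    neighbours = np.argsort(dists, axis=1)[:, :k]

    base = rng.integers(0, len(X_min), size=n_new)           # rows to start from
    pick = neighbours[base, rng.integers(0, k, size=n_new)]  # one neighbour for each
    gap = rng.random((n_new, 1))                              # position in [0, 1)
    return X_min[base] + gap * (X_min[pick] - X_min[base])

# Usage: 4 hypothetical minority points on a unit square, 6 synthetic points
X_min = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
synthetic = smote_like(X_min, n_new=6, k=2)
print(synthetic.round(2))
```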

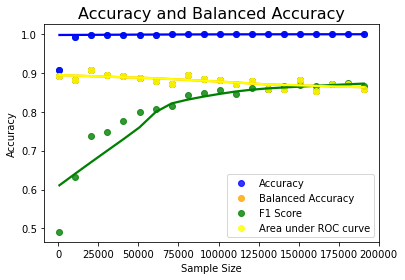

3. The effectiveness of classification algorithms decreases as imbalance increases, but increases as the sample size decreases

The nature of machine learning algorithms is such that their effectiveness in making correct predictions decreases as the imbalance increases. However, their effectiveness increases as the sample size decreases, provided the sample is reduced by removing majority-class rows, because this reduces the degree of imbalance. This is why under-sampling the majority class will most likely perform better than the base model on an imbalanced dataset.

Photo by Stephen Dawson on Unsplash
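The effect of removing majority-class rows on the degree of imbalance can be checked with simple arithmetic. The NumPy sketch below uses stand-in labels with the class counts of the Adult data (24,720 rows with income ≤50K and 7,841 with >50K, not the real rows) and performs random under-sampling by keeping every minority row and an equal number of randomly chosen majority rows.

```python
import numpy as np

rng = np.random.default_rng(42)

# Stand-in labels with the Adult class counts: 0 = '<=50K', 1 = '>50K'
y = np.array([0] * 24_720 + [1] * 7_841)

def imbalance_ratio(labels: np.ndarray) -> float:
    """Size of the largest class divided by the size of the smallest class."""
    counts = np.bincount(labels)
    return counts.max() / counts.min()

# Random under-sampling: keep every minority row and an equal number of majority rows
maj_idx = np.flatnonzero(y == 0)
min_idx = np.flatnonzero(y == 1)
keep = np.concatenate([rng.choice(maj_idx, size=len(min_idx), replace=False), min_idx])
y_under = y[keep]

print(f"before: {len(y)} rows, ratio {imbalance_ratio(y):.2f}")
print(f"after:  {len(y_under)} rows, ratio {imbalance_ratio(y_under):.2f}")
# before: 32561 rows, ratio 3.15
# after:  15682 rows, ratio 1.00
```

Shrinking the data by stratified sampling, as in Case 2 below, would leave the ratio at 3.15; only removing majority rows lowers it.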

As I wrote in one of my previous articles, most machine learning algorithms that build on traditional statistical methods work just as well with a very small training dataset. A bigger training dataset does not necessarily increase the effectiveness or accuracy of the algorithm: the difference in accuracy between training on 80% and on 20% of the data is typically no more than about ±2%.

In the case of imbalanced datasets, as the training dataset shrinks through the removal of majority-class rows, the balanced accuracy tends to improve.

What can rightly be termed an Imbalanced Dataset?

Based on the arguments made in the foregoing sections, there are two ways we can categorize a dataset as an imbalanced dataset:

1. From the perspective of Machine Learning Algorithms – In this perspective, a dataset can be termed imbalanced when there is a significant deviation between the percentages of majority and minority classes.

Recognizing imbalance from this perspective is important because, as explained in the previous section, the default accuracy measure will be misleading. Most machine learning algorithms are designed to minimize overall error, which the majority class dominates, so they tend to be biased against the minority class.

However, the important point made in this article is that imbalance from this perspective is a limitation of the machine learning algorithm, not a problem with the dataset itself. When the problem lies with the algorithm, applying imbalance techniques may not improve accuracy and may even lead to erroneous results.

In fact, when a dataset is imbalanced only from the perspective of a machine learning algorithm, no imbalance technique may be required to get good predictions! I shall explain this paradox with an illustration in a subsequent section.

2. From the perspective of the real-world problem being modeled – In this perspective, a dataset can be termed imbalanced when the percentage of minority classes does not reflect the real-world problem.

This perspective focuses on the nature of the real-world problem: the proportion of majority to minority classes in the dataset should reflect it. For example, in the adult dataset, the percentage of adults with income above 50K is 24%. Does this figure of 24% reflect the real-world problem? Do people earning above 50K really make up about 24% of the adult population?

If the answer is yes, the dataset is not imbalanced to the extent that it warrants imbalance-handling techniques.

If the answer is no, then the dataset is imbalanced. If the dataset is imbalanced from this perspective, our first step should be to obtain more real-world data so that the data represents the real-world problem.

If we cannot get additional data on minority classes, then we may resort to implementing techniques that can mitigate the imbalance like SMOTE, NearMiss, Tomek links, etc.
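Of these techniques, Tomek links is the easiest to state precisely: two rows from opposite classes form a Tomek link when each is the other's nearest neighbour, and the under-sampling step removes the majority-class member of each link. The NumPy sketch below finds such pairs on a tiny hypothetical one-feature dataset; imbalanced-learn provides a full implementation as the TomekLinks class.

```python
import numpy as np

def tomek_links(X: np.ndarray, y: np.ndarray) -> list[tuple[int, int]]:
    """Return index pairs (i, j), i < j, of opposite-class rows that are
    mutual nearest neighbours (Euclidean distance)."""
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)
    nearest = dists.argmin(axis=1)
    return [(i, int(j)) for i, j in enumerate(nearest)
            if i < j and nearest[j] == i and y[i] != y[j]]

# Hypothetical data: one minority row (class 1) sits right next to a majority row
X = np.array([[0.0], [1.0], [1.2], [3.0], [5.0]])
y = np.array([0, 0, 1, 0, 1])
links = tomek_links(X, y)
print(links)  # [(1, 2)]

# Under-sampling with Tomek links drops the majority-class member of each pair
drop = [i if y[i] == 0 else j for i, j in links]
X_clean, y_clean = np.delete(X, drop, axis=0), np.delete(y, drop)
```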

Illustration – Adult Income Dataset

The adult income dataset available in the UCI Machine Learning Repository can be considered to reflect the real-world skewness, with those earning above 50K (the minority class) making up 24% of the rows. Hence, the dataset is imbalanced only from the perspective of machine learning algorithms. The large number of majority-class rows may provide only superfluous and redundant information, as discussed in previous sections. We can show that a higher balanced accuracy is obtained by reducing the size of the training dataset, without any dedicated technique for imbalance. (I shall use the Random Forest Classifier for model building; the results are similar with logistic regression and decision tree classifiers. K-fold cross-validation can also be used to verify the mean accuracy.)
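A minimal sketch of that cross-validation check, using scikit-learn's RepeatedStratifiedKFold and cross_val_score with the built-in "balanced_accuracy" scorer. To keep it self-contained, it runs on synthetic data from make_classification with roughly the Adult class split (76% / 24%) rather than on census-income.csv; with the real data, the encoded X2i and y2i from the code below could be passed in instead.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score

# Synthetic stand-in for the Adult data: about 76% class 0 and 24% class 1
X, y = make_classification(n_samples=1000, n_features=10, weights=[0.76, 0.24],
                           random_state=0)

# 5 stratified folds repeated 3 times; each fold keeps the overall class ratio
cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=3, random_state=0)
scores = cross_val_score(RandomForestClassifier(n_estimators=100, random_state=0),
                         X, y, scoring="balanced_accuracy", cv=cv)

print(f"mean balanced accuracy {scores.mean():.3f} "
      f"(std {scores.std():.3f}) over {len(scores)} folds")
```

Stratification matters here: with a 24% minority class, an unstratified fold could contain noticeably fewer minority rows than the others and distort the per-fold scores.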

In this section, I shall demonstrate the following points, made in the previous sections, using the adult income dataset:

1. The effectiveness of machine learning algorithms decreases as the imbalance increases and, for imbalanced datasets, increases as the sample size decreases

2. When the dataset is not imbalanced from the perspective of the real-world problem, no imbalance technique may be required to make good predictions

3. The majority class in the training dataset need not be larger than the minority class to make good predictions. Hence, the base model trained on the entire dataset will perform worse than a model trained on a reduced dataset in which the majority class is trimmed but the minority class is not.

1. Effectiveness of the machine learning algorithms with the decrease in the degree of imbalance and sample size

That machine learning algorithms are biased towards the majority class is a known fact. It can also be shown that their effectiveness increases as the degree of imbalance decreases. This means that when the dataset is imbalanced from the perspective of a machine learning algorithm (i.e. there is a significant gap between the two classes), the full dataset tends to give a model that is not very effective. As we shrink the majority class in the training dataset towards the size of the minority class, the balanced accuracy improves (though the highest accuracy need not coincide with a standard under-sampling method such as imbalanced-learn's RandomUnderSampler).

Case 1 – Basic Model – Balanced Accuracy of 77%

Python Code:

We shall now build the basic model with the entire dataset.

```python
import pandas as pd
from collections import Counter
from sklearn.compose import ColumnTransformer, make_column_selector
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import (accuracy_score, balanced_accuracy_score,
                             confusion_matrix, f1_score, roc_auc_score)
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import LabelEncoder, OneHotEncoder
from imblearn.datasets import make_imbalance
from imblearn.over_sampling import RandomOverSampler

# Load the Adult census data; the last column holds the income label
df = pd.read_csv('census-income.csv')
X2i = df.iloc[:, :-1]
y2i = df.iloc[:, -1]

# One-hot encode the categorical (object) columns and pass numeric columns through.
# scikit-learn 1.2 renamed OneHotEncoder's `sparse` parameter to `sparse_output`.
ct = ColumnTransformer(
    transformers=[('encoder', OneHotEncoder(sparse_output=True),
                   make_column_selector(dtype_include=object))],
    remainder='passthrough')
X2i = ct.fit_transform(X2i)

# LabelEncoder sorts the labels, so ' <=50K' -> 0 and ' >50K' -> 1
le = LabelEncoder()
y2i = le.fit_transform(y2i)

# Stratified 80/20 split keeps the 76/24 class ratio in both parts
X2i_train, X2i_test, y2i_train, y2i_test = train_test_split(
    X2i, y2i, test_size=0.2, random_state=0, stratify=y2i)

# No random_state is set for the forest, so scores vary slightly between runs
clf2 = RandomForestClassifier()
clf2.fit(X2i_train, y2i_train)
pred_y2 = clf2.predict(X2i_test)
probs = clf2.predict_proba(X2i_test)

print("Confusion Matrix for Test Data\n", confusion_matrix(y2i_test, pred_y2))
print("Accuracy Score for Test Data\n", accuracy_score(y2i_test, pred_y2))
print("Balanced accuracy Score for Test Data\n", balanced_accuracy_score(y2i_test, pred_y2))
print("F1 Accuracy Score for Test Data\n", f1_score(y2i_test, pred_y2, average='macro'))
print("The Area under ROC Curve is ", roc_auc_score(y2i_test, probs[:, 1]))
```

We get the “balanced_accuracy” score of 77% on the test data without doing anything for imbalance.

```text
Confusion Matrix for Test Data
[[4600  345]
 [ 611  957]]
Accuracy Score for Test Data 0.8532166436358053
Balanced accuracy Score for Test Data 0.7702820953962981
F1 Accuracy Score for Test Data 0.7863837034251735
The Area under ROC Curve is 0.9011929696044243
```
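As a check on these figures, balanced accuracy for a binary problem is the mean of the two per-class recalls, so it can be recomputed by hand from the confusion matrix above (rows are true classes and columns are predicted classes, as in scikit-learn's confusion_matrix).

```python
# Confusion matrix from Case 1: rows = true class, columns = predicted class
# class 0 = '<=50K', class 1 = '>50K'
cm = [[4600, 345],
      [611, 957]]

recall_0 = cm[0][0] / (cm[0][0] + cm[0][1])   # share of <=50K rows predicted correctly
recall_1 = cm[1][1] / (cm[1][0] + cm[1][1])   # share of >50K rows predicted correctly
balanced_accuracy = (recall_0 + recall_1) / 2
accuracy = (cm[0][0] + cm[1][1]) / sum(map(sum, cm))

print(f"recall <=50K: {recall_0:.3f}, recall >50K: {recall_1:.3f}")
print(f"balanced accuracy: {balanced_accuracy:.4f}, accuracy: {accuracy:.4f}")
# recall <=50K: 0.930, recall >50K: 0.610
# balanced accuracy: 0.7703, accuracy: 0.8532
```

The gap between the two recalls (about 93% against 61%) is what the plain accuracy of 85% hides.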

Case 2 – Size of the training dataset 20% – Balanced Accuracy: 76.96%

If we reduce the training dataset to 20% of the rows (a stratified split, so the class ratio is unchanged), the balanced accuracy is 76.96%, hardly different from the full model!

```python
# Reuse the encoded X2i and y2i from Case 1, but train on only 20% of the rows
X2i_train, X2i_test, y2i_train, y2i_test = train_test_split(
    X2i, y2i, test_size=0.8, random_state=0, stratify=y2i)

clf2.fit(X2i_train, y2i_train)
pred_y2 = clf2.predict(X2i_test)
probs = clf2.predict_proba(X2i_test)

print("Confusion Matrix for Test Data\n", confusion_matrix(y2i_test, pred_y2))
print("Accuracy Score for Test Data\n", accuracy_score(y2i_test, pred_y2))
print("Balanced accuracy Score for Test Data\n", balanced_accuracy_score(y2i_test, pred_y2))
print("F1 Accuracy Score for Test Data\n", f1_score(y2i_test, pred_y2, average='macro'))
print("The Area under ROC Curve is ", roc_auc_score(y2i_test, probs[:, 1]))
```

```text
Confusion Matrix for Test Data
[[18215  1561]
 [ 2396  3877]]
Accuracy Score for Test Data 0.8480939767361511
Balanced accuracy Score for Test Data 0.7695557653659775
F1 Accuracy Score for Test Data 0.7820677359661152
The Area under ROC Curve is 0.899116502081402
```

Case 3 – Model that ignores redundant information – training dataset size – 24%

Now we take 50% of the dataset as the training set and fit the model on a subset of it that keeps every minority-class row but only about as many majority-class rows, which is similar to under-sampling. The model is tested on the remaining 50% of the dataset. In effect, the model is fitted on about 7,840 rows, half of which (3,920) belong to the minority class.

```python
# Start again from the raw data for this experiment
df = pd.read_csv('census-income.csv')
X2i = df.iloc[:, :-1]
y2i = df.iloc[:, -1]

# One-hot encode the categorical columns; this time the output is a dense array
ct = ColumnTransformer(
    transformers=[('encoder', OneHotEncoder(sparse_output=False),
                   make_column_selector(dtype_include=object))],
    remainder='passthrough')
X2i = ct.fit_transform(X2i)

# Use half of the data for training and hold out the other half for testing
X2i_train, X2i_test, y2i_train, y2i_test = train_test_split(
    X2i, y2i, test_size=0.5, random_state=0, stratify=y2i)

# Randomly cut the majority class (' <=50K') in the training half down to 3920 rows,
# about the size of the minority class; all minority rows are kept.
# The label strings assume the UCI format, which includes a leading space.
X2, y2 = make_imbalance(X2i_train, y2i_train, sampling_strategy={' <=50K': 3920})

# Fit one encoder so that training and test labels share the same 0/1 mapping
le2 = LabelEncoder().fit(y2i)
y2 = le2.transform(y2)
y2i_test = le2.transform(y2i_test)

clf2 = RandomForestClassifier()
clf2.fit(X2, y2)
pred_y2 = clf2.predict(X2i_test)
probs = clf2.predict_proba(X2i_test)

print("Confusion Matrix for Test Data\n", confusion_matrix(y2i_test, pred_y2))
print("Accuracy Score for Test Data\n", accuracy_score(y2i_test, pred_y2))
print("Balanced accuracy Score for Test Data\n", balanced_accuracy_score(y2i_test, pred_y2))
print("The F1 Accuracy Score for Test Data\n", f1_score(y2i_test, pred_y2, average='macro'))
print("The Area under ROC Curve is ", roc_auc_score(y2i_test, probs[:, 1]))
```

Even though the model is fitted on less data and tested on a 50% hold-out, its balanced accuracy is 81.56%, well above the 77% obtained with the full dataset (plain accuracy falls from 85.3% to 80.8%, because more majority-class rows are now misclassified). This shows that as the imbalance decreases along with the sample size, the balanced accuracy increases.

```text
Confusion Matrix for Test Data
[[9904 2456]
 [ 667 3254]]
Accuracy Score for Test Data 0.8081813156440022
Balanced accuracy Score for Test Data 0.8155924162401607
The F1 Accuracy Score for Test Data 0.7697717123779277
The Area under ROC Curve is 0.8972876321921048
```

Now, how much can we reduce the size of the sample or training dataset so that the accuracy can be further increased? In other words, how many records of those earning more than 50K income are required to make valid predictions?

As discussed in the above sections, we can reduce the size of the dataset to the point where the information it contains is still sufficient to make predictions.
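One way to explore this empirically is to keep every minority row and vary how many majority rows are kept, scoring each model on the same hold-out set. The sketch below does this on synthetic data from make_classification (a stand-in for census-income.csv, so its numbers will not match the cases above); the majority-to-minority ratios tried are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import balanced_accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in with roughly the Adult class split (76% / 24%)
X, y = make_classification(n_samples=4000, n_features=10, weights=[0.76, 0.24],
                           random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.5, random_state=0, stratify=y)

rng = np.random.default_rng(0)
maj_idx = np.flatnonzero(y_train == 0)
min_idx = np.flatnonzero(y_train == 1)

results = {}
# Keep all minority rows; keep `ratio` times as many majority rows (capped at what exists)
for ratio in (1.0, 1.5, 2.0, 3.0):
    n_maj = min(len(maj_idx), int(ratio * len(min_idx)))
    keep = np.concatenate([rng.choice(maj_idx, size=n_maj, replace=False), min_idx])
    clf = RandomForestClassifier(random_state=0).fit(X_train[keep], y_train[keep])
    results[ratio] = balanced_accuracy_score(y_test, clf.predict(X_test))

for ratio, score in results.items():
    print(f"majority:minority = {ratio:.1f}:1 -> balanced accuracy {score:.3f}")
```

Plotting the scores against the ratio, on the real data, would show where adding majority rows stops helping.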

Case 4 – Size of minority class 0.2%

Generally, in a truly imbalanced dataset, the percentage of minority classes will be negligible. For example, the percentage of fraudulent transactions in the publicly available European credit card dataset is 0.17%.

Suppose we get an adult income dataset in which just 0.2% of the rows have income above 50K.

Since we concluded earlier that, in general, the percentage of adults with income above 50K is 24%, a dataset with just 0.2% minority class should be considered imbalanced even from the perspective of the real nature of the problem, as per our earlier categorization. In such cases, imbalance techniques like oversampling will not lead to the right results. This is shown below.

We now reduce the majority class in the training data to 3,920 rows and the minority class to just 65 rows, under 2% of the total. This greatly reduces the accuracy, but, more importantly, re-sampling does not restore it. We shall oversample this dataset by duplicating the minority rows.

```python
df = pd.read_csv('census-income.csv')
X2i = df.iloc[:, :-1]
y2i = df.iloc[:, -1]

ct = ColumnTransformer(
    transformers=[('encoder', OneHotEncoder(sparse_output=False),
                   make_column_selector(dtype_include=object))],
    remainder='passthrough')
X2i = ct.fit_transform(X2i)

X2i_train, X2i_test, y2i_train, y2i_test = train_test_split(
    X2i, y2i, test_size=0.5, random_state=0, stratify=y2i)

# Keep 3920 majority rows (as in Case 3) but only 65 minority rows,
# matching the description above; labels assume the UCI format with a leading space
X2, y2 = make_imbalance(X2i_train, y2i_train,
                        sampling_strategy={' <=50K': 3920, ' >50K': 65})

# Over-sample the 65 minority rows by random duplication until both classes are equal
ros = RandomOverSampler()
X2, y2 = ros.fit_resample(X2, y2)
print('Resample dataset shape', Counter(y2))

# Fit one encoder so that training and test labels share the same 0/1 mapping
le2 = LabelEncoder().fit(y2i)
y2 = le2.transform(y2)
y2i_test = le2.transform(y2i_test)

clf2 = RandomForestClassifier()
clf2.fit(X2, y2)
pred_y2 = clf2.predict(X2i_test)
probs = clf2.predict_proba(X2i_test)

print("Confusion Matrix for Test Data\n", confusion_matrix(y2i_test, pred_y2))
print("Accuracy Score for Test Data\n", accuracy_score(y2i_test, pred_y2))
print("Balanced accuracy Score for Test Data\n", balanced_accuracy_score(y2i_test, pred_y2))
print("The F1 Accuracy Score for Test Data\n", f1_score(y2i_test, pred_y2, average='macro'))
print("The Area under ROC Curve is ", roc_auc_score(y2i_test, probs[:, 1]))
```

The output shows that the balanced accuracy drops drastically, to around 55%, even though we have used the same size of training data to fit the model. The dataset used to build the model here is a truly imbalanced dataset, as it reflects a lack of the real-world data that would help the algorithm arrive at accurate predictions. As shown above, techniques like oversampling and under-sampling may not lead to higher accuracy in this case.

In cases where the imbalance is due to a lack of real-world data, and balance cannot be achieved by acquiring more data, we may resort to techniques that synthetically create new data (e.g. SMOTE) or methods that select which existing rows to keep (e.g. NearMiss).

Thus, all the arguments made in this article have been illustrated using a random forest classifier and the adult income dataset.

To reiterate, the adult income dataset has realistic proportions of the two classes and is not imbalanced from the perspective of the real-world nature of the problem. Hence, better balanced accuracy can be achieved simply by reducing the majority class in the training dataset to about the size of the minority class. In other words, dedicated imbalance techniques are not required when the dataset is imbalanced only from the perspective of a machine learning algorithm.

The above statements can also be shown to hold for other imbalanced datasets. For example, in the publicly available European credit card fraud detection dataset, out of 284,807 rows, we can show, similarly to the above illustration, that the majority class can be cut down to about 492 rows (the count of fraudulent transactions) while getting the best accuracy, making the rest of the roughly 284,000 rows redundant!!

Conclusion

This article explains some basic concepts underlying classification with imbalanced data. When the majority and minority classes differ greatly in size, we should distinguish whether the gap reflects the real nature of the problem at hand or a lack of sufficient data, and keep this distinction clearly in mind before applying techniques to correct the imbalance. There could also be cases where additional predictive accuracy can only be obtained by adding more features; in such cases, techniques to handle imbalance could lead to erroneous results. Hence, it is necessary to study the problem thoroughly in its domain context and compare the dataset with the real-world problem before making decisions about handling the imbalance.

The effectiveness of classification algorithms increases as the sample size decreases, provided the reduction comes from the majority class and therefore reduces the imbalance. Hence, when the imbalance is a matter of the machine learning algorithm's bias, the problem can be resolved by reducing the sample size, and thereby the degree of imbalance, as long as the dataset still contains sufficient information.

Key Takeaways

1. Imbalance is the very nature of many real-world problems. Hence, not every imbalanced dataset requires techniques to handle the imbalance.

2. Default accuracy measures should not be used for models built on imbalanced datasets.

3. The dataset, whether considered imbalanced or not, should reflect the nature of the real-world problem and of the production data on which the model will be deployed.

4. Imbalance can also be due to a lack of sufficient data in one of the classes. In such cases, the dataset should be augmented by obtaining more real-world data.

5. Imbalanced datasets can thus be categorized as imbalanced from two perspectives, viz. from the perspective of the algorithm and from the perspective of whether the dataset represents the real-world problem.

6. Over-sampling and under-sampling can lead to erroneous predictions when the dataset does not reflect the real-world problem in terms of information sufficiency.

7. Over-sampling and under-sampling (and other such methods like SMOTE) should be used only when they do not reduce the information content but augment it, thereby increasing the capability of the machine learning model.

8. A certain amount of domain knowledge is required to make decisions on handling imbalanced data.

9. The widely used UCI adult income dataset, though only about 24% of its rows belong to one of the classes, cannot be termed imbalanced, as it reflects the nature of the real-world problem.

10. As the degree of imbalance increases, the effectiveness of the machine learning model decreases. Reducing the sample size by removing majority-class rows reduces the imbalance. Hence, by reducing the sample size without losing information content, we can increase the balanced accuracy of a model trained on an imbalanced dataset.

11. Imbalance techniques that create synthetic data or modify the algorithm to amplify the information content of minority classes are highly useful when the dataset is imbalanced from the perspective of whether it represents the real-world problem.

References:

1. Adult Dataset, https://archive.ics.uci.edu/ml/datasets/adult

2. Herrera, Francisco, et al. Learning from Imbalanced Data Sets. Germany, Springer International Publishing, 2018.

3. Chawla, Nitesh V., et al. “SMOTE: synthetic minority over-sampling technique.” Journal of artificial intelligence research 16 (2002): 321-357.

4. https://www.geeksforgeeks.org/blog/2017/03/imbalanced-data-classification

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.