This article was published as a part of the Data Science Blogathon.

Overview

Keras is a Python library that provides a high-level API for building and training neural networks on top of deep learning frameworks. It includes ready-made Python components (layers, optimizers, losses, metrics, callbacks) for a wide range of deep learning applications.


What is Keras?

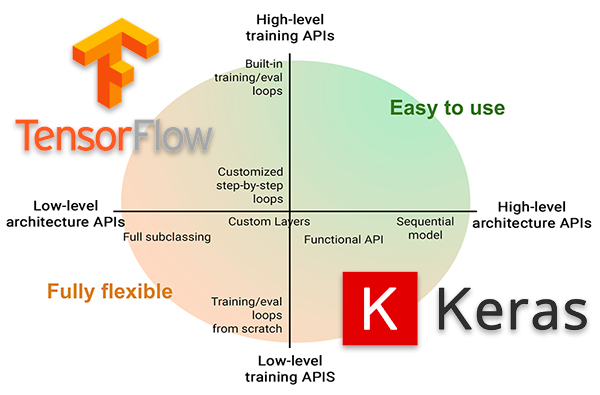

TensorFlow was previously the most widely used deep learning library, but it was tricky for newcomers to work with: even a simple one-layer network required a substantial amount of code. With Keras, the entire process of defining a neural network's structure, training it, and tracking its progress becomes far more straightforward.

Keras is a high-level API that has historically run on top of the TensorFlow, Theano, and CNTK backends. It provides a clean, user-friendly API for quickly building and experimenting with neural networks, and it can run on both CPUs and GPUs. Keras comes with 10 different API modules for modelling and training neural networks. Let's look at a few of them one by one.

Keras Models

The Models API lets you build complex neural networks by adding and removing layers. A model can be sequential, meaning the layers are stacked one on top of another with a single input and a single output. A model can also be functional, meaning its layers can be wired together into an arbitrary graph, which makes it fully customizable.

The API also includes training methods: `compile` configures the model with an optimizer and a loss function, `fit` trains it, and `evaluate` and `predict` assess the model and make predictions on input data. It also provides methods for training, testing, and predicting on a single batch of data (`train_on_batch`, `test_on_batch`, and `predict_on_batch`). Finally, the Models API lets you save and load (serialize) your models.

Keras models are divided into two categories:

• Keras Sequential Model

• Keras Functional API

1. Keras Sequential API

It allows us to build models layer by layer. However, it does not allow us to create models with multiple inputs or outputs: it works well for a simple stack of layers with exactly one input tensor and one output tensor.

This approach breaks down when any layer in the stack has multiple inputs or outputs. It is also unsuitable when we need a non-linear topology, such as shared layers or branching connections.

```python
from keras.models import Sequential
from keras.layers import Dense

model = Sequential()
model.add(Dense(64, input_shape=(4,)))  # first layer: 4 input features -> 64 units
model.add(Dense(32))                    # second layer: 64 -> 32 units
```

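Keras gives you more than one way to define a model and add layers to it: you can call `add()` one layer at a time, as above, or pass a list of layers directly to the `Sequential` constructor.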

source: techvidvan
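To make the idea of a layer-by-layer stack concrete, here is a minimal pure-Python sketch (no Keras involved) of what the `Sequential` model above does: each layer consumes only the previous layer's output. The `Dense` class, its random initialisation, and the `sequential` helper are hypothetical stand-ins written for illustration, not the Keras classes.

```python
import random


class Dense:
    """Hypothetical stand-in for a fully connected layer: y = W x + b."""

    def __init__(self, in_features, out_features, seed=0):
        rng = random.Random(seed)  # fixed seed so results are reproducible
        self.weights = [[rng.uniform(-0.1, 0.1) for _ in range(in_features)]
                        for _ in range(out_features)]
        self.bias = [0.0] * out_features

    def __call__(self, x):
        # One output per row of W: dot(row, x) + bias
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(self.weights, self.bias)]

    def count_params(self):
        # in_features * out_features weights plus one bias per output unit
        return len(self.weights) * len(self.weights[0]) + len(self.bias)


def sequential(layers):
    """Chain layers so each one receives only the previous layer's output."""
    def forward(x):
        for layer in layers:
            x = layer(x)
        return x
    return forward


# Mirrors Dense(64, input_shape=(4,)) followed by Dense(32)
layers = [Dense(4, 64, seed=1), Dense(64, 32, seed=2)]
model = sequential(layers)

output = model([1.0, 2.0, 3.0, 4.0])
print(len(output))                                    # 32
print(sum(layer.count_params() for layer in layers))  # 2400
```

The parameter total (4 × 64 + 64 = 320 for the first layer, 64 × 32 + 32 = 2,080 for the second) is the same arithmetic Keras uses when it counts a `Dense` layer's weights and biases.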

2. Keras Functional API

The Functional API can be used to create models with multiple inputs and outputs. It also makes it possible to share layers between different parts of a model. In other words, Keras' Functional API lets us build graphs of layers.

Because a functional model is a data structure (a graph of layers), it is simple to save it as a single file from which the exact same model can be rebuilt, without needing access to the original source code. It is also straightforward to plot the graph and access its intermediate nodes.

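```python
from keras.models import Model
from keras.layers import Input, Dense

inputs = Input(shape=(32,))    # a symbolic input with 32 features
outputs = Dense(32)(inputs)    # calling a layer on a tensor adds a node to the graph
model = Model(inputs=inputs, outputs=outputs)
```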
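Because a functional model is a graph of layers, its structure can be written down as plain data, saved, and rebuilt. The stdlib sketch below illustrates that idea for a hypothetical two-input model; the node format is a simplified one invented for this example, not Keras' own serialization format (in Keras, `model.to_json()` produces the real architecture description and `keras.models.model_from_json` rebuilds it).

```python
import json

# Hypothetical, simplified graph description: each node lists its layer type,
# its number of units, and the names of the nodes that feed into it.
graph = {
    "nodes": [
        {"name": "input_a", "type": "Input", "units": 32, "inbound": []},
        {"name": "input_b", "type": "Input", "units": 16, "inbound": []},
        {"name": "dense_a", "type": "Dense", "units": 8, "inbound": ["input_a"]},
        {"name": "dense_b", "type": "Dense", "units": 8, "inbound": ["input_b"]},
        {"name": "merged", "type": "Concatenate", "inbound": ["dense_a", "dense_b"]},
        {"name": "output", "type": "Dense", "units": 1, "inbound": ["merged"]},
    ],
    "inputs": ["input_a", "input_b"],
    "outputs": ["output"],
}


def build(graph):
    """Walk the nodes (listed in topological order), work out each node's
    output width, and count the trainable parameters of the whole graph."""
    width, params = {}, 0
    for node in graph["nodes"]:
        incoming = [width[name] for name in node["inbound"]]
        if node["type"] == "Input":
            width[node["name"]] = node["units"]
        elif node["type"] == "Concatenate":
            width[node["name"]] = sum(incoming)  # widths add up side by side
        elif node["type"] == "Dense":
            (fan_in,) = incoming  # a Dense node has exactly one inbound node
            width[node["name"]] = node["units"]
            params += fan_in * node["units"] + node["units"]
        else:
            raise ValueError(f"unknown layer type: {node['type']}")
    return width, params


# Saving and rebuilding: the structure survives a round trip through JSON text.
saved = json.dumps(graph)
rebuilt = json.loads(saved)

widths, total_params = build(rebuilt)
print(widths["merged"], total_params)  # 16 417
```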

Explaining Neural Network’s Various Layers

Every neural network is built from layers. The Layers API offers a complete set of tools for building neural network architectures, and its base `Layer` class lets you create custom layers with your own weights and initializers.

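```python
from keras import layers, models

# A fully connected network for 32x32 RGB images and 10 classes
ann = models.Sequential([
    layers.Flatten(input_shape=(32, 32, 3)),  # 32*32*3 = 3072 input values
    layers.Dense(3000, activation='relu'),
    layers.Dense(1000, activation='relu'),
    layers.Dense(10, activation='softmax')    # one probability per class
])
```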
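A quick way to see what this network costs is to count its parameters by hand: `Flatten` turns each 32×32×3 image into 3,072 values and has no weights, while every `Dense` layer holds (inputs × units) weights plus one bias per unit. The helper below is a hypothetical stdlib sketch of that arithmetic; its total should agree with what `ann.summary()` reports for this architecture.

```python
def dense_params(fan_in, units):
    """Weights (fan_in x units) plus one bias per unit, as in a Dense layer."""
    return fan_in * units + units


flat = 32 * 32 * 3            # Flatten: 32x32x3 image -> 3072 values
sizes = [flat, 3000, 1000, 10]

per_layer = [dense_params(a, b) for a, b in zip(sizes, sizes[1:])]
print(per_layer)       # [9219000, 3001000, 10010]
print(sum(per_layer))  # 12230010
```

Roughly three quarters of the parameters (9,219,000 of 12,230,010) sit in the first `Dense` layer, because every one of the 3,072 flattened pixel values connects to each of its 3,000 units.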

The layer activations module provides many activation functions, such as ReLU, sigmoid, tanh, and softmax. The layer weight initializers module provides methods for various weight initialization schemes.
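The activation functions named above are simple formulas: ReLU, sigmoid, and tanh act element-wise, while softmax normalises a whole vector into probabilities. A stdlib sketch of the four, for illustration only; Keras ships its own implementations in `keras.activations`.

```python
import math


def relu(x):
    return max(0.0, x)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    return math.tanh(x)


def softmax(logits):
    # Subtracting the max keeps exp() from overflowing; the result is unchanged.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


print(relu(-2.0), relu(3.0))  # 0.0 3.0
print(sigmoid(0.0))           # 0.5
probs = softmax([2.0, 1.0, 0.1])
print(round(sum(probs), 6))   # 1.0
```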

```python
cnn = models.Sequential([
    layers.Conv2D(filters=32, kernel_size=(3, 3), activation='relu', input_shape=(32, 32, 3)),
    layers.MaxPooling2D((2, 2)),
    layers.Conv2D(filters=64, kernel_size=(3, 3), activation='relu'),
    layers.MaxPooling2D((2, 2)),
    layers.Flatten(),
    layers.Dense(64, activation='relu'),
    layers.Dense(10, activation='softmax')
])
```
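The shapes flowing through this CNN can also be worked out by hand. With the settings used here (stride 1 and `'valid'` padding for `Conv2D`; pooling with a 2×2 window and a stride equal to the window), a 3×3 convolution shrinks each spatial side by 2 and each pooling step roughly halves it, rounding down. A convolution holds kernel_height × kernel_width × input_channels × filters weights plus one bias per filter. The stdlib sketch below walks the layers under those assumptions; the helper names are hypothetical, and the results should agree with `cnn.summary()`.

```python
def conv2d(shape, filters, kernel=3):
    """'valid' convolution with stride 1: each spatial side shrinks by kernel - 1."""
    h, w, c = shape
    params = kernel * kernel * c * filters + filters  # weights + one bias per filter
    return (h - kernel + 1, w - kernel + 1, filters), params


def max_pool2d(shape, pool=2):
    """Pooling with stride equal to the pool size and 'valid' padding (no weights)."""
    h, w, c = shape
    return ((h - pool) // pool + 1, (w - pool) // pool + 1, c), 0


def flatten(shape):
    h, w, c = shape
    return (h * w * c,), 0


def dense(shape, units):
    (fan_in,) = shape
    return (units,), fan_in * units + units


steps = [
    lambda s: conv2d(s, 32),
    max_pool2d,
    lambda s: conv2d(s, 64),
    max_pool2d,
    flatten,
    lambda s: dense(s, 64),
    lambda s: dense(s, 10),
]

shape, total, shapes = (32, 32, 3), 0, []
for step in steps:
    shape, params = step(shape)
    shapes.append(shape)
    total += params

print(shapes)  # [(30, 30, 32), (15, 15, 32), (13, 13, 64), (6, 6, 64), (2304,), (64,), (10,)]
print(total)   # 167562
```

Compared with the fully connected network above, the convolutional version needs far fewer parameters, because each filter's small set of weights is reused at every position in the image.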

The Layers API also provides core layers such as `Dense`, `Activation`, and `Embedding`. Convolution layers can be created in a variety of ways, and the pooling layers cover max pooling, average pooling, global max pooling, and global average pooling, as well as other types of pooling.
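Max and average pooling are easy to state precisely: slide a window over the feature map and keep either the largest value or the mean of each window; the global variants reduce the whole map to a single value. A minimal stdlib sketch for a single-channel 2D input with a 2×2 window and stride 2 (the `MaxPooling2D((2, 2))` setting used above); the function names and the toy feature map are hypothetical.

```python
def pool2d(grid, size=2, op=max):
    """Non-overlapping pooling (stride == size, 'valid' padding) over a 2D list."""
    rows = (len(grid) - size) // size + 1
    cols = (len(grid[0]) - size) // size + 1
    out = []
    for i in range(rows):
        row = []
        for j in range(cols):
            window = [grid[i * size + di][j * size + dj]
                      for di in range(size) for dj in range(size)]
            row.append(op(window))  # reduce each window to one value
        out.append(row)
    return out


def mean(values):
    return sum(values) / len(values)


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 3, 2],
    [2, 6, 1, 1],
]
print(pool2d(feature_map))           # [[4, 5], [6, 3]]
print(pool2d(feature_map, op=mean))  # [[2.5, 2.0], [2.25, 1.75]]

# Global pooling: one value for the whole feature map
flat = [v for row in feature_map for v in row]
print(max(flat), mean(flat))         # 6 2.125
```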

Neural Network Callbacks

Callbacks are a way of tracking and controlling a model's training. With callbacks, you can run various actions before or after an epoch or batch.

You can also use callbacks to:

• Stop training when the loss has not decreased significantly for a specified number of epochs.

• Inspect a model's internal states and statistics while it is being trained.

A callback is an object that can perform actions at various stages of training (for example, at the start or end of an epoch, or before or after a single batch).

Callbacks can be used to:

• Write TensorBoard logs after each training batch to monitor your metrics (`TensorBoard`).

• Periodically save your model to disk (`ModelCheckpoint`).

• Stop training early (`EarlyStopping`).

• Get a view of a model's internal states and statistics during training.

source: medium.com
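Keras provides early stopping as a ready-made callback, `keras.callbacks.EarlyStopping`, with arguments such as `monitor`, `patience`, and `min_delta`, passed to `fit` through its `callbacks` list. The decision it makes at the end of each epoch can be sketched in plain Python; this is a simplified illustration of the patience logic on made-up validation losses, not the library's code.

```python
def early_stopping(val_losses, patience=2, min_delta=0.0):
    """Return (stopped_at_epoch, best_epoch), both 1-indexed.

    An epoch counts as an improvement only if its loss beats the best value
    seen so far by more than min_delta; training stops once `patience`
    consecutive epochs pass without an improvement.
    """
    best, best_epoch, wait = float("inf"), 0, 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best, best_epoch, wait = loss, epoch, 0  # improvement: reset the counter
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(val_losses), best_epoch  # ran out of epochs without stopping


history = [0.90, 0.72, 0.65, 0.66, 0.67, 0.60]
print(early_stopping(history, patience=2))  # (5, 3)
print(early_stopping(history, patience=3))  # (6, 6)
```

With `patience=2` the run stops at epoch 5, even though epoch 6 would have improved; a larger patience trades extra training time for a lower chance of stopping too soon. Keras' callback can additionally roll the model back to the best epoch's weights when `restore_best_weights=True`.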

Preprocessing of Datasets

Data preprocessing is the process of preparing raw data for machine learning and deep learning models.

When working on a machine learning project, we rarely come across data that is already clean and ready to use.

Real-world data often contains noise and missing values, and may be in a format that machine learning models cannot use effectively. Data preprocessing is an essential step for cleaning the data and making it suitable for machine learning and deep learning models, which improves the model's accuracy and efficiency.

Data usually arrives in a raw format, organised in directories, and must be preprocessed before it can be fed to the model for fitting. Keras' image data preprocessing utilities cover many of these tasks. For example, an image must be converted to a numeric array, which can be done with the `img_to_array` function, and if the images are stored in a directory with one subfolder per class, the `image_dataset_from_directory` function can build a dataset from them directly.

The data preprocessing API also has utilities for time series data and text data, such as `timeseries_dataset_from_array` and `text_dataset_from_directory`.
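The cleaning steps described above (handling missing values, rescaling raw values, and encoding labels) can be illustrated with a few stdlib functions. This is a hypothetical sketch on toy data: mean imputation is only one of several possible strategies, and in a Keras workflow the equivalent work is usually done with NumPy, with preprocessing layers such as `keras.layers.Rescaling`, or with `keras.utils.to_categorical` for one-hot labels.

```python
def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed ones."""
    observed = [v for v in values if v is not None]
    fill = sum(observed) / len(observed)
    return [fill if v is None else v for v in values]


def rescale(pixels, max_value=255.0):
    """Map 8-bit pixel intensities (0-255) into the range [0, 1]."""
    return [p / max_value for p in pixels]


def one_hot(label, num_classes):
    """Turn an integer class label into a one-hot vector."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


print(impute_mean([2.0, None, 4.0]))  # [2.0, 3.0, 4.0]
print(rescale([0, 255]))              # [0.0, 1.0]
print(one_hot(2, 4))                  # [0.0, 0.0, 1.0, 0.0]
```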

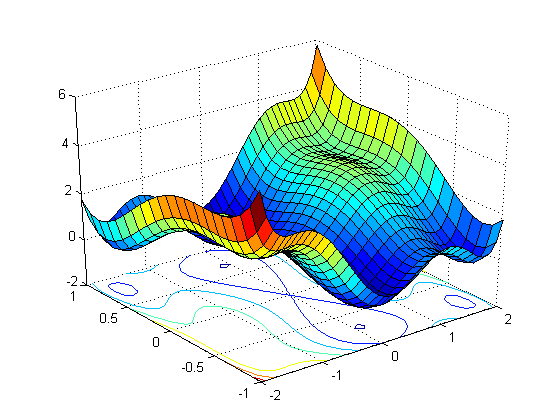

Deep Learning Optimizers

The optimizer is at the core of training any neural network. To find the weights that give good predictions, a neural network minimises a loss function. There are several types of optimizers, each with a somewhat different technique for finding the optimal weights.

Optimizers are algorithms that minimise an error (loss) function by adjusting a model's learnable parameters, such as its weights and biases. They determine how to change the weights, and with what learning rate, in order to reduce the loss efficiently.

In layman's terms, optimizers tinker with the weights to shape and mould your model into the most accurate form possible. The loss function serves as a road map for the optimizer, telling it whether it is moving in the right or wrong direction.

Types of Optimizers:

- Gradient Descent

- Stochastic Gradient Descent

- Mini-Batch Gradient Descent

- Momentum-Based Gradient Descent

- Nesterov Accelerated Gradient

- Adagrad

- RMSProp

```python
model.compile(
    loss='categorical_crossentropy',
    optimizer='adam',
    metrics=['accuracy'],
)
```
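The difference between update rules such as plain gradient descent and momentum is easiest to see on a toy problem. The sketch below minimises the one-parameter loss f(w) = (w - 3)², whose gradient is 2(w - 3), first with plain gradient descent and then with momentum. The learning rate, momentum value, and step count are arbitrary choices for illustration; in Keras you would instead choose an optimizer such as `keras.optimizers.SGD(learning_rate=0.01, momentum=0.9)` and pass it to `compile`.

```python
def grad(w):
    """Gradient of the toy loss f(w) = (w - 3) ** 2, which is minimised at w = 3."""
    return 2.0 * (w - 3.0)


def gradient_descent(w, lr=0.01, steps=100):
    for _ in range(steps):
        w -= lr * grad(w)  # step straight down the current gradient
    return w


def momentum_descent(w, lr=0.01, beta=0.9, steps=100):
    velocity = 0.0
    for _ in range(steps):
        # velocity is a decaying sum of past gradient steps
        velocity = beta * velocity - lr * grad(w)
        w += velocity
    return w


gd_error = abs(gradient_descent(0.0) - 3.0)
momentum_error = abs(momentum_descent(0.0) - 3.0)
print(f"plain GD error: {gd_error:.4f}")
print(f"momentum error: {momentum_error:.4f}")
```

With these settings the momentum run ends much closer to w = 3 after 100 steps, because the accumulated velocity keeps it moving in a consistent direction. With a much larger learning rate the advantage can disappear, so this is an illustration rather than a general ranking of optimizers.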

Losses in Neural Networks

A loss function is required when compiling a model. The optimizer, which is also passed as a parameter to `compile`, minimises this loss during training. Keras groups its built-in losses into three types: probabilistic losses, regression losses, and hinge losses.

The loss function is one of the key components of a neural network. The loss is simply the network's prediction error, and the loss function is the method used to compute it.

The gradients are calculated from the loss, and those gradients are then used to update the network's weights.

Keras and TensorFlow include a variety of built-in loss functions for different tasks.

In short, the loss measures how well your algorithm models the data. If your predictions are completely wrong, the loss function produces a high value; if they are good, it produces a low one. As you tweak parts of your algorithm to improve your model, the loss tells you whether you are making progress.
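Two of the most common losses can be written in a few lines. The sketch below computes categorical cross-entropy (a probabilistic loss, for one-hot labels) and mean squared error (a regression loss) in plain Python, showing that a confident wrong prediction is penalised far more than a good one. The clipping constant is an assumption in the spirit of the small epsilon Keras uses to avoid log(0); real models should use `keras.losses.CategoricalCrossentropy` and `keras.losses.MeanSquaredError`.

```python
import math

EPSILON = 1e-7  # assumed clipping constant that keeps log() away from zero


def categorical_crossentropy(y_true, y_pred):
    """-sum(y_true * log(y_pred)) for one one-hot label vector and one prediction."""
    return -sum(t * math.log(min(max(p, EPSILON), 1 - EPSILON))
                for t, p in zip(y_true, y_pred))


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


label = [0.0, 1.0, 0.0]  # the true class is index 1
good = categorical_crossentropy(label, [0.1, 0.8, 0.1])
bad = categorical_crossentropy(label, [0.7, 0.2, 0.1])
print(round(good, 4), round(bad, 4))               # 0.2231 1.6094

print(mean_squared_error([1.0, 2.0], [1.0, 4.0]))  # 2.0
```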

Evaluation Metrics

Every machine learning model uses metrics to assess its performance on test data. Metrics are similar to loss functions, except that their results are used to monitor and report performance rather than to train the model. Keras provides accuracy metrics such as Accuracy, Binary Accuracy, and Categorical Accuracy, as well as probabilistic metrics such as binary cross-entropy and categorical cross-entropy.

It also includes regression metrics such as Root Mean Squared Error, Mean Absolute Error, and Mean Squared Error.

The different kinds of evaluation metrics available are as follows:

- Classification Accuracy

- Confusion Matrix

- Area under Curve

- Logarithmic Loss

- Mean Squared Error

- F1 Score

- Mean Absolute Error
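Several of the metrics listed above can be computed directly from true and predicted labels. A minimal stdlib sketch for a binary problem, using made-up toy labels; Keras provides tested implementations in `keras.metrics`.

```python
def confusion_matrix(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels 0/1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    return tp, fp, fn, tn


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true, y_pred):
    # Assumes at least one positive label and one positive prediction.
    tp, fp, fn, _ = confusion_matrix(y_true, y_pred)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def mean_absolute_error(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
print(confusion_matrix(y_true, y_pred))             # (3, 1, 1, 3)
print(accuracy(y_true, y_pred))                     # 0.75
print(f1_score(y_true, y_pred))                     # 0.75
print(mean_absolute_error([2.0, 4.0], [3.0, 1.0]))  # 2.0
```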

Keras Applications

The Keras Applications module contains various prebuilt models together with weights that have already been trained. These pre-trained models are used in transfer learning. They vary in architecture, number of layers, number of trainable weights, and other parameters; examples include VGG16, Xception, ResNet50, and MobileNet.

Pre-Trained Deep Learning Models

A trained model has two parts: its architecture and its weights. Because the weights are large files, they are not bundled with Keras; when you pass `weights='imagenet'`, Keras downloads weights pre-trained on the ImageNet dataset the first time the model is instantiated and caches them locally.

Keras Applications provides deep learning models together with pre-trained weights. These models can be used for prediction, feature extraction, and fine-tuning.

Loading a pre-trained model

```python
import keras
import numpy as np
from keras.applications import vgg16, inception_v3, resnet50, mobilenet

# Load the VGG16 model
vgg_model = vgg16.VGG16(weights='imagenet')

# Load the InceptionV3 model
inception_model = inception_v3.InceptionV3(weights='imagenet')

# Load the ResNet50 model
resnet_model = resnet50.ResNet50(weights='imagenet')

# Load the MobileNet model
mobilenet_model = mobilenet.MobileNet(weights='imagenet')
```

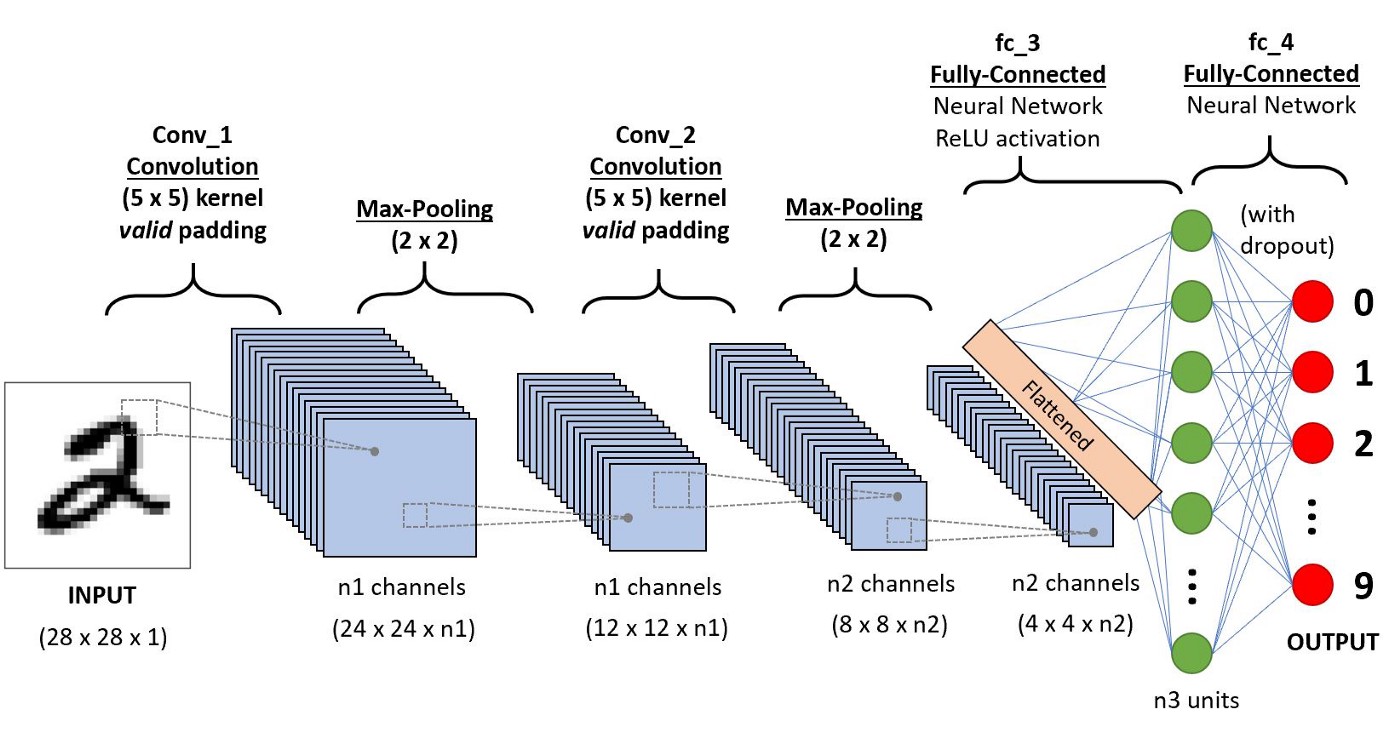

Using Keras to Train Neural Networks

Let us consider the CIFAR-10 dataset, which is included in Keras' datasets module. We'll build a basic sequential Convolutional Neural Network to classify its 32×32 colour images into 10 classes.

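```python
from keras.preprocessing.image import ImageDataGenerator

# Random augmentation applied on the fly to each training batch
datagen = ImageDataGenerator(
    rotation_range=10,       # rotate by up to 10 degrees
    zoom_range=0.1,          # zoom in or out by up to 10%
    width_shift_range=0.1,   # shift horizontally by up to 10% of the width
    height_shift_range=0.1,  # shift vertically by up to 10% of the height
)
```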

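```python
# Assumes `model` is a compiled CNN (such as the one defined above) and that
# x_train, y_train, x_test, y_test have already been loaded and preprocessed.
epochs = 10
batch_size = 32

# model.fit accepts generators directly; the older fit_generator is deprecated.
history = model.fit(
    datagen.flow(x_train, y_train, batch_size=batch_size),
    epochs=epochs,
    validation_data=(x_test, y_test),
    steps_per_epoch=x_train.shape[0] // batch_size,
)
```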

Output:

```
Epoch 1/10
1563/1563 [==============================] - 55s 33ms/step - loss: 1.6791 - accuracy: 0.3891
Epoch 2/10
1563/1563 [==============================] - 63s 40ms/step - loss: 1.1319 - accuracy: 0.6036
Epoch 3/10
1563/1563 [==============================] - 66s 42ms/step - loss: 0.9915 - accuracy: 0.6533
Epoch 4/10
1563/1563 [==============================] - 70s 45ms/step - loss: 0.8963 - accuracy: 0.6883
Epoch 5/10
1563/1563 [==============================] - 69s 44ms/step - loss: 0.8192 - accuracy: 0.7159
Epoch 6/10
1563/1563 [==============================] - 62s 39ms/step - loss: 0.7695 - accuracy: 0.7347
Epoch 7/10
1563/1563 [==============================] - 62s 39ms/step - loss: 0.7071 - accuracy: 0.7542
Epoch 8/10
1563/1563 [==============================] - 62s 40ms/step - loss: 0.6637 - accuracy: 0.7694
Epoch 9/10
1563/1563 [==============================] - 60s 38ms/step - loss: 0.6234 - accuracy: 0.7840
Epoch 10/10
1563/1563 [==============================] - 58s 37ms/step - loss: 0.5810 - accuracy: 0.7979
```

The Google Colab notebook for the above example is available on the author's GitHub.
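The number of steps in each training epoch follows from the dataset size and the batch size. As a worked example, assume 50,000 training images (the size of the CIFAR-10 training split) and `batch_size = 32`. Floor division, as used for `steps_per_epoch` in the training code above, counts only full batches, while one complete pass that also includes the final, smaller batch needs one more step. The `batches` generator is a hypothetical stdlib stand-in for how a data iterator such as `datagen.flow` slices the data, without the augmentation or shuffling.

```python
import math


def batches(num_samples, batch_size):
    """Yield (start, end) index ranges that cover the data once, in order."""
    for start in range(0, num_samples, batch_size):
        yield start, min(start + batch_size, num_samples)


num_samples, batch_size = 50_000, 32

full_batches = num_samples // batch_size           # steps_per_epoch in the code above
all_batches = math.ceil(num_samples / batch_size)  # one full pass, partial batch included
ranges = list(batches(num_samples, batch_size))

print(full_batches, all_batches, len(ranges))  # 1562 1563 1563
print(ranges[-1])                              # (49984, 50000): the last batch holds 16 images
```

Under that assumption, the 1563 steps per epoch shown in the log correspond to one full pass over the data including the final partial batch.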

Frequently Asked Questions

A. A Keras neural network is a type of deep learning model implemented using the Keras library, which is now integrated into TensorFlow. Keras simplifies the creation and training of neural networks. It provides a high-level API for building and configuring neural networks, allowing developers to define layers, connections, and training processes with ease, making it a popular choice for developing deep learning models.

A. Keras is an open-source high-level neural networks API written in Python. It acts as an interface to various deep learning frameworks, including TensorFlow and Theano. Keras simplifies the process of building, training, and evaluating deep learning models by providing a user-friendly and modular approach. It’s widely used for tasks like image classification, natural language processing, and more, making deep learning accessible to developers and researchers.

With this, I finish this blog.

Hello Everyone, Namaste

My name is Pranshu Sharma and I am a Data Science Enthusiast

Thank you so much for taking your precious time to read this blog. Feel free to point out any mistakes (I'm a learner after all), give feedback, or leave a comment.

Dhanyvaad!!


The media shown in this article are not owned by Analytics Vidhya and are used at the author's discretion.