Introduction

The practice of using analytics to measure movie’s success is not a new phenomenon. Most of these predictive models are based on structured data with input variables such as Cost of Production, Genre of the Movie, Actor, Director, Production House, Marketing expenditure, no of distribution platforms, etc.

However, with the advent of social media platforms, young demographics, digital media and the increasing adoption of platforms like Twitter, Facebook, etc to express views and opinions. Social Media has become a potent tool to measure Audience Sentiments about a movie.

This report is an attempt to understand one such platform, i.e., Twitter. The movie chosen is Rangoon, which is a 2017 Bollywood movie, directed by Vishal Bhardwaj and produced by Sajid Nadiadwala and Viacom 18 Motion Pictures. The lead actors are Saif Ali Khan, Shahid Kapoor and Kangana Ranaut. The film was released on 24 February 2017 in India on a weekend.

I will be using R, an open source statistical programming tool, to carry out the analysis.

Note: I will focus on the approach and the findings. The R Code to carry out the analysis can be found at the end of the article.

Table of Contents

- Introduction to Text Analytics

- Objective of the Analysis

- Data for the Analysis

- Conducting the analysis Approach 1 using the ‘tm’ package

- Conducting the analysis Approach 2 using the ‘suazyhet’ package

- Conclusion

- Making a Prediction Algorithm for Movie Success

1. Introduction to Text Analytics

Before moving ahead with the analysis, it’s only relevant to ask the question.

What is Text Analytics?.

In simple words, it is the process of converting unstructured text data into meaningful insights to measure customer opinion, product reviews, sentiment analysis, customer feedback, etc.

Text Analytics is completely different from the traditional approach, as the latter works primarily on structured data. On the other hand, texts (such as tweets) are loosely structured, have poor spelling, often contain grammar errors, and they are multilingual.

This makes the process much more challenging and interesting!

There are two common methodologies for Text Mining Sentiment Parsing and Bag of Words.

Sentiment Parsing emphasises on the structure and grammer of words. Whereas Bag of words disregards the grammer and word type, instead focusing on representing text (a sentence, tweet, document) as the bag (multiset) of words.

2. Objective of the Analysis

Any analytics project should have a well defined objective. In our case, the goal is to us eText Aalytics on Twitter data to gauge audience sentiments about the movie Rangoon.

3. Data for the Analysis

To start with, we need to have the data. Fortunately, in case of twitter, it’s not that difficult. Twitter data is publicly available and one can collect it through scraping the website or by using a special interface for programmers that Twitter provides, called an API.

I have used the “twitteR” package in R to collect 10,000 tweets/retweets about ‘Rangoon’. The movie released on 24th of February 2017, and the tweets were extracted on 25th Feb. The tweets were then saved as the csv file, and read into R using the ‘readr’ package. The process of obtaining tweets from Twitter is beyond the scope of the article.

#Loading the data

library(readr)

rangoon = read_csv("rangoontweets.csv")

4. Conducting the analysis Approach 1 using the ‘tm’ package

The ‘tm’ package is a framework for text mining applications within R. It works on arguably the most widely used Text Mining ‘Bag of Words’ Principle. Bag of Words approach of handling texts is very simple. It just counts the frequency of each word appearance in the text and uses these counts as the independent variables. This simple approach is often very effective and it’s used as a baseline in text analytics projects and for natural language processing.

The main steps are outlined below.

Step 1: Let’s load the required libraries for the analysis and then extract the tweets from the file “rangoon”.

#loading libraries

library('stringr')

library('readr')

library('wordcloud')

library('tm')

library('SnowballC')

library('RWeka')

library('RSentiment')

library(DT)

#extracting relevant data

r1 = as.character(rangoon$text)

Step 2: Data Preprocessing

Preprocessing the text can dramatically improve the performance of the Bag of Words method (or for that matter, any method)

The first step towards doing this is Creating a Corpus, which in simple terms, is nothing but a collection of text documents. Once the Corpus is created, we are ready for preprocessing.

First, let us remove Punctuations. The basic approach to deal with this is to remove everything that isn’t a standard number or letter. It should be borne in mind that sometimes punctuations can be really useful, like web addresses, where the punctuation often defines the web address. Therefore, the removal of punctuation should be tailored to the specific problem. In our case, we will remove all punctuations.

Next, we change the case of the word to lowercase so that same words are not counted as different because of lower or upper case.

Another preprocessing task we have to do is to remove unhelpful terms. Many words are frequently used but are only meaningful in a sentence. These are called stop words. Examples are ‘the’, ‘is’, ‘at’, and ‘which’. It’s unlikely that these words will improve our ability to understand sentiments, so we want to remove them to reduce the size of the data.

Another important preprocessing step is stemming, motivated by the desire to represent words with different endings as the same word. For example, there is hardly any distinction between love,loved, and loving and all these could be represented by a common stem, lov. The algorithmic process of performing this reduction is called stemming.

Once we have preprocessed our data, we’re now ready to extract the word frequencies to be used in our prediction problem. The tm package provides a function called DocumentTermMatrix that generates a matrix where the rows correspond to documents, in our case tweets, and the columns correspond to words in those tweets. The values in the matrix are the counts of how many times that word appeared in each document.

Let’s go ahead and generate this matrix and call it “dtm_up”.

#Data Preprocessing

set.seed(100)

sample = sample(r1, (length(r1)))

corpus = Corpus(VectorSource(list(sample)))

corpus = tm_map(corpus, removePunctuation)

corpus = tm_map(corpus, content_transformer(tolower))

corpus = tm_map(corpus, removeNumbers)

corpus = tm_map(corpus, stripWhitespace)

corpus = tm_map(corpus, removeWords, stopwords('english'))

corpus = tm_map(corpus, stemDocument)

dtm_up = DocumentTermMatrix(VCorpus(VectorSource(corpus[[1]]$content)))

freq_up <- colSums(as.matrix(dtm_up))

Step 3: Calculating Sentiment

Now it’s time to get into the world of sentiment scoring. This is done in R using the calculate_sentiment function. This function loads text and calculates sentiment of each sentence. The syntax is that it takes text as arguments and outputs a vector containing sentiment of each sentence as value.

Let’s run the code and movie have been received well by the audience.

#Calculating Sentiments

sentiments_up = calculate_sentiment(names(freq_up))

sentiments_up = cbind(sentiments_up, as.data.frame(freq_up))

sent_pos_up = sentiments_up[sentiments_up$sentiment == 'Positive',]

sent_neg_up = sentiments_up[sentiments_up$sentiment == 'Negative',]

cat("We have far lower negative Sentiments: ",sum(sent_neg_up$freq_up)," than positive: ",sum(sent_pos_up$freq_up))

We see that the ratio of positive to negative words is 5780/3238 = 1.8 which prima facie indicates that the movie has been received well by the audience.

Let’s deep dive into the positive and negative sentiments separately to understand it better.

-

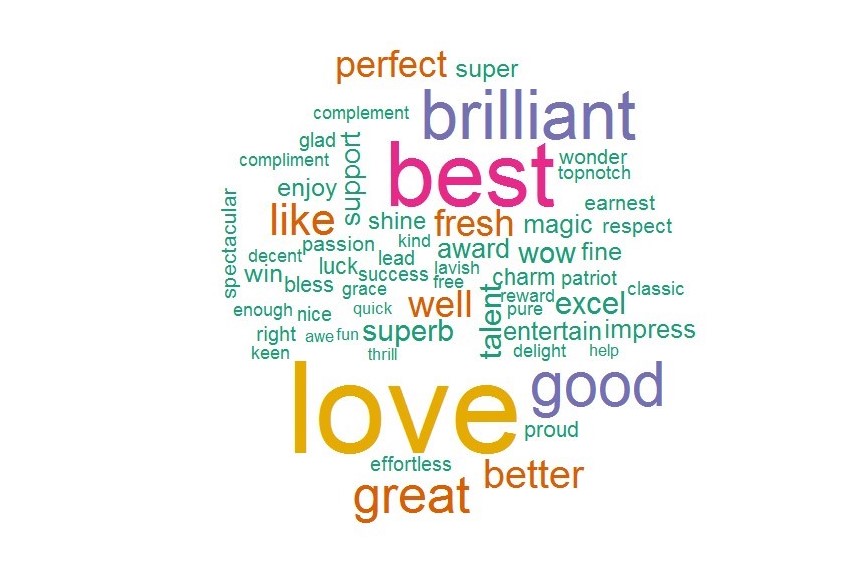

Positive Words

The table below shows the frequency of words in our text classified as positive. The same has been generated using the data table function.

The words ‘love’, ‘best’ and ‘brilliant’ are the three top positive words in terms of frequency.

DT::datatable(sent_pos_up)

| text | sentiment | freq_up | |

| accomplish | accomplish | Positive | 1 |

| adapt | adapt | Positive | 2 |

| appeal | appeal | Positive | 4 |

| astonish | astonish | Positive | 3 |

| award | award | Positive | 85 |

| awe | awe | Positive | 11 |

| awestruck | awestruck | Positive | 5 |

| benefit | benefit | Positive | 1 |

| best | best | Positive | 580 |

| better | better | Positive | 186 |

Let’s now visualise the positive words using a word cloud function. Word clouds can be a visually appealing way to display the most frequent words in a body of text. How it works is that a word cloud arranges the most common words and uses size to indicate the frequency of a word. Let’s make word cloud of positive terms.

Word Cloud of Positive Words

layout(matrix(c(1, 2), nrow=2), heights=c(1, 4)) par(mar=rep(0, 4)) plot.new() set.seed(100) wordcloud(sent_pos_up$text,sent_pos_up$freq,min.freq=10,colors=brewer.pal(6,"Dark2"))

The word cloud also shows that love is the most frequent positive term used in the tweets.

-

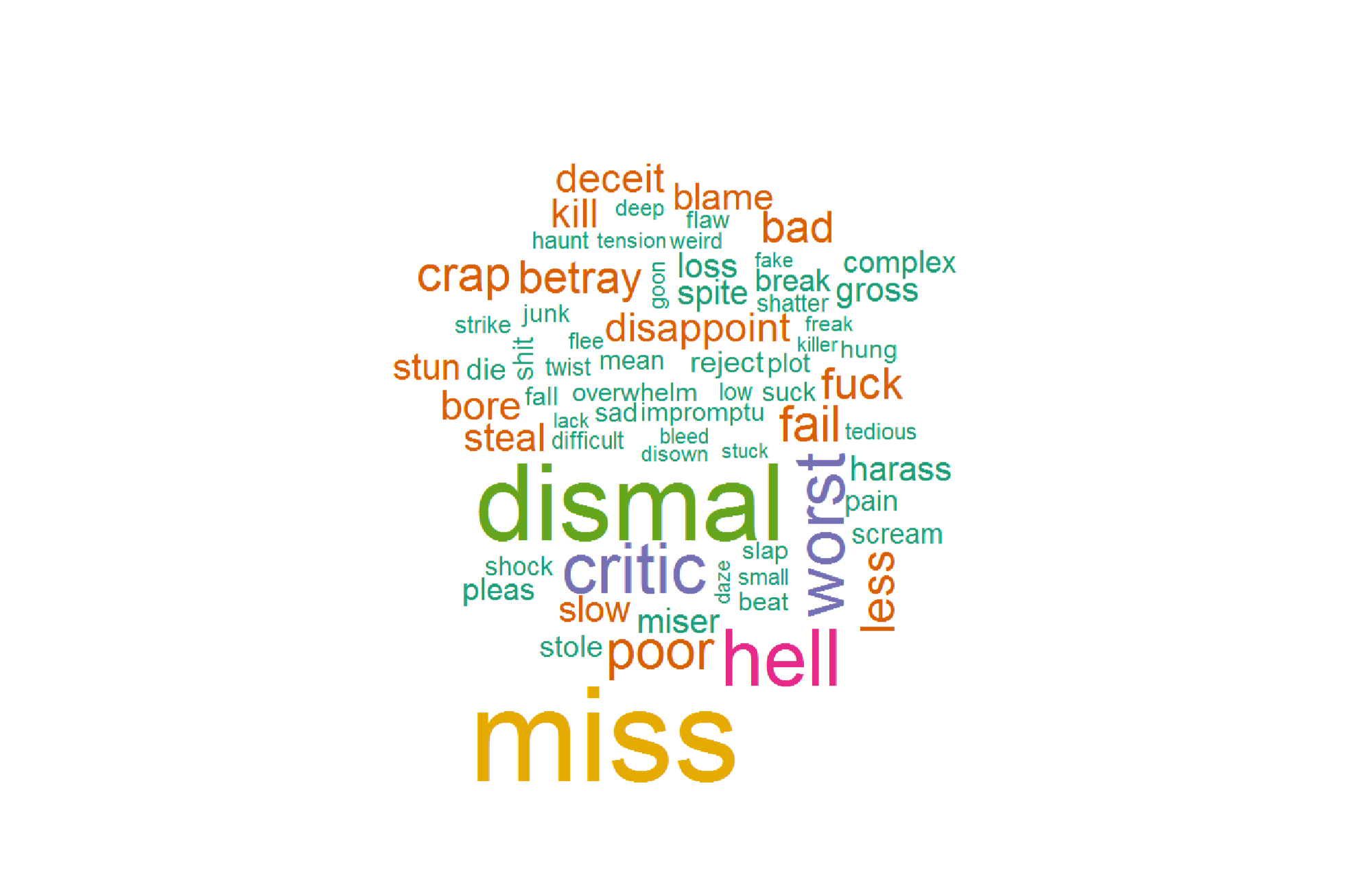

Negative Sentiments

Let’s repeat the same exercise for negative words. We see that words like ‘miss’, ‘dismal’, ‘hell’, etc are the most frequent words with negative connotations. Let’s visualize this with word cloud.

DT::datatable(sent_neg_up)

| text | sentiment | freq_up | |

| abrupt | abrupt | Negative | 3 |

| addict | addict | Negative | 1 |

| annoy | annoy | Negative | 3 |

| arduous | arduous | Negative | 1 |

| attack | attack | Negative | 2 |

| awkward | awkward | Negative | 2 |

| bad | bad | Negative | 64 |

| bad | bad | Negative | 64 |

| baseless | baseless | Negative | 1 |

| bash | bash | Negative | 5 |

| beat | beat | Negative | 22 |

Word Cloud of Negative Words

plot.new() set.seed(100) wordcloud(sent_neg_up$text,sent_neg_up$freq, min.freq=10,colors=brewer.pal(6 ,"Dark2")

We see the most frequently used negative words are miss, dismal and hell.

Word of Caution: while doing text analytics, it is important to have some prior understanding of the subject. For instance, negative words like ‘bloody’ or ‘hell’ might be representing the famous song ‘bloody hell’ of the movie. Similarly, ‘miss’ might represent the title and in the case of Rangoon, one of the leading character’s screen name is Miss Julia, portrayed by Kangana Ranaut. So it can be tricky to consider ‘miss’ as a negative word.

Once we have accounted for these possible anamloies, we can make further adjustments in our analysis. For instance, we have earlier calculated that the ratio of positive to negative words is 5780/3238 = 1.8. Of these 3238 negative word count, let us not consider 144 counts of the word ‘hell’, and relook at the ratio. We see that the ratio increases to 5780/3094 = 1.87.

This was the first approach of gauging audience opinion aboout the movie Rangoon.It seems that positive emotions are more than the negative ones. We can further check this using another method of polarity discussed below.

5. Conducting the analysis Approach 2 using the ‘syuzhet’ package

The ‘syuzhet’ Package extracts sentiments from text using three sentiment dictionaries. The difference between this and the above approach is that this approach is based on a much wider range of sentiments. The first step,as always, is to prepare the data for text analytics. This will include cleaning html links,

#Approach 2 - using the 'syuzhet' package

text = as.character(rangoon$text)

##removing Retweets

some_txt<-gsub("(RT|via)((?:\\b\\w*@\\w+)+)","",text)

##let's clean html links

some_txt<-gsub("http[^[:blank:]]+","",some_txt)

##let's remove people names

some_txt<-gsub("@\\w+","",some_txt)

##let's remove punctuations

some_txt<-gsub("[[:punct:]]"," ",some_txt)

##let's remove number (alphanumeric)

some_txt<-gsub("[^[:alnum:]]"," ",some_txt)

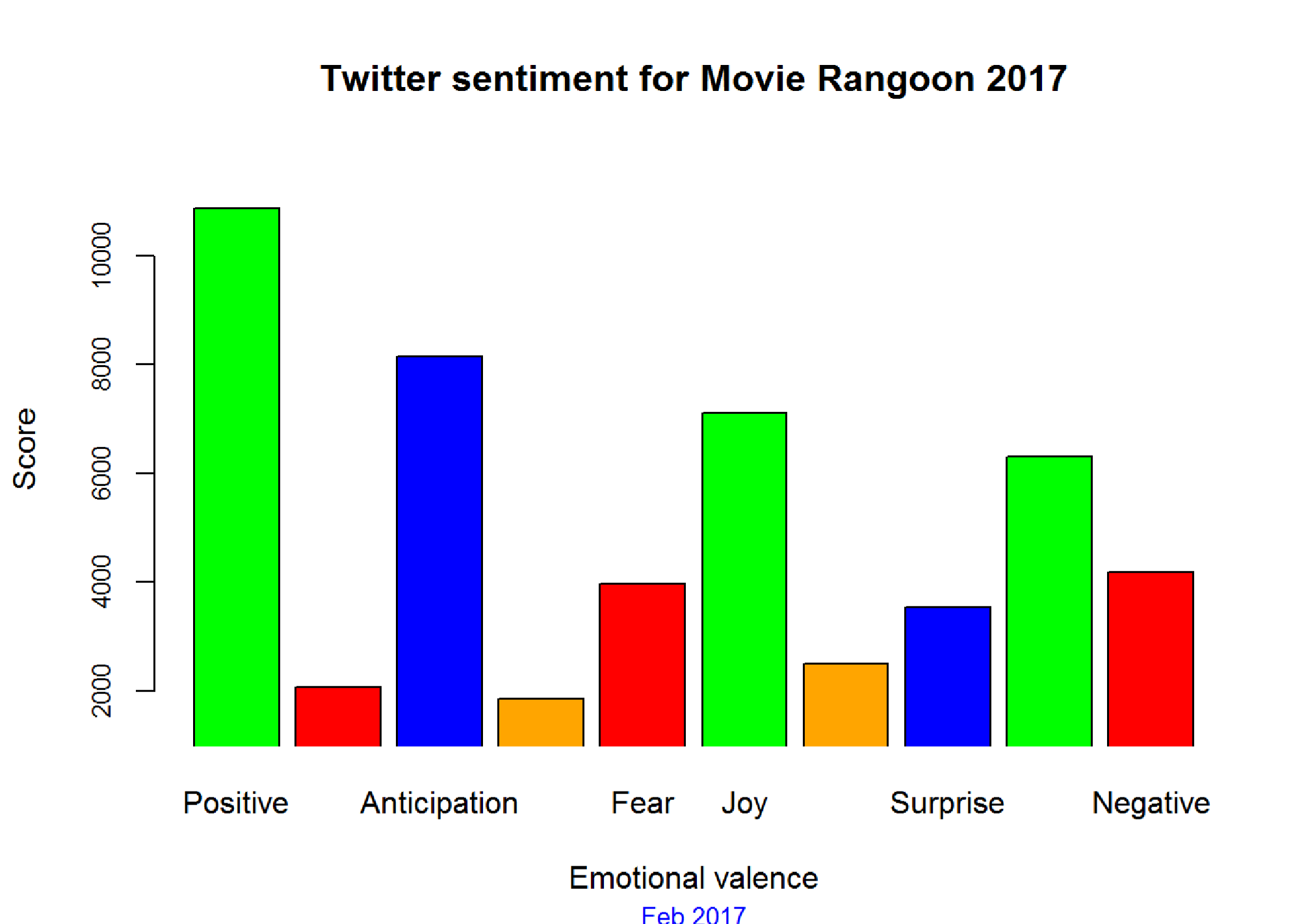

Post processing, we will use the ‘get_nrc_sentiment’ function to extract sentiments from the tweets. How this function works is that it Calls the NRC sentiment dictionary to calculate the presence of different emotions and their corresponding valence in a text file.

The output is a data frame where each row represents a sentence from the original file. The columns include one for each emotion type as well as a positive or negative valence. The ten columns are as follows: “anger”, “anticipation”, “disgust”, “fear”, “joy”, “sadness”, “surprise”, “trust”, “negative”, “positive.”

Let’s create a visual how our movie performs as per the emotions.

#visual library(ggplot2) # Data visualization library(syuzhet) mysentiment<-get_nrc_sentiment((some_txt))

# Get the sentiment score for each emotion mysentiment.positive =sum(mysentiment$positive) mysentiment.anger =sum(mysentiment$anger) mysentiment.anticipation =sum(mysentiment$anticipation) mysentiment.disgust =sum(mysentiment$disgust) mysentiment.fear =sum(mysentiment$fear) mysentiment.joy =sum(mysentiment$joy) mysentiment.sadness =sum(mysentiment$sadness) mysentiment.surprise =sum(mysentiment$surprise) mysentiment.trust =sum(mysentiment$trust) mysentiment.negative =sum(mysentiment$negative)

# Create the bar chart yAxis <- c(mysentiment.positive, + mysentiment.anger, + mysentiment.anticipation, + mysentiment.disgust, + mysentiment.fear, + mysentiment.joy, + mysentiment.sadness, + mysentiment.surprise, + mysentiment.trust, + mysentiment.negative)

xAxis <- c("Positive","Anger","Anticipation","Disgust","Fear","Joy","Sadness","Surprise","Trust","Negative")

colors <- c("green","red","blue","orange","red","green","orange","blue","green","red")

yRange <- range(0,yAxis) + 1000

barplot(yAxis, names.arg = xAxis,

xlab = "Emotional valence", ylab = "Score", main = "Twitter sentiment for Movie Rangoon 2017", sub = "Feb 2017", col = colors, border = "black", ylim = yRange, xpd = F, axisnames = T, cex.axis = 0.8, cex.sub = 0.8, col.sub = "blue")

colSums(mysentiment)

Looking at the bar chart and the sum of these emotions, we can see that the positive sentiments (‘positive’, ‘joy’, ‘trust’) comfortably outscore the negative emotions (‘negative’, ‘disgust’, ‘anger’). This may be a hint that may be the audience has recieved the movie positively.

6. Conclusions

Both the approaches seem to suggest that the movie ‘Rangoon’ has been well received by the audiences, as measured by the PT/NT ratio (Positive Tweet to Negative Tweet); as well as by the visual representation of various emotions.

7. Making a Prediction Algorithm for Movie Success

This article has focused on gauging audience sentiments expressed about the movie ‘Rangoon’ via tweets.However, this may not be an accurate yardstick to predict box office success.We all know that many critically acclaimed movies falter at the box office and many ‘don’t-bring-your-brains-tothe-theater’ movies become an astounding success.

So what’s the solution?

The solution is to analyze the historical records of how the PT/NT ratio has translated into box office collections for the similar genre of movies; and create well-trained and validated predictive models on the data. This model then can be used to predict the box office success of the movie. In case of Rangoon, he PT/NT ratio of 1.87 will be the input value.

Since that is beyond the scope of this article, we will not be covering it here, but it’s important to highlight that text analytics can also be used as an alternative to measuring box office success.

End Notes

In this article, we have seen how we can use twitter analytics to not just analyze sentiments but also to predict box office revenues.

It should be noted that the analysis will vary depending on when the tweets were extracted pre or post movie release. It is very much possible that the same analysis done on tweets from different time frame can yield different results. Also, different steps of preprocessing can alter the results.

The objective of this article was not to conclude the success or failure of the movie ‘Rangoon’, but solely on steps and thought process behind doing text analytics on twitter reviews of the movie.

There may be more advanced methods to do the similar analysis, but have confined to these two approaches as found them simple and intuitive.

Feel free to connect to discuss any suggestions or observations on the article. Also, if there are other more effective methods, please feel free to share them as well.

By Analytics Vidhya Team: This article was contributed by Vikash Singh and is the first rank holder of Blogathon 3.

About the Author

Vikash Singh Decision Scientist who specializes in integrating Strategy with Data Science for effective decision making and solving Business Problems. An MBA in International Business from Banaras Hindu University, he has also completed one-year certificate program on Business Analytics from IIM, Calcutta. Outside of work, Vikash loves learning new things in analytics, follow his favorite sports and play cricket.

Vikash Singh Decision Scientist who specializes in integrating Strategy with Data Science for effective decision making and solving Business Problems. An MBA in International Business from Banaras Hindu University, he has also completed one-year certificate program on Business Analytics from IIM, Calcutta. Outside of work, Vikash loves learning new things in analytics, follow his favorite sports and play cricket.