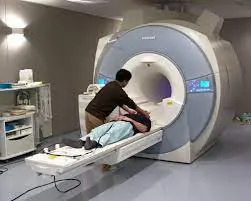

With advances in artificial intelligence, new methods of reading the human mind are emerging in 2023. Scientists at the University of Texas at Austin have announced a breakthrough in non-invasive brain activity mapping. The team has built an AI system, called a semantic decoder, that translates brain activity recorded with fMRI (functional magnetic resonance imaging) into text.

The study was led by Jerry Tang, a doctoral student in computer science, and Alex Huth, an assistant professor of neuroscience and computer science at the University of Texas at Austin. The decoder is built on a transformer model, similar to the models that power Google Bard and OpenAI’s ChatGPT. The findings have been published in the peer-reviewed journal Nature Neuroscience.

Semantic Decoder: A Non-Invasive Breakthrough

Using only fMRI scan data, the semantic decoder can reconstruct speech with uncanny accuracy while people listen to a story or even silently imagine telling one. Previous language decoding systems required surgical implants, which makes this non-invasive approach a significant breakthrough.

Dr. Alexander Huth, who co-led the project, says, “We were shocked that it works as well as it does. I’ve been working on this for 15 years … so it was shocking and exciting when it finally did work.”

The technology could revolutionize care for patients who struggle to communicate because of stroke, motor neuron disease, or other conditions, raising the prospect of new ways to restore their speech.

Overcoming Limitations of fMRI

The team overcame a fundamental limitation of fMRI. Although the technique can locate brain activity with high spatial resolution, an inherent time lag makes it impossible to track activity in real time. The lag exists because fMRI measures the blood flow response to brain activity rather than the activity itself, and that blood flow peaks and returns to baseline over roughly 10 seconds. No AI model or scanner, however advanced, can remove this delay.
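To make the lag concrete, here is a minimal Python sketch, not taken from the study, that models the blood flow response to a brief neural event with a toy gamma-shaped curve. The function names (`toy_hrf`, `bold_signal`, `contributing_events`) and the parameter values (a peak 5 s after the event, mostly back to baseline by about 10 s) are illustrative assumptions chosen to roughly match the timescale described above; this is not a calibrated hemodynamic model and not the decoder itself.

```python
import math

# ASSUMPTION: toy gamma-shaped hemodynamic response function (HRF).
# The peak and shape values are illustrative only, not a calibrated model
# and not taken from the UT Austin study.
def toy_hrf(t, peak=5.0, shape=8.0):
    """Blood flow response t seconds after a brief neural event, scaled to 1 at `peak`."""
    if t < 0:
        return 0.0
    x = t / peak
    return x ** shape * math.exp(shape * (1.0 - x))


def bold_signal(onsets, duration, dt=1.0):
    """Simulated fMRI signal: the summed toy responses to events at the given onsets (s)."""
    n_samples = int(duration / dt)
    return [sum(toy_hrf(i * dt - onset) for onset in onsets) for i in range(n_samples)]


def contributing_events(onsets, t, threshold=0.1):
    """Onsets whose response at time t is still above `threshold` (fraction of peak)."""
    return [onset for onset in onsets if toy_hrf(t - onset) > threshold]


# A single brief event at t = 0 s: the measured signal peaks seconds later.
single = bold_signal([0.0], duration=20.0)
peak_time = single.index(max(single))
print(f"Signal peaks {peak_time} s after the event")

# Hypothetical story heard at one word per second (words at 0, 1, ..., 9 s).
words = [float(s) for s in range(10)]
mixed = contributing_events(words, t=10.0)
print(f"Sample at t = 10 s mixes responses to words at {mixed} s")
```

In this toy setup the signal peaks 5 s after a brief event, and the single sample taken at t = 10 s still carries strong responses to seven different words heard between 1 and 7 s, which is why one scan cannot be tied to one word.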

“It’s this noisy, sluggish proxy for neural activity,” said Huth. This hard limit has hampered the ability to interpret brain activity in response to natural speech because it gives a “mishmash of information” spread over a few seconds.

Continuous Language for Extended Periods

The researchers have created a model that can decode continuous language, including complicated ideas, over extended periods. Once the AI system is fully trained, the scientists say, it can generate a stream of text while a participant listens to or imagines a story. The team also used technology similar to ChatGPT to interpret participants’ thoughts while they watched silent films or imagined themselves telling a story.

The study raises concerns about mental privacy as it opens doors to new possibilities for interpreting the thoughts and dreams of individuals. However, the team behind the AI research believes that the development will help restore communication in patients who have lost their ability to speak.

Our Say

The University of Texas at Austin research is a significant breakthrough in non-invasive brain activity mapping. Developing an AI model that can read thoughts and translate them into text is an important milestone. It could deepen our understanding of how the brain interprets language and offers new ways to restore speech in patients struggling to communicate due to stroke or motor neuron disease. While the development raises concerns about mental privacy, the achievement is a massive leap forward in neuroscience and artificial intelligence.