This article was published as a part of the Data Science Blogathon

Objective

A warm welcome to all the readers. This article is about exploring the possibility of Artificial Intelligence aided renaissance in the field of medical science and healthcare. A paradigm shift from a traditional approach is the need of the hour to improve the quality of the patient outcome. This article would be immensely beneficial for those working in the area of Artificial Intelligence trying to make an inroad into the domain of Medical and Healthcare and for those working in the area of Medical and Healthcare Science trying to make an inroad into the domain of Artificial Intelligence.

Table of contents

- Objective

- Introduction

- Links to Deep Learning Codes

- Reasons for making a paradigm shift from a traditional approach to a machine-driven approach

- Deep Learning Methods

- Role of Deep Learning in addressing the pertinent medical conditions and complications arising out of these conditions

- Understanding TREWS and its applications

- Challenges of Deep Learning in the Medical and Healthcare world

- Conclusion

- Frequently Asked Questions

- References

Introduction

a) What is Deep Learning?

Deep learning can be used as a potent tool to identify patterns of certain conditions that develop in our body, a lot quicker than a clinician. Deep learning mimics the working mechanism of the human brain through a combination of data inputs, weights, and biases. Clustering data and making predictions with a high degree of accuracy remain the basic mechanism of deep learning.
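To make the "data inputs, weights, and biases" idea concrete, here is a minimal sketch of a single artificial neuron. The input values, weights, and bias are made up for illustration, and the sigmoid activation is an assumption (other activation functions are equally common).

```python
import math


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, squashed into (0, 1) by a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical values, chosen only for illustration.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, 0.1]
bias = -0.1

activation = neuron(inputs, weights, bias)
print(round(activation, 4))
```

A deep network stacks many such units in layers, and training adjusts the weights and biases so that the outputs match the labelled examples.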

b) Medical conditions/procedures covered

1. Thyroidectomy – Excision of the thyroid gland or suppression of its function is known as thyroidectomy. A gland is an aggregation of cells specialized to secrete materials not needed for their own metabolism. The thyroid gland secretes thyroxine (T4) and triiodothyronine (T3), the hormones responsible for regulating metabolism.

2. Cardiovascular disease – Cardiovascular refers to the heart and blood vessels, and any condition associated with these is known as cardiovascular disease. Common cardiovascular diseases are angina pectoris, cardiac arrhythmia, congestive cardiac failure, myocardial infarction, coronary insufficiency, and hypertension.

3. Sepsis – Presence of pathogenic organisms in the blood or other tissues. It occurs when an infection releases chemicals into the blood that trigger a harmful inflammatory response, leading to major organ failure.

c) A real-life incident with the traditional approach

A 52-year-old woman was admitted to a Baltimore hospital emergency room with a complaint of a sore foot. She was diagnosed with dry gangrene of the toe and was admitted to a general ward. On the third day, she showed symptoms of pneumonia and, as usual, was put on a course of antibiotics. Three days later, she developed tachycardia (raised heart rate) along with faster breathing. Within 12 hours, her condition worsened as she entered septic shock. She was transferred to the ICU, but her major organs started to fail one after another, and on the 22nd day she passed away (Ashley, 2017).

According to a doctor at Johns Hopkins Medicine’s Armstrong Institute for Patient Safety and Quality, septic shock is a life-threatening condition with a 50% mortality rate, and each hour of untreated sepsis adds 8% to the mortality rate. It is therefore imperative that clinicians diagnose the condition very quickly. But with a shortage in the clinician workforce, how can this be achieved? The answer is to use the ‘machine’. In the upcoming sections, we will discuss the usefulness of machines in managing each of the given conditions.

Links to Deep Learning Codes

To be familiar with the ropes, the links to understanding deep learning codes in a stepwise manner have been given below

Gearing up to dive into Mariana Trench of Deep Learning

Plunging into Deep Learning carrying a red wine

Reasons for making a paradigm shift from a traditional approach to a machine-driven approach

The quality of patient outcomes in hospital setups is poor. As the case of the woman admitted with a sore foot shows, a paradigm shift is needed for better patient outcomes. The reasons for moving from a traditional approach to a machine-driven approach are:

a) Clinician workforce is not sufficient to cater to the increasing population with diversifying conditions.

b) Difficult to scale up clinicians but easy to scale up machines.

c) Consumerism approach in private hospitals affecting the quality of patient outcomes.

d) Bureaucratic bottleneck especially in the government-controlled hospitals resulting in poor health outcomes.

A machine-driven approach would remain unaffected by the above limitations and therefore has the potential to bring better results.

Deep Learning Methods

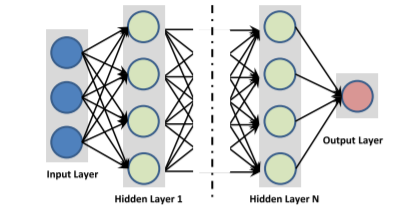

Deep learning is derived from the Artificial Neural Network (ANN). The key is to build several hidden layers and use massive training data, which enables the machine to learn more useful features for better prediction and classification. The different types of Deep Learning architectures, their associated images, and their features as put forth by Ravi et al. (2017) are discussed below.

| S. No | Architecture type of Deep Learning | Features |
|---|---|---|
| 1 | Deep Neural Network | 1. Used for classification or regression 2. More than 2 hidden layers involved 3. Allows complex, non-linear hypotheses to be expressed 4. The learning process is very slow 5. Training is not trivial |

Image Source: Ravi et al. (2017) https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=7801947

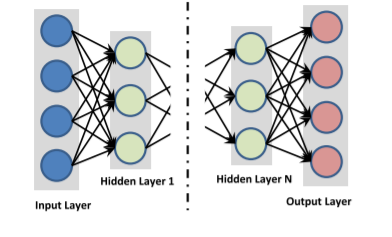

| S. No | Architecture type of Deep Learning | Features |
|---|---|---|
| 2 | Deep Auto Encoder | 1. Used for feature extraction or dimensionality reduction 2. Same number of input and output nodes 3. Unsupervised learning method 4. Labelled data for training is not required 5. Requires a pre-training stage |

Image Source: Ravi et al. (2017) https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=7801947

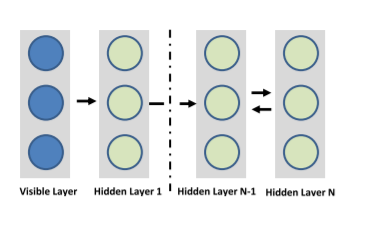

| S. No | Architecture type of Deep Learning | Features |
|---|---|---|
| 3 | Deep Belief Network | 1. Allows supervised as well as unsupervised training of the network 2. Has undirected connections only at the top 2 layers 3. Layer-by-layer greedy learning strategy to initialize the network 4. Likelihood maximization 5. Computationally expensive training process |

Image Source: Ravi et al. (2017) https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=7801947

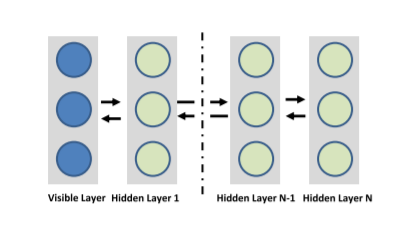

| S. No | Architecture type of Deep Learning | Features |
|---|---|---|
| 4 | Deep Boltzmann Machine | 1. Connections between all layers of the network are undirected 2. A stochastic maximum likelihood algorithm is applied to maximize the lower bound of the likelihood 3. Involves top-down feedback 4. Time complexity for inference is higher 5. Optimization is not practical for large datasets |

Image Source: Ravi et al. (2017) https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=7801947

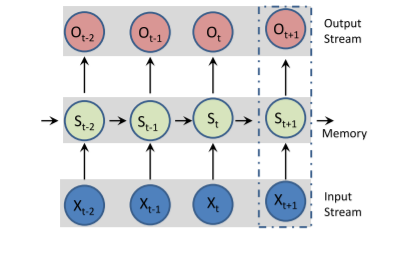

| S. No | Architecture type of Deep Learning | Features |
|---|---|---|
| 5 | Recurrent Neural Network | 1. Used where the output depends on the previous computations 2. Ability to memorize sequential events 3. Capacity to model time dependencies 4. Suitable for NLP (Natural Language Processing) applications 5. Frequent learning issues |

Image Source: Ravi et al. (2017) https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=7801947
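The statement that a recurrent network's output "depends on the previous computations" can be illustrated with a minimal sketch of a scalar recurrent unit. The weights `w_x`, `w_h`, the bias `b`, and the input sequences are hypothetical values chosen for illustration, and the tanh activation is an assumption; real networks use vectors and learned parameters.

```python
import math


def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One recurrent step: the new state mixes the current input with the previous state."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the unit and return the hidden state after each step."""
    h = 0.0  # initial hidden state
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, w_x, w_h, b)
        states.append(h)
    return states


# Two sequences that differ only in their first element.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])

# The last inputs are identical, yet the final states differ:
# the unit still "remembers" the first input of sequence a.
print(states_a[-1], states_b[-1])
```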

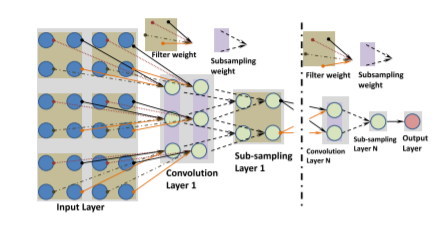

| S. No | Architecture type of Deep Learning | Features |
|---|---|---|
| 6 | Convolutional Neural Network | 1. Well suited to 2D data such as images 2. Each convolutional filter transforms its input to a 3D output volume of neuron activations 3. Limited neuron connections required 4. Requires many layers to find the complete hierarchy of visual features 5. A large dataset of labelled images is required |
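As a rough illustration of how a convolutional filter works on 2D data with limited connections, the sketch below slides a small kernel over a tiny image. The image and the edge-detecting kernel are made up for illustration, and the operation is the unflipped "valid" cross-correlation that deep learning libraries commonly call convolution.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding) and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0
            # Each output value only looks at a kh x kw patch of the input.
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


# Hypothetical 4x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical kernel that responds to a dark-to-bright vertical edge.
edge_kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d_valid(image, edge_kernel)
for row in feature_map:
    print(row)
```

The feature map is non-zero only in the column where the edge sits, which is the kind of local visual feature that the early layers of a CNN learn to detect.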

Role of Deep Learning in addressing the pertinent medical conditions and complications arising out of these conditions

Let’s cover the first 2 medical procedures/conditions mentioned at the outset and see how Deep Learning helps in those conditions.

1. Thyroidectomy –

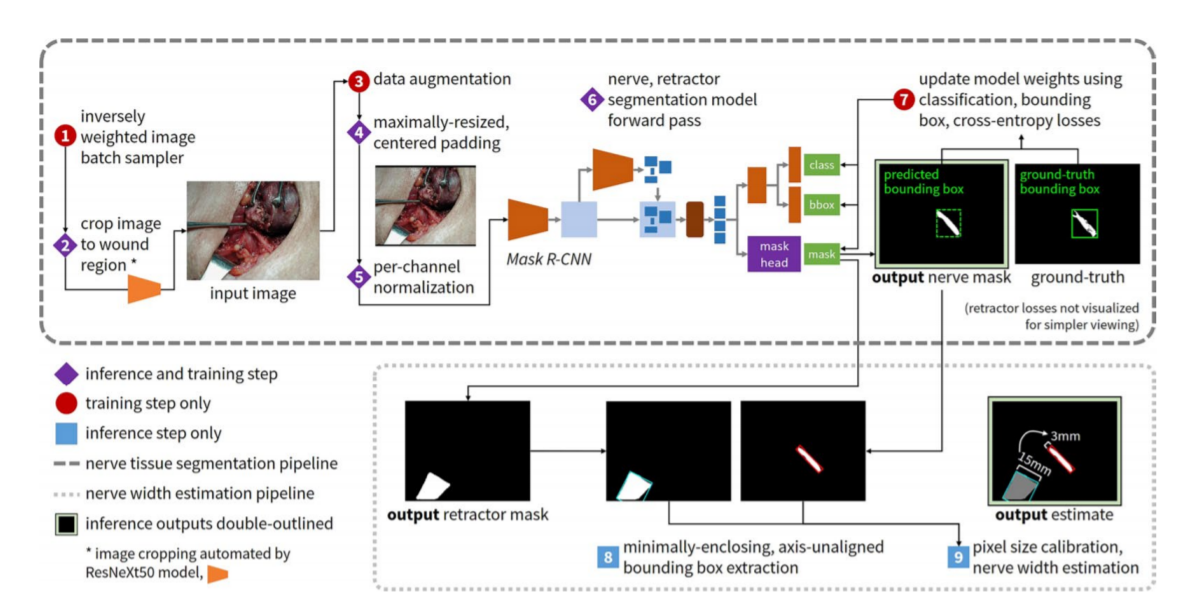

During thyroidectomy, the identification of the recurrent laryngeal nerve (RLN) is of paramount importance. The recurrent laryngeal nerve pertains to the larynx (voice box) and is a branch of the vagus nerve that supplies the internal muscles of the larynx. Distinguishing the RLN from small-caliber blood vessels is very important, and the rate of postoperative complications arising from an inexperienced surgeon’s work is quite high. Can computer vision tools enable surgeons to identify the RLN in a better manner? Let’s see. The Deep Learning methods/models, their procedures, and the results obtained in the identification of the RLN, as put forth by Gong et al. (2021), are discussed below.

Dataset: 277 images from 130 patients, obtained using a digital SLR (Nikon 3000) and a smartphone (Apple)

| S. No | Methods/Models | Procedures | Results | Remarks |
|---|---|---|---|---|
| 1 | Segmentation | Images tagged by picture distance (far away or close up) -> manual annotation of nerve segmentation for each image by head and neck endocrine surgeons -> RLN segmentations evaluated quantitatively using DSC (Dice similarity coefficient) against ground-truth surgeon annotations, applying k-fold cross-validation (k=5) | DSC = 2TP/(2TP+FP+FN), where TP = true positive, FP = false positive, and FN = false negative. DSC is the primary metric for segmentation | |
| 2 | Cropping model | Images cropped to focus on the surgical anatomy of interest -> cropped images segmented by the nerve segmentation model -> the AP (Average Precision) metric was reported, which ranges from 0 to 1, with AP > 0.5 considered to indicate a good model | AP (across all 5 folds) = 0.756; AP (far-away images) = 0.677; AP (close-up images) = 0.872 | |
| 3 | Nerve segmentation model | Similar to the cropping model | DSC range = 0.343 (+/- 0.077, n=78, 95% CI) to 0.707 (+/- 0.075, n=40, 95% CI) | The lowest DSC was obtained under far-away, bright lighting and the highest DSC was obtained under close-up, medium lighting. |
| 4 | Nerve width estimation | Similar to the above model, but the images include army-navy retractors, and maximum width estimates are derived from ground-truth (gt) and predicted (pr) nerve segmentations | Estimations using different auto-cropped input images and predicted segmentations: i) gt = 1.434 mm, pr = 1.009 mm ii) gt = 3.994 mm, pr = 3.317 mm iii) gt = 3.184 mm, pr = 3.634 mm iv) gt = 3.485 mm, pr = 3.203 mm | |
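The DSC formula in the table can be computed directly from two binary masks. The sketch below is only an illustration: the tiny masks are made up, and returning 1.0 when both masks are empty is a convention assumed here, not something stated by Gong et al. (2021).

```python
def dice_coefficient(pred_mask, true_mask):
    """DSC = 2TP / (2TP + FP + FN) for two binary masks of the same shape."""
    tp = fp = fn = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p and t:
                tp += 1  # pixel marked as nerve in both masks
            elif p and not t:
                fp += 1  # predicted nerve, but not in the annotation
            elif t and not p:
                fn += 1  # annotated nerve that the prediction missed
    denominator = 2 * tp + fp + fn
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if denominator == 0 else 2 * tp / denominator


# Hypothetical 3x4 masks (1 = nerve pixel, 0 = background).
true_mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
pred_mask = [
    [0, 1, 1, 1],
    [0, 0, 1, 0],
    [0, 0, 0, 0],
]

dsc = dice_coefficient(pred_mask, true_mask)
print(dsc)  # TP = 3, FP = 1, FN = 1, so DSC = 6 / 8
```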

It can be inferred that the width estimates derived from the ground-truth and predicted segmentations are similar. The entire process, including the end-to-end training and inference pipeline, is illustrated in the image below

Image Source: Gong et al. (2021) www.nature.com/scientificreports
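To put a number on how close the width estimates are, the four ground-truth and predicted pairs reported in the table above can be compared directly. This is a simple worked calculation on those published values, not an analysis performed by Gong et al. (2021).

```python
# (ground truth, predicted) maximum nerve widths in mm, as listed in the table above.
pairs_mm = [
    (1.434, 1.009),
    (3.994, 3.317),
    (3.184, 3.634),
    (3.485, 3.203),
]

# Absolute difference between the surgeon-derived and model-derived width for each image.
abs_errors = [abs(gt - pr) for gt, pr in pairs_mm]
mean_abs_error = sum(abs_errors) / len(abs_errors)

for (gt, pr), err in zip(pairs_mm, abs_errors):
    print(f"gt={gt:.3f} mm  pr={pr:.3f} mm  |error|={err:.3f} mm")
print(f"mean absolute error = {mean_abs_error:.4f} mm")
```

On these four examples the predicted widths differ from the ground truth by roughly 0.3 to 0.7 mm.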

The promising results show that Deep Learning has the capacity to provide insight to surgeons in performing thyroid operations.

2. Cardiovascular disease –

Proper diagnosis of cardiac ailments is key to preventing adverse outcomes, and Deep Learning has the potential to support that diagnosis effectively. Let’s see how. The Deep Learning pipeline for cardiac ultrasonic imaging has three parts: acquisition and pre-processing of data, selection of the network, and training and evaluation (Cao et al., 2019). The steps of the process are listed below

1. Medical images collected in DICOM format

2. Images converted to a JPG/PNG format

3. Preprocessing by removing poor-quality images, denoising, and tagging

4. Selection of network, preferably the 152-layer ResNet from Keras, or of optimization techniques such as SGD and Dropout

5. Adjusting parameters according to the network to optimize performance

6. Testing the network

NB: DICOM = Digital Imaging and Communications in Medicine
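One small part of the conversion step can be sketched in code. Medical images often store pixel intensities with more than 8 bits, whereas JPG/PNG images typically use 8 bits per channel, so the values need rescaling. The function below is a minimal sketch under that assumption; the name `rescale_to_8bit` and the sample pixel values are hypothetical, and reading the actual DICOM file (for example with a library such as pydicom) is deliberately left out.

```python
def rescale_to_8bit(pixels):
    """Linearly map the pixel range of a 2D image onto 0..255 (min-max scaling)."""
    flat = [value for row in pixels for value in row]
    lowest, highest = min(flat), max(flat)
    if highest == lowest:
        # A constant image carries no contrast; map everything to 0.
        return [[0 for _ in row] for row in pixels]
    scale = 255.0 / (highest - lowest)
    return [[int(round((value - lowest) * scale)) for value in row] for row in pixels]


# Hypothetical 2x2 image with 12-bit intensities (0..4095).
raw_pixels = [
    [0, 1024],
    [2048, 4095],
]

image_8bit = rescale_to_8bit(raw_pixels)
print(image_8bit)
```

In practice, clinical pipelines often apply modality-specific windowing rather than plain min-max scaling, so this should be read as an illustration of the idea only.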

Cao et al. (2019) summarized research on heart image segmentation and heart image classification using Deep Learning. Some of these studies are listed in the following tables

| Author | Year | Dataset | Segmentation content | Method | Dice |
|---|---|---|---|---|---|
| Wenjia Bai et al. | 2017 | UK Biobank study | Short-axis heart | Semi-supervised learning | 0.92 |
| Ozan Oktay et al. | 2017 | UK Digital Heart Project | Short-axis cardiac | ACNN | 0.939 |
| Yakun Chang et al. | 2018 | ACDC | Short-axis cardiac | FCN | 0.90 |

| Author | Year | Dataset | Classification content | Method | Accuracy |
|---|---|---|---|---|---|
| Lasya Priya Kotu et al. | 2015 | Author self-made data | Risk of arrhythmias | k-NN | 0.94 |
| Houman Ghaemmaghami et al. | 2017 | Author self-made data | Heart sound | TDNN | 0.95 |
| Ali Madani et al. | 2018 | Author self-made data | View of echocardiograms | CNN | 0.978 |

Deep Learning has evolved as an important tool to predict the hidden patterns of cardiovascular diseases and may help in the early diagnosis of critical conditions.

Understanding TREWS and its applications

Sepsis is a potentially fatal medical condition that remains difficult to treat despite the plethora of antibiotics in the arsenal of clinicians. Early diagnosis is the key: even an hour's delay adds 8% to the mortality, as observed by researchers and as is evident from the example we saw at the outset. Early aggressive treatment is important to improve mortality outcomes, but with the available clinical tools it is difficult to predict who will develop sepsis and its associated manifestations.

TREWS (Targeted Real-time Early Warning Score) has proved to be an answer to the need to diagnose sepsis accurately and quickly. Henry, Hager, Pronovost, & Saria (2015) developed the model using the MIMIC-II clinical database. According to the SSC (Surviving Sepsis Campaign) guidelines, organ dysfunction, or more specifically SIRS (systemic inflammatory response syndrome), was defined as the presence of any 2 of the following 4 criteria:

i) Systolic blood pressure < 90 mmHg; lactate > 2.0 mmol/L; urine output < 0.5 mL/kg for more than 2 hours despite adequate hydration.

ii) Serum creatinine > 2.0 mg/dL in the absence of renal insufficiency

iii) Bilirubin > 2 mg/dL in the absence of any liver disease

iv) Acute lung injury with PaO2/FiO2 < 200 in the presence of pneumonia or with PaO2/FiO2 < 250 in the absence of pneumonia

The steps to develop the model according to Henry, Hager, Pronovost, & Saria (2015) are as follows:

1) Segregation of dataset

Random sampling was done to segregate data into development and validation sets.

Development set = 13,181 patients (1836 positive, 11,178 negative, and 167 patients with right censoring after treatment)

Validation set = 3053 patients (455 positive, 2556 negative, and 42 patients with right censoring after treatment)

Patients referred to as negative cases are those who did not develop septic shock.
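A random split of this kind can be sketched as follows. The set sizes come from the figures above; everything else (integer patient IDs, the fixed seed, the function name `split_patients`) is a hypothetical stand-in, since the exact sampling procedure is not described here.

```python
import random


def split_patients(patient_ids, n_validation, seed=0):
    """Shuffle the patient IDs and hold out n_validation of them for validation."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = list(patient_ids)
    rng.shuffle(shuffled)
    validation = shuffled[:n_validation]
    development = shuffled[n_validation:]
    return development, validation


# Hypothetical integer IDs standing in for the 13,181 + 3053 patients.
all_ids = range(13181 + 3053)
development, validation = split_patients(all_ids, n_validation=3053)

print(len(development), len(validation))
```

Splitting by patient rather than by individual measurement keeps all records of one patient on the same side of the split.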

2) Model Development

At the outset, patient-specific measurement streams were processed to compute features categorized into vital, clinical, and laboratory groups. The coefficients used in the targeted early warning score were then estimated with a supervised learning algorithm. The learning algorithm automatically selected the features that were predictive of septic shock, and its output was a model comprising the list of predictive features and their coefficients.

2.1) Estimating model coefficients

A Cox proportional hazards model was fit using the time until the onset of septic shock as the supervisory signal. The risk of shock is computed from two parts, a baseline hazard and a feature-dependent term, as in the equation given below

$$\lambda(t \mid X) = \lambda_0(t)\,\exp\left(X\beta^{T}\right)$$

where $X$ = features, $t$ = time, $\lambda_0(t)$ = time-varying baseline hazard function, and $\beta$ = regression coefficients.
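The two-part structure of the hazard can be sketched in a few lines of code. The constant baseline hazard, the two features, and the coefficient values below are hypothetical placeholders for illustration; they are not the coefficients learned by Henry et al. (2015).

```python
import math


def hazard(t, features, beta, baseline_hazard):
    """Cox model hazard: baseline hazard at time t times exp(features . beta)."""
    linear_predictor = sum(x * b for x, b in zip(features, beta))
    return baseline_hazard(t) * math.exp(linear_predictor)


def constant_baseline(t):
    """Hypothetical baseline hazard; a real one would vary with time t."""
    return 0.01


beta = [0.8, 0.5]        # hypothetical regression coefficients
patient_a = [1.0, 2.0]   # hypothetical (standardized) feature values
patient_b = [0.0, 0.0]   # reference patient with all features at zero

# The baseline cancels in the ratio, so the relative risk depends only on exp(X . beta).
hazard_ratio = (
    hazard(5.0, patient_a, beta, constant_baseline)
    / hazard(5.0, patient_b, beta, constant_baseline)
)
print(hazard_ratio)
```

The ratio shows why the model is called "proportional": changing the features scales the hazard by the same factor at every time point.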

A limitation of this model arose in the form of unknown or censored event times. A multiple imputation-based approach was therefore used to estimate the parameters of the Cox proportional hazards model, as it was easy to implement.

From each of the N copies of the development data set, a separate model was trained. The regularization parameter was found to be 0.01 using 10-fold cross-validation on the first sampled data set and was fixed to this value for training the subsequent models.

Finally, Rubin’s equations were applied to combine resulting predictions to compute the final risk value as the average of risk values from the output of each of the N models.
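The final combination step described above, averaging the risk values produced by the N models, reduces to a few lines. The five risk values are made up for illustration, and the function name `pooled_risk` is hypothetical.

```python
def pooled_risk(risks_per_model):
    """Average the risk values predicted by the N separately trained models."""
    return sum(risks_per_model) / len(risks_per_model)


# Hypothetical risk values for one patient from N = 5 models,
# each trained on a different imputed copy of the development set.
risks = [0.30, 0.34, 0.32, 0.36, 0.28]

final_risk = pooled_risk(risks)
print(round(final_risk, 2))
```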

3) Model Evaluation

Model coefficients obtained from the development set were fixed and applied to patients in the validation set as though they

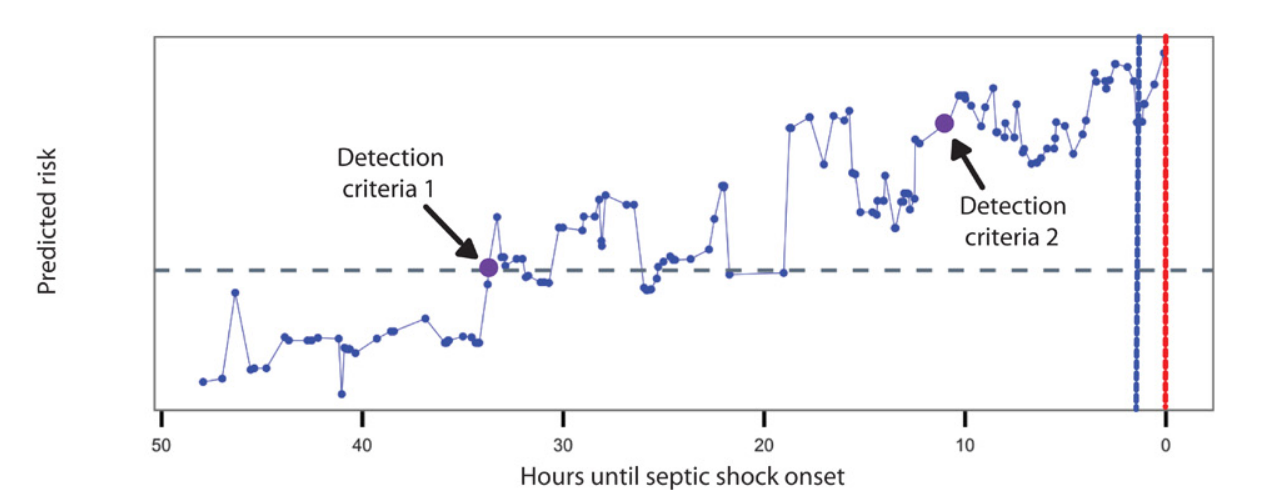

were observed prospectively. With the availability of new data, the TREWScore was recomputed for each patient in the validation set resulting in a point in time risk for septic shock for each individual. For a fixed risk threshold, an individual was identified as being at high risk of septic shock if his or her risk trajectory ever rose above the detection threshold before the onset of septic shock, and sensitivity and specificity were calculated.
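The detection rule and the two evaluation metrics can be sketched as follows. The four risk trajectories, the threshold of 0.5, and the use of a list index to mark shock onset are all hypothetical choices for illustration, not values from the study.

```python
def flagged_before_onset(trajectory, threshold, onset_index=None):
    """True if the risk ever rose above the threshold before onset
    (or at any time, for patients who never developed septic shock)."""
    observed = trajectory if onset_index is None else trajectory[:onset_index]
    return any(risk > threshold for risk in observed)


def sensitivity_specificity(patients, threshold):
    """patients: list of (risk trajectory, onset index or None) pairs.
    Assumes at least one positive and one negative patient."""
    tp = fn = tn = fp = 0
    for trajectory, onset_index in patients:
        flagged = flagged_before_onset(trajectory, threshold, onset_index)
        if onset_index is not None:  # patient developed septic shock
            tp += flagged
            fn += not flagged
        else:                        # patient never developed septic shock
            fp += flagged
            tn += not flagged
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical risk trajectories (one value per time step).
patients = [
    ([0.1, 0.3, 0.7, 0.9], 3),   # shock at step 3, flagged at step 2: true positive
    ([0.1, 0.2, 0.3, 0.8], 3),   # shock at step 3, never flagged beforehand: false negative
    ([0.1, 0.2, 0.2], None),     # no shock, never flagged: true negative
    ([0.2, 0.6, 0.3], None),     # no shock, but flagged once: false positive
]

sensitivity, specificity = sensitivity_specificity(patients, threshold=0.5)
print(sensitivity, specificity)
```

Lowering the threshold flags more patients, which raises sensitivity at the cost of specificity.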

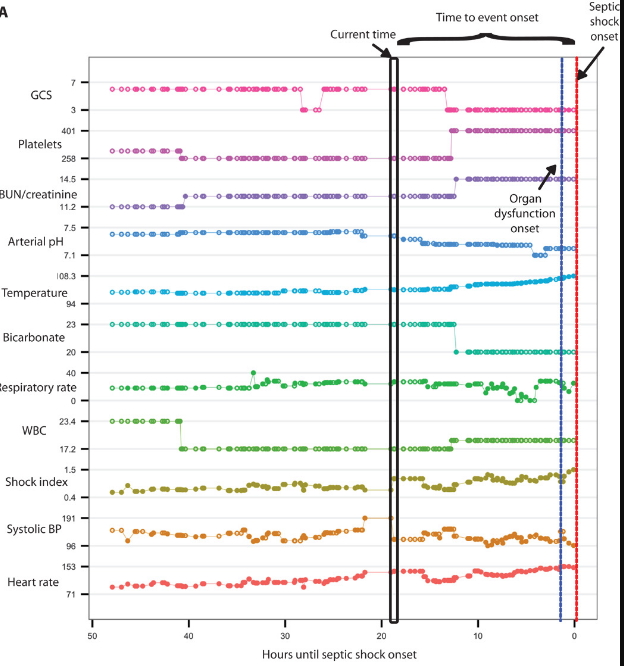

4) Results

The algorithm selected the subset of features that were most indicative of septic shock and learned a set of weights for them. The features at each time point were labeled by the time to onset, that is, the number of hours until the onset of septic shock (Fig 1), and used to generate TREWScore risk predictions over time (Fig 2).

Fig 1. Image Source: Henry, Hager, Pronovost, & Saria (2015) https://stm.sciencemag.org

Fig 2. Image Source: Henry, Hager, Pronovost, & Saria (2015) https://stm.sciencemag.org

The median lead time of over 24 hours provided by TREWS gives clinicians time to intervene before sepsis turns into septic shock.

Challenges of Deep Learning in the Medical and Healthcare world

1. Insufficient data at times results in low accuracy

2. Deep Learning models are hard to interpret, which makes them behave like a black box

3. Limitation of disease-specific data of rare diseases

4. Raw data often cannot be used directly as input for a DNN

5. DNN can be tricked easily by adding small changes to the input samples resulting in misclassification
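The last challenge, misclassification caused by small input changes, can be illustrated with a toy example. To keep it short, a hand-written linear classifier stands in for a DNN; the weights, input, and step size `epsilon` are hypothetical, and the perturbation follows the idea behind the fast gradient sign method (move each input a small step in the direction that lowers the score).

```python
def predict(x, w, b):
    """Toy linear classifier: class 1 if the weighted sum is positive, else class 0."""
    score = sum(xi * wi for xi, wi in zip(x, w)) + b
    return 1 if score > 0 else 0


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def perturb_against(x, w, epsilon):
    """Shift every input by at most epsilon in the direction that lowers the score."""
    return [xi - epsilon * sign(wi) for xi, wi in zip(x, w)]


# Hypothetical weights and input, chosen only for illustration.
w = [1.0, -2.0, 0.5]
b = 0.0
x = [0.2, 0.05, 0.1]

x_adv = perturb_against(x, w, epsilon=0.05)

print(predict(x, w, b))      # original input
print(predict(x_adv, w, b))  # slightly perturbed input
```

No input value moves by more than 0.05, yet the predicted class changes, which is the behavior that makes adversarial robustness a concern for clinical use.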

Conclusion

The Healthcare sector is transforming from paper-based records to electronic health records (EHR). This will open the floodgate of opportunities for the application of Deep Learning in this field and to overhaul the Medical and Healthcare system. This would improve patient-clinician interaction, thereby resulting in improved health outcomes in a hospital setup. Deep Learning will hold the key to the success of clinicians in the field of Medical Science in the future.

Frequently Asked Questions

Q1. How is deep learning used in healthcare?

A. Deep learning is utilized in healthcare for a wide range of applications. It aids in medical image analysis, including the detection of diseases and abnormalities in X-rays, MRIs, and CT scans. Deep learning models are employed for predictive analytics in patient outcomes, drug discovery, and personalized treatment recommendations. Natural language processing enables the extraction of relevant information from medical documents, such as electronic health records and research papers. Deep learning also plays a role in genomics research, assisting in the interpretation of genetic data for disease diagnosis and prognosis. Overall, deep learning improves diagnostics, treatment planning, and healthcare decision-making processes.

Q2. What are the challenges of deep learning in healthcare?

A. Deep learning in healthcare faces several challenges. First, the requirement for large and labeled datasets is often hindered by issues of data privacy and limited access to high-quality medical data. Interpretability and explainability of deep learning models remain critical concerns, as they need to be transparent and understandable for medical professionals. The lack of standardized protocols for model validation and regulatory approval poses obstacles for widespread adoption. Additionally, deep learning models may not generalize well across different populations or healthcare settings, requiring careful evaluation and customization. Lastly, the potential for biases in the data and models must be addressed to ensure fair and equitable healthcare outcomes.

References

1. Ashley, S. (2017). Using Artificial Intelligence to Spot Hospitals’ Silent Killer. NOVA. Retrieved from https://www.pbs.org/wgbh/nova/article/ai-sepsis-detection/

2. Cao, Y. et al. (2019). Deep Learning Methods for Cardiovascular Diseases. Journal of Artificial Intelligence and Systems. Retrieved from https://iecscience.org/journals/AIS

3. Gong, J. et al. (2021). Using deep learning to identify the recurrent laryngeal nerve during thyroidectomy. Scientific Reports. Retrieved from www.nature.com/scientificreports

4. Henry, K. E., Hager, D. N., Pronovost, P. J., & Saria, S. (2015). A targeted real-time early warning score (TREWScore) for septic shock. Science Translational Medicine. Retrieved from https://stm.sciencemag.org

5. Ravi, D. et al. (2017). Deep Learning for Health Informatics. IEEE Journal of Biomedical and Health Informatics. Retrieved from https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=7801947

6. Richards, S. (2019). Early Warning Algorithm Targeting Sepsis Deployed at Johns Hopkins. Johns Hopkins Medicine. Retrieved from https://www.hopkinsmedicine.org/news/articles/early-warning-algorithm-targeting-sepsis-deployed-at-johns-hopkins

7. https://artemia.com/blog_post/ai-trends-in-modern-healthcare/

The media shown in this article are not owned by Analytics Vidhya and are used at the Author’s discretion.