I wasn’t at CES, and being completely honest, I wasn’t paying an enormous amount of attention to the show.

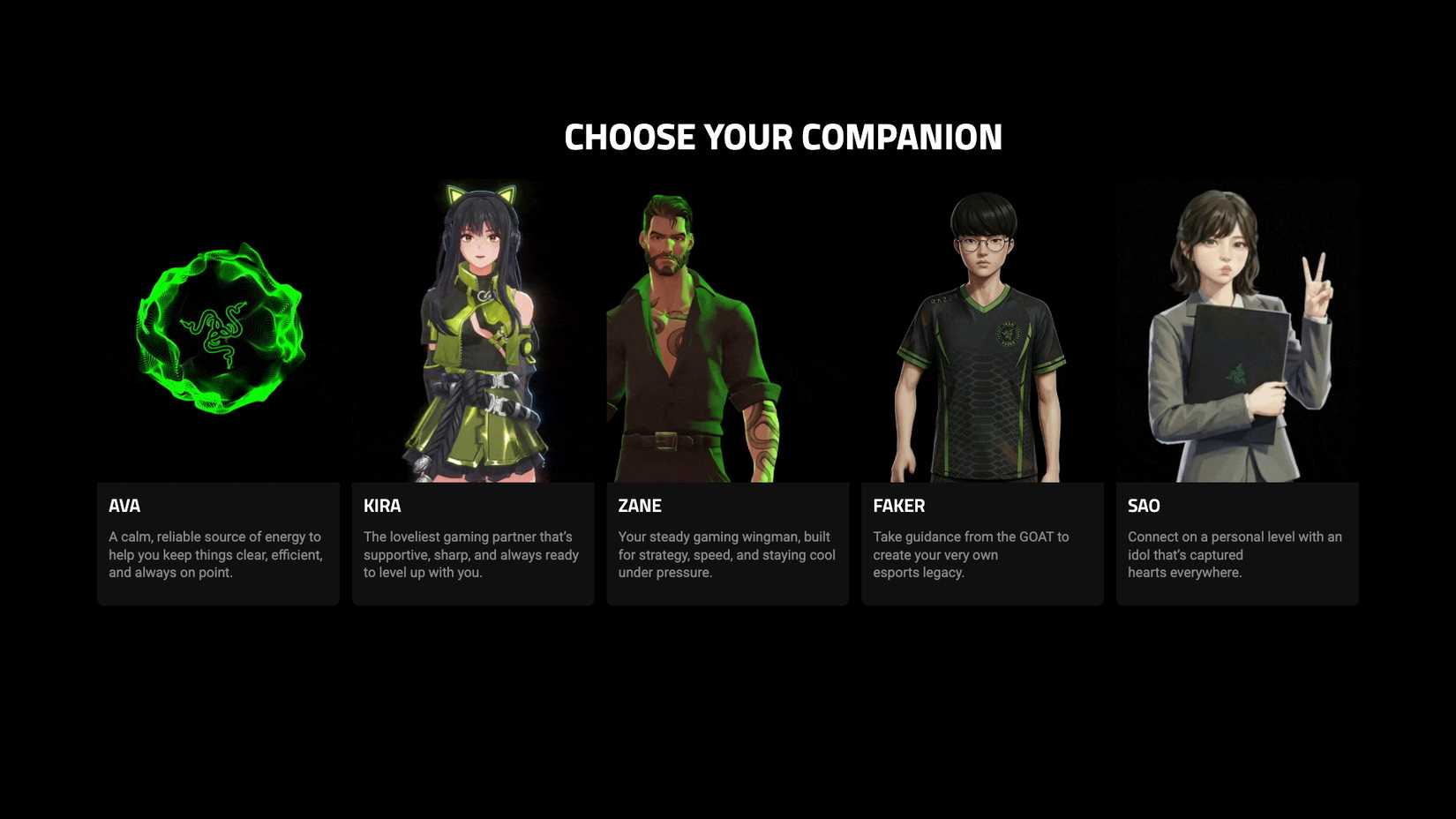

But even I saw Razer’s Project Ava and the storm that followed it. I’ve seen the opinions and the comments, and I agree with some of them.

I get the immediate uncanny-valley revulsion, and I get the idea that AI companions like this are a bit weird. They’re a bit cringe. They’re 100% not for me.

But just because they’re not for me doesn’t mean they’re not for anyone.

Some people will really appreciate Project Ava, and plenty of people are using AI companions to scratch that social itch.

But that just throws up a larger issue for me, and I feel like it’s one that people aren’t talking about.

AI companions should be allowed to exist

I know relatively few people are actually making this point, but it’s worth underlining exactly why AI companions should be allowed to exist.

Ultimately, there are people who would benefit from having them around. There are lonely people who struggle to make friends.

Maybe they have anxiety, and the faceless nature of AI and lack of pressure helps to ease them into the experience.

Perhaps they’ve simply ended up somewhere in their lives where they’re alone through no fault of their own.

Whatever the reason, an AI “person” can help to bridge that gap.

You can argue it’s not “real,” but I would argue it’s real if it helps. Human pack instincts are strong.

My robot vacuum cleaner is called Esther because she gets stuck a lot and pesters us about it. She’s Esther the Pester.

She’s not alive, she has no feelings, and I know that. But it doesn’t stop me from wishing her a good morning and giving her a pat.

Why do I do this? Because, on some level, it makes me feel better to do so.

It gives me a sense of connection, and I enjoy it, even though I live with other people I connect with every day. These connections have value.

Which is why it’s so tragic that they can be taken away just like that.

Your AI friends can be taken away at a moment’s notice

Your AI friends are not real, even if the emotions you feel towards them are. They’re ephemeral creations, a puff of smoke, a mere whisper on the wind.

They’re fragile and can be taken away in a moment, without your knowledge or forewarning.

If a CEO decides their company could save money by shutting down your AI companion, you can be sure they’ll do it. And your friend and companion will be gone, perhaps forever.

We’ve seen this happen over and over again with a number of services, from video games to heating systems.

If they’re willing to turn people’s thermostats into bricks, you can be sure it’ll happen to your little AI buddy as well. It is, unfortunately, just a matter of time.

And if they’re not shut down, you can still be sure they’ll be patched, which comes with its own issues.

Remember all of those people who lost their “AI husbands” when ChatGPT switched from GPT-4o to GPT-5? People noticed that the underlying software was different, and they noticed it hard.

The point here is that your AI companion can change overnight, or even be shut off, without you being able to do anything about it.

If these products are being sold as companions, this doesn’t feel like it should be allowed.

If these are to truly be sold as companions, there needs to be protection

It doesn’t feel right that companies should be the ultimate arbiters of your friendships.

Companies, despite what US law says, are not people, and they do not think like people.

A person employed to help out at your home may choose to help out a little longer than their employment requires. Heck, they may become a friend and visit you even after employment has ceased.

Companies do not do that, and left to them, your AI buddies won’t either. The moment an AI companion no longer makes economic sense, it’s gone.

You can make the argument that people shouldn’t be reliant on AI companions, and maybe you’re right. But the world isn’t that simple, and lives are messy.

Sometimes people need crutches, whether physical or emotional — and we shouldn’t judge people who’ve found themselves in that position.

Self-hosting an AI model can help fix this, but that’s a rather technical solution for most people.

Ultimately, I believe there should be ways to protect these companions from just being removed. And maybe the future will come up with solutions.

But right now, it worries me that we’re allowing AI to simply rampage through people’s lives with few restrictions.

AI needs to step lightly when it comes to people’s lives, and at the moment, I’m not seeing any indication that’s going to happen.