We rarely think about the environmental impact of a Google search. Yet every query to Google (or any other search engine) draws on energy-hungry data centers and servers. A single search isn’t destroying the planet, but billions of users running searches add up to a sizable footprint.

But where does that leave AI? Google Gemini powers the AI Overviews embedded in Google search, so it wouldn’t be surprising if it contributed to greenhouse gas emissions. In fact, sending a prompt to an AI chatbot can have a greater environmental impact than a Google search. So how much greater is it, and is cutting back realistic? Using AI on your smartphone, smart home devices, security cameras, and thermostat may hurt the environment, and we dive deeper into why that is.

Training AI already uses up a lot of energy

It’s a demanding process that needs to be sustained

According to The Uneven Distribution of AI’s Environmental Impacts (2024), published in HBR, training AI models can consume thousands of megawatt-hours of electricity and emit hundreds of tons of carbon. The publication notes that this is equivalent to the annual carbon emissions of hundreds of American households. Training AI models can also deplete freshwater resources. I fully expect the environmental cost to rise as more complex models and a growing field of competitors demand ever more computational resources.

AI is housed in energy-hungry data centers

Most large-scale AI deployments are housed in data centers, including those operated by cloud service providers. Unfortunately, data centers are resource-hungry to build and operate. For one, manufacturing a 2 kg computer of the kind housed in these data centers requires around 800 kg of raw materials (and that does not account for the microchips that power the AI). For another, there is construction. Water is in high demand while a data center is being built, and it remains crucial during operation, where it keeps electronic components cool and functioning properly. Water is a precious, limited resource, and continuing to spend it on building and running these costly data centers will only hurt the environment.

Additionally, data centers generate a substantial amount of electronic waste, which often contains hazardous substances such as mercury and lead. Mercury pollution is toxic to wildlife and can single-handedly destroy ecosystems. Lead is disruptive too, causing neurological damage in animals and stunting plant growth. The damage is difficult to reverse once hazardous substances have been released into the environment. As someone who previously worked with lead-based compounds on a much smaller scale, I know how much of a hassle it is to dispose of them properly, and I can’t imagine the challenges of doing it at the macro level.

Hosting AI technology requires a lot of energy

It’s not always coming from renewable sources

To keep everything operational, you need to power the data center, all the operational equipment, and the AI. Powering everything requires a lot of energy. Sadly, this often isn’t from sustainable sources. Instead, it usually comes from the burning of fossil fuels (coal, oil, gas).

Fossil fuels are a significant contributor to greenhouse gas emissions, accounting for approximately 75% of the global total. These emissions are also a primary driver of global climate change, because greenhouse gases trap the sun’s heat. Unfortunately (as much as it pains me to admit), we are still far from having data centers that can be powered solely by renewable energy sources.

Supplying the hardware for AI also hurts the environment

Manufacturing AI-capable hardware inevitably consumes a significant amount of energy. Extracting the metals needed for production is very energy-intensive on its own. In layman’s terms, making new tech that uses AI is not cheap in energy terms. The cycle is also driven by the market: as long as people keep buying AI-powered devices, companies are likely to keep producing them. Production is therefore unlikely to slow down anytime soon.

AI activity is harmful to the environment

Frequently using AI is also quite costly

Creating electronics, training AI, and leveraging AI processes all have a chain effect on energy consumption. According to Earth.org, electronics contribute significantly to emissions due to activities such as web surfing, social media use, streaming, and video conferencing. The website states that these activities can account for up to 40% of the per capita carbon budget.

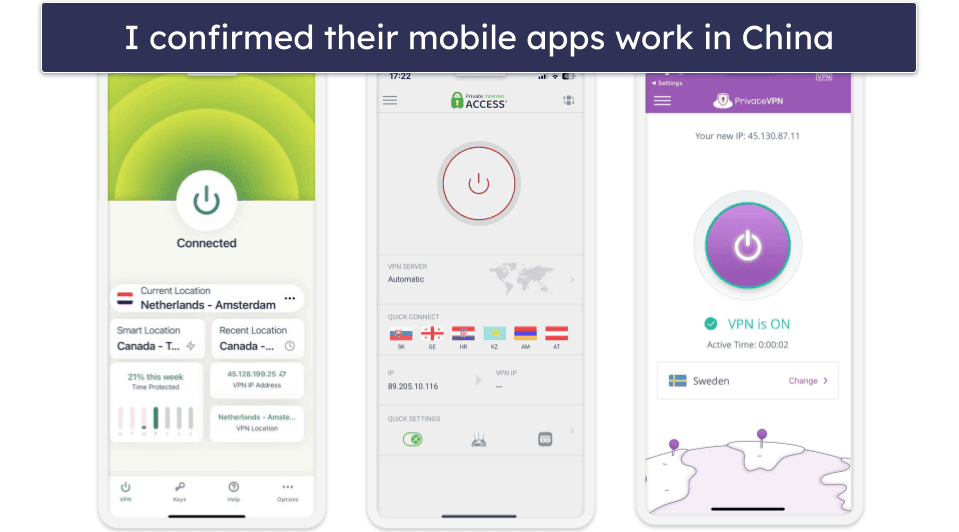

The UN Environment Programme also reports that a request made through ChatGPT consumes 10 times the electricity of a Google search (based on the International Energy Agency’s findings). That’s a far bigger gap than you might expect. It likely comes down to factors like housing the data center and high operational costs, since large language models require significantly more computational power to run than Google search.
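To get a feel for how that 10x ratio scales, here is a minimal Python sketch. The per-search energy value and the request count are assumed placeholders for illustration, not figures from the reports cited above, and the function name is hypothetical. Only the multiplier of 10 comes from the text.

```python
def extra_energy_wh(search_wh: float, multiplier: float, requests: int) -> float:
    """Extra energy (in Wh) used when `requests` go to a chatbot instead of a search engine."""
    chatbot_wh = search_wh * multiplier          # energy per chatbot request
    return (chatbot_wh - search_wh) * requests   # difference, scaled by volume


# Assumed placeholder: energy per search in watt-hours. Substitute a measured value.
SEARCH_WH = 0.3
# The "10 times" ratio reported above.
MULTIPLIER = 10

extra = extra_energy_wh(SEARCH_WH, MULTIPLIER, requests=1_000_000)
print(f"Extra energy per million requests: {extra / 1000:.0f} kWh")
```

Whatever baseline you plug in, the extra energy is always nine times the baseline per request, so the gap grows linearly with the number of prompts sent.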

The AI Overviews embedded in Google search are estimated to consume 30 times as much energy to generate text as it would take to extract that text directly from a source. The feature is not as energy-hungry as it was when it first launched, though. A Google spokesperson told Scientific American that

“machine costs associated with [generative] AI responses have decreased 80 percent from when [the feature was] first introduced in [Google’s feature incubator] Labs, driven by hardware, engineering and technical breakthroughs.”

It’s better than it was, but it’s still not great. Given the number of users who interact with AI Overviews, the cost adds up. AI Overviews are also very tricky (and nearly impossible without some workaround) to turn off directly. The best we can do to avoid them is to use an alternative to Google search.
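The arithmetic behind "better, but it adds up" can be sketched in a few lines of Python. This assumes the quoted 80 percent decrease applies evenly to every response and measures cost in a hypothetical relative unit (1.0 is the cost of one response at launch). The response counts are illustrative, not usage data.

```python
def remaining_fraction(percent_decrease: float) -> float:
    """Fraction of the original per-response cost left after a percentage decrease."""
    return 1.0 - percent_decrease / 100.0


def total_cost(cost_per_response: float, responses: int) -> float:
    """Total cost of serving `responses` at a fixed cost per response."""
    return cost_per_response * responses


LAUNCH_COST = 1.0  # hypothetical relative unit: one response at launch
current_cost = LAUNCH_COST * remaining_fraction(80)  # the quoted 80 percent decrease

# Illustrative volumes only, chosen to show how the total scales.
for responses in (1_000, 1_000_000, 1_000_000_000):
    total = total_cost(current_cost, responses)
    print(f"{responses:>13,} responses -> {total:,.0f} launch-cost units")
```

An 80 percent cut leaves one fifth of the launch cost per response, so once usage grows more than fivefold the total is back above where it started.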

Reducing our carbon footprint in tech

Unfortunately, we find ourselves in a difficult situation. As someone who is environmentally conscious, I’ve felt a lack of control over what I can do to reduce my carbon footprint. I can’t directly control the manufacturing process for new hardware, and I rely heavily on internet activity to stay up to date on tech news and breakthroughs.

I’ve also experimented with AI chatbot prompts on numerous occasions to understand the importance of AI and how it works. But that comes with a guilty conscience, knowing that we still rely on non-renewable energy to power data centers. The ugly truth is that there is not much we can do about it except limit our digital activities and hope that AI becomes more energy-efficient as models improve.