Hey folks! In this blog post, we’re going to look at how to extract website URLs, emails, files and accounts using the Photon crawler. Photon is an incredibly fast web crawler written in Python that extracts URLs, emails, files, website accounts and much more from a target.

Photon can handle 160 requests per second, and extensive data extraction is just another day for Photon! The project is under heavy development, and updates that fix bugs, optimize performance and add new features are rolled out every day.

Photon is able to extract the following types of data while crawling:

- URLs, both in-scope and out-of-scope, as well as URLs with parameters (example.com/gallery.php?id=2)

- JavaScript files and the endpoints present in them

- Strings matching a custom regex pattern

- Intel, e.g. emails, social media accounts, Amazon buckets etc.

- Files: pdf, png, xml etc.

- Subdomains and DNS-related data

- Secret keys (auth/API keys and hashes)
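Photon's own implementation isn't shown in this post, but the idea behind in-scope, out-of-scope and fuzzable URLs is easy to illustrate. The following is a minimal Python sketch, assuming a naive regex-based extractor (a real crawler would use an HTML parser); the helper names `classify_links` and `extract_emails` and both regex patterns are hypothetical, not Photon's API.

```python
import re
from urllib.parse import urljoin, urlparse

# Illustrative patterns only; these are assumptions, not Photon's own regexes.
HREF_RE = re.compile(r"""href=["']([^"']+)["']""", re.IGNORECASE)
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def classify_links(page_url, html):
    """Sort links on one page into in-scope, out-of-scope and fuzzable sets."""
    root_host = urlparse(page_url).netloc
    in_scope, out_of_scope, fuzzable = set(), set(), set()
    for href in HREF_RE.findall(html):
        absolute = urljoin(page_url, href)  # resolve relative links
        parsed = urlparse(absolute)
        if parsed.scheme not in ("http", "https"):
            continue  # skip mailto:, javascript: and similar links
        if parsed.netloc == root_host:
            in_scope.add(absolute)
            if parsed.query:  # has parameters, e.g. gallery.php?id=2
                fuzzable.add(absolute)
        else:
            out_of_scope.add(absolute)
    return in_scope, out_of_scope, fuzzable


def extract_emails(html):
    """Return the unique strings matching the (hypothetical) email pattern."""
    return sorted(set(EMAIL_RE.findall(html)))


if __name__ == "__main__":
    page = "http://example.com/index.html"
    html = (
        '<a href="/gallery.php?id=2">Gallery</a>'
        '<a href="about.html">About</a>'
        '<a href="https://twitter.com/example">Twitter</a>'
        "Contact: admin@example.com"
    )
    in_scope, out_of_scope, fuzzable = classify_links(page, html)
    print(sorted(in_scope))
    print(sorted(out_of_scope))
    print(sorted(fuzzable))
    print(extract_emails(html))
```

The same pattern-matching approach generalizes to the custom regex feature: any compiled pattern can be swapped in for `EMAIL_RE`.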

The data extracted by Photon is saved in an organized manner, with one text file per type of data. For example, after crawling geeksforgeeks.org:

$ ls -1 geeksforgeeks.org

endpoints.txt

external.txt

files.txt

fuzzable.txt

intel.txt

links.txt

scripts.txt

All files are saved as text for easy reading.
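Because every result file is plain text, a quick summary of a crawl is easy to script. Below is a minimal Python sketch, assuming each `.txt` file holds one entry per line (an assumption about the format, not something documented here); `summarize_output` is a hypothetical helper name.

```python
import sys
from pathlib import Path


def summarize_output(directory):
    """Return {file name: number of non-empty lines} for each .txt file."""
    counts = {}
    for path in sorted(Path(directory).glob("*.txt")):
        # errors="replace" keeps odd bytes in crawled data from crashing the count
        with path.open(encoding="utf-8", errors="replace") as handle:
            counts[path.name] = sum(1 for line in handle if line.strip())
    return counts


if __name__ == "__main__":
    # Directory name defaults to the example crawl above; pass another as argv[1].
    target = sys.argv[1] if len(sys.argv) > 1 else "geeksforgeeks.org"
    for name, count in summarize_output(target).items():
        print(f"{name:15} {count}")
```

Saved as, say, `summarize.py` (a hypothetical file name), running `python3 summarize.py geeksforgeeks.org` prints one line per result file with its entry count.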

Install and use the Photon website crawler on Linux

The Photon project is available on GitHub; clone it by running:

$ git clone https://github.com/s0md3v/Photon.git

Cloning into 'Photon'...

remote: Counting objects: 417, done.

remote: Compressing objects: 100% (22/22), done.

remote: Total 417 (delta 20), reused 42 (delta 20), pack-reused 374

Receiving objects: 100% (417/417), 151.42 KiB | 201.00 KiB/s, done.

Resolving deltas: 100% (182/182), done.

Change to the Photon directory and make the photon.py script executable:

cd Photon

chmod +x photon.py

The help page is displayed with the --help option. The available options are:

usage: photon.py [options]

-u --url root url

-l --level levels to crawl

-t --threads number of threads

-d --delay delay between requests

-c --cookie cookie

-r --regex regex pattern

-s --seeds additional seed urls

-e --export export formatted result

-o --output specify output directory

--timeout http requests timeout

--ninja ninja mode

--update update photon

--dns dump dns data

--only-urls only extract urls

--user-agent specify user-agent(s)
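Since these are ordinary command-line flags, Photon is easy to drive from another script. The following is a minimal Python sketch, assuming photon.py sits in the current directory and has been made executable as above; the helpers `build_photon_command` and `run_photon` are hypothetical, and only a few of the listed flags are wired in.

```python
import subprocess


def build_photon_command(url, level=None, threads=None, delay=None,
                         export=None, output=None, script="./photon.py"):
    """Assemble an argument list from a subset of the options listed above."""
    cmd = [script, "-u", url]
    if level is not None:
        cmd += ["-l", str(level)]
    if threads is not None:
        cmd += ["-t", str(threads)]
    if delay is not None:
        cmd += ["-d", str(delay)]
    if export is not None:
        cmd.append(f"--export={export}")
    if output is not None:
        cmd += ["-o", output]
    return cmd


def run_photon(**kwargs):
    """Run Photon and return its exit code (needs photon.py in the cwd)."""
    return subprocess.run(build_photon_command(**kwargs), check=False).returncode


if __name__ == "__main__":
    # Only prints the command; call run_photon(...) to actually start a crawl.
    print(build_photon_command("https://github.com", level=3, threads=10, export="json"))
```

Keeping the command as an argument list rather than a single shell string avoids quoting problems with URLs that contain characters such as `&` or `?`.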

A basic usage example:

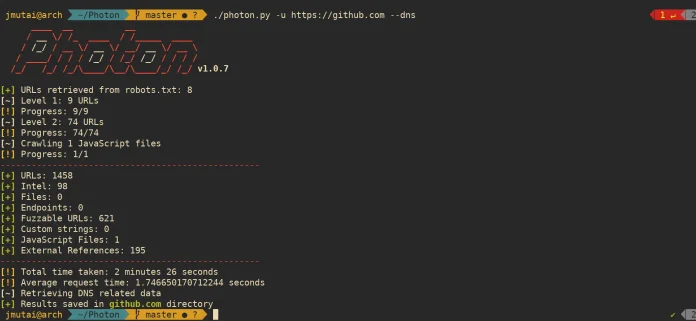

./photon.py -u https://github.comSee below screenshot:

The -u option specifies the root URL.

When the crawl is done, a directory named after the site is created, containing the output files.

To crawl with 10 threads, 3 levels deep, and export the data as JSON:

./photon.py -u https://github.com -t 10 -l 3 --export=json

The --dns option generates an image containing the DNS data of the target domain:

./photon.py -u http://example.com --dns

At present, it doesn’t work if the target is a subdomain.

Updating Photon

To update Photon, run:

./photon.py --update

Running Photon in a Docker container

Photon can be launched using a lightweight Python-Alpine (103 MB) Docker image.

cd Photon

Build the Docker image:

docker build -t photon .

Run the container:

docker run -it --name photon photon:latest -u google.com