The Azure Machine Learning team is excited to announce the public preview of Azure Arc-enabled Machine Learning for inference. This builds on our training preview and enables customers to deploy and serve models on any infrastructure, on-premises and across multiple clouds, using Kubernetes. With a simple AzureML extension deployment on a Kubernetes cluster, Kubernetes compute is seamlessly supported in Azure ML, providing service capabilities for the full ML lifecycle and automation with MLOps in a hybrid cloud.

Many enterprises have chosen the Kubernetes container platform to meet the challenges of providing compute infrastructure for machine learning. Although there are open source ML tools for Kubernetes, it takes time and effort to set up the infrastructure, deploy data science productivity tools and frameworks, and keep all of them up to date. To scale ML adoption in an organization, automation with MLOps is often required. MLOps in a hybrid cloud presents a different set of challenges, such as compatibility between on-premises and cloud environments, networking and security, and the lack of a single pane of glass to provision and maintain hybrid infrastructure.

Built on top of Azure Arc-enabled Kubernetes, which provides a single pane of glass to manage Kubernetes anywhere, Azure Arc-enabled ML inference extends Azure ML model deployment capabilities seamlessly to Kubernetes clusters, and enables customers to deploy and serve models on Kubernetes anywhere at scale. Enterprise IT or DevOps teams can deploy and manage the AzureML extension with their standard tools and ensure all Azure ML service capabilities are up to date. ML engineers can deploy and score models with an Azure ML online endpoint, deploy a model with network isolation, or run a machine learning project fully on-premises. By using a repeatable pipeline to automate CI/CD workflows in a hybrid cloud environment, data scientists and MLOps teams can continuously monitor model deployments anywhere and trigger retraining automatically to improve model performance.

How it works

It is easy to enable an existing Kubernetes cluster for Azure Arc-enabled ML inference by following the four steps below:

Connect. Follow the instructions here to connect an existing Kubernetes cluster to Azure Arc. For an Azure Kubernetes Service (AKS) cluster, connecting to Azure Arc is not needed and this step can be skipped.

Deploy. Arc-enabled ML inference supports online endpoints with a public IP or a private IP, which is configured at AzureML extension deployment time. The following CLI command deploys the AzureML extension to an Arc-connected Kubernetes cluster and enables an online endpoint with a private IP. To deploy the AzureML extension on an AKS cluster without an Azure Arc connection, change the --cluster-type parameter value to managedClusters.

    az k8s-extension create \
        --name tailwind-ml-extension \
        --extension-type Microsoft.AzureML.Kubernetes \
        --cluster-type connectedClusters \
        --cluster-name <your-connected-cluster-name> \
        --config enableInference=True privateEndpointILB=True \
        --config-protected sslCertPemFile=<path-to-the-SSL-cert-PEM-file> sslKeyPemFile=<path-to-the-SSL-key-PEM-file> \
        --resource-group <resource-group> \
        --scope cluster
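The two deployment variants described above (Arc-connected cluster versus AKS, private versus public IP) can be sketched as a small Python helper that assembles the argument list for the command. This is only an illustration: the function name `build_extension_command` and its parameters are hypothetical, the flags are copied from the command above, and the helper assumes that omitting `privateEndpointILB=True` is how a public IP endpoint is selected, which should be checked against the Azure ML documentation.

```python
import shlex

# Cluster types named in the article: Arc-connected clusters and AKS clusters.
VALID_CLUSTER_TYPES = ("connectedClusters", "managedClusters")


def build_extension_command(cluster_name, resource_group,
                            cluster_type="connectedClusters",
                            private_ip=True,
                            ssl_cert_pem="<path-to-the-SSL-cert-PEM-file>",
                            ssl_key_pem="<path-to-the-SSL-key-PEM-file>",
                            extension_name="tailwind-ml-extension"):
    """Return the argv list for the extension deployment command (hypothetical helper)."""
    if cluster_type not in VALID_CLUSTER_TYPES:
        raise ValueError(f"cluster_type must be one of {VALID_CLUSTER_TYPES}")

    config = ["enableInference=True"]
    if private_ip:
        # Assumption: leaving this setting out yields a public IP endpoint.
        config.append("privateEndpointILB=True")

    return [
        "az", "k8s-extension", "create",
        "--name", extension_name,
        "--extension-type", "Microsoft.AzureML.Kubernetes",
        "--cluster-type", cluster_type,
        "--cluster-name", cluster_name,
        "--config", *config,
        "--config-protected",
        f"sslCertPemFile={ssl_cert_pem}",
        f"sslKeyPemFile={ssl_key_pem}",
        "--resource-group", resource_group,
        "--scope", "cluster",
    ]


if __name__ == "__main__":
    # Print the AKS variant as a single shell-quoted line for review.
    print(shlex.join(build_extension_command(
        "my-aks-cluster", "my-rg", cluster_type="managedClusters")))
```

Building the command as a list rather than a string keeps each argument separate, so it can be passed to `subprocess.run` without shell quoting issues once the placeholders are replaced with real paths.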

For additional scenarios to deploy the AzureML extension for inference, please see the Azure ML documentation.

Attach. Once the AzureML extension is deployed on the Kubernetes cluster, run the following CLI command or go to the Azure ML Studio UI to attach the Kubernetes cluster to an Azure ML workspace:

    az ml compute attach \
        --type kubernetes \
        --name tailwind-compute \
        --workspace-name tailwind-ws \
        --resource-group tailwind-rg \
        --resource-id tailwind-arc-k8s \
        --namespace tailwind-ml

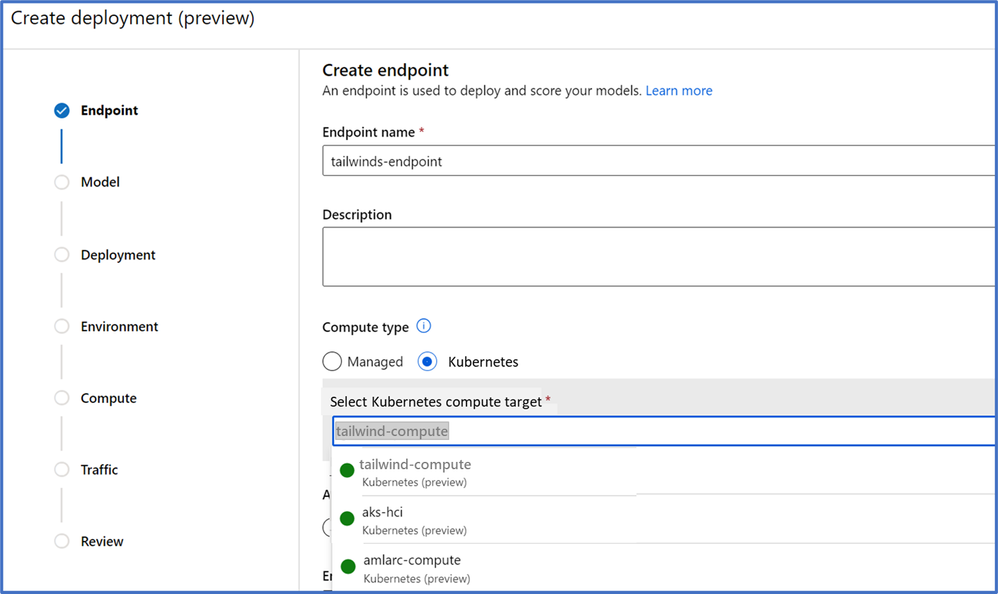
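The attach command above passes a short placeholder to `--resource-id`. Assuming the parameter expects the cluster's full Azure Resource Manager ID, a sketch like the following shows how that ID is composed for the two cluster types. The helper names `cluster_resource_id` and `build_attach_command`, and the subscription ID used in the example, are hypothetical.

```python
# Resource provider namespace for each cluster type mentioned in the article.
PROVIDERS = {
    "connectedClusters": "Microsoft.Kubernetes",        # Arc-connected cluster
    "managedClusters": "Microsoft.ContainerService",    # AKS cluster
}


def cluster_resource_id(subscription_id, resource_group, cluster_name,
                        cluster_type="connectedClusters"):
    """Compose the ARM resource ID of a Kubernetes cluster."""
    provider = PROVIDERS[cluster_type]  # KeyError for unknown cluster types
    return (f"/subscriptions/{subscription_id}"
            f"/resourceGroups/{resource_group}"
            f"/providers/{provider}/{cluster_type}/{cluster_name}")


def build_attach_command(compute_name, workspace, resource_group,
                         resource_id, namespace):
    """Return the argv list for the attach command shown in the article."""
    return [
        "az", "ml", "compute", "attach",
        "--type", "kubernetes",
        "--name", compute_name,
        "--workspace-name", workspace,
        "--resource-group", resource_group,
        "--resource-id", resource_id,
        "--namespace", namespace,
    ]


if __name__ == "__main__":
    rid = cluster_resource_id("00000000-0000-0000-0000-000000000000",
                              "tailwind-rg", "tailwind-arc-k8s")
    print(" ".join(build_attach_command(
        "tailwind-compute", "tailwind-ws", "tailwind-rg", rid, "tailwind-ml")))
```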

Use. After the above steps, the Kubernetes compute target is available in the Azure ML workspace. Users can look up Kubernetes compute target names with a CLI command or on the Studio UI “Compute -> Attached computes” page, and use a Kubernetes compute target to train or deploy models without getting into Kubernetes details. To deploy a model to the Kubernetes cluster using CLI 2.0 or the Studio Endpoint UI page, specify the Kubernetes compute target name in the endpoint YAML or on the Studio Endpoint UI page. See the examples with endpoint YAML and the Studio Endpoint UI page below:

    name: tailwind-endpoint
    compute: azureml:tailwind-compute
    auth-mode: key
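For teams that generate endpoint definitions from a script, the endpoint YAML above can be rendered with a few lines of Python. This is a minimal sketch: `endpoint_yaml` is a hypothetical helper, and the three keys are copied from the example above as written, so they should be checked against the endpoint schema of the CLI version in use.

```python
def endpoint_yaml(name, compute_target, auth_mode="key"):
    """Render the three-key endpoint definition shown in the article."""
    if not name or not compute_target:
        raise ValueError("name and compute_target are required")
    # The compute target is referenced with the "azureml:" prefix,
    # as in the article's example.
    lines = [
        f"name: {name}",
        f"compute: azureml:{compute_target}",
        f"auth-mode: {auth_mode}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Write the definition to the file name used by the CLI commands.
    with open("endpoint.yml", "w", encoding="utf-8") as handle:
        handle.write(endpoint_yaml("tailwind-endpoint", "tailwind-compute"))
```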

Azure Arc-enabled ML model deployments share the same Online Endpoint concept as Azure ML Managed Online Endpoints, and they support Azure ML built-in online endpoint capabilities such as safe rollout with blue-green deployment. Use the following sequence of CLI commands or the Studio Endpoint UI page to achieve safe rollout with blue-green deployment:

    # create endpoint
    az ml online-endpoint create --name tailwind-endpoint -f endpoint.yml

    # create blue deployment
    az ml online-deployment create --name blue --endpoint tailwind-endpoint -f blue-deployment.yml --all-traffic

    # test and scale blue deployment

    # create green deployment
    az ml online-deployment create --name green --endpoint tailwind-endpoint -f green-deployment.yml

    # test green deployment

    # assign initial traffic to green deployment
    az ml online-endpoint update --name tailwind-endpoint --traffic "blue=90 green=10"

    # test and monitor small traffic

    # assign 100% traffic to green deployment
    az ml online-endpoint update --name tailwind-endpoint --traffic "blue=0 green=100"

    # retire blue deployment
    az ml online-deployment delete --name blue --endpoint tailwind-endpoint --yes --no-wait
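The traffic-shifting part of this sequence can be planned in code before anything is run. The sketch below generates the `az ml online-endpoint update` commands for a list of green traffic percentages and checks that every split adds up to 100. The function names `traffic_arg` and `rollout_commands` are hypothetical, and the intermediate 50% step in the usage example is an illustration rather than something the article prescribes.

```python
def traffic_arg(blue, green):
    """Format a traffic split in the form used by the --traffic flag above."""
    if blue < 0 or green < 0 or blue + green != 100:
        raise ValueError("blue and green must be non-negative and sum to 100")
    return f"blue={blue} green={green}"


def rollout_commands(endpoint, green_steps=(10, 100)):
    """Return one update command (argv list) per green traffic percentage.

    Steps must increase strictly and finish at 100 so that blue can be
    retired afterwards.
    """
    commands = []
    previous = 0
    for green in green_steps:
        if green <= previous:
            raise ValueError("green_steps must be strictly increasing")
        commands.append([
            "az", "ml", "online-endpoint", "update",
            "--name", endpoint,
            "--traffic", traffic_arg(100 - green, green),
        ])
        previous = green
    if previous != 100:
        raise ValueError("the last step must send 100% of traffic to green")
    return commands


if __name__ == "__main__":
    # A three-stage rollout: 10%, then 50%, then all traffic to green.
    for command in rollout_commands("tailwind-endpoint", (10, 50, 100)):
        print(command[-1])
```

In practice each step would be followed by the testing and monitoring the comments in the CLI sequence call for, before the next command is issued.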

Take a look at a demo to see blue-green deployment in action:

Advanced model deployment capabilities

Deploy a model with network isolation. Use a private AKS cluster and configure VNET/private link to deploy a model with network isolation, or configure an on-premises Kubernetes cluster with an outbound proxy server to run secure ML on-premises. In either case, make sure the network requirements are met before deploying the AzureML extension.

Sharing a Kubernetes cluster across teams or Azure ML workspaces. At the compute attach step, a namespace can be specified for the Kubernetes compute target. This is helpful when different teams or projects share the same Kubernetes cluster. A single Kubernetes cluster can also be attached to multiple Azure ML workspaces, with one compute target created per workspace, so that the workspaces share the cluster's compute resources.
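As an illustration of this sharing model, the sketch below produces one attach command per team, each with its own workspace, compute target name, and namespace on the same cluster. The names `Attachment` and `attach_commands`, the team and workspace names, and the simplification that all workspaces live in one resource group are assumptions made for the example; the flags are those of the attach command shown earlier.

```python
from collections import namedtuple

# One compute target to create: which workspace, what name, which namespace.
Attachment = namedtuple("Attachment", "workspace compute_name namespace")


def attach_commands(cluster_resource_id, resource_group, attachments):
    """Return one `az ml compute attach` argv list per attachment.

    Assumes every workspace is in `resource_group` (a simplification).
    """
    seen = set()
    commands = []
    for item in attachments:
        key = (item.workspace, item.compute_name)
        if key in seen:
            raise ValueError(f"duplicate compute target {key}")
        seen.add(key)
        commands.append([
            "az", "ml", "compute", "attach",
            "--type", "kubernetes",
            "--name", item.compute_name,
            "--workspace-name", item.workspace,
            "--resource-group", resource_group,
            "--resource-id", cluster_resource_id,
            "--namespace", item.namespace,
        ])
    return commands


if __name__ == "__main__":
    plan = [
        Attachment("vision-ws", "shared-k8s", "team-vision"),
        Attachment("nlp-ws", "shared-k8s", "team-nlp"),
    ]
    for command in attach_commands("tailwind-arc-k8s", "tailwind-rg", plan):
        print(" ".join(command))
```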

Targeting nodes for model deployment. To target model deployment on specific nodes, for example a CPU pool versus a GPU pool, create an instance type CRD and specify that instance type in the deployment YAML file. For details, check out the documentation here.

Automation with MLOps in a hybrid cloud. With Arc-enabled ML, organizations can deliver on hybrid MLOps by using a mix of Azure-managed compute and customer-managed Kubernetes anywhere. For example, you can train a model using powerful Azure CPUs and GPUs, and deploy the model on-premises to meet data security and latency requirements. Or you can train a model on-premises to comply with data sovereignty requirements, and then deploy and use the model in the cloud for broad application access. For a complete MLOps demo using AKS on Azure Stack HCI as the on-premises Kubernetes solution, please visit the Microsoft Ignite session here.

Get started today

To get started with Azure Arc-enabled ML for inference, please visit the Azure Arc-enabled ML documentation and GitHub repo, where you can find detailed instructions to deploy the AzureML extension on Kubernetes, and examples that use the Kubernetes compute target in Azure ML workspaces.

Article originally posted here. Reposted with permission.