Introduction

Time-series forecasting is a crucial task in various domains, including finance, sales, and energy demand. Accurate forecasting allows businesses to make informed decisions, optimize resources, and plan for the future effectively. In recent years, the XGBoost algorithm has gained popularity for its exceptional performance in time-series forecasting tasks. This article explores the power of XGBoost in time-series forecasting, its advantages, and how to effectively utilize it for accurate predictions.

Table of contents

- Importance of Accurate Time-Series Forecasting

- What is XGBoost?

- Advantages of XGBoost for Time-Series Forecasting

- Preparing Data for Time-Series Forecasting with XGBoost

- Building and Training an XGBoost Model for Time-Series Forecasting

- Advanced Techniques for Time-Series Forecasting with XGBoost

- Best Practices and Tips for Successful Time-Series Forecasting with XGBoost

- Limitations and Challenges of XGBoost for Time-Series Forecasting

- Frequently Asked Questions

Importance of Accurate Time-Series Forecasting

Accurate time-series forecasting is essential for businesses to make informed decisions and plan for the future. It enables organizations to optimize inventory management, predict customer demand, and allocate resources effectively. For example, in the retail industry, accurate sales forecasting helps in determining the optimal stock levels, reducing wastage, and maximizing profits. Similarly, in the energy sector, accurate demand forecasting allows for efficient resource allocation and grid management. Therefore, accurate time-series forecasting is crucial for businesses to stay competitive and thrive in today’s dynamic market.


What is XGBoost?

XGBoost, short for Extreme Gradient Boosting, is a machine learning algorithm used for many predictive modeling tasks, including time-series forecasting. It is an ensemble method that builds decision trees sequentially, with each new tree trained to correct the errors of the trees before it, and combines their predictions into a strong predictive model. XGBoost is known for its scalability, speed, and ability to handle complex relationships in the data.
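To make the idea of combining weak models concrete, here is a minimal sketch of plain gradient boosting with one-split trees (stumps) on a toy curve. It is not XGBoost's actual algorithm, which adds second-order gradients, regularization, and many optimizations; the function names and the synthetic data are assumptions made for illustration.

```python
import numpy as np

def fit_stump(x, residual):
    """Find the single threshold on x that best fits the residual (squared error)."""
    best = None
    for threshold in np.unique(x)[:-1]:  # skip the max so the right side is never empty
        left = residual[x <= threshold]
        right = residual[x > threshold]
        error = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or error < best[0]:
            best = (error, threshold, left.mean(), right.mean())
    _, threshold, left_value, right_value = best
    return threshold, left_value, right_value

def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return np.where(x <= threshold, left_value, right_value)

def boost(x, y, n_rounds=50, learning_rate=0.1):
    """Each round fits a stump to the current residuals and adds a shrunken copy of it."""
    base = y.mean()
    prediction = np.full(len(y), base)
    stumps = []
    for _ in range(n_rounds):
        stump = fit_stump(x, y - prediction)
        prediction = prediction + learning_rate * predict_stump(stump, x)
        stumps.append(stump)
    return base, stumps, prediction

# Toy data: a noisy sine curve
rng = np.random.default_rng(0)
x = np.linspace(0, 6, 200)
y = np.sin(x) + rng.normal(scale=0.1, size=x.size)

base, stumps, fitted = boost(x, y)
mse_constant = np.mean((y - base) ** 2)
mse_boosted = np.mean((y - fitted) ** 2)
print(f"MSE of constant model: {mse_constant:.3f}, after boosting: {mse_boosted:.3f}")
```

Each stump on its own is a very weak predictor, yet the training error falls steadily as more of them are added, which is the core mechanism behind boosted tree ensembles.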

Advantages of XGBoost for Time-Series Forecasting

XGBoost offers several advantages that make it an excellent choice for time-series forecasting:

- Handling Non-Linear Relationships: XGBoost can capture complex non-linear relationships between input features and the target variable, making it suitable for time-series data with intricate patterns.

- Feature Importance: XGBoost provides insights into the importance of different features, allowing analysts to identify the most influential factors in the time-series data.

- Regularization: XGBoost incorporates regularization techniques to prevent overfitting, ensuring that the model generalizes well to unseen data.

- Handling Missing Values and Outliers: XGBoost handles missing feature values natively and, as a tree-based method, is relatively robust to extreme feature values, reducing the need for extensive data preprocessing.

Preparing Data for Time-Series Forecasting with XGBoost

Step 1: Data Cleaning and Preprocessing

Before applying XGBoost to time-series data, it is essential to clean and preprocess the data. This involves handling missing values, removing outliers, and ensuring the data is in the correct format. For example, if the time-series data has irregular time intervals, it must be resampled onto a consistent time interval.
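As an illustration of the resampling step, the sketch below puts a handful of irregular readings onto a regular hourly grid with pandas and fills the empty slot by time-based interpolation. The timestamps, values, and the choice of an hourly grid are assumptions made for the example.

```python
import pandas as pd

# Hypothetical irregular readings: nothing recorded during the 02:00 hour,
# and two readings during the 03:00 hour
raw = pd.DataFrame(
    {"target": [10.0, 12.0, 16.0, 18.0, 20.0]},
    index=pd.to_datetime([
        "2024-01-01 00:05",
        "2024-01-01 01:10",
        "2024-01-01 03:02",
        "2024-01-01 03:40",
        "2024-01-01 04:15",
    ]),
)

# Aggregate onto a regular hourly grid; hours without readings become NaN
hourly = raw.resample("h").mean()

# Fill the gaps by interpolating linearly in time
hourly["target"] = hourly["target"].interpolate(method="time")
print(hourly)
```

Averaging within each hour is only one possible aggregation; sums, last values, or medians may suit other kinds of data better.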


Step 2: Feature Engineering for Time-Series Data

Feature engineering plays a crucial role in time-series forecasting with XGBoost. It involves creating relevant features from the raw data that capture the underlying patterns and trends. Some common techniques include lag features (using past values as predictors), rolling statistics (e.g., moving averages), and Fourier transformations to capture seasonality.
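One way to turn a known seasonal period into model inputs is to add sine and cosine terms at that period and its harmonics. The sketch below assumes evenly spaced rows and a period expressed in number of rows; the helper name add_fourier_terms and the column naming are hypothetical.

```python
import numpy as np
import pandas as pd

def add_fourier_terms(data, period, order=2):
    """Add sin/cos pairs for the first `order` harmonics of a seasonal period (in rows)."""
    data = data.copy()
    t = np.arange(len(data))
    for k in range(1, order + 1):
        data[f"sin_{period}_{k}"] = np.sin(2 * np.pi * k * t / period)
        data[f"cos_{period}_{k}"] = np.cos(2 * np.pi * k * t / period)
    return data

# Two days of hourly rows with a daily (24-row) cycle
example = pd.DataFrame({"target": np.arange(48, dtype=float)})
features = add_fourier_terms(example, period=24, order=2)
print(features.columns.tolist())
```

Because these columns depend only on the position in the cycle, they can be computed for future time steps as well, which makes them usable at forecast time.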

Lag Features

Lag features involve incorporating past values of the target variable as predictors. The create_lag_features function in the provided code generates lag features up to a specified number of time steps (lag_steps). This technique allows the model to capture temporal dependencies and historical trends within the time-series data.

# Creating lag features for time-series data
def create_lag_features(data, lag_steps=1):
    data = data.copy()  # avoid modifying the caller's DataFrame in place
    for i in range(1, lag_steps + 1):
        # lag_i holds the target value from i time steps earlier
        data[f'lag_{i}'] = data['target'].shift(i)
    return data

# Applying lag feature creation to the dataset
lagged_data = create_lag_features(original_data, lag_steps=3)

Rolling Mean

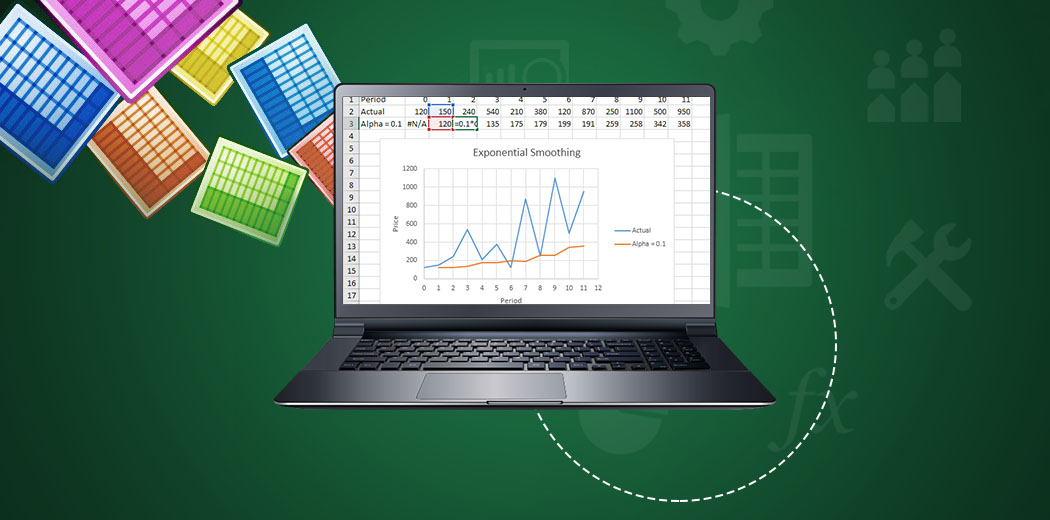

The rolling mean smooths time-series data by averaging over a specified window of observations. The create_rolling_mean function creates a new feature, ‘rolling_mean’, by computing the mean of the target variable over a user-defined window size. This reduces noise and short-term fluctuations so that trends stand out. When the feature is used to predict the current target, the window should cover only earlier observations; otherwise the feature leaks the value being predicted.

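# Creating rolling mean for time-series data
def create_rolling_mean(data, window_size=3):
    data = data.copy()  # avoid modifying the caller's DataFrame in place
    # shift(1) keeps the current target value out of its own feature window
    data['rolling_mean'] = data['target'].shift(1).rolling(window=window_size).mean()
    return data

# Applying rolling mean to the dataset
rolled_data = create_rolling_mean(original_data, window_size=5)

Fourier Transformation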

Fourier transformation is applied to capture periodic components or seasonality within time-series data. The apply_fourier_transform function uses the Fast Fourier Transform (FFT) to convert the target variable values into the frequency domain. The resulting ‘fourier_transform’ column holds the amplitude of each frequency component, which helps identify dominant cycles in the series. Note that row k of this column describes frequency bin k of the whole series rather than the observation at time step k, so it is better suited to finding seasonal periods than to serving directly as a per-row predictor.

# Applying Fourier transformation for capturing seasonality
import numpy as np
from scipy.fft import fft

def apply_fourier_transform(data):
    data = data.copy()  # avoid modifying the caller's DataFrame in place
    values = data['target'].values
    fourier_transform = fft(values)
    # Keep the magnitude of each complex frequency component
    data['fourier_transform'] = np.abs(fourier_transform)
    return data

# Applying Fourier transformation to the dataset
fourier_data = apply_fourier_transform(original_data)

Step 3: Handling Missing Values and Outliers

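XGBoost handles missing feature values natively: at each split it learns a default direction for rows where the feature is missing. Explicit imputation, for example by interpolation or mean imputation, is still useful when gaps would otherwise distort lag or rolling features. Tree splits are fairly insensitive to extreme feature values, but outliers in the target can still dominate a squared-error objective, so they are worth detecting and treating with robust statistical methods or by transforming the data. Handling both issues carefully leads to more accurate forecasts.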
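A minimal sketch of one such treatment is shown below: gaps are filled by linear interpolation and extreme values are clipped to bounds derived from the interquartile range (IQR). The helper name impute_and_clip, the 1.5 factor, and the sample values are assumptions for illustration. In a real forecasting setup the bounds would typically be computed on the training portion only.

```python
import numpy as np
import pandas as pd

def impute_and_clip(series, iqr_factor=1.5):
    """Interpolate missing values, then clip values outside the IQR-based bounds."""
    filled = series.interpolate(limit_direction="both")
    q1, q3 = filled.quantile(0.25), filled.quantile(0.75)
    iqr = q3 - q1
    lower, upper = q1 - iqr_factor * iqr, q3 + iqr_factor * iqr
    return filled.clip(lower=lower, upper=upper)

# Hypothetical series with one gap and one spike
series = pd.Series([10.0, 11.0, np.nan, 13.0, 12.0, 95.0, 11.0, 12.0])
cleaned = impute_and_clip(series)
print(cleaned.tolist())
```

Clipping keeps the row in the dataset while limiting its influence, which is often preferable to deleting observations from a time series and creating new gaps.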

Building and Training an XGBoost Model for Time-Series Forecasting

Step 1: Splitting the Data into Training and Testing Sets

To assess the performance of the XGBoost model, one must partition the time-series data into training and testing sets. The training set facilitates model training, and the testing set enables the evaluation of its performance on unseen data. Preserving the temporal order of observations is crucial when splitting the data.

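# Splitting time-series data into training and testing sets
train_size = int(len(data) * 0.8)
train_data, test_data = data[:train_size], data[train_size:]
# Assumes 'target' is the label and every other column is a numeric feature
X_train, y_train = train_data.drop(columns='target'), train_data['target']
X_test, y_test = test_data.drop(columns='target'), test_data['target']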
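A single split can be extended to several time-ordered folds. The sketch below generates expanding-window splits in which every test block comes strictly after its training block; the function name and default sizes are assumptions for illustration, and scikit-learn's TimeSeriesSplit offers a ready-made splitter built on the same idea.

```python
def expanding_window_splits(n_samples, n_splits=3, test_size=None):
    """Yield (train_indices, test_indices) pairs where each test block follows its training block."""
    if test_size is None:
        test_size = n_samples // (n_splits + 1)
    for i in range(n_splits):
        test_start = n_samples - (n_splits - i) * test_size
        test_end = test_start + test_size
        yield list(range(test_start)), list(range(test_start, test_end))

splits = list(expanding_window_splits(n_samples=10, n_splits=3))
for train_idx, test_idx in splits:
    print(f"train 0..{train_idx[-1]}  test {test_idx[0]}..{test_idx[-1]}")
```

Averaging a metric over such folds gives a steadier estimate of forecast quality than a single hold-out period, at the cost of training the model several times.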

Step 2: Parameter Tuning for XGBoost Model

Several XGBoost hyperparameters can be tuned to optimize the model’s performance. Grid search or random search can help find a good combination. Common hyperparameters to tune include the learning rate, maximum tree depth, and regularization parameters. For time-series data, the cross-validation folds used during the search should respect temporal order, so that each validation fold comes after the data used to train on it.

# Hyperparameter tuning using grid search
from sklearn.model_selection import GridSearchCV, TimeSeriesSplit
from xgboost import XGBRegressor

param_grid = {
    'learning_rate': [0.01, 0.1, 0.2],
    'max_depth': [3, 5, 7],
    'subsample': [0.8, 0.9, 1.0]
}

# TimeSeriesSplit keeps every validation fold later in time than its training fold
grid_search = GridSearchCV(XGBRegressor(), param_grid, cv=TimeSeriesSplit(n_splits=3))
grid_search.fit(X_train, y_train)
best_params = grid_search.best_params_

Step 3: Training the XGBoost Model

Once the hyperparameters are tuned, the XGBoost model can be trained on the training set. The model learns the underlying patterns and relationships in the data, enabling it to make accurate predictions.

# Training the XGBoost model
from xgboost import XGBRegressor

xgb_model = XGBRegressor(**best_params)
xgb_model.fit(X_train, y_train)

Step 4: Evaluating Model Performance

After training the XGBoost model, its performance needs to be evaluated on the testing set. Common evaluation metrics for time-series forecasting include mean absolute error (MAE), root mean squared error (RMSE), and mean absolute percentage error (MAPE). These metrics quantify the accuracy of the model’s predictions and provide insights into its performance.

# Evaluating the XGBoost model on the testing set
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_absolute_percentage_error, mean_squared_error

predictions = xgb_model.predict(X_test)
mae = mean_absolute_error(y_test, predictions)
rmse = np.sqrt(mean_squared_error(y_test, predictions))
# scikit-learn returns MAPE as a fraction, so multiply by 100 for a percentage
mape = 100 * mean_absolute_percentage_error(y_test, predictions)

Advanced Techniques for Time-Series Forecasting with XGBoost

Handling Seasonality and Trends

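XGBoost can model seasonality and trends when they are exposed as features. Seasonal features capture periodic patterns, while trend features capture long-term upward or downward movement. Because tree-based models cannot predict values outside the range seen during training, a strong trend is often better removed first (for example by differencing) than left to a trend feature alone. Accounting for seasonality and trends in these ways leads to more accurate forecasts.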
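As one possible concrete form of such features, the sketch below derives calendar fields for seasonality and an elapsed-time column for trend from a timestamp column. The helper name add_calendar_and_trend, the column names, and the sample data are assumptions for illustration.

```python
import pandas as pd

def add_calendar_and_trend(data, timestamp_col="timestamp"):
    """Add simple seasonal (calendar) features and a linear time index as a trend feature."""
    data = data.copy()
    ts = pd.to_datetime(data[timestamp_col])
    data["hour"] = ts.dt.hour
    data["day_of_week"] = ts.dt.dayofweek  # Monday = 0
    data["month"] = ts.dt.month
    # Days elapsed since the first observation
    data["trend_days"] = (ts - ts.min()).dt.total_seconds() / 86400
    return data

example = pd.DataFrame({
    "timestamp": pd.date_range("2024-01-01", periods=4, freq="D"),
    "target": [5.0, 6.0, 7.0, 8.0],
})
features = add_calendar_and_trend(example)
print(features)
```

Which calendar fields matter depends on the data: hourly energy demand usually benefits from hour and day of week, while monthly sales may only need the month.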

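# Adding seasonal and trend features to the dataset
# seasonal_pattern and trend_pattern are placeholder functions that map a timestamp
# to a numeric feature (for example, month of year and time elapsed since the start)
data['seasonal_feature'] = data['timestamp'].apply(seasonal_pattern)
data['trend_feature'] = data['timestamp'].apply(trend_pattern)

Dealing with Non-Stationary Data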

Non-stationary data, where statistical properties such as the mean or variance change over time, can pose challenges for time-series forecasting. XGBoost does not correct for non-stationarity on its own; it is usually addressed by differencing the target before training or by hybrid approaches such as ARIMA-XGBoost models. These techniques help the model capture the underlying patterns in non-stationary data.
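When a model is trained on a differenced target, its outputs are predicted changes and must be converted back to levels. The small worked example below shows the round trip; the series values and the "predicted" changes are made-up numbers for illustration.

```python
import numpy as np
import pandas as pd

target = pd.Series([100.0, 103.0, 107.0, 112.0, 118.0])

# First difference: change from the previous observation (the first value is NaN)
diffed = target.diff()
print(diffed.tolist())

# Suppose a model trained on the differenced series predicted these next two changes
predicted_changes = np.array([6.5, 7.0])

# Level forecasts = last observed level + cumulative sum of predicted changes
forecast_levels = target.iloc[-1] + np.cumsum(predicted_changes)
print(forecast_levels)  # [124.5 131.5]
```

Errors in the predicted changes accumulate through the cumulative sum, so level forecasts tend to drift further from the truth as the horizon grows.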

# Differencing technique for handling non-stationary data
data['stationary_target'] = data['target'].diff()

Incorporating External Factors

In some time-series forecasting tasks, external factors can significantly influence the target variable. XGBoost allows for the incorporation of external factors as additional predictors, enhancing the model’s predictive power. For example, in energy demand forecasting, weather data can be included as an external factor to capture its impact on energy consumption.

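# Including external factors in the dataset
import pandas as pd

# A left join keeps every row of data, even when no external record matches its timestamp
data = pd.merge(data, external_factors, on='timestamp', how='left')

Best Practices and Tips for Successful Time-Series Forecasting with XGBoost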

Choosing the Right Evaluation Metrics

Selecting appropriate evaluation metrics is crucial for assessing the performance of the XGBoost model. Different time-series forecasting tasks may require different metrics. It is essential to choose metrics that align with the specific business objectives and provide meaningful insights into the model’s performance.
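To make the differences between the common metrics tangible, the sketch below implements MAE, RMSE, and MAPE with NumPy and applies them to three made-up observations. The function names and sample numbers are assumptions for illustration.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error: average size of the errors, in the target's units."""
    return np.mean(np.abs(y_true - y_pred))

def rmse(y_true, y_pred):
    """Root mean squared error: penalizes large errors more heavily than MAE."""
    return np.sqrt(np.mean((y_true - y_pred) ** 2))

def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent; undefined if y_true contains zeros."""
    return 100 * np.mean(np.abs((y_true - y_pred) / y_true))

y_true = np.array([100.0, 200.0, 400.0])
y_pred = np.array([110.0, 180.0, 400.0])

print(f"MAE  = {mae(y_true, y_pred):.2f}")    # 10.00
print(f"RMSE = {rmse(y_true, y_pred):.2f}")   # 12.91
print(f"MAPE = {mape(y_true, y_pred):.2f}%")  # 6.67%
```

MAE suits cases where every unit of error costs the same, RMSE where large misses are disproportionately costly, and MAPE where errors need to be compared across series of different scale, provided the target never approaches zero.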

# Selecting evaluation metrics based on business objectives
evaluation_metrics = ['mae', 'rmse', 'mape']

Feature Selection and Importance

Feature selection plays a vital role in time-series forecasting with XGBoost. It is important to identify the most relevant features that contribute to accurate predictions. XGBoost provides feature importance scores, which can guide the selection of the most influential features.

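# Displaying feature importance scores (assumes X_train is a DataFrame)
feature_importance = xgb_model.feature_importances_
for name, score in zip(X_train.columns, feature_importance):
    print(f'{name}: {score:.3f}')

Regularization and Overfitting Prevention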

Regularization techniques are essential to prevent overfitting in the XGBoost model. Overfitting occurs when the model learns the noise or random fluctuations in the training data, leading to poor generalization on unseen data. Regularization techniques such as L1 and L2 regularization can help in controlling the complexity of the model and improving its generalization performance.

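# Implementing regularization in XGBoost
from xgboost import XGBRegressor

# reg_alpha is the L1 penalty and reg_lambda the L2 penalty on leaf weights
xgb_model = XGBRegressor(learning_rate=0.1, max_depth=5, subsample=0.9, reg_alpha=0.1, reg_lambda=0.1)

Limitations and Challenges of XGBoost for Time-Series Forecasting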

Handling Long-Term Dependencies

XGBoost has no memory of the sequence: it sees only the lags and other features supplied in each row. If the target variable depends on events or patterns that occurred far in the past, those must be encoded explicitly as features, and performance may be limited when that is impractical. In such cases, sequence models like recurrent neural networks (RNNs) or long short-term memory (LSTM) networks may be more suitable.

Dealing with Irregular and Sparse Data

XGBoost performs best when the time-series data is regular and dense. Irregular or sparse data, where there are missing observations or long gaps between observations, can pose challenges for XGBoost. In such cases, data imputation or interpolation techniques may be required to fill in the missing values or create a denser time series.

Conclusion

XGBoost is a powerful algorithm for time-series forecasting, offering several advantages such as handling non-linear relationships, feature importance analysis, and regularization. By following best practices and incorporating advanced techniques, XGBoost can provide accurate predictions in various domains, including sales forecasting, stock market prediction, and energy demand forecasting. However, it is essential to be aware of its limitations and challenges, such as handling long-term dependencies and irregular data. Overall, leveraging XGBoost for time-series forecasting can significantly enhance decision-making and planning for businesses in today’s dynamic market.


Frequently Asked Questions

Q1. Is XGBoost good for time-series forecasting?

A. Yes, XGBoost performs well in time-series forecasting because it can capture intricate patterns and non-linear relationships, provided the temporal structure is supplied through features such as lags.

Q2. Which model is best for time-series forecasting?

A. The best model for time-series forecasting varies based on the dataset. XGBoost is often a strong choice, alongside models like ARIMA, LSTM, and Prophet, depending on the specific characteristics of the time-series data.

Q3. Can XGBoost be used for multivariate time series?

A. Yes, XGBoost is suitable for multivariate time series. It accepts multiple input features, which covers forecasting scenarios where the target variable depends on several variables across different time points.

Q4. Can XGBoost be used for prediction in general?

A. Yes, XGBoost is a versatile choice for predictive modeling and supports both classification and regression tasks.