Introduction

Are you working with image data? There are so many things we can do using computer vision algorithms:

- Object detection

- Image segmentation

- Image translation

- Object tracking (in real-time), and a whole lot more.

This got me thinking – what can we do if there are multiple object categories in an image? Making an image classification model was a good start, but I wanted to expand my horizons to take on a more challenging task – building a multi-label image classification model!

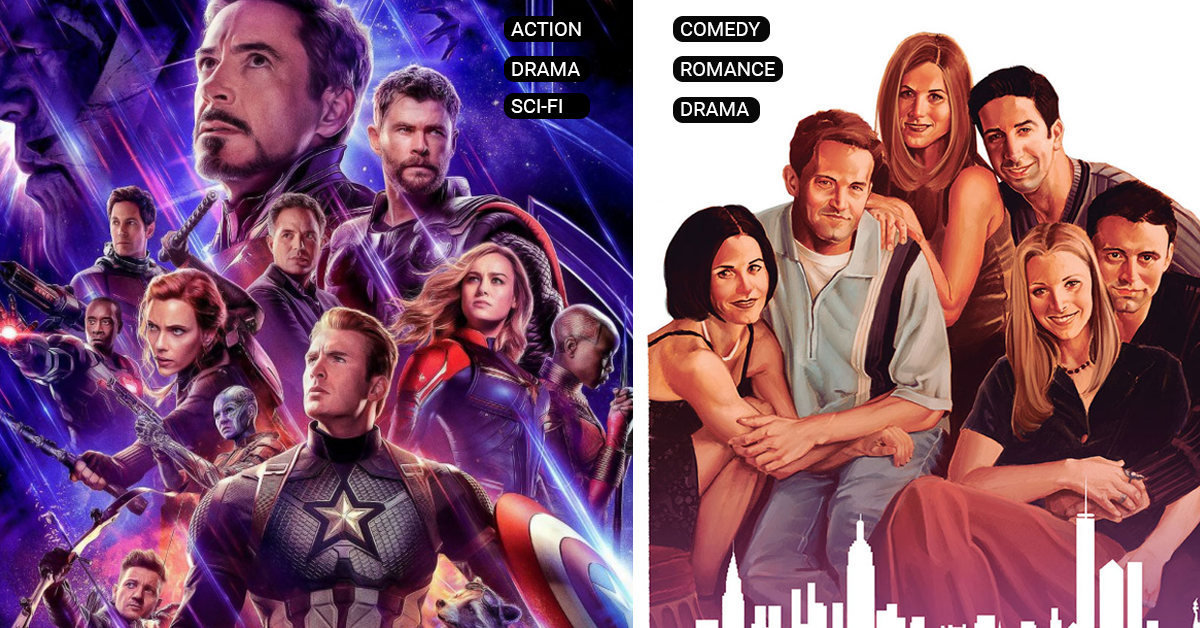

I didn’t want to use toy datasets to build my model – that is too generic. And then it struck me – movie/TV series posters contain a variety of people. Could I build my own multi-label image classification model to predict the different genres just by looking at the poster?

The short answer: yes! In this article, I explain the idea behind multi-label image classification, and then we build our very own model using movie posters. You will see the model produce sensible genre predictions for posters it has never seen. And if you’re an Avengers or Game of Thrones fan, there’s an awesome (spoiler-free) surprise for you in the implementation section.

Excited? Good, let’s dive in!

Table of Contents

- What is Multi-Label Image Classification?

- How is Multi-Label Image Classification different from Multi-Class Image Classification?

- Steps to Build your Multi-Label Image Classification Model

- Understanding the Multi-Label Image Classification Model Architecture

- Case Study: Solving a Multi-Label Image Classification Problem

What is Multi-Label Image Classification?

Let’s understand the concept of multi-label image classification with an intuitive example. Check out the below image:

The object in image 1 is a car. That was a no-brainer. In image 2, on the other hand, there is no car, only a group of buildings. Can you see where we are going with this? We have classified the images into two classes, i.e., car or non-car.

When we have only two classes in which the images can be classified, this is known as a binary image classification problem.

Let’s look at one more image:

How many objects did you identify? There are way too many – a house, a pond with a fountain, trees, rocks, etc. So,

When we can classify an image into more than one class (as in the image above), it is known as a multi-label image classification problem.

Now, here’s a catch – most of us get confused between multi-label and multi-class image classification. Even I was bamboozled the first time I came across these terms. Now that I have a better understanding of the two topics, let me clear up the difference for you.

How is Multi-Label Image Classification different from Multi-Class Image Classification?

Suppose we are given images of animals to be classified into their corresponding categories. For ease of understanding, let’s assume there are a total of 4 categories (cat, dog, rabbit and parrot) in which a given image can be classified. Now, there can be two scenarios:

- Each image contains only a single object (from one of the above 4 categories) and hence can be classified into only one of the 4 categories

- An image might contain more than one object (from the above 4 categories) and hence can belong to more than one category

Let’s understand each scenario through examples, starting with the first one:

Here, we have images which contain only a single object. The keen-eyed among you will have noticed there are 4 different types of objects (animals) in this collection.

Each image here can only be classified either as a cat, dog, parrot or rabbit. There are no instances where a single image will belong to more than one category.

1. When there are more than two categories in which the images can be classified, and

2. An image does not belong to more than one category

If both of the above conditions are satisfied, it is referred to as a multi-class image classification problem.

Now, let’s consider the second scenario – check out the below images:

- First image (top left) contains a dog and a cat

- Second image (top right) contains a dog, a cat and a parrot

- Third image (bottom left) contains a rabbit and a parrot, and

- The last image (bottom right) contains a dog and a parrot

These are all labels of the given images. Each image here belongs to more than one class and hence it is a multi-label image classification problem.
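To make the distinction concrete, here is a minimal sketch of how the labels might be stored in each case. The category order and the helper name `to_multi_hot` are illustrative assumptions on my part, not something fixed by any library.

```python
# Categories from the example above; the order is an arbitrary assumption.
CATEGORIES = ["cat", "dog", "rabbit", "parrot"]


def to_multi_hot(labels, categories=CATEGORIES):
    """Return a 0/1 vector with a 1 for every category present in `labels`."""
    present = set(labels)
    return [1 if c in present else 0 for c in categories]


# Multi-class: exactly one category per image, so exactly one 1 per vector.
multi_class_target = to_multi_hot(["dog"])

# Multi-label: any number of categories per image, so several 1s are allowed.
multi_label_target = to_multi_hot(["dog", "cat", "parrot"])

print(multi_class_target)  # [0, 1, 0, 0]
print(multi_label_target)  # [1, 1, 0, 1]
```

The only structural difference is how many 1s a target vector may contain, and that is exactly what drives the change in the output layer later on.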

These two scenarios should help you understand the difference between multi-class and multi-label image classification. Connect with me in the comments section below this article if you need any further clarification.

Before we jump into the next section, I recommend going through the article “Build your First Image Classification Model in just 10 Minutes!” It will help you understand how to solve a multi-class image classification problem.

Steps to Build your Multi-Label Image Classification Model

Now that we have an intuition about multi-label image classification, let’s dive into the steps you should follow to solve such a problem.

The first step is to get our data in a structured format. This applies to binary as well as multi-class image classification.

You should have a folder containing all the images on which you want to train your model. Now, for training this model, we also require the true labels of images. So, you should also have a .csv file which contains the names of all the training images and their corresponding true labels.

We will learn how to create this .csv file later in this article. For now, just keep in mind that the data should be in a particular format. Once the data is ready, we can divide the further steps as follows:

Load and pre-process the data

First, load all the images and then pre-process them as per your project’s requirement. To check how our model will perform on unseen data (test data), we create a validation set. We train our model on the training set and validate it using the validation set (standard machine learning practice).

Define the model’s architecture

The next step is to define the architecture of the model. This includes deciding the number of hidden layers, number of neurons in each layer, activation function, and so on.

Train the model

Time to train our model on the training set! We pass the training images and their corresponding true labels to train the model. We also pass the validation images here which help us validate how well the model will perform on unseen data.

Make predictions

Finally, we use the trained model to get predictions on new images.

Understanding the Multi-Label Image Classification Model Architecture

Now, the pre-processing steps for a multi-label image classification task will be similar to that of a multi-class problem. The key difference is in the step where we define the model architecture.

We use a softmax activation function in the output layer for a multi-class image classification model. For each image, we want to maximize the probability for a single class. As the probability of one class increases, the probabilities of the other classes must decrease, because the softmax outputs always sum to 1. So, we can say that the probability of each class is dependent on the other classes.

But in the case of multi-label image classification, a single image can have more than one label, so we want the class probabilities to be independent of each other. The softmax activation function is not appropriate here. Instead, we can use the sigmoid activation function, which predicts the probability for each class independently. In effect, the output layer behaves like n binary classifiers (n here is the total number of classes), one per class, all sharing the same hidden layers.
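A small numeric comparison shows why this matters. The sketch below uses plain Python and made-up raw scores (the values in `scores` are assumptions chosen for illustration) for an image in which two of three classes are clearly present.

```python
import math


def softmax(scores):
    """Convert raw scores to probabilities that sum to 1 across classes."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(scores):
    """Convert each raw score to a probability independently of the others."""
    return [1.0 / (1.0 + math.exp(-s)) for s in scores]


# Hypothetical raw scores: the first two classes are both strongly present.
scores = [3.0, 3.0, -2.0]

softmax_probs = softmax(scores)
sigmoid_probs = sigmoid(scores)

print([round(p, 3) for p in softmax_probs])
print([round(p, 3) for p in sigmoid_probs])
```

With softmax, the two strong classes have to share the probability mass, so neither can exceed 0.5. With sigmoid, both can be close to 1 at the same time, which is what a multi-label problem needs.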

Using the sigmoid activation function turns the multi-label problem into n binary classification problems. So for each image, we get probabilities stating whether the image belongs to class 1 or not, to class 2 or not, and so on. Since we have converted the task into n binary classification problems, we will use the binary_crossentropy loss. Our aim is to minimize this loss in order to improve the performance of the model.
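To see what this loss rewards, here is a minimal pure-Python sketch of binary cross-entropy for a single image, averaged over its labels. The function below is my own illustration rather than a library implementation, and the label and prediction values are made up.

```python
import math


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy over all labels of one image."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)


# Hypothetical image with four possible labels, two of which apply.
y_true = [1, 0, 1, 0]
good_pred = [0.9, 0.1, 0.8, 0.2]  # confident and correct
bad_pred = [0.2, 0.9, 0.3, 0.7]   # confident and wrong

good_loss = binary_crossentropy(y_true, good_pred)
bad_loss = binary_crossentropy(y_true, bad_pred)

print(round(good_loss, 4), round(bad_loss, 4))
```

Each label contributes its own term, so the model is penalized separately for every genre it gets wrong, regardless of how it does on the others.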

This is the major change we have to make while defining the model architecture for solving a multi-label image classification problem. The training part will be similar to that of a multi-class problem. We will pass the training images and their corresponding true labels and also the validation set to validate our model’s performance.

Finally, we will take a new image and use the trained model to predict the labels for this image. With me so far?

Case Study: Solving a Multi-Label Image Classification Problem

Congratulations on making it this far! Your reward – solving an awesome multi-label image classification problem in Python. That’s right – time to power up your favorite Python IDE!

Let’s set up the problem statement. Our aim is to predict the genre of a movie using just its poster image. Can you guess why it is a multi-label image classification problem? Think about it for a moment before you look below.

A movie can belong to more than one genre, right? It doesn’t just have to belong to one category, like action or comedy. The movie can be a combination of two or more genres. Hence, multi-label image classification.

The dataset we’ll be using contains the poster images of several multi-genre movies. I have made some changes in the dataset and converted it into a structured format, i.e. a folder containing the images and a .csv file for true labels. You can download the structured dataset from here. Below are a few posters from our dataset:

You can download the original dataset along with the ground truth values here if you wish.

Let’s get coding!

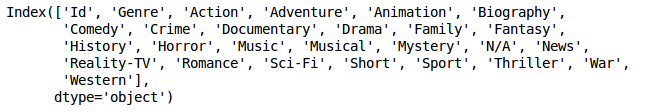

First, import all the required Python libraries:

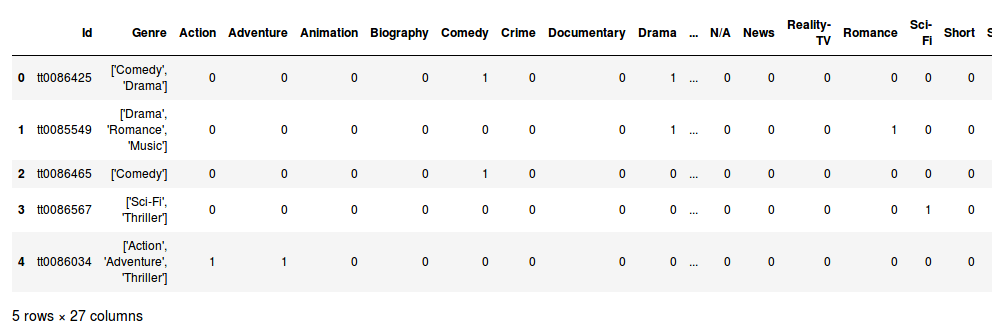

Now, read the .csv file and look at the first five rows:

There are 27 columns in this file. Let’s print the names of these columns:

The genre column contains the list for each image which specifies the genre of that movie. So, from the head of the .csv file, the genre of the first image is Comedy and Drama.

The remaining 25 columns are the one-hot encoded genre columns. So, if a movie belongs to the Action genre, the value in the Action column will be 1, otherwise 0. Each poster can therefore be tagged with any combination of the 25 genres.
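If you want a feel for this layout without downloading anything, the sketch below parses a tiny stand-in file with the same structure. The rows, the poster ids and the column names `Id` and `Genre` are assumptions made for illustration, and only three of the 25 genre columns are shown.

```python
import csv
import io

# Hypothetical three-row excerpt; the real file has 25 genre columns.
SAMPLE_CSV = """Id,Genre,Action,Comedy,Drama
poster_001,"['Comedy', 'Drama']",0,1,1
poster_002,"['Action']",1,0,0
poster_003,"['Comedy']",0,1,0
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))

# Everything except the id and the genre list is a 0/1 genre flag.
genre_columns = [name for name in rows[0] if name not in ("Id", "Genre")]


def genres_of(row):
    """List the genre columns whose flag is 1 for this row."""
    return [g for g in genre_columns if row[g] == "1"]


first_genres = genres_of(rows[0])
print(genre_columns)  # ['Action', 'Comedy', 'Drama']
print(first_genres)   # ['Comedy', 'Drama']
```

The flags carry the same information as the genre list, just in a form a neural network can use directly as a target.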

We will build a model that will return the genre of a given movie poster. But before that, do you remember the first step for building any image classification model?

That’s right – loading and preprocessing the data. So, let’s read in all the training images:

A quick look at the shape of the array:

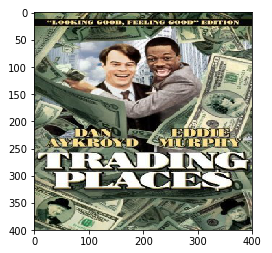

There are 7254 poster images and all the images have been converted to a shape of (400, 300, 3). Let’s plot and visualize one of the images:

This is the poster for the movie ‘Trading Places’. Let’s also print the genre of this movie:

This movie has a single genre – Comedy. The next thing our model would require is the true label(s) for all these images. Can you guess what would be the shape of the true labels for 7254 images?

Let’s see. We know there are a total of 25 possible genres. For each image, we will have 25 targets, i.e., whether the movie belongs to that genre or not. So, all these 25 targets will have a value of either 0 or 1.

We will remove the Id and genre columns from the train file and convert the remaining columns to an array which will be the target for our images:

The shape of the output array is (7254, 25) as we expected. Now, let’s create a validation set which will help us check the performance of our model on unseen data. We will randomly separate 10% of the images as our validation set:
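The idea of a random 10% hold-out can be sketched in plain Python as follows. The helper `train_validation_split` and the stand-in data are hypothetical; in practice a library routine such as scikit-learn’s `train_test_split` is the usual choice.

```python
import random


def train_validation_split(samples, targets, val_fraction=0.1, seed=42):
    """Shuffle indices once, then hold out `val_fraction` of them for validation."""
    if len(samples) != len(targets):
        raise ValueError("samples and targets must have the same length")
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)  # fixed seed keeps the split reproducible
    n_val = max(1, int(round(len(indices) * val_fraction)))
    val_idx, train_idx = indices[:n_val], indices[n_val:]

    def pick(seq, idx):
        return [seq[i] for i in idx]

    return (pick(samples, train_idx), pick(samples, val_idx),
            pick(targets, train_idx), pick(targets, val_idx))


# Stand-in data: 20 fake "images" (just ids) with 3 genre flags each.
X = [f"poster_{i:03d}" for i in range(20)]
y = [[i % 2, (i // 2) % 2, 1] for i in range(20)]

X_train, X_val, y_train, y_val = train_validation_split(X, y)
print(len(X_train), len(X_val))  # 18 2
```

The important detail is that images and targets are indexed with the same shuffled positions, so every poster stays paired with its own genre vector.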

The next step is to define the architecture of our model. The output layer will have 25 neurons (equal to the number of genres) and we’ll use sigmoid as the activation function.

I will be using a certain architecture (given below) to solve this problem. You can modify this architecture as well by changing the number of hidden layers, activation functions and other hyperparameters.

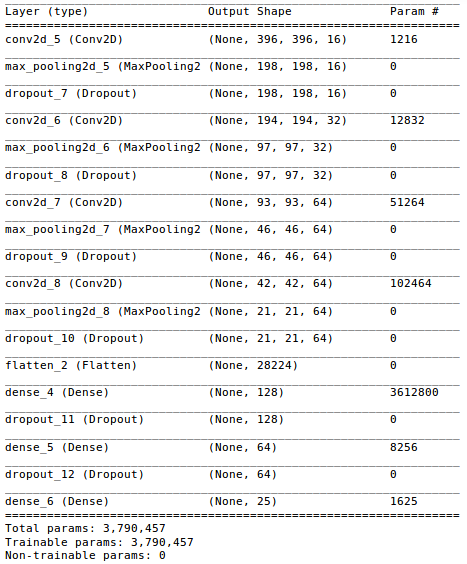

Let’s print our model summary:

Quite a lot of parameters to learn! Now, compile the model. I’ll use binary_crossentropy as the loss function and Adam as the optimizer (again, you can use other optimizers as well):
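Here is a minimal sketch of what defining and compiling such a model could look like in Keras, assuming TensorFlow is installed. The number of layers, filter counts and dropout rate are illustrative assumptions, not necessarily the architecture behind the results reported in this article. The parts that matter for multi-label classification are the 25-neuron sigmoid output layer and the binary_crossentropy loss.

```python
from tensorflow.keras import Input
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Dropout, Flatten, Dense

NUM_GENRES = 25  # one output neuron per genre

# Layer sizes below are assumptions chosen for illustration.
model = Sequential([
    Input(shape=(400, 300, 3)),  # poster size used in this article
    Conv2D(16, (5, 5), activation="relu"),
    MaxPooling2D((2, 2)),
    Conv2D(32, (5, 5), activation="relu"),
    MaxPooling2D((2, 2)),
    Conv2D(64, (5, 5), activation="relu"),
    MaxPooling2D((2, 2)),
    Flatten(),
    Dense(128, activation="relu"),
    Dropout(0.5),
    # Sigmoid, not softmax: each genre gets its own independent probability.
    Dense(NUM_GENRES, activation="sigmoid"),
])

# Binary cross-entropy treats every genre as its own yes/no problem.
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
model.summary()
```

Swapping the last layer for a softmax with categorical cross-entropy would turn this back into a multi-class model, so these two choices are the ones to double-check.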

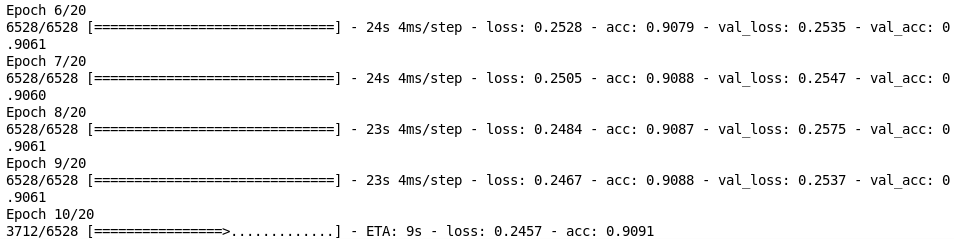

Finally, we are at the most interesting part – training the model. We will train the model for 10 epochs and also pass the validation data which we created earlier in order to validate the model’s performance:

We can see that the training loss has come down to 0.24 and the validation loss follows it closely. What’s next? It’s time to make predictions!

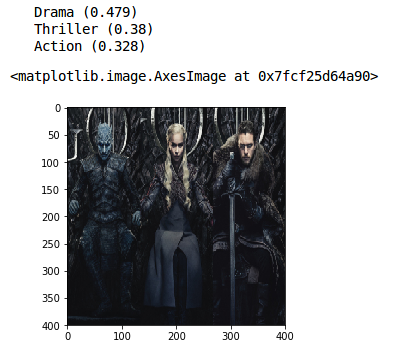

All you Game of Thrones (GoT) and Avengers fans – this one’s for you. Let’s take the posters for GoT and Avengers and feed them to our model. Download the GoT and Avengers posters before proceeding.

Before making predictions, we need to preprocess these images using the same steps we saw earlier.

Now, we will predict the genre for these posters using our trained model. The model will tell us the probability for each genre and we will take the top 3 predictions from that.
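Picking the top 3 genres from a vector of probabilities can be sketched like this. The genre names and probability values are made up for illustration, and `top_k` is a hypothetical helper.

```python
# Hypothetical genre names and predicted probabilities for one poster.
genres = ["Action", "Comedy", "Drama", "Romance", "Thriller"]
probabilities = [0.41, 0.08, 0.77, 0.12, 0.53]


def top_k(names, probs, k=3):
    """Return the k (name, probability) pairs with the highest probability."""
    ranked = sorted(zip(names, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


top3 = top_k(genres, probabilities)
for name, prob in top3:
    print(f"{name} ({prob:.2f})")
# Drama (0.77)
# Thriller (0.53)
# Action (0.41)
```

Note that taking the top 3 always returns three genres, even for a movie that really has only one. A common alternative is to keep every genre whose probability exceeds a chosen threshold, such as 0.5.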

Impressive! Our model suggests Drama, Thriller and Action genres for Game of Thrones. That classifies GoT pretty well in my opinion. Let’s try our model on the Avengers poster. Preprocess the image:

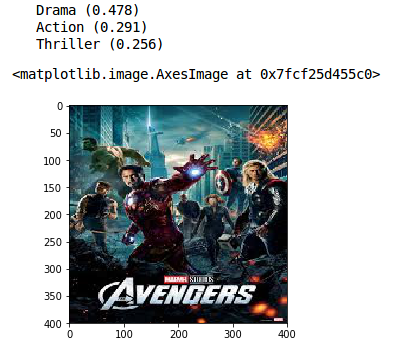

And then make the predictions:

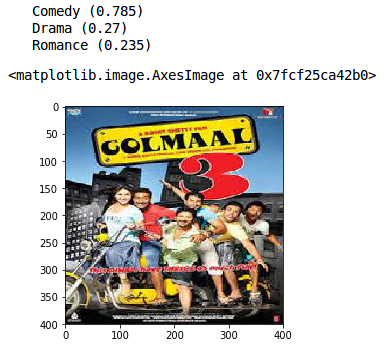

The genres our model comes up with are Drama, Action and Thriller. Again, these are pretty accurate results. Can the model perform equally well for Bollywood movies? Let’s find out. We will use this Golmaal 3 poster.

You know what to do at this stage – load and preprocess the image:

And then predict the genre for this poster:

Golmaal 3 was a comedy and our model has predicted Comedy as the topmost genre. The other predicted genres are Drama and Romance – a relatively accurate assessment. We can see that the model is able to predict genres just by looking at the posters.

Next Steps and Experimenting on your own

This is how we can solve a multi-label image classification problem. Our model gave plausible predictions on the three posters we tried, even though we only had around 7000 images for training it.

You can try and collect more posters for training. My suggestion would be to make the dataset in such a way that all the genre categories will have comparatively equal distribution. Why?

Well, if a certain genre appears in most of the training images, our model might become biased toward that genre. Then, for every new image, the model might predict the same genre. To overcome this problem, you should try to have a roughly equal distribution of genre categories.
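A quick way to check for this kind of imbalance is to count how often each genre appears in the target array. The sketch below uses a tiny made-up target matrix and a hypothetical helper, `genre_counts`.

```python
# Hypothetical targets: one row per poster, one 0/1 column per genre.
genre_names = ["Action", "Comedy", "Drama"]
targets = [
    [0, 1, 1],
    [0, 0, 1],
    [1, 0, 1],
    [0, 1, 1],
    [0, 0, 1],
]


def genre_counts(names, rows):
    """Count how many rows have a 1 for each genre column."""
    return {name: sum(row[i] for row in rows) for i, name in enumerate(names)}


counts = genre_counts(genre_names, targets)
shares = {name: count / len(targets) for name, count in counts.items()}

print(counts)  # {'Action': 1, 'Comedy': 2, 'Drama': 5}
print(shares)
```

In this toy example every poster is tagged Drama, so a model could score well on that label by always predicting it. That is the situation to look for in your own data before training.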

These are some of the things you can try to improve the performance of your model. Can you think of any others? Let me know!

End Notes

There are multiple applications of multi-label image classification apart from genre prediction. You can use this technique to automatically tag images, for example. Suppose you want to predict the type and color of a clothing item in an image. You can build a multi-label image classification model which will help you to predict both!

I hope this article helped you understand the concept of multi-label image classification. If you have any feedback or suggestions, feel free to share them in the comments section below. Happy experimenting!