This article was published as a part of the Data Science Blogathon.

Introduction

In this article, we will build a Python program that moves your mouse cursor according to your head pose, using a Convolutional Neural Network (deep learning) and a mouse/keyboard automation library called pyautogui. We use pyautogui because it is one of the simplest Python libraries for programmatically controlling the mouse and keyboard. More details on how to use this library are given below.

First things first, we have to create a deep learning model that can classify your current head pose into 5 different categories, namely, Neutral (no direction indicated), Left, Right, Up, and Down.

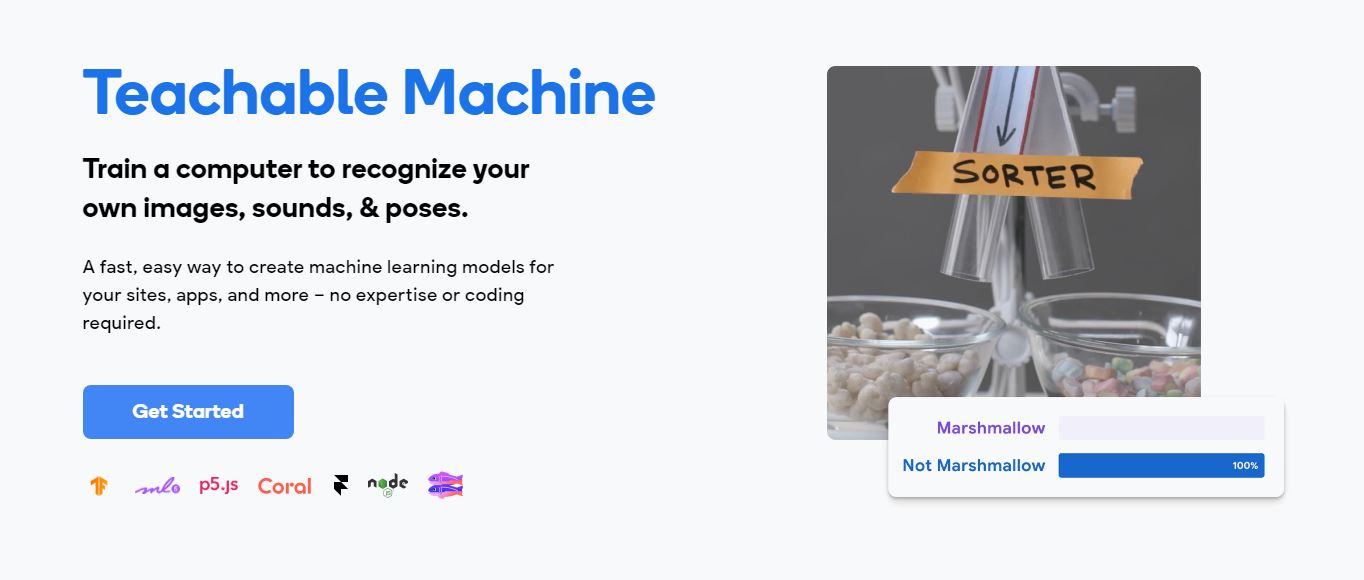

What is a Teachable Machine?

A teachable machine is a tool that helps you teach a computer to recognize things. It allows you to show the computer examples of different things and tell it what they are. The computer then learns from these examples and can identify similar things in the future. It’s like training a pet or teaching a child by showing them examples and explaining what they are. With a teachable machine, you can create your own programs or applications that can understand and respond to different inputs, like images or sounds, without needing to be an expert programmer.

Google Teachable Machine

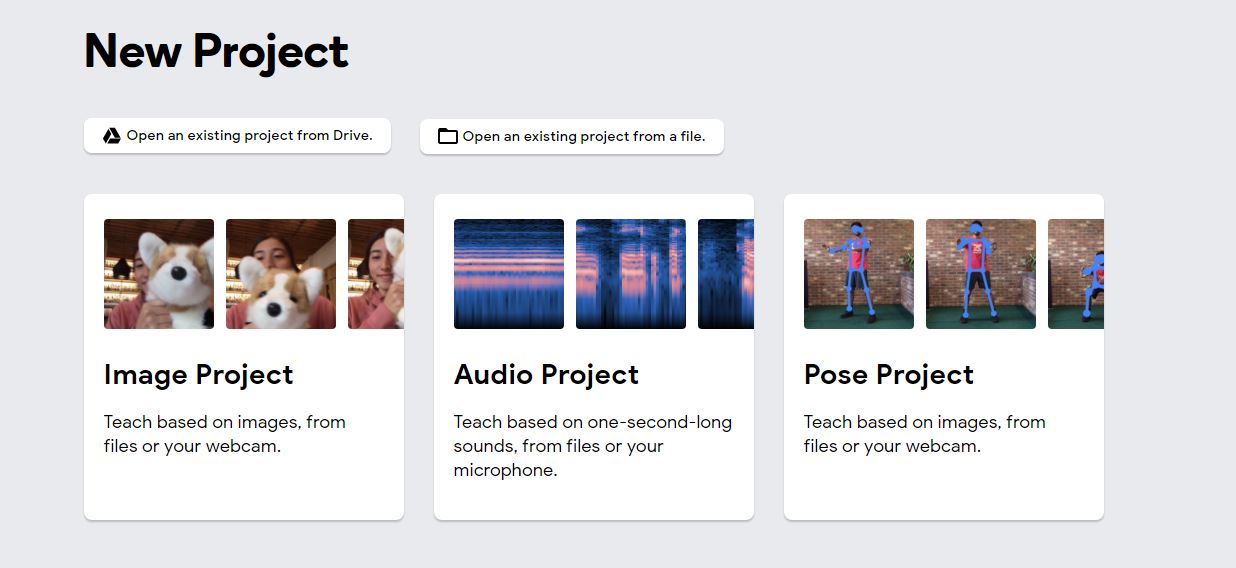

Google Teachable Machine is Google’s free, no-code web platform for creating deep learning models. You can build models to classify images, audio, or even poses. Once a model is trained, you can download it and use it in your own applications.

You could use frameworks like TensorFlow or PyTorch to build a custom Convolutional Neural Network with a network architecture of your choice. If you want a simple no-code way of doing the same, Google Teachable Machine can do it for you. It is very intuitive and does a really good job.

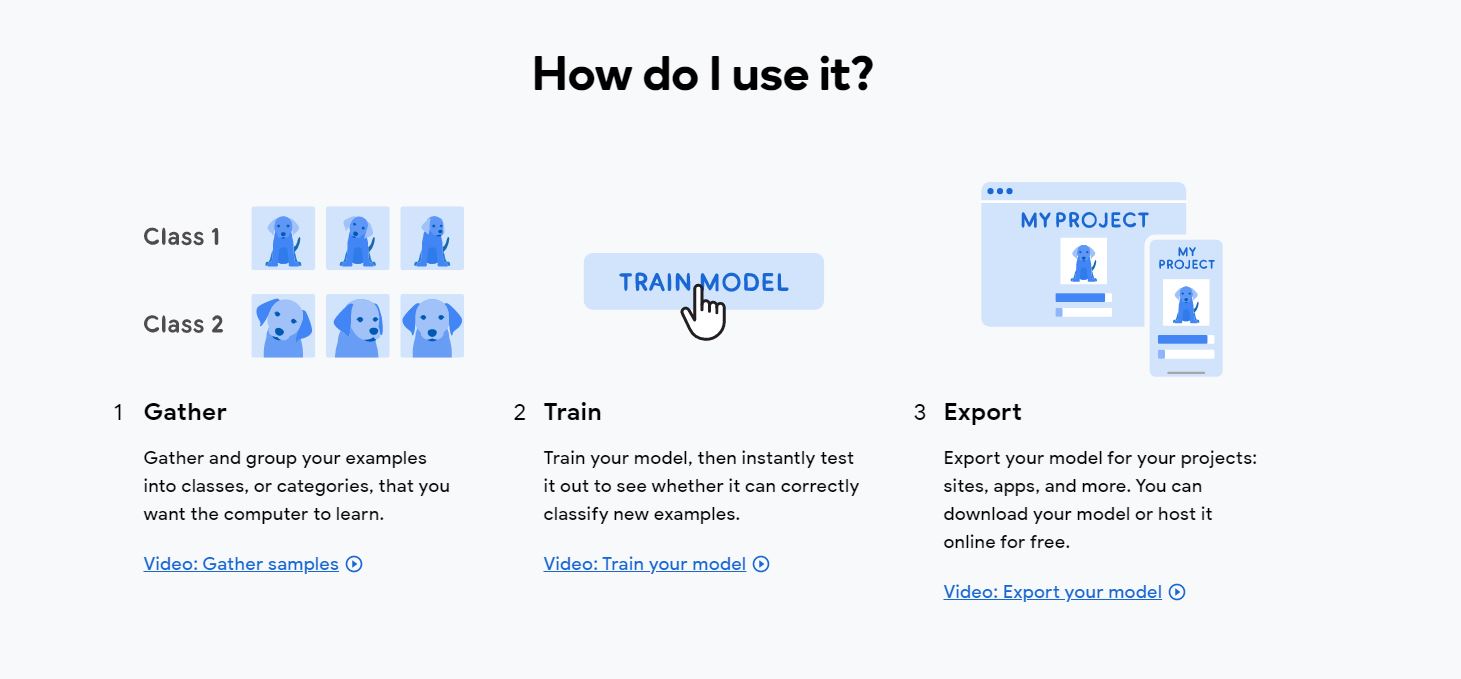

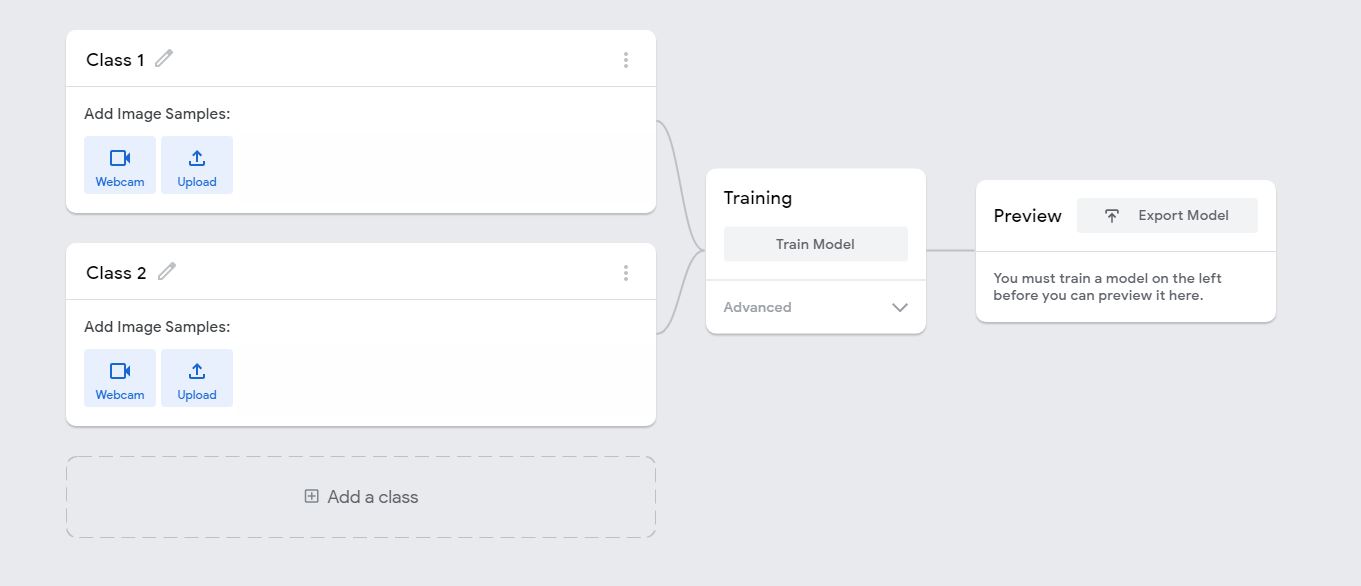

Choose ‘Image Project’, name the classes, and record photos of yourself in the corresponding head poses. You can leave the default hyperparameters as they are and proceed with the training.

Tip: Record the photos of each head pose from different distances and positions relative to the camera so that the model does not overfit to the training data, which would lead to poor predictions later.

Then you can use the Preview section of the same page to see how well your trained model is performing and decide whether to use it for the program or create a more robust model with a higher number of images and parameter tuning.

After the above step, download the model weights. The weights will get downloaded as ‘keras_model.h5’.
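The model only outputs a class index, so the order of the class names used in the program has to match the order of the classes in your Teachable Machine project. Below is a minimal sketch of one way to avoid hard-coding that order. It assumes the download also includes a `labels.txt` file with one `index name` pair per line; that file format is an assumption, so check your own download. `parse_labels` is a hypothetical helper name.

```python
def parse_labels(text):
    """Return class names ordered by index from 'index name' lines."""
    pairs = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        index, name = line.split(maxsplit=1)
        pairs.append((int(index), name))
    # Sort by the numeric index so position i holds the name of class i
    return [name for _, name in sorted(pairs)]


# Example contents; the real file comes from your own export (assumed format)
sample = "0 Left\n1 Right\n2 Up\n3 Down\n4 Neutral\n"
labels = parse_labels(sample)
print(labels)
```

If your export has no such file, writing the list by hand in the same order as the classes on the training page works just as well.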

Program

Now, let us combine this with a program that is able to move the mouse.

Let us have a look at the code:

    # Python program to control the mouse based on head position

    # Import necessary modules
    import numpy as np
    import cv2
    from time import sleep
    import tensorflow as tf
    import pyautogui

    # Use the laptop's webcam as the source of video
    cap = cv2.VideoCapture(0)

    # Labels: the various outcome possibilities
    labels = ['Left', 'Right', 'Up', 'Down', 'Neutral']

    # Load the model weights we just downloaded
    model = tf.keras.models.load_model('keras_model.h5')

    while True:
        success, image = cap.read()
        if not success:
            break

        # Mirror the frame; necessary to avoid conflict between left and right
        image = cv2.flip(image, 1)
        cv2.imshow("Frame", image)

        # The model takes an image of dimensions (224, 224) as input,
        # so resize our image to the same.
        img = cv2.resize(image, (224, 224))

        # Convert the image to a numpy array and add a batch dimension
        img = np.array(img, dtype=np.float32)
        img = np.expand_dims(img, axis=0)

        # Normalize the input image
        img = img / 255

        # Predict the class
        prediction = model.predict(img)

        # Map the prediction to a class name
        predicted_class = np.argmax(prediction[0], axis=-1)
        predicted_class_name = labels[predicted_class]

        # Use pyautogui to get the current position of the mouse and move it
        current_pos = pyautogui.position()
        current_x = current_pos.x
        current_y = current_pos.y

        print(predicted_class_name)

        if predicted_class_name == 'Neutral':
            # No movement; fall through so the quit check below still runs
            sleep(1)
        elif predicted_class_name == 'Left':
            pyautogui.moveTo(current_x - 80, current_y, duration=1)
            sleep(1)
        elif predicted_class_name == 'Right':
            pyautogui.moveTo(current_x + 80, current_y, duration=1)
            sleep(1)
        elif predicted_class_name == 'Down':
            pyautogui.moveTo(current_x, current_y + 80, duration=1)
            sleep(1)
        elif predicted_class_name == 'Up':
            pyautogui.moveTo(current_x, current_y - 80, duration=1)
            sleep(1)

        # Stop the loop if 'q' is pressed
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    # Release open connections
    cap.release()
    cv2.destroyAllWindows()

You can also find the code and weights here.

Explanation for the pyautogui functions:

    pyautogui.moveTo(current_x-80, current_y, duration=1)

The above call moves the mouse 80 pixels to the left of its current position over a duration of 1 second. If you do not set the duration parameter, the pointer jumps to the new point instantly, without any visible movement.
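The four movement branches differ only in the offset they apply, so they can be expressed as a lookup table. The sketch below is one possible way to compute the target position and is not part of the original program: `OFFSETS`, `next_position`, and the `margin` parameter are hypothetical names, and in the real program the screen size would come from `pyautogui.size()`. Keeping the target a few pixels inside the screen also avoids steering the pointer into a screen corner, which can trigger PyAutoGUI's fail-safe.

```python
STEP = 80  # pixels per move, as in the program above

# Direction label -> (dx, dy); screen y grows downward
OFFSETS = {
    'Left': (-STEP, 0),
    'Right': (STEP, 0),
    'Up': (0, -STEP),
    'Down': (0, STEP),
    'Neutral': (0, 0),
}


def next_position(label, x, y, screen_w, screen_h, margin=5):
    """Return the clamped (x, y) target for a predicted label."""
    dx, dy = OFFSETS.get(label, (0, 0))  # unknown labels mean no movement
    new_x = min(max(x + dx, margin), screen_w - 1 - margin)
    new_y = min(max(y + dy, margin), screen_h - 1 - margin)
    return new_x, new_y


print(next_position('Left', 40, 300, 1920, 1080))    # (5, 300)
print(next_position('Down', 500, 1050, 1920, 1080))  # (500, 1074)
```

In the loop, the result could be passed to `pyautogui.moveTo(new_x, new_y, duration=1)` for every label except `Neutral`.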

    current_pos = pyautogui.position()
    current_x = current_pos.x
    current_y = current_pos.y

The first line gets the x and y coordinates of the mouse. The next two lines access them individually.

Finally, release the open connections: cap.release() frees the webcam and cv2.destroyAllWindows() closes the display window.

We have now built an end-to-end deep learning application that takes video input from the user. As the program reads the video, it classifies each frame based on your head pose and returns the corresponding prediction. Using this prediction, we take the appropriate action, which is moving the mouse in the direction your head is pointing.
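Taking the most probable class on every frame means that even an uncertain prediction moves the pointer. One possible refinement, sketched below under the assumption that the model outputs one probability per class, is to fall back to `Neutral` whenever the top probability is below a threshold. The helper name `pick_label` and the value 0.8 are illustrative choices, not something from the original program.

```python
LABELS = ['Left', 'Right', 'Up', 'Down', 'Neutral']


def pick_label(probabilities, labels=LABELS, threshold=0.8):
    """Return the top label, or 'Neutral' if the model is not confident."""
    # Index of the largest probability (same role as np.argmax)
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    if probabilities[best] < threshold:
        return 'Neutral'
    return labels[best]


print(pick_label([0.05, 0.90, 0.02, 0.02, 0.01]))  # Right
print(pick_label([0.40, 0.35, 0.10, 0.10, 0.05]))  # Neutral
```

In the loop, this would be called with `prediction[0]` in place of the plain argmax lookup. A suitable threshold depends on how well your own model performs in the Preview section.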

You can further improve the project we have just built by adding custom functionality to click the mouse without actually touching the mouse of your computer. You could train another deep learning model for this on Google Teachable Machine itself.
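If a click is added as an extra class, the action should probably fire once per gesture rather than on every frame in which the pose is visible. The sketch below shows one way to do that with a small counter. The `Click` label, the `ClickTrigger` class, and the `hold_frames` value are all hypothetical, and in the real loop a `True` result would be followed by a call such as `pyautogui.click()`.

```python
class ClickTrigger:
    """Fire once when a label has been seen for `hold_frames` frames in a row."""

    def __init__(self, label='Click', hold_frames=3):
        self.label = label
        self.hold_frames = hold_frames
        self.count = 0  # consecutive frames showing the label

    def update(self, predicted_label):
        """Return True exactly once per held gesture."""
        if predicted_label != self.label:
            self.count = 0  # gesture released; re-arm the trigger
            return False
        self.count += 1
        # True only on the frame where the hold length is first reached
        return self.count == self.hold_frames


trigger = ClickTrigger()
frames = ['Neutral', 'Click', 'Click', 'Click', 'Click', 'Neutral', 'Click']
fired = [trigger.update(label) for label in frames]
print(fired)  # [False, False, False, True, False, False, False]
```

Requiring the pose to be held for a few frames also filters out single-frame misclassifications, at the cost of a short delay before the click.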

I hope this was a fun project to implement. Thanks for reading!

Frequently Asked Questions

Q1. What is Teachable Machine?

A. Teachable Machine is a user-friendly tool that enables people to create their own machine learning models without extensive coding knowledge. It allows users to train a computer to recognize and classify various inputs, such as images, sounds, or gestures. By providing labeled examples, users can teach the machine what different things look or sound like. The tool then uses this training data to create a model that can recognize similar inputs and make predictions. Teachable Machine empowers users to build interactive applications, prototypes, or educational projects that can understand and respond to specific inputs based on the training provided.

Q2. Is Teachable Machine free to use?

A. Yes, Teachable Machine is completely free to use. You can train a model to recognize pictures, sounds, or poses by showing it labeled examples, without any costs or fees. It’s a fun and accessible way for anyone to get started with machine learning without spending any money.