Description of the problem

Gradient Descent is an algorithm that finds local extremum points of a real valued function with several variables. As such it is a go-to algorithm for many optimization problems that appear in the context of machine learning. I wrote an implementation optimizing Linear Regression and Logistic Regression cost functions in Common Lisp in the past.

These cost functions that we would like to optimize, calculate an average error over a whole data set we have at hand. Thus, as the data set grows the price we have to pay (both time- and space-wise) grows. One option is to take a large-enough random sample from the data set and take the average cost over this representative sample. Thus we arrive at Stochastic Gradient Descent.

An implementation

The following is a faithful clojure translation of the code I wrote in Common Lisp.

(defn descent [f xs error rate epochs] (let [ns (range (count xs)) r (/ rate error 2)] (letfn [(delta [ys i e] (into [] (map (fn [j y] (if (= i j) (+ y e) y)) ns ys))) (step [ys] (into [] (map (fn [i y] (- y (* r (- (f (delta ys i error)) (f (delta ys i (- error))))))) ns ys))) (norm [ys] (Math/sqrt (reduce (fn [u v] (+ u (* v v))) 0 ys)))] (loop [ys xs m 0] (let [zs (step ys)] ;; (if (= 0 (mod m 20)) (println zs)) (if (or (> m epochs) (< (norm (map - zs ys)) error)) ys (recur zs (inc m))))))))

#'user/descent

Let me test this on the ordinary linear regression cost function. I am not going to explain the theory behind it. If you are so inclined, you should check my previous post where I explained why we need the following function (sans the sampling/stochastic bits) for our optimization routine.

(defn least-sq [data sample-rate] (letfn [(dot [ys zs] (reduce + (map * ys zs))) (hlp [ys zs] (let [y (first ys) x (rest ys) u (dot (conj (into [] x) 1) zs) v (- y u)] (* v v)))] (let [n (int (* sample-rate (count data))) r (/ n) sample (repeatedly n (fn [] (rand-nth data)))] (fn [xs] (* r (reduce + (map hlp sample (repeat n xs))))))))

#'user/least-sq

I need to generate a random test data set and generate the specific cost function for that data set. We do that as follows:

(let [data (repeatedly 100 (fn [] (let [x (rand 1.0)] [(+ (* 12.0 x) (- (rand 1.0) 0.5)) x]))) errfn (least-sq data 0.25)] (descent errfn [0.0 0.0] 0.02 0.2 1000))

[11.121470442729198 0.4521003889249443]

The result is encouraging.

Now, let us move to the logistic regression cost function.

(defn logistic [data sample-rate] (letfn [(dot [ys zs] (reduce + (map * ys zs))) (hlp [ys zs] (let [y (first ys) x (rest ys) p (/ 1.0 (+ 1.0 (Math/exp (- (dot (conj (into [] x) 1) zs)))))] (+ (* (+ 1 y) (Math/log p)) (* (- 1 y) (Math/log (- 1.0 p))))))] (let [n (int (* sample-rate (count data))) r (/ -1.0 n) sample (repeatedly n (fn [] (rand-nth data)))] (fn [xs] (* r (reduce + (map hlp sample (repeat n xs))))))))

#'user/logistic

(let [data (concat (repeatedly 600 (fn [] (let [x (rand 1.0)] [-1 x (+ (* -0.2 x) (- 0.2 (rand 1.2)))]))) (repeatedly 450 (fn [] (let [x (rand 1.0)] [ 1 x (+ (* -0.3 x) (rand 0.9))])))) errfn (logistic data 0.25) theta (descent errfn [0.0 0.0 0.0] 0.01 0.1 2000) slope (/ (nth theta 0) (nth theta 1) -1) intercept (/ (nth theta 2) (nth theta 1) -1)] [slope intercept])

[-0.0365938480224255 0.05372144183008341]

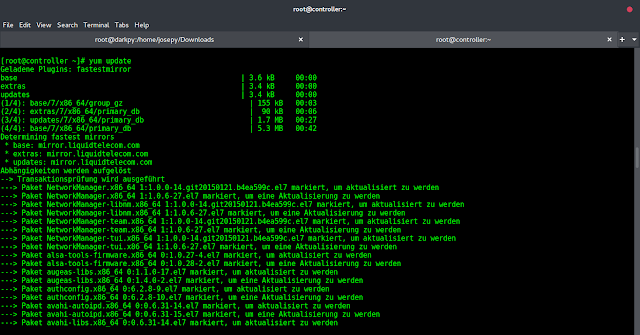

When we plot this we get