Google Dorking, also known as Google hacking, is a technique used by hackers and security researchers to find sensitive information on websites using Google's search engine.

Search Filters

Google Dorking uses Google's advanced search operators to look for specific keywords, file types, or website parameters. These operators can be combined into more powerful queries that reveal information that would otherwise be hard to find.

Some examples of advanced search operators used in Google Dorking include:

| Dork | Description | Example |
|---|---|---|
| allintext | Finds pages whose body text contains all of the given keywords. | allintext:"keyword" |
| intext | Finds pages whose body text contains the given keyword. | intext:"keyword" |
| inurl | Finds pages whose URL contains the given keyword. | inurl:"keyword" |
| intitle | Finds pages whose title contains the given keyword. | intitle:"keyword" |
| site | Restricts the results to the specified site. | site:"www.geeksforgeeks.org" |
| filetype | Restricts the results to the specified file type. | filetype:"pdf" |
| link | Finds pages that link to the specified URL (no longer supported by Google). | link:www.geeksforgeeks.org |
| related | Lists web pages that are "similar" to the specified page. | related:www.geeksforgeeks.org |
| cache | Shows the copy of the page stored in Google's cache (Google has retired its cache, so this no longer returns results). | cache:www.geeksforgeeks.org |

These are some of the most commonly used dorks, but the list is not exhaustive: you can build your own custom dorks by combining existing ones. For a large collection of ready-made dorks, see the Google Hacking Database (GHDB).
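Because dorks are plain text, building a custom one is just string assembly. The sketch below uses two hypothetical helpers, `dork` and `google_search_url` (not an official Google API), to format `operator:value` terms and URL-encode the finished query. It assumes you want a standard `https://www.google.com/search?q=` link; pasting the query into the search box works just as well.

```python
from urllib.parse import urlencode


def dork(operator: str, value: str) -> str:
    """Format one operator:value term, quoting values that contain spaces."""
    if " " in value:
        value = f'"{value}"'
    return f"{operator}:{value}"


def google_search_url(*terms: str) -> str:
    """Join terms with spaces (which Google treats as AND) and URL-encode the query."""
    query = " ".join(terms)
    return "https://www.google.com/search?" + urlencode({"q": query})


# Custom dork: PDF files hosted on www.geeksforgeeks.org.
terms = [dork("site", "www.geeksforgeeks.org"), dork("filetype", "pdf")]
print(" ".join(terms))  # site:www.geeksforgeeks.org filetype:pdf
print(google_search_url(*terms))
# https://www.google.com/search?q=site%3Awww.geeksforgeeks.org+filetype%3Apdf
```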

Examples

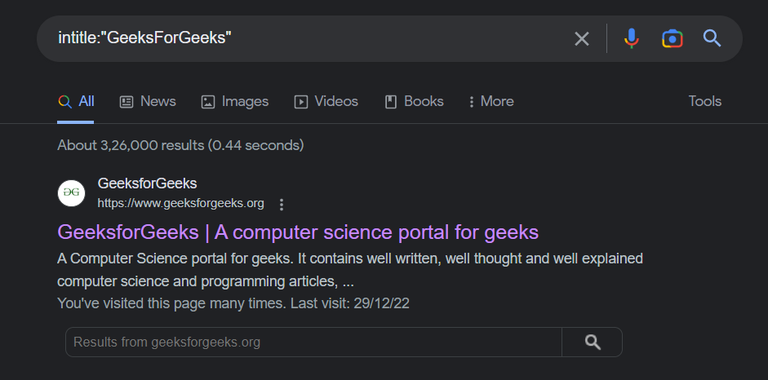

As an example, the dork intitle:"GeeksForGeeks" returns pages that have GeeksForGeeks in their title:

intitle:"GeeksForGeeks" (there are many more results; run the query yourself to explore them)

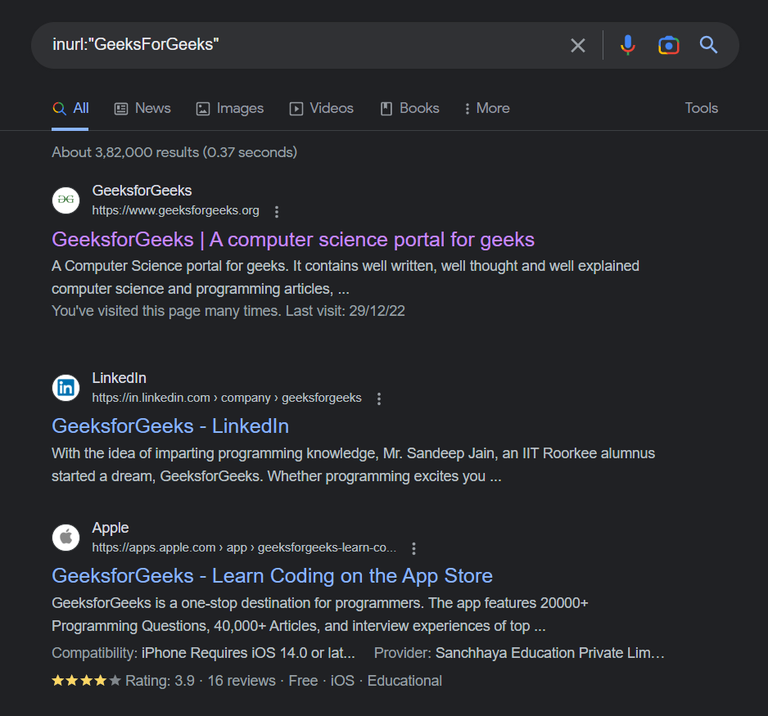

Similarly, inurl:"GeeksForGeeks" returns pages that have GeeksForGeeks in their URL:

inurl:"GeeksForGeeks" (try it yourself to learn more)

Other Operators

Apart from the dorks above, Google also supports logical operators that refine search results further. Most of them are self-explanatory:

- OR (|): Returns results that match either search term; the pipe character | is shorthand for OR.

site:geeksforgeeks.org | site:www.geeksforgeeks.org

- AND: Returns only results that match every search term. Google already treats space-separated terms as AND, so listing the terms one after another has this effect.

site:www.geeksforgeeks.org "GeeksForGeeks POTD"

- Exact phrase ("..."): Returns only results that contain the exact phrase inside the quotation marks.

"GeeksForGeeks POTD"

- Wildcard (*): Stands in for an unknown part of the query. Placed in front of a domain, it matches that domain's subdomains.

site:*.neveropen.tech

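- Include (+): Requires the term that follows to appear in the results. Google stopped honoring + as an operator in 2011, so wrap a term in quotation marks when it must appear exactly.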

site:linkedin.com +site:linkedin.*

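- Exclude (-): Removes results that match the term that follows. The example below keeps results from linkedin.* domains but drops those from linkedin.com.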

site:linkedin.* -site:linkedin.com
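The logical operators are also just text, so they compose the same way as dorks. The hypothetical helpers below (`any_of`, `phrase`, `exclude`) rebuild example queries from this list; they only produce query strings and make no assumptions about how Google ranks the results.

```python
def any_of(*terms: str) -> str:
    """OR: match results that contain at least one of the terms."""
    return " OR ".join(terms)


def phrase(text: str) -> str:
    """Exact phrase: wrap the text in double quotes."""
    return f'"{text}"'


def exclude(term: str) -> str:
    """Exclusion: prefix the term with '-' to drop matching results."""
    return f"-{term}"


print(any_of("site:geeksforgeeks.org", "site:www.geeksforgeeks.org"))
# site:geeksforgeeks.org OR site:www.geeksforgeeks.org
print(phrase("GeeksForGeeks POTD"))
# "GeeksForGeeks POTD"
print(" ".join(["site:linkedin.*", exclude("site:linkedin.com")]))
# site:linkedin.* -site:linkedin.com
```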

These operators can be combined with the dorks above in any Google search.

While Google Dorking can be used for legitimate purposes such as auditing a website for security vulnerabilities, attackers also use it to find sensitive information such as usernames, passwords, and other confidential data. Website owners should therefore secure their sites and avoid exposing sensitive information in publicly accessible directories.
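One practical way to act on this is to scan your own web root for the kinds of files that filetype: dorks commonly target, before a crawler finds them. The sketch below assumes your public files live under a single directory; `find_exposed_files` is a hypothetical helper, and the list of risky name endings is illustrative rather than exhaustive.

```python
from pathlib import Path

# Illustrative (not exhaustive) name endings that often indicate data that should not be public.
RISKY_ENDINGS = (".sql", ".env", ".log", ".bak", ".conf")


def find_exposed_files(web_root: str) -> list[str]:
    """Return POSIX-style paths, relative to web_root, of files with a risky ending."""
    root = Path(web_root)
    return sorted(
        p.relative_to(root).as_posix()
        for p in root.rglob("*")
        # Match on the full name so dotfiles like ".env" (which have no suffix) are caught.
        if p.is_file() and p.name.lower().endswith(RISKY_ENDINGS)
    )
```

For example, calling `find_exposed_files("public_html")` on a hypothetical `public_html` directory returns the relative paths you should move out of the web root or protect.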

In addition, internet users should also be careful about the information they share online and use strong, unique passwords for each of their online accounts to avoid falling victim to a cyber attack.

Overall, Google Dorking is a powerful technique that can be used for both good and bad purposes. Website owners and internet users should be aware of its potential risks and take steps to protect themselves from any potential security breaches.

Prevention From Google Dorking

As a website owner or developer, you can reduce your site's exposure to Google Dorking with the following measures:

- Use robots.txt and robots meta tags: A robots.txt file tells search engine crawlers not to crawl particular pages or directories, which reduces the chance that they show up in the results dorks search. Keep in mind that robots.txt is publicly readable and only honored by well-behaved crawlers, so it complements access control rather than replacing it. To keep an individual page out of the index, add a robots meta tag to its <head>, for example:

<meta name="robots" content="noindex, nofollow"> <!-- Asks compliant crawlers not to index this page or follow its links -->

To learn more about robots.txt, see the GeeksforGeeks article on the topic.

- Disable Directory Indexing: Many web servers can display a directory's contents when it has no index page, letting anyone, including search engine crawlers, browse and index its files. Turn off directory listing in your web server configuration (for example, Options -Indexes in Apache).

- Use a Firewall: A WAF (Web Application Firewall) filters malicious HTTP requests before they reach your application, adding an extra layer of security.

- Use Access Control: Protect pages that should not be public with authentication, ideally MFA (Multi-Factor Authentication). Content behind a login cannot be crawled, so it cannot appear in search results, and unauthorized users cannot open it.
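To see how a well-behaved crawler interprets the robots.txt advice above, the sketch below uses Python's standard `urllib.robotparser` module. The rules, the `/admin/` and `/backups/` paths, and `example.com` are hypothetical. Notice that the Disallow lines themselves reveal those paths to anyone who fetches robots.txt, which is why sensitive directories still need real access control.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com: block every crawler from two directories.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /backups/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse rules from memory instead of fetching them

# Ask how a compliant crawler (here, Googlebot) would treat each URL.
for path in ["/admin/login.php", "/backups/db.sql", "/articles/"]:
    allowed = parser.can_fetch("Googlebot", "https://example.com" + path)
    print(path, "->", "allowed" if allowed else "disallowed")
```

This prints `disallowed` for the two blocked paths and `allowed` for `/articles/`.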

Together, these measures help protect your website from Google Dorking.
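The directory-indexing advice can be reproduced locally with Python's built-in `http.server` module, whose `SimpleHTTPRequestHandler` lists a directory's contents by default when it has no index page. The sketch below assumes a Python static server rather than Apache or nginx (and `http.server` is meant for local testing, not production): it overrides `list_directory()` so such directories return 403 Forbidden. `NoListingHandler` and `make_server` are hypothetical names.

```python
import functools
import http.server
from http import HTTPStatus


class NoListingHandler(http.server.SimpleHTTPRequestHandler):
    """Serve files from a directory but refuse to generate directory listings."""

    def list_directory(self, path):
        # SimpleHTTPRequestHandler calls list_directory() for a directory that has
        # no index.html/index.htm. Sending 403 and returning None suppresses the listing.
        self.send_error(HTTPStatus.FORBIDDEN, "Directory listing is disabled")
        return None


def make_server(directory: str, port: int = 8000) -> http.server.ThreadingHTTPServer:
    """Build a local server for `directory` that uses NoListingHandler."""
    handler = functools.partial(NoListingHandler, directory=directory)
    return http.server.ThreadingHTTPServer(("127.0.0.1", port), handler)
```

For example, `make_server("public_html").serve_forever()` serves a hypothetical `public_html` directory: individual files are still served, but requesting a folder without an index page returns 403 instead of a file list.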

Note: This article is only for educational purposes.