This article was published as a part of the Data Science Blogathon.

Introduction

“Machine intelligence is the last invention that humanity will ever need to make.” - Nick Bostrom

Having discussed RNNs in my previous post, it’s time to explore LSTMs for long memories. Since LSTMs take previous knowledge into consideration, it would be good for you to also have a look at my previous article on RNNs (relatable, right?).

https://www.geeksforgeeks.org/blog/2020/10/recurrent-neural-networks-for-sequence-learning/

Let’s take an example. Suppose I show you an image and, two minutes later, ask you about it: you will probably remember its content. But if I ask about the same image a few days later, the information might have faded or been lost entirely, right? The first case is where RNNs suffice (short memories), while the second is where we need LSTMs for long memory capacity. That clears up some doubts, right?

For more clarity, let’s take another example. Suppose you are watching a movie without knowing its name (e.g. Justice League). In one frame you see Ben Affleck and think this might be a Batman movie; in another frame you see Gal Gadot and think it could be Wonder Woman, right? But after seeing a few more frames, you can be sure that this is Justice League, because you are using knowledge acquired from past frames. This is exactly what LSTMs do, using the following mechanisms:

1. Forgetting Mechanism: Forget all scene-related information that is not worth remembering.

2. Saving Mechanism: Save information that is important and can help in the future.

Now that we know when to use LSTMs, let’s discuss the basics of how they work.

The architecture of LSTM:

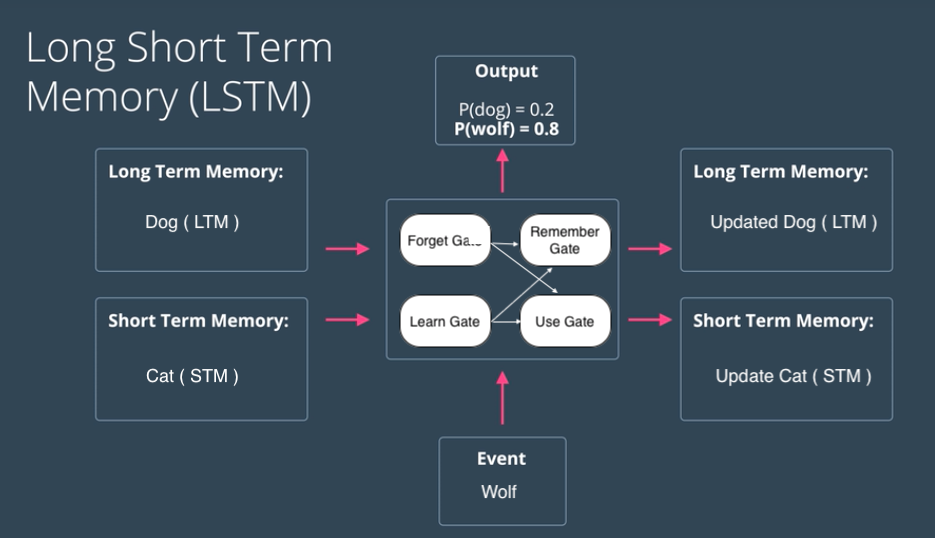

LSTMs deal with both Long Term Memory (LTM) and Short Term Memory (STM), and to keep the calculations simple and effective they use the concept of gates.
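To make the idea of a gate concrete: a gate is usually a vector of values squashed into the range 0 to 1 by a sigmoid, which is then multiplied element-wise with the information flowing through it. A value near 1 lets an entry pass, and a value near 0 blocks it. The minimal Python sketch below illustrates this; the function names and numbers are hypothetical, chosen only for illustration.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid: squashes any real number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-x))


def apply_gate(gate_scores: list[float], values: list[float]) -> list[float]:
    """Convert raw gate scores to factors between 0 and 1 and scale each value element-wise."""
    factors = [sigmoid(s) for s in gate_scores]
    return [f * v for f, v in zip(factors, values)]


# Hypothetical memory entries and hand-picked gate scores.
memory = [2.0, -1.0, 0.5]
gate_scores = [10.0, -10.0, 0.0]  # roughly: keep, block, keep half
print(apply_gate(gate_scores, memory))
```

The printed values are close to 2.0, 0.0 and 0.25: the first entry passes almost unchanged, the second is almost completely blocked, and the third is halved. In a real LSTM the gate scores are not hand-picked; they are computed from the current input and memory with learned weights.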

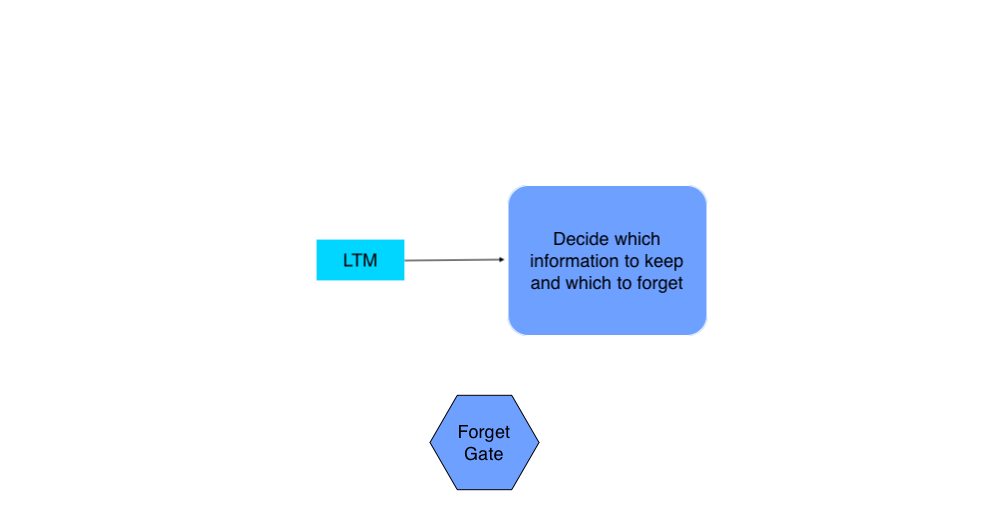

- Forget Gate: The LTM goes into the Forget Gate, which forgets the information that is not useful.

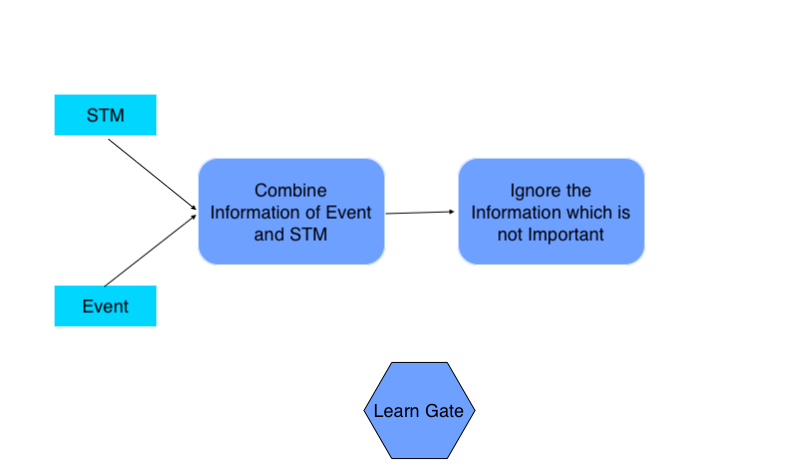

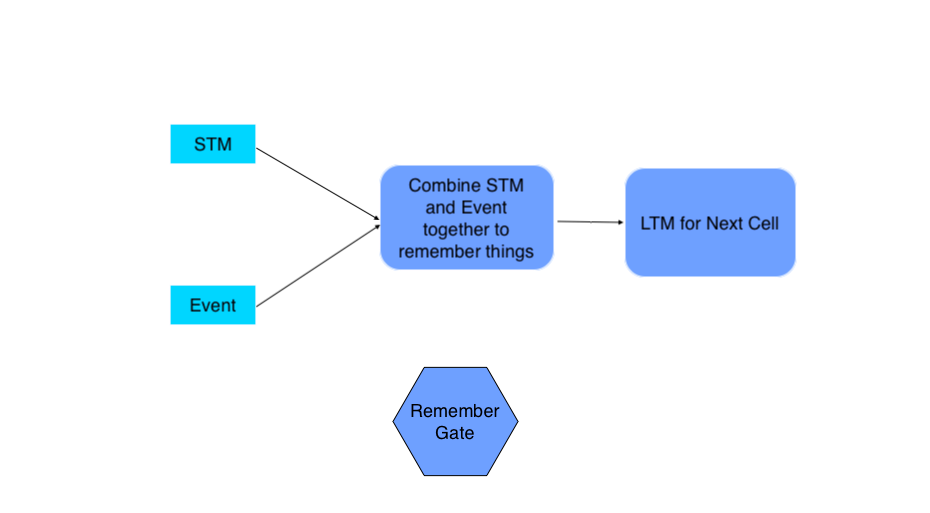

- Learn Gate: The Event (current input) and the STM are combined so that the necessary information we have recently learned (held in the STM) can be applied to the current input.

- Remember Gate: The LTM information that we haven’t forgotten is combined with the STM and the Event in the Remember Gate, whose output works as the updated LTM.

- Use Gate: This gate also uses the LTM, STM, and Event to predict the output for the current event; this output also works as the updated STM.

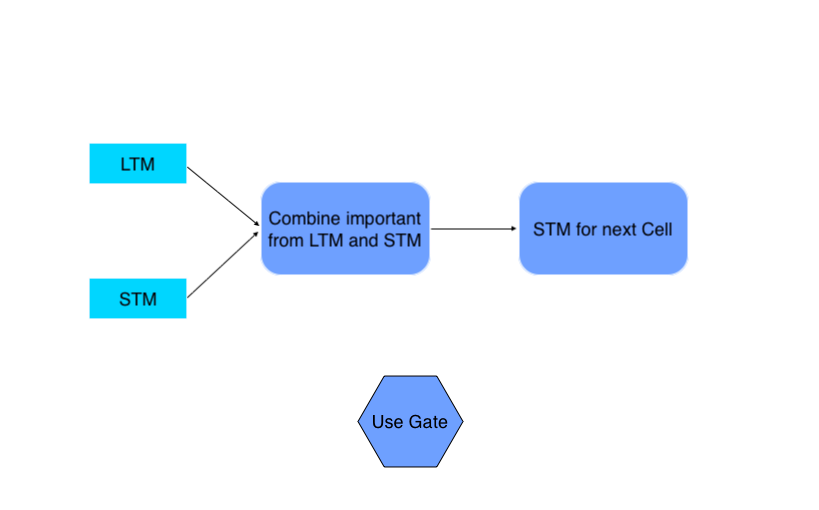

Figure: Remember Gate

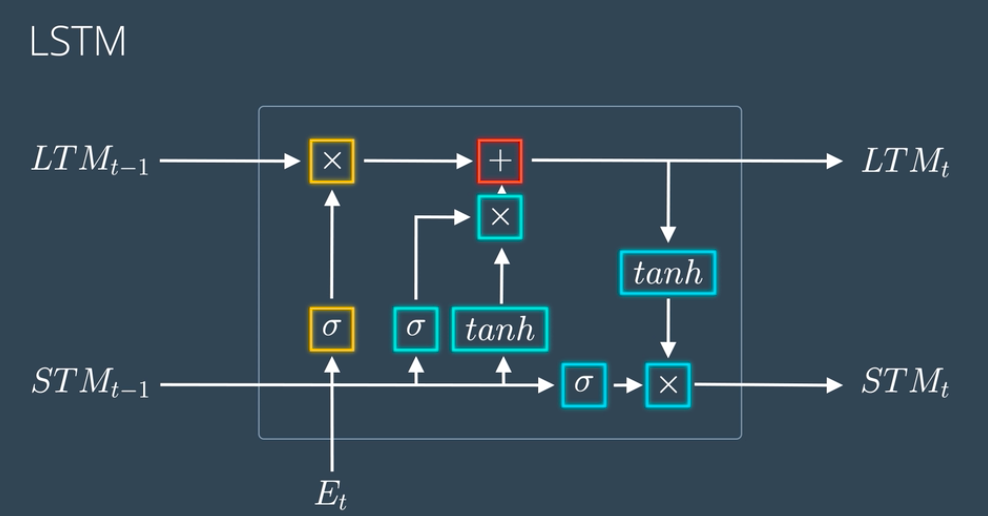

The above figure shows the simplified architecture of LSTMs. The actual mathematical architecture of LSTM is represented in the following figure:

Don’t go haywire over this architecture: we will break it down into simpler steps that make it a piece of cake to grasp.

Breaking Down the Architecture of LSTM:

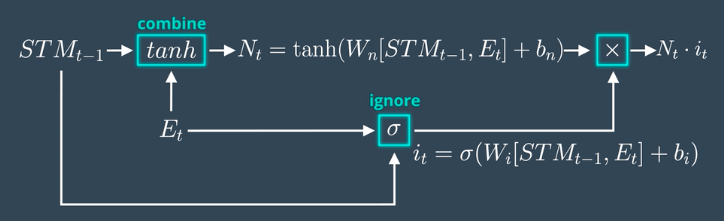

1. Learn Gate: Takes the Event ($E_t$) and the Previous Short Term Memory ($STM_{t-1}$) as input and keeps only the information relevant for prediction.

Calculation:

- The Previous Short Term Memory $STM_{t-1}$ and the Current Event vector $E_t$ are joined together as $[STM_{t-1}, E_t]$, multiplied by the weight matrix $W_n$, and a bias $b_n$ is added. The result is passed through the tanh (hyperbolic tangent) function to introduce non-linearity, which finally creates the matrix $N_t = \tanh(W_n [STM_{t-1}, E_t] + b_n)$.

- To ignore insignificant information, we calculate an Ignore Factor $i_t$: we join $STM_{t-1}$ and $E_t$, multiply by the weight matrix $W_i$, add a bias $b_i$, and pass the result through the sigmoid activation function, giving $i_t = \sigma(W_i [STM_{t-1}, E_t] + b_i)$.

- The Learn Matrix $N_t$ and the Ignore Factor $i_t$ are multiplied element-wise to produce the Learn Gate result, $N_t \cdot i_t$.
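The Learn Gate steps above can be sketched in plain Python as follows. This is a minimal illustration, not a production implementation: the helper names (`linear`, `learn_gate`), the tiny sizes (2 memory units, 1 input feature) and all weight values are hypothetical, and vectors are plain lists so that concatenating $[STM_{t-1}, E_t]$ is just list `+`.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, used for the ignore factor."""
    return 1.0 / (1.0 + math.exp(-x))


def linear(W: list[list[float]], x: list[float], b: list[float]) -> list[float]:
    """Compute W @ x + b, with W stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def learn_gate(stm_prev, event, W_n, b_n, W_i, b_i):
    """Return N_t * i_t (element-wise) for one time step."""
    x = stm_prev + event                               # [STM_{t-1}, E_t] via list concatenation
    n_t = [math.tanh(z) for z in linear(W_n, x, b_n)]  # learn matrix N_t, values in (-1, 1)
    i_t = [sigmoid(z) for z in linear(W_i, x, b_i)]    # ignore factor i_t, values in (0, 1)
    return [n * i for n, i in zip(n_t, i_t)]


# Hypothetical sizes: 2 memory units, 1 input feature, so W_n and W_i are 2 x 3.
stm_prev = [0.5, -0.5]
event = [1.0]
W_n = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
W_i = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
b_n = [0.0, 0.0]
b_i = [0.0, 100.0]  # ignore factor is 0.5 for unit 0 and about 1 for unit 1
print(learn_gate(stm_prev, event, W_n, b_n, W_i, b_i))
```

This prints roughly `[0.453, -0.462]`: the first unit's candidate value is halved by its ignore factor, while the second passes through almost untouched.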

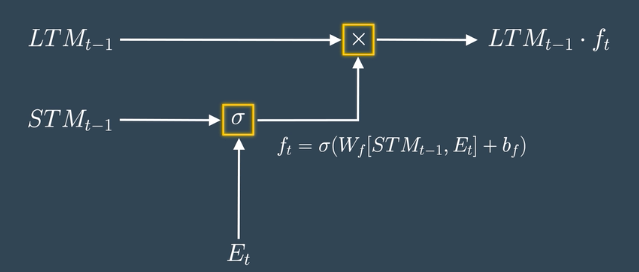

2. The Forget Gate: Takes the Previous Long Term Memory ($LTM_{t-1}$) as input and decides which information should be kept and which should be forgotten.

Calculation:

- The Previous Short Term Memory $STM_{t-1}$ and the Current Event vector $E_t$ are joined together as $[STM_{t-1}, E_t]$, multiplied by the weight matrix $W_f$, and passed with a bias $b_f$ through the sigmoid activation function to form the Forget Factor $f_t = \sigma(W_f [STM_{t-1}, E_t] + b_f)$.

- The Forget Factor $f_t$ is then multiplied element-wise with the Previous Long Term Memory ($LTM_{t-1}$) to produce the Forget Gate output, $LTM_{t-1} \cdot f_t$.
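A minimal plain-Python sketch of the Forget Gate, under the same assumptions as a toy example (hypothetical helper names, 2 memory units, 1 input feature). The weights are set to zero on purpose so that the biases alone decide what is kept, which makes the effect of $f_t$ easy to see.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, used for the forget factor."""
    return 1.0 / (1.0 + math.exp(-x))


def linear(W: list[list[float]], x: list[float], b: list[float]) -> list[float]:
    """Compute W @ x + b, with W stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def forget_gate(ltm_prev, stm_prev, event, W_f, b_f):
    """Return LTM_{t-1} * f_t (element-wise) for one time step."""
    x = stm_prev + event                             # [STM_{t-1}, E_t]
    f_t = [sigmoid(z) for z in linear(W_f, x, b_f)]  # forget factor f_t
    return [c * f for c, f in zip(ltm_prev, f_t)]


# Hypothetical example: zero weights, so the biases alone decide what is kept.
ltm_prev = [3.0, 3.0]
W_f = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
b_f = [100.0, -100.0]  # keep unit 0, forget unit 1
print(forget_gate(ltm_prev, [0.5, -0.5], [1.0], W_f, b_f))
```

The first LTM entry is kept as 3.0, while the second is driven to practically zero (on the order of 1e-43).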

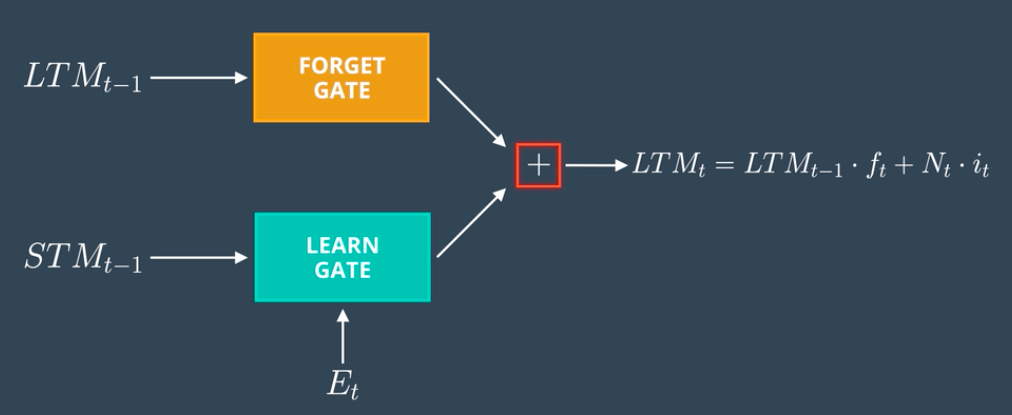

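3. The Remember Gate: Combines the output of the Forget Gate (what is kept from $LTM_{t-1}$) with the output of the Learn Gate (what is learned from $STM_{t-1}$ and $E_t$) to produce the new Long Term Memory.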

Calculation:

- The outputs of the Forget Gate and the Learn Gate are added together, $LTM_t = LTM_{t-1} \cdot f_t + N_t \cdot i_t$, to produce the output of the Remember Gate, which becomes the LTM for the next cell.
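In symbols, the Remember Gate computes $LTM_t = LTM_{t-1} \cdot f_t + N_t \cdot i_t$. The tiny sketch below takes the two gate outputs as already computed; the function name and the numbers are hypothetical.

```python
def remember_gate(forget_out: list[float], learn_out: list[float]) -> list[float]:
    """New long-term memory LTM_t: element-wise sum of what was kept and what was learned."""
    return [kept + learned for kept, learned in zip(forget_out, learn_out)]


# Hypothetical gate outputs for a 3-unit memory.
forget_out = [3.0, 0.0, 0.25]  # LTM_{t-1} * f_t
learn_out = [0.5, -0.75, 0.0]  # N_t * i_t
print(remember_gate(forget_out, learn_out))  # [3.5, -0.75, 0.25]
```

The update is a sum rather than a product, so information that the forget gate keeps ($f_t$ close to 1) can be carried across many steps largely unchanged. This additive path is the usual explanation of why LSTMs cope better with long-term dependencies than plain RNNs.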

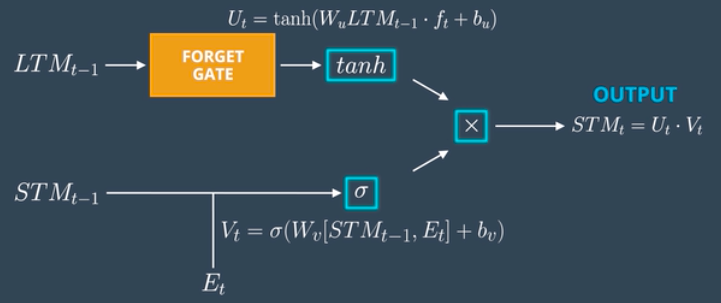

4. The Use Gate: Combines important information from the Previous Long Term Memory and the Previous Short Term Memory to create the STM for the next cell and produce the output for the current event.

Calculation:

Source: Udacity

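- The Previous Long Term Memory ($LTM_{t-1}$) is multiplied by a weight matrix $W_u$, a bias $b_u$ is added, and the result is passed through the tanh activation function to produce $U_t = \tanh(W_u LTM_{t-1} + b_u)$.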

- The Previous Short Term Memory ($STM_{t-1}$) and the Current Event ($E_t$) are joined together, multiplied by a weight matrix $W_v$, and passed with a bias $b_v$ through the sigmoid activation function to produce $V_t = \sigma(W_v [STM_{t-1}, E_t] + b_v)$.

- $U_t$ and $V_t$ are then multiplied element-wise to produce the output of the Use Gate, $STM_t = U_t \cdot V_t$, which also works as the STM for the next cell.
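Following the description above, the Use Gate can be sketched as $U_t = \tanh(W_u LTM_{t-1} + b_u)$, $V_t = \sigma(W_v [STM_{t-1}, E_t] + b_v)$ and $STM_t = U_t \cdot V_t$. The weight matrices $W_u$ and $W_v$ are an assumption here (a dense layer before each activation, as in the other gates), and all names and values are hypothetical. Note that the standard LSTM formulation (e.g. in Colah's post listed in the references) instead computes the output as $o_t \cdot \tanh(C_t)$ from the *new* cell state $C_t$, with no extra weight matrix on it.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, used for V_t."""
    return 1.0 / (1.0 + math.exp(-x))


def linear(W: list[list[float]], x: list[float], b: list[float]) -> list[float]:
    """Compute W @ x + b, with W stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def use_gate(ltm_prev, stm_prev, event, W_u, b_u, W_v, b_v):
    """Return STM_t = U_t * V_t (element-wise), which is also the output for this step."""
    u_t = [math.tanh(z) for z in linear(W_u, ltm_prev, b_u)]        # U_t from LTM_{t-1}
    v_t = [sigmoid(z) for z in linear(W_v, stm_prev + event, b_v)]  # V_t from [STM_{t-1}, E_t]
    return [u * v for u, v in zip(u_t, v_t)]


# Hypothetical example: identity W_u, zero W_v (so V_t = 0.5 everywhere).
ltm_prev = [2.0, -2.0]
W_u = [[1.0, 0.0], [0.0, 1.0]]
W_v = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
print(use_gate(ltm_prev, [0.5, -0.5], [1.0], W_u, [0.0, 0.0], W_v, [0.0, 0.0]))
```

This prints roughly `[0.482, -0.482]`: tanh squashes the long-term memory into (-1, 1), and $V_t$ then scales how much of it is exposed as the new STM.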

Now scroll back up to the architecture and put all these calculations together, and you will have your LSTM ready.
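Putting the four gates together, one time step maps $(LTM_{t-1}, STM_{t-1}, E_t)$ to $(LTM_t, STM_t)$, and a sequence is processed by repeating that step. Below is a minimal, self-contained pure-Python sketch that follows the gate equations described in this article (including a Use Gate that reads $LTM_{t-1}$). The parameter names, the random initialisation and the zero initial memories are assumptions for illustration, and there is no training code.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def linear(W: list[list[float]], x: list[float], b: list[float]) -> list[float]:
    """Compute W @ x + b, with W stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def init_params(input_size: int, hidden_size: int, seed: int = 0) -> dict:
    """Hypothetical initialisation: small uniform random weights, zero biases."""
    rng = random.Random(seed)
    concat = hidden_size + input_size  # length of [STM_{t-1}, E_t]

    def matrix(rows: int, cols: int) -> list[list[float]]:
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    params = {name: matrix(hidden_size, concat) for name in ("W_n", "W_i", "W_f", "W_v")}
    params["W_u"] = matrix(hidden_size, hidden_size)  # the Use Gate reads LTM_{t-1} only
    for name in ("b_n", "b_i", "b_f", "b_u", "b_v"):
        params[name] = [0.0] * hidden_size
    return params


def lstm_step(ltm_prev, stm_prev, event, p):
    """One time step: returns (LTM_t, STM_t)."""
    x = stm_prev + event                                         # [STM_{t-1}, E_t]
    n_t = [math.tanh(z) for z in linear(p["W_n"], x, p["b_n"])]  # Learn Gate: N_t
    i_t = [sigmoid(z) for z in linear(p["W_i"], x, p["b_i"])]    # Learn Gate: i_t
    f_t = [sigmoid(z) for z in linear(p["W_f"], x, p["b_f"])]    # Forget Gate: f_t
    # Remember Gate: LTM_t = LTM_{t-1} * f_t + N_t * i_t
    ltm_t = [c * f + n * i for c, f, n, i in zip(ltm_prev, f_t, n_t, i_t)]
    # Use Gate: STM_t = tanh(W_u LTM_{t-1} + b_u) * sigmoid(W_v [STM_{t-1}, E_t] + b_v)
    u_t = [math.tanh(z) for z in linear(p["W_u"], ltm_prev, p["b_u"])]
    v_t = [sigmoid(z) for z in linear(p["W_v"], x, p["b_v"])]
    stm_t = [u * v for u, v in zip(u_t, v_t)]
    return ltm_t, stm_t


def run_lstm(events, hidden_size, p):
    """Process a sequence of event vectors; return per-step outputs and the final LTM."""
    ltm = [0.0] * hidden_size  # assumed zero initial memories
    stm = [0.0] * hidden_size
    outputs = []
    for event in events:
        ltm, stm = lstm_step(ltm, stm, event, p)
        outputs.append(stm)  # the STM doubles as the output for this event
    return outputs, ltm


params = init_params(input_size=2, hidden_size=3)
sequence = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
outputs, final_ltm = run_lstm(sequence, hidden_size=3, p=params)
for t, out in enumerate(outputs, start=1):
    print(t, [round(v, 4) for v in out])
```

Each printed row is the STM (output) for one event. Because both memories start at zero and this Use Gate reads $LTM_{t-1}$, the output at the first step is exactly zero; later steps carry information forward through the LTM. In the standard formulation, `u_t` would instead be `tanh` applied element-wise to the new `ltm_t`, with no `W_u`.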

Usage of LSTMs:

Training LSTMs largely mitigates the Vanishing Gradient problem (gradients shrinking toward zero as they are propagated back through many time steps, so long-range dependencies stop being learned), but LSTMs can still face the Exploding Gradient problem (gradients growing so large that weight updates become unstable). LSTMs can be trained easily with Python frameworks like TensorFlow, PyTorch, and Theano, and the catch is the same as with RNNs: we need a GPU to train deeper LSTM networks in reasonable time.
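A rough numeric illustration of why the LSTM helps with vanishing gradients, under simplifying assumptions (scalar states, hypothetical constant values). In a plain RNN with $h_t = \tanh(w\,h_{t-1} + u\,x_t)$, backpropagating through one step multiplies the gradient by $w \cdot \tanh'(a_t)$, which is typically below 1. Along the direct LTM path of the Remember Gate, $LTM_t = LTM_{t-1} \cdot f_t + N_t \cdot i_t$, the corresponding factor is just $f_t$, if we ignore the indirect dependence of $f_t$, $N_t$ and $i_t$ on earlier steps through the STM. When the forget gate stays close to 1, the product over many steps shrinks far more slowly.

```python
import math


def rnn_gradient_factor(w: float, pre_activations: list[float]) -> float:
    """Product of per-step factors w * tanh'(a) for a scalar plain RNN, with tanh'(a) = 1 - tanh(a)**2."""
    g = 1.0
    for a in pre_activations:
        g *= w * (1.0 - math.tanh(a) ** 2)
    return g


def ltm_gradient_factor(forget_factors: list[float]) -> float:
    """Product of forget factors f_t along the direct LTM path (indirect paths ignored)."""
    g = 1.0
    for f in forget_factors:
        g *= f
    return g


steps = 50
print(rnn_gradient_factor(0.9, [0.5] * steps))  # about 3e-08: the signal has all but vanished
print(ltm_gradient_factor([0.99] * steps))      # about 0.605: most of the signal survives
```

This covers only the direct path, so it does not show that LSTMs never suffer from vanishing gradients; when $f_t$ is small, the LTM signal decays as well.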

Since LSTMs take care of long-term dependencies, they are widely used in tasks like Language Generation, Voice Recognition, and Image OCR Models. The technique is also getting noticed in Object Detection (mainly scene text detection).

References:

1. Exploring LSTMs: http://blog.echen.me/2017/05/30/exploring-lstms/

2. Understanding LSTM Networks: http://colah.github.io/posts/2015-08-Understanding-LSTMs/

3. Udacity Deep Learning: https://www.udacity.com/

These are some of the best articles on LSTMs; I suggest going through them for a deeper understanding.

Thanks for reading this article! Do like it if you have learned something new, and feel free to comment. See you next time! ❤️

The media shown in this article are not owned by Analytics Vidhya and are used at the Author’s discretion.