This article was published as a part of the Data Science Blogathon

Hyperparameters

Hyperparameters are the settings that control how an Artificial Neural Network is trained; tuning them well can produce high-quality solutions. Unlike the weights of the model, hyperparameters are not learned during the training process. They are set before training starts and remain constant while the model trains. Finding optimal hyperparameters is a search problem, and the search might or might not end at the best possible solution, which is the global optimum.

The hyperparameters of an Artificial Neural Network include:

- Number of layers to choose

- Number of neurons in a layer to choose

- Choice of the optimization function

- Choice of the learning rate for optimization function

- Choice of the loss function

- Choice of metrics

- Choice of activation function

- Choice of layer weight initialization

The importance of Hyperparameter Tuning

Hyperparameter tuning is the process of searching for an optimal set of hyperparameters. It is really hard to find that set manually, so there are algorithms that make the search easier. Grid search performs an exhaustive search over every combination, which is time-consuming by nature. An alternative is the Random Search algorithm, which samples the hyperparameter search space at random but doesn’t guarantee a globally optimal solution. Bayesian optimization, Hyperband, and hyperparameter optimization using Genetic algorithms search more efficiently and are more likely to get close to a globally optimal solution, although none of them guarantees one.
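The difference between grid search and random search can be sketched in a few lines of plain Python. This is only an illustration: the search space, the `toy_loss` function, and the "best" setting it encodes are made up for the example, and `toy_loss` stands in for actually training a model and measuring its validation loss.

```python
import itertools
import random

# Hypothetical search space; the names and values are illustrative only.
SEARCH_SPACE = {
    "units": [32, 64, 128, 256],
    "learning_rate": [1e-2, 1e-3, 1e-4],
}


def toy_loss(config):
    """Stand-in for 'train the model and return its validation loss'."""
    # Assumption for the example: the ideal setting is units=128, lr=1e-3.
    return (abs(config["units"] - 128) / 128
            + abs(config["learning_rate"] - 1e-3) * 100)


def grid_search(space, loss_fn):
    """Evaluate every combination in the space and return the best one."""
    names = list(space)
    candidates = [dict(zip(names, values))
                  for values in itertools.product(*space.values())]
    return min(candidates, key=loss_fn), len(candidates)


def random_search(space, loss_fn, n_trials, seed=0):
    """Evaluate n_trials randomly sampled combinations, return the best."""
    rng = random.Random(seed)
    candidates = [{name: rng.choice(values) for name, values in space.items()}
                  for _ in range(n_trials)]
    return min(candidates, key=loss_fn), len(candidates)


grid_best, grid_evals = grid_search(SEARCH_SPACE, toy_loss)
random_best, random_evals = random_search(SEARCH_SPACE, toy_loss, n_trials=5)
print("grid:  ", grid_best, "after", grid_evals, "evaluations")
print("random:", random_best, "after", random_evals, "evaluations")
```

Grid search always finds the best combination in the grid but pays for every evaluation; random search uses a fixed budget and may or may not hit the best combination.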

How do we evaluate a set of hyperparameters?

The hyperparameters are evaluated based on the loss of the model’s predictions, i.e. the hyperparameters are set on the model, the model is trained on the data, and the performance of the model is measured with the loss function. These steps are repeated for each candidate set to find the best one. As said earlier, we might or might not end up with a globally optimal solution.
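As a minimal sketch of that loop, the example below tunes a single hyperparameter, the learning rate, for a one-weight model trained with gradient descent. The model, the data, and the function name `train_and_evaluate` are assumptions made for illustration; a real tuner does the same thing with a full neural network.

```python
def train_and_evaluate(learning_rate, epochs, train, val):
    """Fit y = w * x by gradient descent, then return the validation MSE."""
    w = 0.0
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w
        grad = sum(2 * (w * x - y) * x for x, y in train) / len(train)
        w -= learning_rate * grad
    return sum((w * x - y) ** 2 for x, y in val) / len(val)


# Toy data generated from y = 3x
train = [(x, 3.0 * x) for x in (1.0, 2.0, 3.0, 4.0)]
val = [(x, 3.0 * x) for x in (5.0, 6.0)]

# Set the hyperparameter, train, evaluate; repeat for every candidate.
results = {}
for lr in (0.1, 0.01, 0.001):
    results[lr] = train_and_evaluate(lr, epochs=20, train=train, val=val)

best_lr = min(results, key=results.get)
print("validation loss per learning rate:", results)
print("best learning rate:", best_lr)
```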

Keras Tuner

Keras Tuner is an open-source Python library developed for tuning the hyperparameters of Artificial Neural Networks. Keras Tuner currently supports four types of tuners or algorithms, namely,

- Bayesian Optimization

- Hyperband

- Sklearn

- Random Search

You can install the Keras tuner on your system using the following command,

pip install keras-tuner

Getting started with Keras Tuner

The model you want to tune is called the hypermodel. To work with Keras Tuner you must define your hypermodel in either of the following two ways,

- Using model builder function

- By subclassing HyperModel class available in Keras tuner

Fine-tuning models using Keras-tuner

Import the required libraries. Here we use the California housing dataset, which is readily available in Google Colab.

import math
import pandas as pd
import tensorflow as tf
import matplotlib.pyplot as plt
from tensorflow.keras import Model
from tensorflow.keras import Sequential
from tensorflow.keras.optimizers import Adam
from sklearn.preprocessing import StandardScaler
from tensorflow.keras.layers import Dense, Dropout
from sklearn.model_selection import train_test_split
from tensorflow.keras.losses import MeanSquaredLogarithmicError

TRAIN_DATA_PATH = '/content/sample_data/california_housing_train.csv'
TEST_DATA_PATH = '/content/sample_data/california_housing_test.csv'
TARGET_NAME = 'median_house_value'

# read the training and test data
train_data = pd.read_csv(TRAIN_DATA_PATH)
test_data = pd.read_csv(TEST_DATA_PATH)

# split the data into features and target
x_train, y_train = train_data.drop(TARGET_NAME, axis=1), train_data[TARGET_NAME]
x_test, y_test = test_data.drop(TARGET_NAME, axis=1), test_data[TARGET_NAME]

Scale the datasets using Sklearn’s StandardScaler. This step helps the model converge to good parameters.

def scale_datasets(x_train, x_test):
    """
    Standard Scale test and train data
    Z - Score normalization
    """
    standard_scaler = StandardScaler()
    # fit the scaler on the training data only, then reuse it for the test data
    x_train_scaled = pd.DataFrame(
        standard_scaler.fit_transform(x_train),
        columns=x_train.columns
    )
    x_test_scaled = pd.DataFrame(
        standard_scaler.transform(x_test),
        columns=x_test.columns
    )
    return x_train_scaled, x_test_scaled

# scale the dataset
x_train_scaled, x_test_scaled = scale_datasets(x_train, x_test)
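To see what the Z-score normalization does to a single column, here is a small plain-Python sketch. The helper names `fit_scaler` and `transform` and the sample numbers are made up for illustration; like StandardScaler, the sketch uses the population standard deviation and reuses the statistics learned from the training data when scaling the test data.

```python
import statistics


def fit_scaler(column):
    """Return the mean and population standard deviation of a column."""
    return statistics.fmean(column), statistics.pstdev(column)


def transform(column, mean, std):
    """Apply z = (x - mean) / std to every value in the column."""
    return [(value - mean) / std for value in column]


train_col = [10.0, 20.0, 30.0, 40.0]
test_col = [25.0, 50.0]

# Statistics come from the training column only.
mean, std = fit_scaler(train_col)
train_scaled = transform(train_col, mean, std)
test_scaled = transform(test_col, mean, std)

print("train:", train_scaled)
print("test: ", test_scaled)
```

After scaling, the training column has mean 0 and standard deviation 1; the test column generally does not, because it is scaled with the training statistics.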

Let’s start tuning the model with Keras Tuner. The following tuner is defined with the model builder function.

import keras_tuner as kt  # older releases used `import kerastuner as kt`

msle = MeanSquaredLogarithmicError()

def build_model(hp):
    model = tf.keras.Sequential()

    # Tune the number of units in each of the three hidden Dense layers
    # Choose a value between 32 and 512 in steps of 32
    hp_units1 = hp.Int('units1', min_value=32, max_value=512, step=32)
    hp_units2 = hp.Int('units2', min_value=32, max_value=512, step=32)
    hp_units3 = hp.Int('units3', min_value=32, max_value=512, step=32)
    model.add(Dense(units=hp_units1, activation='relu'))
    model.add(tf.keras.layers.Dense(units=hp_units2, activation='relu'))
    model.add(tf.keras.layers.Dense(units=hp_units3, activation='relu'))
    model.add(Dense(1, kernel_initializer='normal', activation='linear'))

    # Tune the learning rate for the optimizer
    # Choose an optimal value from 0.01, 0.001, or 0.0001
    hp_learning_rate = hp.Choice('learning_rate', values=[1e-2, 1e-3, 1e-4])

    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=hp_learning_rate),
        loss=msle,
        metrics=[msle]
    )
    return model

# HyperBand algorithm from keras tuner
tuner = kt.Hyperband(
    build_model,
    objective='val_mean_squared_logarithmic_error',
    max_epochs=10,
    directory='keras_tuner_dir',
    project_name='keras_tuner_demo'
)

tuner.search(x_train_scaled, y_train, epochs=10, validation_split=0.2)

The build_model function above is the model builder function that creates, compiles, and returns a neural network model.

The parameter to the build_model function ‘hp’ is passed internally by the Keras tuner. The argument ‘hp’ is an instance of the class HyperParameters.

Alternatively, you can define the hyper model by subclassing HyperModel class in the Keras tuner.

from keras_tuner import HyperModel  # older releases used `from kerastuner import HyperModel`

class ANNHyperModel(HyperModel):

    def build(self, hp):
        model = tf.keras.Sequential()

        # Tune the number of units in each of the three hidden Dense layers
        # Choose a value between 32 and 512 in steps of 32
        hp_units1 = hp.Int('units1', min_value=32, max_value=512, step=32)
        hp_units2 = hp.Int('units2', min_value=32, max_value=512, step=32)
        hp_units3 = hp.Int('units3', min_value=32, max_value=512, step=32)
        model.add(Dense(units=hp_units1, activation='relu'))
        model.add(tf.keras.layers.Dense(units=hp_units2, activation='relu'))
        model.add(tf.keras.layers.Dense(units=hp_units3, activation='relu'))
        model.add(Dense(1, kernel_initializer='normal', activation='linear'))

        # Tune the learning rate for the optimizer
        # Choose an optimal value from 0.01, 0.001, or 0.0001
        hp_learning_rate = hp.Choice('learning_rate', values=[1e-2, 1e-3, 1e-4])

        model.compile(
            optimizer=tf.keras.optimizers.Adam(learning_rate=hp_learning_rate),
            loss=msle,
            metrics=[msle]
        )
        return model

hypermodel = ANNHyperModel()

tuner = kt.Hyperband(
    hypermodel,
    objective='val_mean_squared_logarithmic_error',
    max_epochs=10,
    factor=3,
    directory='keras_tuner_dir',
    project_name='keras_tuner_demo2'
)

tuner.search(x_train_scaled, y_train, epochs=10, validation_split=0.2)

The build method must be defined when subclassing the HyperModel class. The parameter ‘hp’ of the build method is passed internally by Keras Tuner. Now that we have seen the two ways to define a hypermodel, let us see how the code works.

Working of Keras tuner

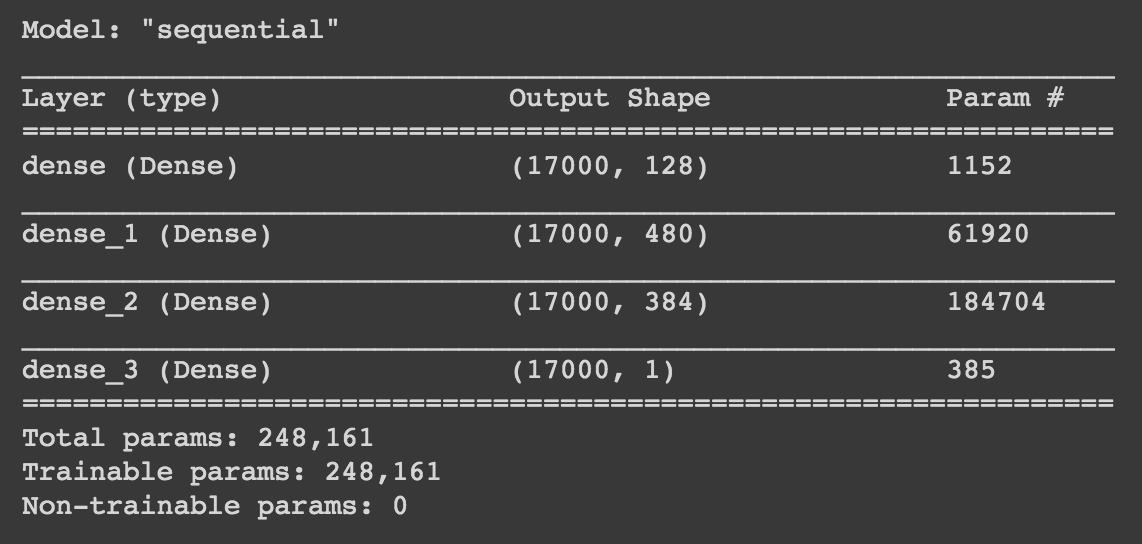

The model consists of four layers; the last one is the output layer with a linear activation function, since this is a regression problem.

The instance of class HyperParameters, ‘hp’, provides methods such as Int, Choice, Float, and Fixed. Each of these defines the search space for a hyper-parameter.

- The first argument of the method Int is the name of the hyper-parameter; in our case, it is ‘units1’. The min_value argument is the smallest value the hyper-parameter can take and the max_value argument is the largest. The step argument is the increment between successive candidate values, so with step=32 the candidates are 32, 64, ..., 512. The Int method is called for the first three layers of the neural network model with the names ‘units1’, ‘units2’, and ‘units3’.

- The first argument of the method Choice is again the name of the hyper-parameter. The values argument lists the options to choose from. Here the Choice method selects the learning rate for the Adam optimizer.

- After defining the hyper-parameters, we compile the model with the Adam optimizer, using mean squared logarithmic error as both the loss and the metric, and return that model.
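The size of the search space these calls define can be worked out in plain Python. The sketch below only mirrors the ranges used in the model builder (it does not call Keras Tuner) and relies on max_value being inclusive; the variable names are chosen for the example.

```python
# Candidate values for each Int hyper-parameter: 32, 64, ..., 512
units_choices = list(range(32, 512 + 1, 32))

# Candidate values for the Choice hyper-parameter
learning_rates = [1e-2, 1e-3, 1e-4]

# Three independent Int hyper-parameters and one Choice hyper-parameter
total_combinations = len(units_choices) ** 3 * len(learning_rates)

print("values per layer:", len(units_choices))
print("total combinations:", total_combinations)
```

With 16 candidate widths per layer and 3 learning rates, an exhaustive grid would need 16 × 16 × 16 × 3 = 12,288 training runs, which is why a budget-aware algorithm is used instead.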

Here we have selected the Hyperband algorithm to optimize the hyperparameters; the other tuners available are BayesianOptimization, RandomSearch, and SklearnTuner.

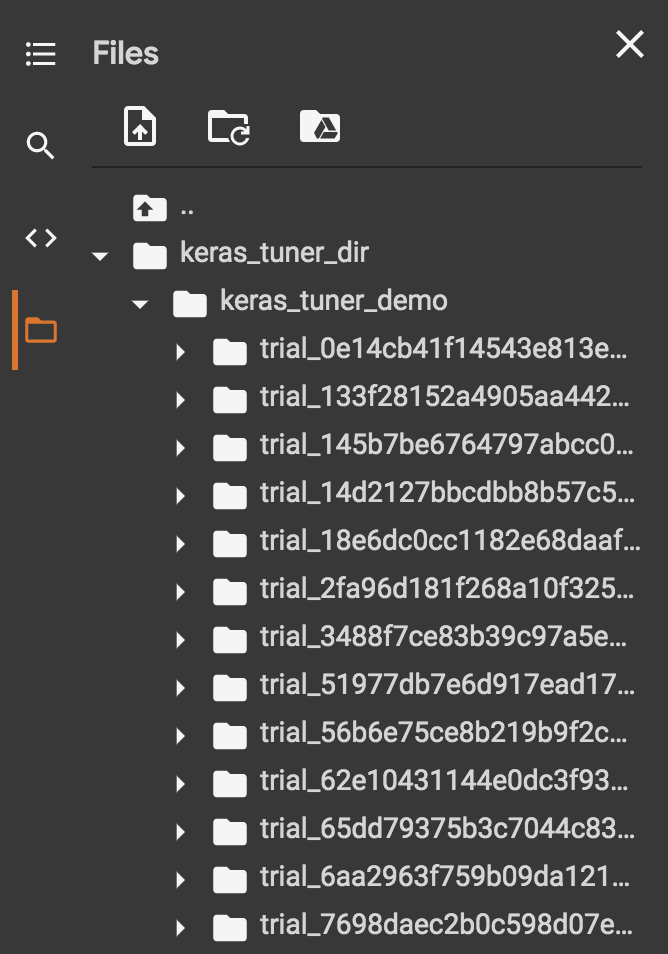

The first argument of the Hyperband tuner is the model builder function. The objective argument names the quantity to optimize; in our case, it is the mean squared logarithmic error on the validation data. The max_epochs argument denotes the maximum number of epochs to train a model. The results are saved in the directory ‘keras_tuner_dir’ under the project name ‘keras_tuner_demo’, like in the image shown below.

When the tuner’s search method is called, the Hyperband algorithm starts working and the results are stored in the tuner instance.
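Hyperband builds on the idea of successive halving: train many configurations for a few epochs, keep only the best fraction, and give the survivors more epochs. The sketch below is a simplified illustration of that idea and not Keras Tuner’s actual implementation; the function names, the toy loss, and the candidate values are all assumptions made for the example.

```python
def successive_halving(configs, loss_fn, min_epochs=1, max_epochs=10, factor=3):
    """Repeatedly score all surviving configs, then keep the best 1/factor."""
    survivors = list(configs)
    epochs = min_epochs
    history = []  # (epochs per config, number of configs) for each round
    while True:
        ranked = sorted(survivors, key=lambda cfg: loss_fn(cfg, epochs))
        history.append((epochs, len(ranked)))
        if len(ranked) == 1 or epochs >= max_epochs:
            return ranked[0], history
        # Keep the top 1/factor and give them a larger epoch budget
        survivors = ranked[: max(1, len(ranked) // factor)]
        epochs = min(max_epochs, epochs * factor)


def toy_loss(config, epochs):
    """Stand-in for training `config` for `epochs` epochs (illustrative)."""
    # Assumption: 0.5 is the ideal value and more epochs lower the loss.
    return abs(config - 0.5) + 1.0 / epochs


candidates = [i / 10 for i in range(1, 10)]  # 0.1, 0.2, ..., 0.9
best, history = successive_halving(candidates, toy_loss)
print("best config:", best)
print("rounds (epochs, configs):", history)
```

Most of the training budget goes to the few configurations that looked promising early, which is what makes this approach cheaper than training every configuration for the full number of epochs.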

The best hyper-parameters can be fetched using the get_best_hyperparameters method of the tuner instance, and the best model with those hyperparameters can be obtained using the get_best_models method.

for h_param in [f"units{i}" for i in range(1,4)] + ['learning_rate']:
    print(h_param, tuner.get_best_hyperparameters()[0].get(h_param))

# Output
# units1 128
# units2 480
# units3 384
# learning_rate 0.01

The best hyper-parameters found by the HyperBand algorithm are,

- 128 for units1 in the first layer,

- 480 for units2 in the second layer,

- 384 for units3 in the third layer,

- 0.01 for learning rate of Adam optimizer

Now select the best model which is saved in the tuner instance,

best_model = tuner.get_best_models()[0]
best_model.build(x_train_scaled.shape)
best_model.summary()

Image source: Executed in Google Colab by Author

As you can see, the first, second, and third layers consist of 128, 480, and 384 units respectively, which are the optimal hyperparameters found by Keras Tuner. Now train the model using the fit method.

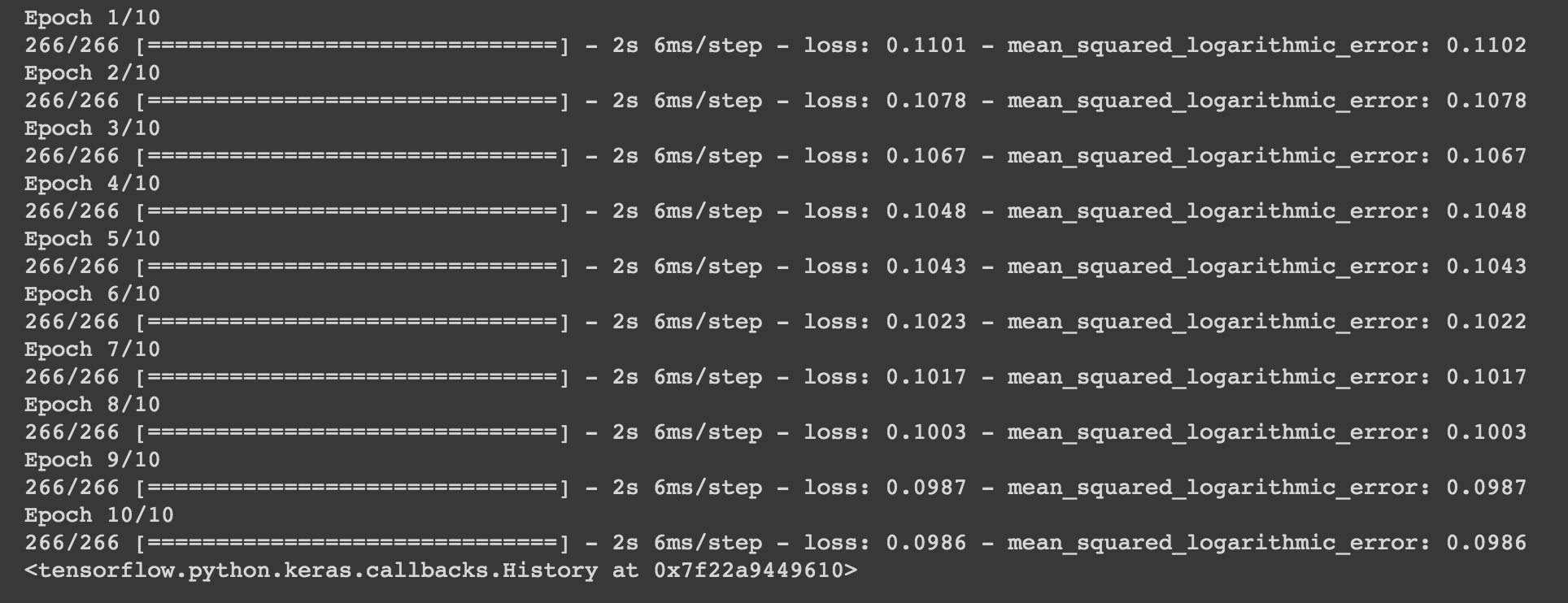

best_model.fit(
    x_train_scaled,
    y_train,
    epochs=10,
    batch_size=64
)

Now that we’ve trained a model with the best hyperparameters, we can predict with that trained model and measure the prediction error.

# mean squared logarithmic error
msle(y_test, best_model.predict(x_test_scaled)).numpy()

# Output
# 0.53408164
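For reference, the mean squared logarithmic error can be written out in plain Python as the mean of (log(1 + y_true) − log(1 + y_pred))². The function name `mean_squared_log_error` and the sample values below are chosen for illustration, and the sketch skips the clipping of very small values that the Keras implementation applies.

```python
import math


def mean_squared_log_error(y_true, y_pred):
    """Mean of the squared differences between log(1 + y) values."""
    squared_diffs = [
        (math.log1p(t) - math.log1p(p)) ** 2
        for t, p in zip(y_true, y_pred)
    ]
    return sum(squared_diffs) / len(squared_diffs)


# Illustrative house values and predictions, not taken from the dataset
y_true = [100000.0, 250000.0, 400000.0]
y_pred = [120000.0, 200000.0, 400000.0]
print(mean_squared_log_error(y_true, y_pred))
```

Because the error is computed on logarithms, it penalizes relative rather than absolute differences, which suits targets such as house prices that span a wide range.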

In this way, you can leverage Keras Tuner to tune your hyperparameters. You could also try out the other tuners available in Keras Tuner, such as Bayesian optimization, Sklearn tuner, and Random search. By trying these, you might end up with a solution that is far better than the hyperparameters found above.



The media shown in this article are not owned by Analytics Vidhya and are used at the Author’s discretion.