Introduction to FastAI.jl

The FastAI.jl library is similar to the fastai library in Python, and it's the best way to experiment with your deep learning projects in Julia. The library lets you use state-of-the-art models that you can modify, train, and evaluate with a few lines of code. FastAI.jl provides a complete ecosystem for deep learning, including computer vision, natural language processing, and tabular data, and more submodules are added every month. See FastAI (fluxml.ai).

In this project, we are going to use the FastAI.jl library to train an image classifier on Imagenette, a small subset of the ImageNet dataset. The imagenette2-160 dataset comes from the fastai dataset repository (https://course.fast.ai/datasets) and contains smaller-size images of ten classes of everyday things. The ResNet-18 model architecture is described in the paper Deep Residual Learning for Image Recognition. We won't go deep into the dataset or how the model architecture works; instead, we will focus on how FastAI.jl has made deep learning easy.

Image 1

Getting Started with FastAI.jl in Julia

For more detail, visit the Quickstart (fluxml.ai), as the code used in this project is derived from the FastAI.jl documentation.

Local Setup

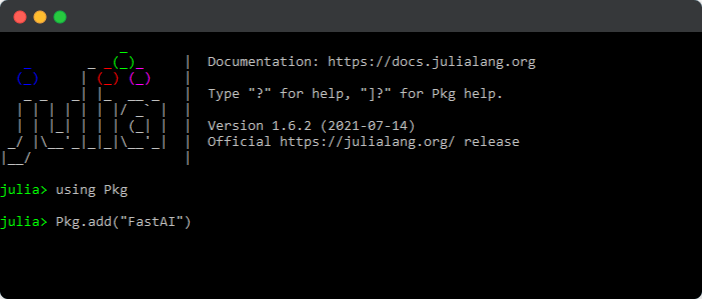

If you have Julia installed on your system, type:

using Pkg

Pkg.add("FastAI")

Pkg.add("CairoMakie")

As shown in the image below 👇

Deepnote

For the Deepnote environment, you have to create a Dockerfile and add:

FROM gcr.io/deepnote-200602/templates/deepnote

RUN wget https://julialang-s3.julialang.org/bin/linux/x64/1.6/julia-1.6.2-linux-x86_64.tar.gz && \
    tar -xvzf julia-1.6.2-linux-x86_64.tar.gz && \
    sudo mv julia-1.6.2 /usr/lib/ && \
    sudo ln -s /usr/lib/julia-1.6.2/bin/julia /usr/bin/julia && \
    rm julia-1.6.2-linux-x86_64.tar.gz && \
    julia -e "using Pkg;pkg\"add IJulia LinearAlgebra SparseArrays Images MAT\""

ENV DEFAULT_KERNEL_NAME "julia-1.6.2"

Google Colab

For Google Colab you can follow my repo on GitHub or just create a Julia environment by adding an additional cell shown below. Installing Julia packages may take up to 15 minutes.

- Change Runtime to GPU for faster results.

- Execute the code below.

- Reload this page by pressing F5.

%%shell

set -e

#---------------------------------------------------#

JULIA_VERSION="1.6.2"

export JULIA_PACKAGES="CUDA IJulia CairoMakie"

JULIA_NUM_THREADS="2"

#---------------------------------------------------#

if [ -n "$COLAB_GPU" ] && [ -z `which julia` ]; then

# Install Julia

JULIA_VER=`cut -d '.' -f -2 <<< "$JULIA_VERSION"`

echo "Installing Julia $JULIA_VERSION on the current Colab Runtime..."

BASE_URL="https://julialang-s3.julialang.org/bin/linux/x64"

URL="$BASE_URL/$JULIA_VER/julia-$JULIA_VERSION-linux-x86_64.tar.gz"

wget -nv $URL -O /tmp/julia.tar.gz # -nv means "not verbose"

tar -x -f /tmp/julia.tar.gz -C /usr/local --strip-components 1

rm /tmp/julia.tar.gz

# Install Packages

echo "Installing Julia packages, this may take up to 15 minutes. "

julia -e 'using Pkg; Pkg.add(["CUDA", "IJulia", "CairoMakie"]); Pkg.add(Pkg.PackageSpec(url="https://github.com/FluxML/FastAI.jl")); Pkg.precompile()' &> /dev/null

# Install kernel and rename it to "julia"

echo "Installing IJulia kernel..."

julia -e 'using IJulia; IJulia.installkernel("julia", env=Dict(

"JULIA_NUM_THREADS"=>"'"$JULIA_NUM_THREADS"'"))'

KERNEL_DIR=`julia -e "using IJulia; print(IJulia.kerneldir())"`

KERNEL_NAME=`ls -d "$KERNEL_DIR"/julia*`

mv -f $KERNEL_NAME "$KERNEL_DIR"/julia

echo ''

echo "Success! Please reload this page and jump to the next section."

fi

Implementation of Image Classification Using FastAI.jl

Check the Julia version to make sure yours is similar to mine.

versioninfo()

Julia Version 1.6.2
Commit 1b93d53fc4 (2021-07-14 15:36 UTC)
Platform Info:
  OS: Linux (x86_64-pc-linux-gnu)
  CPU: Intel(R) Xeon(R) CPU @ 2.30GHz
  WORD_SIZE: 64
  LIBM: libopenlibm
  LLVM: libLLVM-11.0.1 (ORCJIT, haswell)
Environment:
  JULIA_NUM_THREADS = 2

Import the libraries

using FastAI

import CairoMakie

Download the Dataset

We use the `loaddataset` function to download the dataset from the fastai dataset repository. As you can see, a single line of code downloads the dataset and gives you access to every image location along with its label.

data, blocks = loaddataset("imagenette2-160", (Image, Label))

┌ Info: Downloading
│   source = https://s3.amazonaws.com/fast-ai-imageclas/imagenette2-160.tgz
│   dest = /root/.julia/datadeps/fastai-imagenette2-160/imagenette2-160.tgz
│   progress = 1.0
│   time_taken = 2.69 s
│   time_remaining = 0.0 s
│   average_speed = 35.138 MiB/s
│   downloaded = 94.417 MiB
│   remaining = 0 bytes
│   total = 94.417 MiB
└ @ HTTP /root/.julia/packages/HTTP/5e2VH/src/download.jl:128

`data` contains the locations of the images, and `blocks` describes the structure of the data and the image labels.

data

(mapobs(loadfile, ["/root/.julia/datadeps/fastai-imagenette2-160/imagenette2-160/train/n01440764/I…]), mapobs(parentname, ["/root/.julia/datadeps/fastai-imagenette2-160/imagenette2-160/train/n01440764/I…]))

blocks

(Image{2}(), Label{String}(["n01440764", "n02102040", "n02979186", "n03000684", "n03028079", "n03394916", "n03417042", "n03425413", "n03445777", "n03888257"]))
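The `mapobs(parentname, …)` entry in `data` suggests that each label is simply the name of the folder that holds the image. Here is a minimal sketch of that idea in plain Julia. It is not FastAI.jl's implementation: `parentname_sketch` is a hypothetical helper, and the file names are made up for illustration.

```julia
# Hypothetical example paths; only the class folder names come from the
# `blocks` output above, the file names are invented.
paths = [
    "imagenette2-160/train/n01440764/example_a.JPEG",
    "imagenette2-160/train/n02102040/example_b.JPEG",
]

# Hypothetical helper: the label is the name of the parent folder.
parentname_sketch(path) = basename(dirname(path))

labels = map(parentname_sketch, paths)

for (path, label) in zip(paths, labels)
    println(label, " <- ", path)
end
```

Because the label lives in the folder name, nothing has to be read from the image file itself to know its class.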

Exploring Image for Image Classification Using FastAI.jl

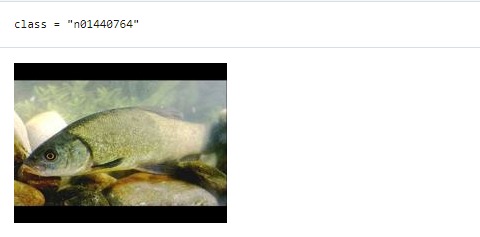

By using `getobs`, we can load a single sample from our data and check its class. In our case, the class name is n01440764. You could rename the class to "fish", but for simplicity we will keep the unique ID for each class. We can also see the image in sample 500.

image, class = sample = getobs(data, 500)

@show class

image

OR

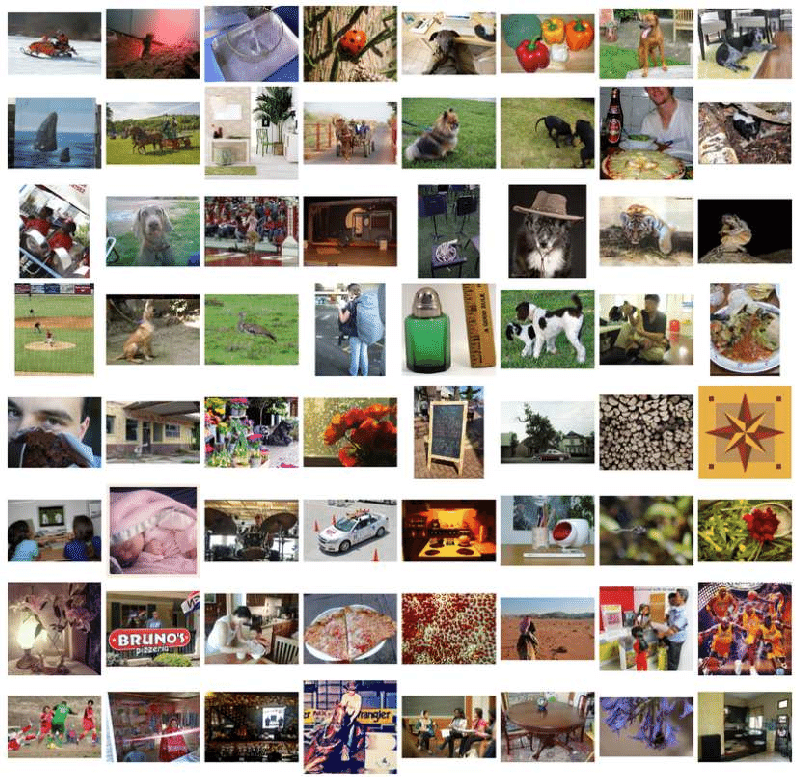

We can check multiple photos with labels by using `getobs` and `plotsamples`.

idxs = rand(1:nobs(data), 9)

samples = [getobs(data, i) for i in idxs]

# `method` is created in the next section; define it before running this line.
plotsamples(method, samples)

Image Classification Function

To load our image data, we first need to create a learning method that resizes images to 128×128 and preprocesses them with random augmentation (shear, stretch, rotate, flip). For labels, it uses one-hot encoding to convert string labels into numeric vectors.

method = BlockMethod(blocks, (ProjectiveTransforms((128, 128)), ImagePreprocessing(), OneHot()))

BlockMethod(Image{2} -> Label{String})

OR

You can just use the ImageClassificationSingle function to reproduce equivalent results.

method = ImageClassificationSingle(blocks)
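To make the one-hot encoding step concrete, here is a small sketch in plain Julia. It only illustrates the idea and is not how FastAI.jl's `OneHot` is implemented: `onehot_sketch` is a hypothetical helper, and the class list is just the first three IDs from the `blocks` output.

```julia
# First three class IDs, copied from the `blocks` output.
classes = ["n01440764", "n02102040", "n02979186"]

# Hypothetical helper: return a vector with 1 at the position of `label`
# in `classes` and 0 everywhere else.
function onehot_sketch(label::String, classes::Vector{String})
    idx = findfirst(==(label), classes)
    idx === nothing && throw(ArgumentError("unknown label: $label"))
    encoded = zeros(Float32, length(classes))
    encoded[idx] = 1f0
    return encoded
end

encoded = onehot_sketch("n02102040", classes)
println(encoded)
```

Each label becomes a vector as long as the class list, with a single non-zero entry marking the class.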

Building model for Image Classification Using FastAI.jl

- First, we need a data loader that loads the image locations and applies the transformation method. This converts images and labels into numeric data.

- `methodmodel` takes the method and the ResNet architecture (here `Models.xresnet18()`) and builds a model for training.

- We can create a loss function by passing the method to `methodlossfn`. By default, the loss function is `CrossEntropy`.

- By using `Learner`, we combine the model, the data loaders, the optimizer (ADAM), the loss function, and the metric, which is accuracy in our case.

dls = methoddataloaders(data, method)

model = methodmodel(method, Models.xresnet18())

lossfn = methodlossfn(method)

learner = Learner(model, dls, ADAM(), lossfn, ToGPU(), Metrics(accuracy))

OR

We can simply do all the above steps by using a single line of code as shown below.

learner = methodlearner(method, data, Models.xresnet18(), ToGPU(), Metrics(accuracy))
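If you are wondering what the cross-entropy loss and the accuracy metric actually compute, the sketch below works through both in plain Julia. It is an illustration under simplifying assumptions, not the code FastAI.jl or Flux runs: the helper names ending in `_sketch` are hypothetical, and the scores and targets are made-up numbers.

```julia
# Hypothetical helper: softmax turns raw model scores into probabilities.
function softmax_sketch(scores::Vector{Float64})
    shifted = exp.(scores .- maximum(scores))  # subtract the max for numerical stability
    return shifted ./ sum(shifted)
end

# Cross-entropy between predicted probabilities and a one-hot target.
crossentropy_sketch(probs, target) = -sum(target .* log.(probs))

# Accuracy: fraction of samples whose highest-scoring class matches the target.
function accuracy_sketch(all_scores, all_targets)
    hits = count(argmax(s) == argmax(t) for (s, t) in zip(all_scores, all_targets))
    return hits / length(all_scores)
end

# Made-up scores for three samples and three classes, with one-hot targets.
scores  = [[2.0, 0.5, 0.1], [0.2, 0.3, 3.0], [1.5, 1.6, 0.0]]
targets = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0], [1.0, 0.0, 0.0]]

losses = [crossentropy_sketch(softmax_sketch(s), t) for (s, t) in zip(scores, targets)]
println("mean loss = ", sum(losses) / length(losses))
println("accuracy  = ", accuracy_sketch(scores, targets))
```

The third sample is scored slightly higher for the wrong class, so it counts as a miss for accuracy while still contributing a moderate loss.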

Training and Evaluation of Image Classification Model

We will train our model for 10 epochs with a learning rate of 0.002. As we can see, the training and validation loss decreased with every iteration, but after the 5th epoch the validation loss became steady.

Final Metrics:

Training = Loss: 0.07313 │ Accuracy: 98.38%

Validation = Loss: 0.59254 │ Accuracy: 83.27%

This is not bad, as we haven't cleaned our data or performed hyperparameter tuning.

fitonecycle!(learner, 10, 0.002)

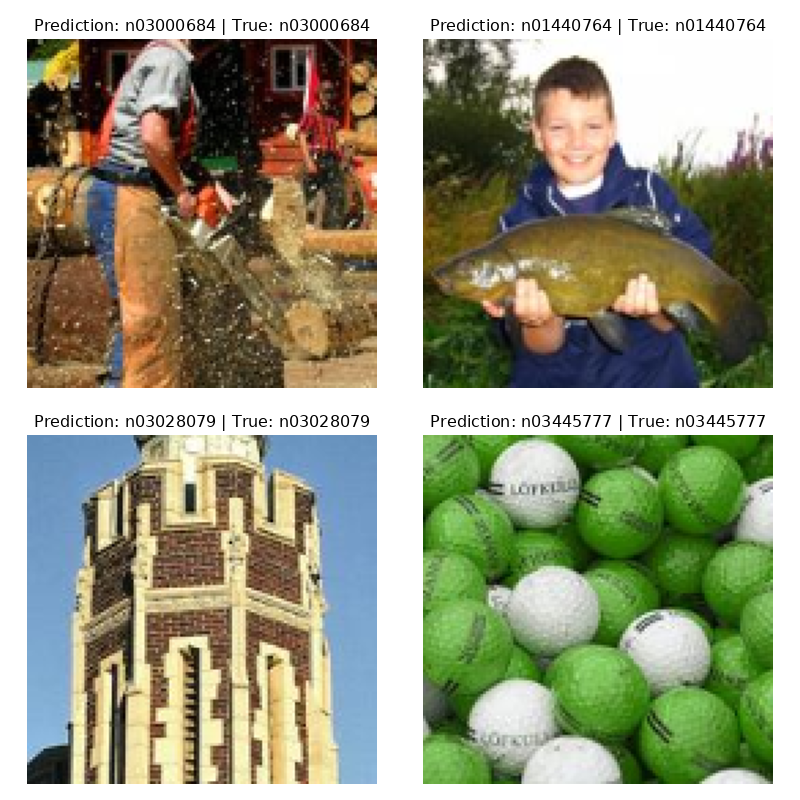
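`fitonecycle!` trains with a one-cycle learning rate schedule, in which the learning rate climbs to a peak and then decays. The sketch below shows a simplified version of such a schedule for our peak rate of 0.002. The exact shape is an assumption made for illustration (cosine warm-up over the first 25% of training, starting at the peak divided by 25, then cosine decay to zero), and `onecycle_lr_sketch` is a hypothetical helper, not part of FastAI.jl.

```julia
# Hypothetical helper: learning rate at training progress `t` in [0, 1].
# Assumed shape: cosine warm-up from lrmax / div to lrmax over the first
# `pct_start` of training, then cosine decay from lrmax towards zero.
function onecycle_lr_sketch(t; lrmax = 0.002, div = 25, pct_start = 0.25)
    lrmin = lrmax / div
    if t < pct_start
        p = t / pct_start
        return lrmin + (lrmax - lrmin) * (1 - cos(pi * p)) / 2
    else
        p = (t - pct_start) / (1 - pct_start)
        return lrmax * (1 + cos(pi * p)) / 2
    end
end

# Print the learning rate at the start of each epoch and at the very end.
for epoch in 0:10
    t = epoch / 10
    println("epoch ", epoch, ": lr = ", round(onecycle_lr_sketch(t), digits = 6))
end
```

The short warm-up lets training start gently, and the long decay lets the model settle towards the end of the run.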

Evaluating the Model Predictions

As we can see, all four photos were predicted correctly. You can view the predictions by using the `plotpredictions` function.

plotpredictions(method, learner)

Conclusion

Julia is the future of data science, and if you want to be future-proof, you need to learn the basics. With the introduction of FastAI.jl, things have gotten quite easy for Julia users, as they only have to write a few lines of code to build state-of-the-art machine learning models. FastAI.jl is built upon Flux.jl, which is written entirely in Julia, unlike Python libraries, which integrate other programming languages such as C and JavaScript. Even the CUDA support used in Julia is built in the Julia language, not C, which gives Julia an advantage in processing and security. Julia is better than Python in many ways, as I have already discussed in my previous article, Julia for Data Science: A New Age Data Science – Analytics Vidhya.

In this project, we built an image classifier on the Imagenette data with a few lines of code, and our model performed above our expectations. We also discovered different ways to do similar tasks in image classification and saw how powerful the FastAI.jl library is for exploring images and predicting their classes. Overall, I enjoyed using this library, and there is much more to it that I didn't discuss in this article.

I hope you experiment with this library and showcase amazing projects that are production-ready.

Source Code

The source code is available at GitHub and Deepnote.

You can follow me on LinkedIn and Polywork where I post amazing articles on data science and machine learning.

Image Sources

Image 1 - https://www.researchgate.net/publication/314646236_Global-Attributes_Assisted_Outdoor_Scene_Geometric_Labeling

The media shown in this article are not owned by Analytics Vidhya and are used at the Author’s discretion.