Introduction

A major goal of Artificial Intelligence in recent years has been creating multimodal systems, i.e, systems that can learn concepts in multiple domains. We have seen one such system CLIP in the previous `blog post.

In this article, we will look at possibly one of the biggest breakthroughs in Computer Vision in recent years: the DALL-E named after the artist Salvador Dalí and Pixar’s WALL-E.

DALL-E is a neural network that can successfully turn text into an appropriate image for a wide range of concepts expressible in natural language. We have already seen the implementation and success of the GPT-3 which was a major breakthrough in NLP. A similar approach has been used for this network. You could almost call this a GPT-3 for images.

DALL-E has 12 billion parameters trained to generate images from textual descriptions. Similar to CLIP, it also uses text-image pairs to form its dataset. We have seen in GPT-3 which is a 175 billion parameter network capable of various text generation tasks. DALL-E has now shown to extend that knowledge to manipulation of vision tasks.

Simply put, you could pass any piece of text to the DALL-E model, real or imaginary and it will generate the images for us. We will take a look at some examples later on. DALL-E really does provides a lot of customization with the images it produces.

Be prepared to have your mind blown!!

Overview of The Algorithm

As of date, the real work behind DALL-E has not been published,i.e, the team at OpenAI has not released the research paper and code behind it. But I’ll walk you through what we know now. Also, Phil Wang (aka Lucidrains) has developed a replication of DALL-E which you can view here!

Taking its inspiration from GPT-3, DALL-E is also a transformer language model. Transformer models have been the state of the art networks for all things NLP in recent years and are now seeing much use in vision tasks. We have already seen a vision transformer being used in the CLIP network.

One thing to note is that DALL-E is a Decoder-only transformer, which means all the images and texts are passed together directly as a single stream of tokens into the decoder.

What we know about DALL-E’s architecture is that it uses a similar approach to a VQVAE. Let’s understand how that works!

The above image is the working of a VQVAE, in which we can see that an image of a dog is passed into an encoder. This encoder creates a “latent space” which is simply a space of compressed image data in which similar data points are closer together.

Now the VQVAE has a batch of vectors which we can call vocabulary to simplify. The encoder must output a vector from its latent space which is present in our vocabulary. Meaning we provide the encoder with a set of choices. This vector is fed into the decoder which reconstructs the image of the dog.

So DALL-E uses a pre-trained VAE, meaning the encoder, decoder, and vocabulary are trained together. Let’s take an example to see DALL-E in action:-

Now given a text prompt such as this, DALL-E is able to generate images. What DALL-E can do compared to other text to image synthesis networks is not only generate an image from scratch but also to regenerate any rectangular region of an existing image.

The team at OpenAI has mentioned that the network generates 512 images as output. Their latest network CLIP is used as a reranking algorithm to choose the best 32 for the given examples. What this means is that the CLIP uses its similarity search capabilities to select the best image-test pairs. We have seen how good CLIP was at that in the previous blog post.

WHAT IS DALL-E GOOD AND BAD AT?

DALL-E has shown a lot of capabilities with what it can do. Let’s analyze them!

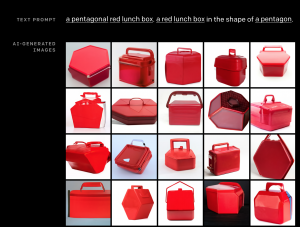

We can see that DALL-E can create objects in polygonal shapes that may or may not occur in real life. DALL-E does not miss the mark on creating the correct object but does have a lower success rate for some shapes as seen below. We can see some images of the red lunch box are hexagons even though the text requests for pentagonal boxes.

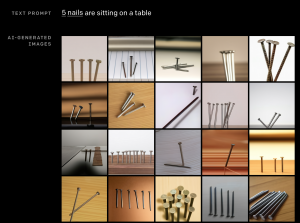

Counting is a major issue with DALL-E as of now. DALL-E is able to generate multiple objects but is unable to reliably count past two. While DALL·E does offer some level of controllability over the attributes and number of objects, the success rate can depend on how the caption is phrased. If the text requests nouns for which there are multiple meanings, such as “glasses,” “chips,” and “cups” it sometimes draws both interpretations, depending on the plural form that is used.

DALL-E is robust to changes in the lighting, shadows, and environment based on the time of day or season. This enables it to have some capabilities of a 3D rendering engine. A major difference is that, while 3D engines require the inputs to be completely detailed, DALL-E can fill those blanks based on the caption provided. In fact, DALL-E is really good at Styles!

A few other capabilities of the DALL-E are:-

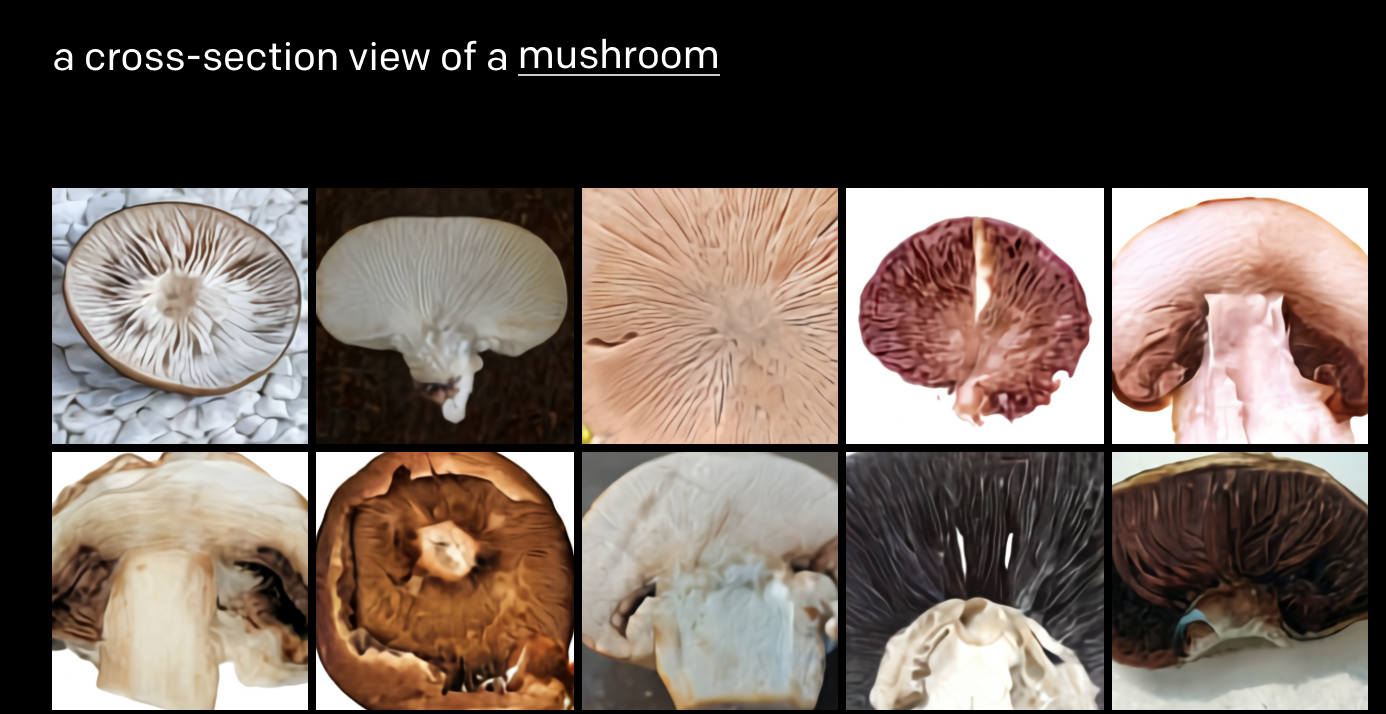

- Visualizing internal and external capabilities, i.e, it is able to generate a cross-section of a mushroom for example. It also the ability to draw the fine-grained external details of several different kinds of objects as we see in the image below. These details are only apparent when the object is viewed up close.

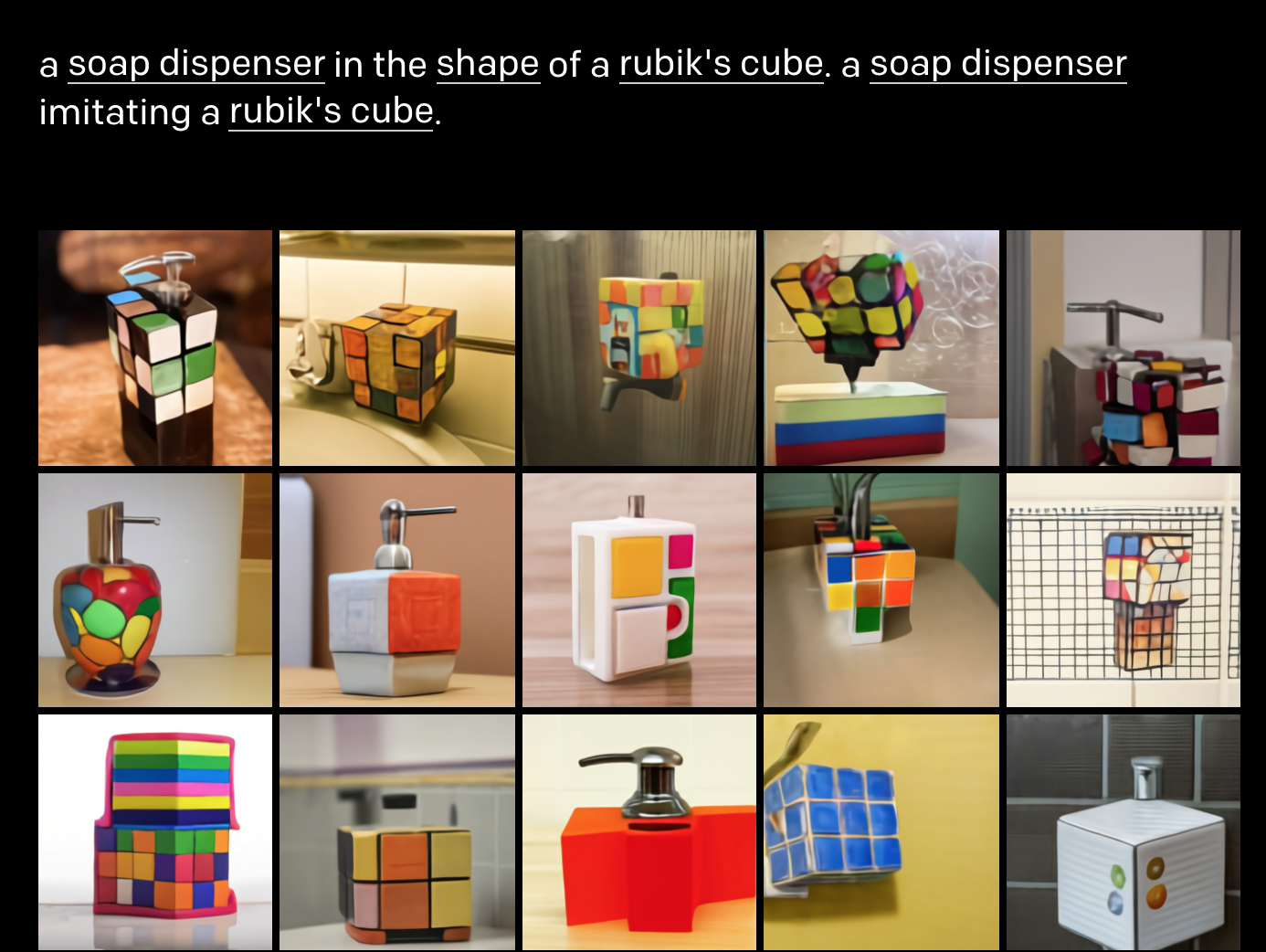

- Combining unrelated elements, i.e DALL-E is able to describe both real and imaginary objects. It has the ability to take inspiration from an unrelated idea while respecting the form of the thing being designed, ideally producing an object that appears to be practically functional. It appears that when the text contains the phrases “in the shape of,” “in the form of,” and “in the style of” gives it the ability to do this.

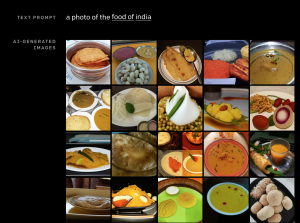

- Geographic and Temporal knowledge, i.e, is sometimes capable of rendering semblances of certain locations and understanding simple geographical facts, such as country flags, cuisines, and local wildlife. Keep in mind most of these locations do not exist!

It also has learned about basic stereotypical trends in design and technology over the decades.

ENDING NOTES

Can you believe that AlexNet was introduced 8 years ago and in such a short time we have had such revolutionary breakthroughs in AI?

With the recent breakthroughs in NLP with GPT-3, CLIP and DALL-E both introduce a whole new improvement in performance and generalization like never before. DALL-E is a prime example of extending NLP’s prowess to the visual domain.

The applications of such models could only have been thought of theoretically. We could see conversions of books into visual representations, have applications that generate photorealistic movies from a script, or new video games from a description. It’s only a matter of years at this point. One could also imagine animating or generating imagery of dreams using this technology.

From a social perspective, the team at OpenAI have plans to analyze how models like DALL-E could, in fact, relate to societal issues, given the long term ethical challenges of this technology. I truly believe the application of vision and language together is most definitely the future of deep learning.

Let us know what you think about the CLIP and DALL-E models in the comment sections below!