Introduction

Training a deep learning model from scratch can be tedious. You have to find a good weight initialization, tune the learning rate and the other hyperparameters, and pick the architecture that best suits your data. Add a shortage of quality training data and the heavy computational cost of training, and you get a three-punch combo that can knock you out in the first round. But fear not: deep learning libraries such as Fast.ai serve as cheat codes that will put you back in the race in no time.

Table of Contents

- Overview of Fast.ai

- Why should we use Fast.ai?

- Image Databunches

- LR Find

- Fit One Cycle

- Case Study: Emergency vs Non-Emergency Vehicle Classification

Overview of Fast.ai

Fast.ai is a popular deep learning framework built on top of PyTorch. It aims to make building state-of-the-art models quick and easy, in just a few lines of code. It greatly simplifies the training process without compromising on the speed, flexibility, or performance of the trained model. Fast.ai supports state-of-the-art techniques and models in both computer vision and NLP.

Why should we use Fast.ai?

Along with high productivity and ease of use, Fast.ai gives us the flexibility to customize its high-level API without having to touch the lower-level code. It is also packed with some really cool features, which makes it one of the favorite libraries of beginners getting started in deep learning.

Image Databunches

Image databunches bring together our training, validation, and test data and prepare it for the model by applying all the required transformations and normalizing the images.
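To make the normalization step concrete, here is a minimal sketch of per-channel normalization in plain Python. The helper `normalize_pixel` is a hypothetical name used only for illustration and is not part of Fast.ai. The mean and standard deviation values are the commonly used ImageNet statistics, which we assume are the ones applied when working with an ImageNet pre-trained model.

```python
# Commonly used ImageNet per-channel statistics (RGB, pixel values scaled to [0, 1]).
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)

def normalize_pixel(rgb, mean=IMAGENET_MEAN, std=IMAGENET_STD):
    """Hypothetical helper: subtract the channel mean and divide by the channel std."""
    return tuple((c - m) / s for c, m, s in zip(rgb, mean, std))

# A pixel equal to the dataset mean maps to zero in every channel.
centered = normalize_pixel(IMAGENET_MEAN)

# A pure white pixel ends up a couple of standard deviations above zero.
white = normalize_pixel((1.0, 1.0, 1.0))
```

A databunch applies the same idea to whole batches of image tensors, so every batch reaches the model on the scale the pre-trained weights expect.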

LR Find

The learning rate controls how quickly the model learns and adapts to the problem. A low learning rate slows the convergence of training, while a high learning rate can make the loss diverge. A good learning rate is therefore vital for satisfactory performance, yet finding one can feel like looking for a needle in a haystack. Fast.ai’s lr_find() is the knight in shining armor that saves us from the distress of hunting for good learning rates.

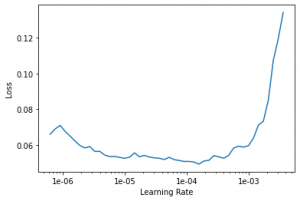

recorder.plot()

lr_find() starts by training one mini-batch with a very low LR and recording the loss. It then trains the next mini-batch with a slightly higher LR than the previous one, and keeps going until it reaches an LR at which the loss diverges. We can then call recorder.plot() to plot LR against loss, which makes choosing a good LR much easier. The LR is chosen where the loss curve has its steepest downward slope, not where the loss is lowest.
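The procedure described above can be sketched in plain Python. This is a toy illustration under stated assumptions, not Fast.ai's implementation: the "model" is a single weight with a quadratic loss, every gradient step stands in for one mini-batch, and the names `lr_range_test` and `steepest_lr` as well as the stopping rule (loss more than four times the best loss seen) are choices made for this example.

```python
import math

def lr_range_test(loss_fn, grad_fn, w0, lr_start=1e-5, lr_end=10.0, num_steps=100):
    """Toy LR range test: one gradient step per 'mini-batch', raising the LR each step."""
    growth = (lr_end / lr_start) ** (1.0 / (num_steps - 1))  # constant multiplicative increase
    w, lr = w0, lr_start
    lrs, losses = [], []
    best = float("inf")
    for _ in range(num_steps):
        loss = loss_fn(w)
        # Stop once the loss blows up relative to the best loss seen so far.
        if not math.isfinite(loss) or loss > 4 * best:
            break
        lrs.append(lr)
        losses.append(loss)
        best = min(best, loss)
        w -= lr * grad_fn(w)  # train one step at the current LR
        lr *= growth          # slightly higher LR for the next step
    return lrs, losses

def steepest_lr(lrs, losses):
    """Return the LR at which the loss falls fastest per unit of log10(LR)."""
    slopes = [
        (losses[i + 1] - losses[i]) / (math.log10(lrs[i + 1]) - math.log10(lrs[i]))
        for i in range(len(lrs) - 1)
    ]
    steepest = min(range(len(slopes)), key=slopes.__getitem__)
    return lrs[steepest]

# Hypothetical stand-in for a model: a quadratic loss with its minimum at w = 3.
def loss_fn(w):
    return (w - 3.0) ** 2

def grad_fn(w):
    return 2.0 * (w - 3.0)

lrs, losses = lr_range_test(loss_fn, grad_fn, w0=0.0)
suggested = steepest_lr(lrs, losses)
```

Plotting `losses` against `lrs` on a logarithmic x-axis gives the same kind of curve that recorder.plot() draws, and `suggested` marks the point of steepest descent on it.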

Fit One Cycle

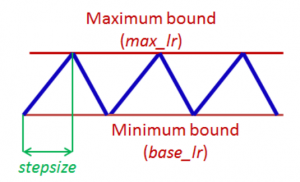

The fit_one_cycle method builds on the concept of cyclical learning rates (CLR). Instead of a fixed or exponentially decreasing LR, we use a learning rate that moves back and forth between a minimum and a maximum bound.

Cyclical learning rates. Credit: Leslie N. Smith, "Cyclical Learning Rates for Training Neural Networks", https://arxiv.org/pdf/1506.01186.pdf

Each cycle in CLR consists of two steps: in the first, the LR increases from the minimum bound to the maximum bound, and in the second, it decreases from the maximum back to the minimum. The optimal learning rate is assumed to lie somewhere between the two chosen bounds. The step size is the number of iterations used to increase or decrease the LR in each step.
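As a minimal sketch of the triangular schedule described above, the function below returns the LR for a given iteration. The function name `triangular_lr` and the example bounds are assumptions made for illustration, and the sketch follows the description in Smith's paper rather than any particular library's code.

```python
def triangular_lr(iteration, min_lr, max_lr, step_size):
    """LR at a given iteration of a triangular cyclical schedule."""
    cycle_len = 2 * step_size
    pos = iteration % cycle_len  # position inside the current cycle
    if pos <= step_size:
        frac = pos / step_size                # first step: climb from min_lr to max_lr
    else:
        frac = (cycle_len - pos) / step_size  # second step: descend back to min_lr
    return min_lr + (max_lr - min_lr) * frac

# One full cycle with step_size=4: four iterations up, four iterations down.
schedule = [triangular_lr(i, min_lr=1e-5, max_lr=1e-3, step_size=4) for i in range(9)]
```

The schedule starts at the minimum bound, peaks at iteration 4, and returns to the minimum at iteration 8, where the next cycle would begin.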

We train the model for a chosen number of cycles, and within each cycle the LR oscillates between the chosen minimum and maximum. Periodically raising the LR in this way helps the model escape saddle points instead of getting stuck in them.

The fit_one_cycle approach also applies LR annealing, where a reduced LR is used for the last few iterations. The LR for those final iterations is usually taken down to about one-hundredth of the chosen minimum LR. This helps avoid overshooting the optimum as we get closer to it.
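A rough sketch of a single cycle followed by an annealing tail is shown below. It assumes linear ramps and a linear decay to one-hundredth of the minimum LR, as described in the text; Fast.ai's own scheduler differs in its details, and the name `one_cycle_lr` and the `anneal_iters` parameter are hypothetical choices for this example.

```python
def one_cycle_lr(iteration, total_iters, min_lr, max_lr, anneal_iters):
    """Linear ramp up and down, then a short tail decaying to min_lr / 100."""
    cycle_iters = total_iters - anneal_iters
    half = cycle_iters / 2
    if iteration < half:
        # First half of the cycle: climb from min_lr to max_lr.
        return min_lr + (max_lr - min_lr) * iteration / half
    if iteration < cycle_iters:
        # Second half of the cycle: descend from max_lr back towards min_lr.
        return max_lr - (max_lr - min_lr) * (iteration - half) / half
    # Annealing tail: decay linearly from min_lr down to min_lr / 100.
    t = (iteration - cycle_iters + 1) / anneal_iters
    return min_lr + (min_lr / 100 - min_lr) * t

# 100 iterations in total, the last 10 of which are the annealing tail.
lrs = [one_cycle_lr(i, 100, min_lr=1e-4, max_lr=1e-2, anneal_iters=10) for i in range(100)]
```

Here the LR peaks in the middle of the cycle and finishes training well below the minimum bound, which is the behavior the annealing step is meant to provide.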

Case Study: Emergency vs Non-Emergency Vehicle Classification

Let us try to solve the problem of emergency vs non-emergency vehicle classification with Fast.ai, using a ResNet50 model pre-trained on the ImageNet dataset.

Importing Modules

Data Augmentation Using ImageDataBunch

Model Training

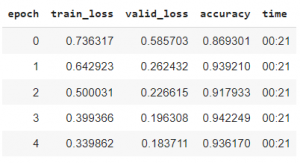

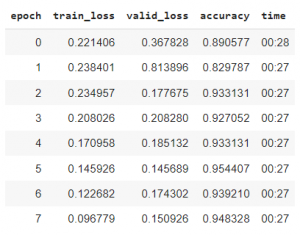

Initially, let us train our model with fit_one_cycle for 5 epochs. This gives us an idea of how the model performs.

Model Training using fit_one_cycle()

Here, the validation loss is much lower than the training loss. This suggests that our model is underfitting and is still far from where we need it to be.

Unfreeze The Layers

Let’s unfreeze the layers of the pre-trained model so that it can learn features specific to our dataset. We then run fit_one_cycle again to see how the model performs now.

Unfreeze the layers and Train the model

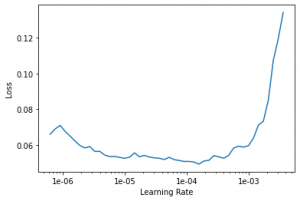

Learning Rate Finder

Using lr_find and recorder.plot, we can get a clear idea of which LR would best suit our model. We use the learning rate vs loss plot to choose the LR.

Learning Rate against Loss Plot

You can see that the loss rises steadily once the learning rate passes 1e-4. Choosing a learning rate of 1e-5 for the initial layers and 1e-4 for the later layers is therefore a sensible choice.

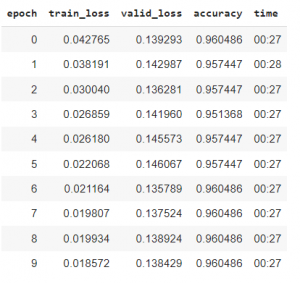

We fit our model again and train it with the chosen learning rates. The model is then frozen and exported for later use.

Train and Freeze the Model

The slice passed to fit_one_cycle() implements discriminative learning rates. It tells the model to train the initial layers with a learning rate of 1e-5, the final layers with a learning rate of 1e-4, and the layers in between with values ranging between those two.
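To illustrate how two endpoint rates can be spread across layer groups, here is a small sketch. It assumes the intermediate rates are spaced geometrically (evenly on a log scale) and that the model is split into three layer groups; both are assumptions for this example, and `spread_lrs` is a hypothetical helper rather than a Fast.ai function.

```python
def spread_lrs(start_lr, end_lr, n_groups):
    """Geometrically spaced learning rates, one per layer group (assumed spacing)."""
    if n_groups == 1:
        return [end_lr]
    ratio = (end_lr / start_lr) ** (1.0 / (n_groups - 1))
    return [start_lr * ratio ** i for i in range(n_groups)]

# Earliest layers get the smallest rate, the final layers the largest.
group_lrs = spread_lrs(1e-5, 1e-4, n_groups=3)
# Roughly 1e-5, 3.16e-5 and 1e-4 for the three groups.
```

The earliest layers, which hold the most general pre-trained features, are updated gently, while the layers closest to the output adapt faster to the new dataset.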

Prediction

With the model training done, all that is left is to make predictions on our test dataset. Let us now load the test data and the ResNet50 model we exported earlier, and use it to predict the test data.

Endnotes

Voila! We have now predicted our test data without spending long hours scraping for a larger training dataset or designing and training a deep learning model from scratch, and without completely exhausting our computational resources. In conclusion, it is definitely an understatement to say that Fast.ai is a blessing for novices who have just entered the incredible world of deep learning.
