Overview

- Hugging Face has released Transformers v4.3.0 and it introduces the first Automatic Speech Recognition model to the library: Wav2Vec2

- Using one hour of labeled data, Wav2Vec2 outperforms the previous state of the art on the 100-hour subset of the LibriSpeech benchmark while using 100 times less labeled data

- Using just ten minutes of labeled data and pre-training on 53k hours of unlabeled data, Wav2Vec2 achieves a Word Error Rate (WER) of 4.8/8.2 on the clean/other test sets of LibriSpeech

- Learn how to use Wav2Vec2 through the Transformers library for audio-to-text generation

Introduction

Transformers have been a driving force behind breakthrough developments in the audio and speech processing domain, and Hugging Face has no plans to stop expanding their applications. Hugging Face just released v4.3.0 of Transformers, its state-of-the-art Natural Language Processing library, and extended its reach to speech recognition by adding Wav2Vec2, one of the leading Automatic Speech Recognition models, developed by Facebook.

We have seen deep learning models benefit from large quantities of labeled training data. However, labeled data is much harder to come by than unlabeled data, especially in the speech recognition domain. Current systems require thousands of hours of transcribed speech to reach acceptable performance, and that much transcribed audio is not available for most of the more than 6,000 languages spoken worldwide.

In recent years, self-supervised learning has emerged as a paradigm to learn general data representations from unlabeled examples and to fine-tune the model on labeled data. This has been particularly successful for natural language processing and is an active research area for computer vision.

Wav2Vec2 uses self-supervised learning to enable speech recognition for many more languages and dialects by learning from unlabeled training data. With just one hour of labeled training data, Wav2Vec2 outperforms the previous state of the art on the 100-hour subset of the LibriSpeech benchmark using 100 times less labeled data.


Working

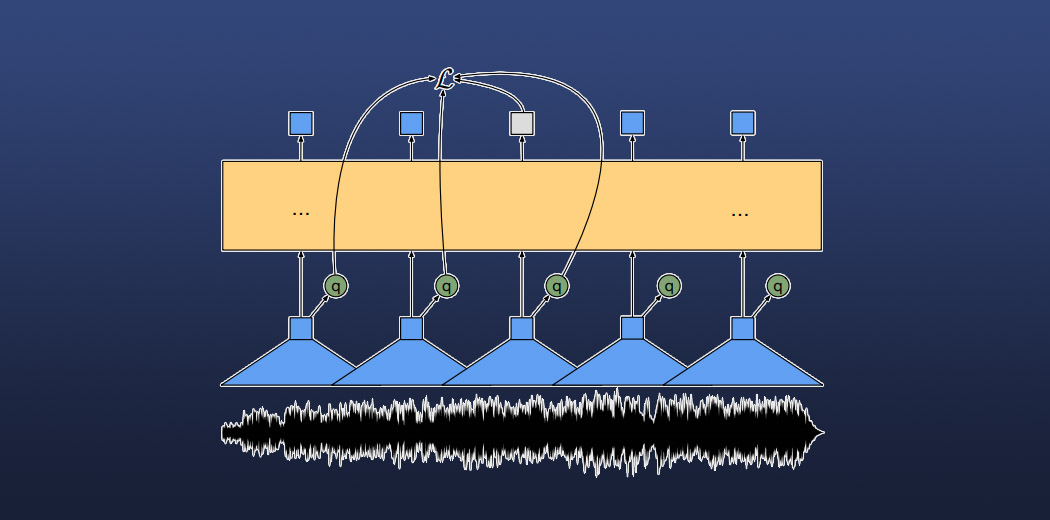

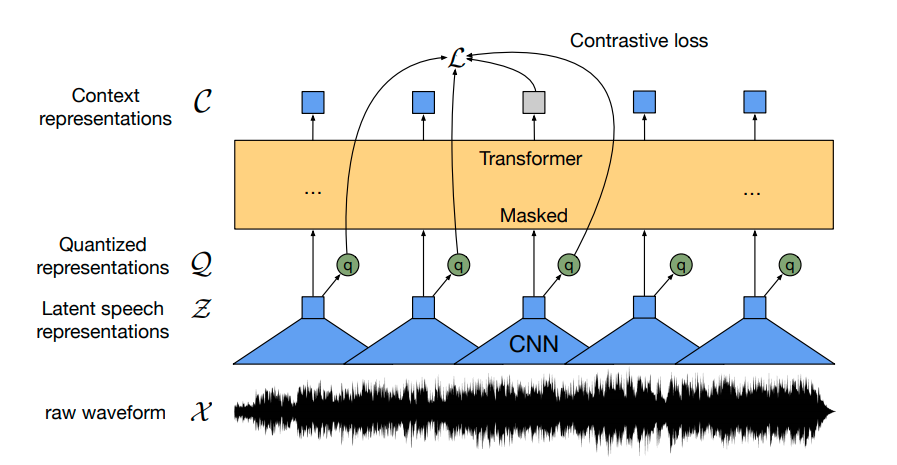

Source

The model takes as input a raw speech signal in any language. This one-dimensional audio data is passed to a multi-layer 1-D convolutional neural network that generates latent audio representations of 25 ms each. The model uses a quantizer similar in concept to that of a VQ-VAE, where the latent representations are matched against a codebook to select the most appropriate representation for the data.
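To make the 25 ms figure concrete, here is a small sketch of the frame arithmetic behind the convolutional network. The kernel widths and strides are the ones reported in the wav2vec 2.0 paper; this article does not list them, so treat them as an assumption. The function names are made up for illustration.

```python
import math

# Kernel widths and strides of the 7 convolutional layers, as reported in the
# wav2vec 2.0 paper (an assumption here; this article does not list them).
KERNELS = (10, 3, 3, 3, 3, 2, 2)
STRIDES = (5, 2, 2, 2, 2, 2, 2)
SAMPLE_RATE = 16_000  # samples per second


def num_frames(num_samples: int) -> int:
    """Number of latent vectors produced for a waveform of num_samples samples."""
    length = num_samples
    for kernel, stride in zip(KERNELS, STRIDES):
        # Output length of an unpadded 1-D convolution.
        length = (length - kernel) // stride + 1
    return length


def receptive_field() -> int:
    """Number of input samples that influence one latent vector."""
    field, jump = 1, 1
    for kernel, stride in zip(KERNELS, STRIDES):
        field += (kernel - 1) * jump
        jump *= stride
    return field


def hop_size() -> int:
    """Number of input samples between two consecutive latent vectors."""
    return math.prod(STRIDES)


print(num_frames(SAMPLE_RATE))                 # 49 vectors per second of audio
print(1000 * receptive_field() / SAMPLE_RATE)  # 25.0 ms seen by each vector
print(1000 * hop_size() / SAMPLE_RATE)         # 20.0 ms between vectors
```

Under these assumptions each vector covers 25 ms of audio, and consecutive vectors start 20 ms apart, so neighbouring windows overlap slightly.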

Before the latent representations are fed into the transformer, about half of them are masked. The idea is to use the output of the transformer to identify the correct quantized vector for each masked position among a set of alternatives. This is done using a contrastive loss function.
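The contrastive objective can be sketched in a few lines of plain Python. This is a simplified illustration and not the library's implementation: the masking picks independent frames rather than spans, the vectors are toy values, the temperature of 0.1 is an assumed hyperparameter, and the additional codebook diversity term from the paper is left out. All function names are hypothetical.

```python
import math
import random


def sample_mask(num_frames, mask_fraction=0.5, seed=0):
    """Pick roughly mask_fraction of the frame indices to hide (simplified)."""
    rng = random.Random(seed)
    return sorted(rng.sample(range(num_frames), int(num_frames * mask_fraction)))


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def contrastive_loss(context, target, distractors, temperature=0.1):
    """Negative log-probability of the true quantized vector among all candidates.

    context:     transformer output at one masked position
    target:      the true quantized vector for that position
    distractors: quantized vectors taken from other positions
    """
    candidates = [target] + list(distractors)
    logits = [cosine(context, q) / temperature for q in candidates]
    top = max(logits)  # subtract the max for a numerically stable log-sum-exp
    log_total = top + math.log(sum(math.exp(x - top) for x in logits))
    return log_total - logits[0]


target = [1.0, 0.0]
distractors = [[0.0, 1.0], [-1.0, 0.0]]
good = contrastive_loss([0.9, 0.1], target, distractors)  # context near the target
bad = contrastive_loss([0.1, 0.9], target, distractors)   # context near a distractor
print(len(sample_mask(10)))  # 5
print(good < bad)            # True
```

The loss is small when the transformer output points towards the true quantized vector and large when it points towards a distractor, which is what pushes the model to infer the masked content from the surrounding audio.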

After pre-training on unlabeled speech, the model is fine-tuned on labeled data so it can be used for downstream speech tasks such as speech recognition, emotion recognition and speaker identification.

Performance and Implementation

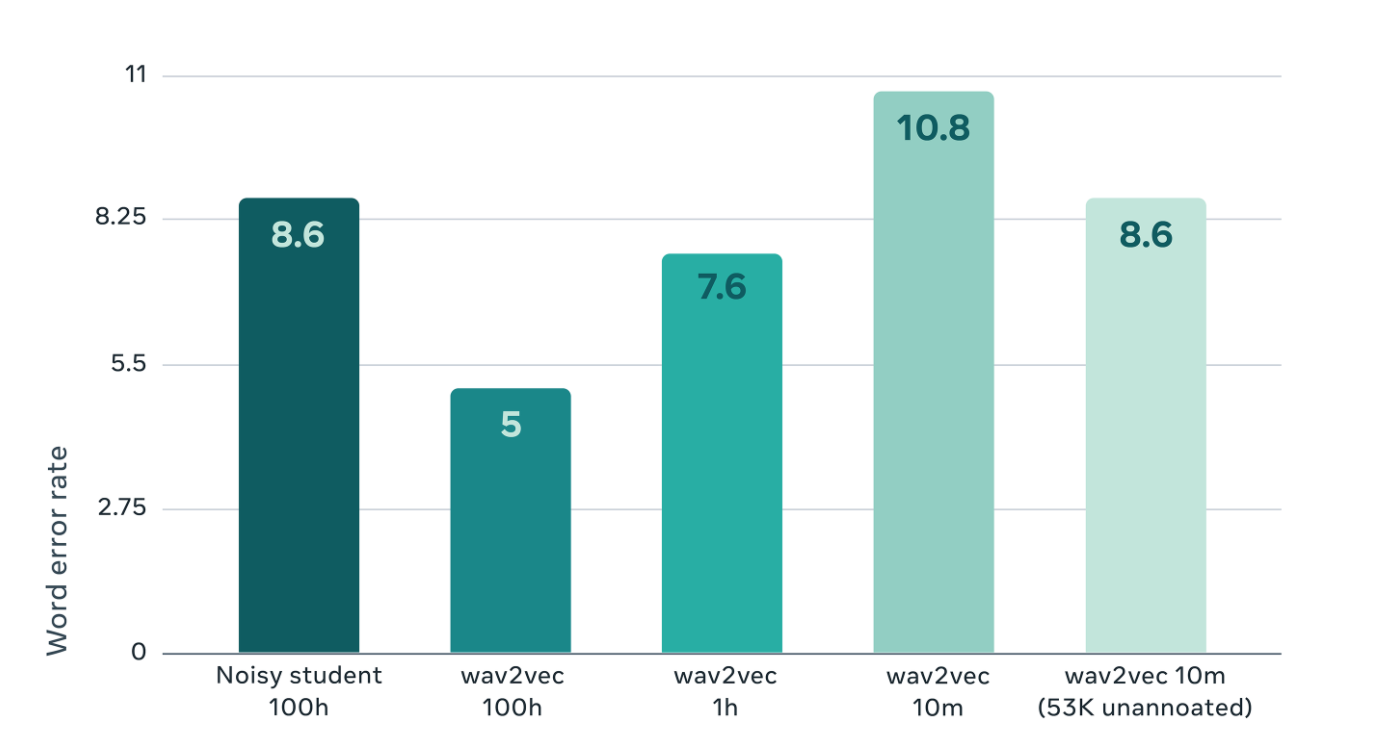

Noisy Student self-training with 100 hours of labeled data reaches a Word Error Rate (WER) of 8.6. Wav2Vec2 already performs better than this, both when fine-tuned on the same 100 hours and when fine-tuned on just 1 hour of labeled data. Even more surprising is how well Wav2Vec2 performs with only 10 minutes of labeled data.
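For readers new to the metric, WER is the word-level edit distance between the model's transcript and the reference transcript, divided by the number of words in the reference. The figures quoted here are percentages. Below is a minimal sketch; the function name is made up for illustration, and published benchmarks usually normalize the text (casing, punctuation) before scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # previous[j] holds the edit distance between the reference words seen so
    # far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


# One substitution ("sat" -> "sit") and one deletion ("the") over 6 words.
wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
print(round(100 * wer, 1))  # 33.3
```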

Similar to the Bidirectional Encoder Representations from Transformers (BERT), Wav2Vec2 is trained by predicting speech units for masked parts of the audio. Unlike text, speech has no predefined units, so the model learns its own basic units, each about 25 ms long. This enables it to outperform the best semi-supervised methods, even with 100x less labeled training data.

Hugging Face has hosted an inference API for the base model pre-trained and fine-tuned on 960 hours of Librispeech on 16kHz sampled speech audio. You can record an audio sample through your browser and see the results!

Let’s see an example of how to use the Transformers library and Wav2Vec2 to convert any English audio to text:

Here is a link to code on Google Colab!!

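Wav2Vec2ForCTC is the Wav2Vec2 model with a language modeling head on top for Connectionist Temporal Classification (CTC). Calling from_pretrained on the model and on the matching tokenizer loads the fine-tuned weights and the vocabulary of the checkpoint.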

! pip install -q transformers

import librosa
import torch
from transformers import Wav2Vec2ForCTC, Wav2Vec2Tokenizer

#load model and tokenizer

tokenizer = Wav2Vec2Tokenizer.from_pretrained("facebook/wav2vec2-base-960h")

model = Wav2Vec2ForCTC.from_pretrained("facebook/wav2vec2-base-960h")

Since the base model is pre-trained on 16 kHz audio, we must make sure our audio sample is also resampled to a 16 kHz sampling rate.
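If you load audio by some other route than a resampling loader, it can be worth checking the file's sampling rate first. The sketch below uses only Python's standard wave module and works for uncompressed WAV files; the helper names and the generated demo file are hypothetical and exist only for illustration.

```python
import os
import tempfile
import wave

TARGET_RATE = 16_000  # sampling rate the base model was trained on


def wav_sample_rate(path: str) -> int:
    """Read the sampling rate from the header of an uncompressed WAV file."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getframerate()


def needs_resampling(path: str, target_rate: int = TARGET_RATE) -> bool:
    """True if the file must be resampled before it is passed to the model."""
    return wav_sample_rate(path) != target_rate


def write_silence(path: str, rate: int, seconds: int = 1) -> None:
    """Demo helper: write a mono, 16-bit WAV file containing silence."""
    with wave.open(path, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)
        wav_file.setframerate(rate)
        wav_file.writeframes(b"\x00\x00" * rate * seconds)


with tempfile.TemporaryDirectory() as tmp_dir:
    demo_path = os.path.join(tmp_dir, "demo_8k.wav")
    write_silence(demo_path, rate=8_000)
    demo_rate = wav_sample_rate(demo_path)
    demo_needs = needs_resampling(demo_path)

print(demo_rate, demo_needs)  # 8000 True
```

Passing sr=16000 to librosa.load resamples the audio while loading, so this check matters mainly when the waveform comes from somewhere else.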

Next, we tokenize the inputs and ask for PyTorch tensors (return_tensors = 'pt') rather than plain Python objects, since the model expects tensors as input.

#load any audio file of your choice

speech, rate = librosa.load("Audio.wav",sr=16000)

input_values = tokenizer(speech, return_tensors = 'pt').input_values

#Store logits (non-normalized predictions)
logits = model(input_values).logits

#Store predicted id's
predicted_ids = torch.argmax(logits, dim =-1)

#decode the audio to generate text
transcriptions = tokenizer.decode(predicted_ids[0])

print(transcriptions)
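The argmax gives one predicted token per audio frame, so the raw sequence contains many repeats and blank tokens. Decoding a CTC output greedily means collapsing consecutive repeats and then dropping the blanks. The sketch below illustrates that idea on a toy sequence; it is not the tokenizer's actual code, and the blank token "<pad>" and word delimiter "|" are assumptions about this checkpoint's vocabulary.

```python
from itertools import groupby

BLANK = "<pad>"       # assumed CTC blank token
WORD_DELIMITER = "|"  # assumed token marking a word boundary


def ctc_greedy_decode(frame_tokens):
    """Turn per-frame token predictions into a transcript."""
    # 1. Collapse runs of identical consecutive predictions into one token.
    collapsed = [token for token, _ in groupby(frame_tokens)]
    # 2. Drop blanks; a blank between two equal letters keeps both letters.
    characters = [token for token in collapsed if token != BLANK]
    # 3. Replace word delimiters with spaces and tidy up the whitespace.
    text = "".join(characters).replace(WORD_DELIMITER, " ")
    return " ".join(text.split())


frames = ["H", "H", "<pad>", "E", "L", "L", "<pad>", "L", "O", "O",
          "|", "|", "W", "O", "R", "L", "D", "<pad>"]
decoded = ctc_greedy_decode(frames)
print(decoded)  # HELLO WORLD
```

Note how the blank between the two runs of "L" is what allows the double letter in "HELLO" to survive the collapsing step.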

End Notes

This model shows the large potential of pre-training on unlabeled data for speech processing and the widespread impact of transformers in recent years. Wav2Vec2 can enable improved automatic speech recognition for many more languages and domains, with much less annotated data while still achieving state-of-the-art results.

With the introduction of Wav2Vec2 in the Transformers library, Hugging Face has made it much easier to work with audio data and to build a state-of-the-art speech recognition system in very few lines of code.

At this rate, Hugging Face might be all we need!!
