This article was published as a part of the Data Science Blogathon.

Overview

- Understanding GPUs in deep learning.

- Starting with prerequisites for the installation of TensorFlow GPU.

- Installing and setting up the GPU environment.

- Testing and verifying the installation of the GPU.

“Graphics has lately made a great shift towards machine learning, which itself is about understanding data”

- Jefferson Han, Founder and Chief Scientist of Perceptive Pixel

Understanding GPUs in Deep learning

A CPU is optimized for low latency: it can fetch a small amount of data quickly, but it cannot process large amounts of data at once, because it has to make many round trips to main memory even for a simple task. A GPU, on the other hand, comes with its own dedicated video RAM (VRAM), so it makes fewer calls to main memory and sustains a much higher throughput.

A CPU has relatively few cores and is tuned for sequential work, whereas a GPU comes with hundreds or thousands of smaller cores working in parallel. This highly parallel architecture is what speeds up the matrix operations at the heart of deep learning.

Starting with prerequisites for the installation of TensorFlow – GPU

TensorFlow GPU works only with a CUDA-enabled graphics card. Most NVIDIA graphics cards released in the past several years are CUDA-enabled.

However, let’s pause and check whether your graphics card is CUDA-enabled. As Jennifer Young said, “Making the wrong assumptions causes pain and suffering for everyone.”

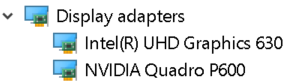

To verify the graphics card details, open the Run window from the Start menu and run control /name Microsoft.DeviceManager. The graphics card is listed under Display adapters.

Most machines come with integrated graphics, which sits on the same chip as the CPU and relies on system memory for handling graphics. A discrete graphics card, by contrast, is a unit independent of the CPU with its own memory and much higher graphics processing performance.

The GPU capabilities that TensorFlow needs are provided by the discrete graphics card. Therefore, make sure that your machine has a discrete NVIDIA graphics card installed in addition to any integrated graphics.
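
The Device Manager check can also be scripted. The following is a minimal sketch, assuming a Windows machine with PowerShell on the PATH. The helper names `parse_adapter_names` and `list_display_adapters` are illustrative and not part of any library. It queries the `Win32_VideoController` class and returns the adapter names, or an empty list when PowerShell is not available.

```python
import shutil
import subprocess


def parse_adapter_names(raw_output):
    """Turn command output (one adapter name per line) into a clean list."""
    return [line.strip() for line in raw_output.splitlines() if line.strip()]


def list_display_adapters():
    """Return display adapter names on Windows, or [] if PowerShell is unavailable."""
    powershell = shutil.which("powershell")
    if powershell is None:
        return []  # not on Windows, or PowerShell is not on the PATH
    command = [
        powershell,
        "-NoProfile",
        "-Command",
        "Get-CimInstance Win32_VideoController | Select-Object -ExpandProperty Name",
    ]
    result = subprocess.run(command, capture_output=True, text=True, check=False)
    if result.returncode != 0:
        return []
    return parse_adapter_names(result.stdout)


if __name__ == "__main__":
    for name in list_display_adapters():
        # A discrete NVIDIA card usually has "NVIDIA" in its name.
        marker = "(NVIDIA)" if "nvidia" in name.lower() else ""
        print(name, marker)
```

If the output contains only an integrated adapter, the machine has no discrete card for TensorFlow GPU to use.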

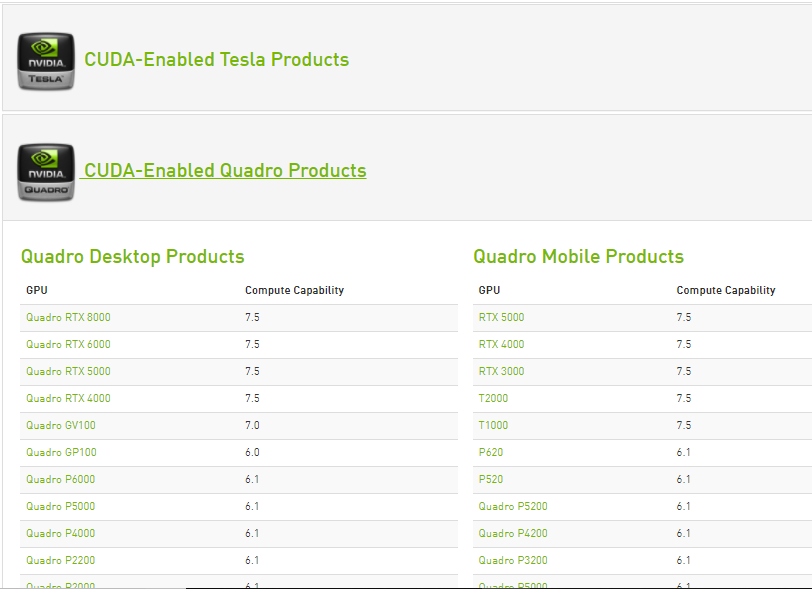

The compute capability of every CUDA-enabled NVIDIA graphics card is listed on the NVIDIA website. The discrete graphics card can support TensorFlow GPU only if it appears in this list.

Source: https://developer.nvidia.com/cuda-gpus

Once you know that the discrete graphics card can support TensorFlow GPU, start with the installation.

To make sure that none of the previous NVIDIA settings or configurations affects the installation, uninstall all the NVIDIA graphics drivers and software (optional step).

Installations of required prerequisites

Step 1: Installation of Visual Studio 2017

Microsoft Visual Studio is an integrated development environment from Microsoft used to develop computer programs, as well as websites, web apps, web services, and mobile apps.

The CUDA Toolkit includes Visual Studio project templates and the Nsight IDE (which can be used from Visual Studio). We need to install the VC++ 2017 toolset (at the time of writing, CUDA was not yet compatible with the latest version of Visual Studio).

- Visual Studio can be downloaded from the official Visual Studio website of Microsoft. Download the installer, select the workload ‘Desktop development with C++’, and install.

- During installation, the CUDA toolkit installs the necessary libraries, then checks for the Visual Studio versions available on the system and installs the Visual Studio integrations. So having Visual Studio installed on the system is a required step.

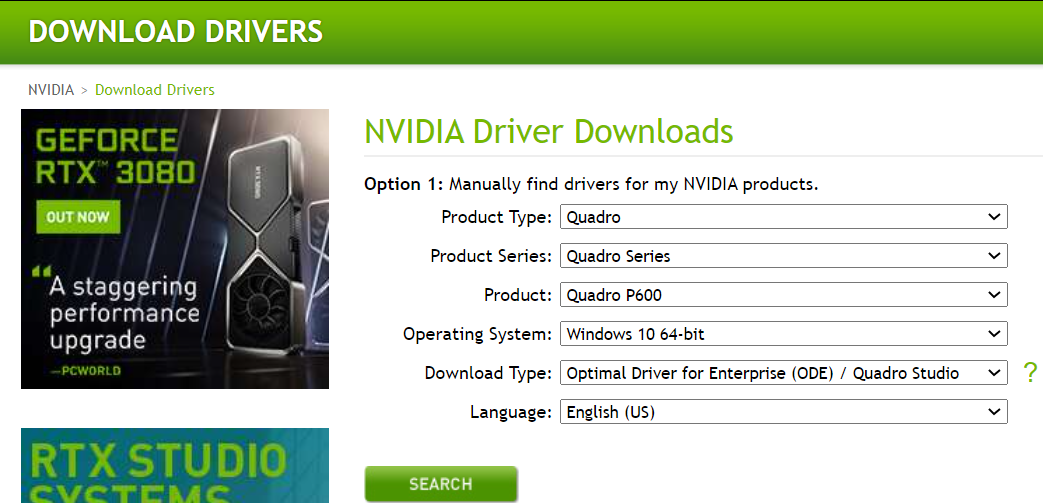

Step 2: Download and install the NVIDIA driver

The NVIDIA driver is the software driver for the NVIDIA GPU installed on the PC. It is the program that lets the Windows operating system communicate with the device, and it is required for the hardware to function properly.

To download it, navigate to the download page of Nvidia.com and provide the details of the graphics card and system in the dropdowns. Click Search and the site will provide the download link.

Install the downloaded NVIDIA driver.

Once the driver and the CUDA toolkit from Step 3 are installed, we should get a folder named NVIDIA GPU Computing Toolkit in Program Files on the C drive, with a CUDA subfolder inside.

Step 3: CUDA toolkit

The Nvidia CUDA Toolkit provides a development environment for creating high-performance GPU-accelerated applications. With the CUDA Toolkit, you can develop, optimize, and deploy your applications on GPU-accelerated embedded systems, desktop workstations, enterprise data centers, cloud-based platforms, and HPC supercomputers. The toolkit includes GPU-accelerated libraries, debugging and optimization tools, a C/C++ compiler, and a runtime library to build and deploy your application on major architectures including x86, Arm, and POWER.

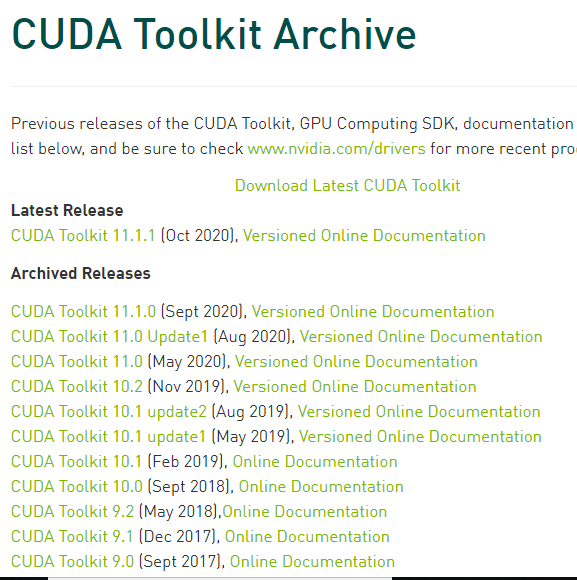

TensorFlow supports several versions of CUDA. The CUDA version supported by each TensorFlow release is listed on the TensorFlow GPU support webpage.

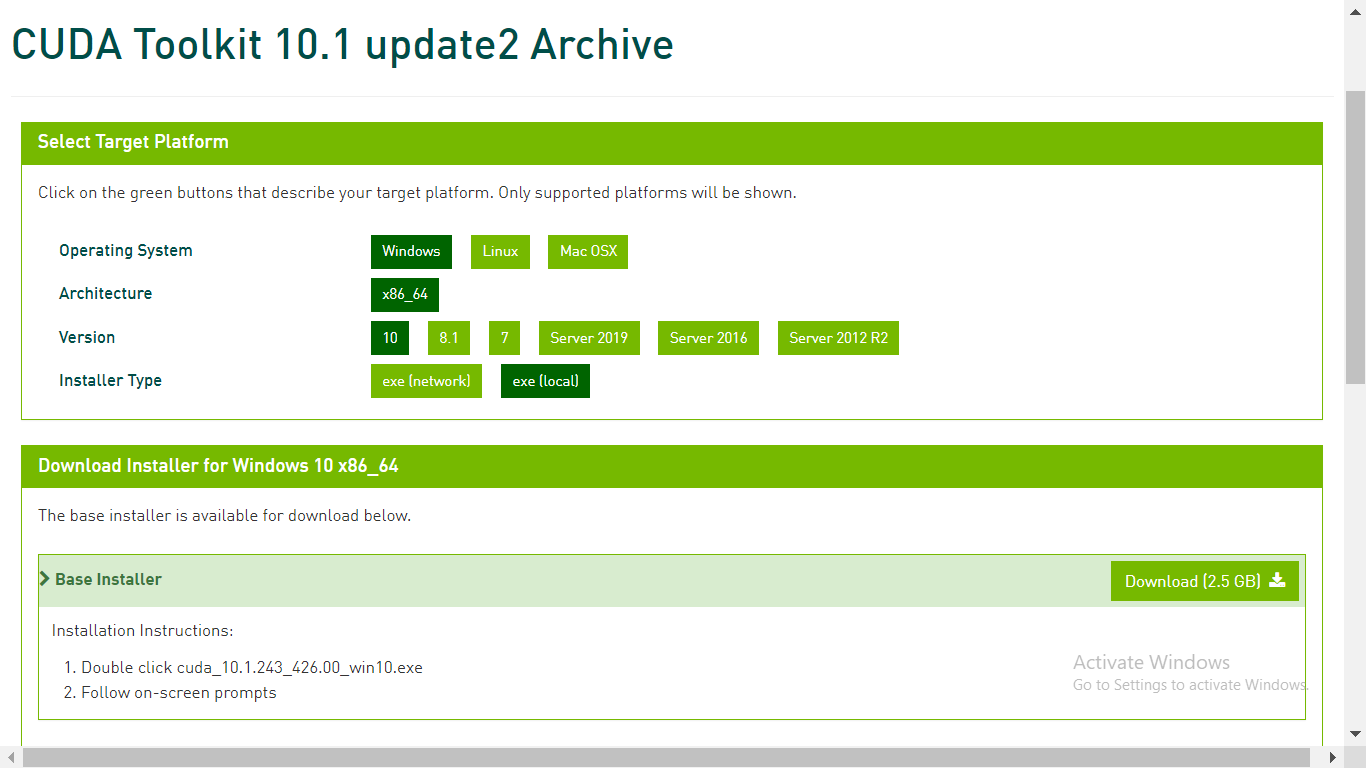

Download the required CUDA toolkit from the CUDA Toolkit Archive on developer.nvidia.com and install it.
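
After the toolkit is installed, it can help to confirm which CUDA version actually ended up on the machine. The sketch below assumes that the toolkit's `nvcc` compiler is on the PATH and that its `--version` output contains a "release X.Y" string. The function names are illustrative.

```python
import re
import shutil
import subprocess


def parse_nvcc_release(nvcc_output):
    """Extract the X.Y version from the 'release X.Y' part of `nvcc --version` output."""
    match = re.search(r"release\s+(\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def installed_cuda_version():
    """Return the CUDA toolkit version reported by nvcc, or None if nvcc is not found."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None  # toolkit not installed, or its bin folder is not on the PATH
    result = subprocess.run([nvcc, "--version"], capture_output=True, text=True, check=False)
    return parse_nvcc_release(result.stdout)


if __name__ == "__main__":
    version = installed_cuda_version()
    if version is None:
        print("nvcc not found; check that the CUDA toolkit is installed")
    else:
        print("CUDA toolkit version:", version)
```

Compare the printed version with the one that the TensorFlow GPU support page lists for your TensorFlow release.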

Step 4: Download cuDNN

cuDNN is a library with a set of optimized low-level primitives to boost the processing speed of deep neural networks (DNN) on CUDA-compatible GPUs.

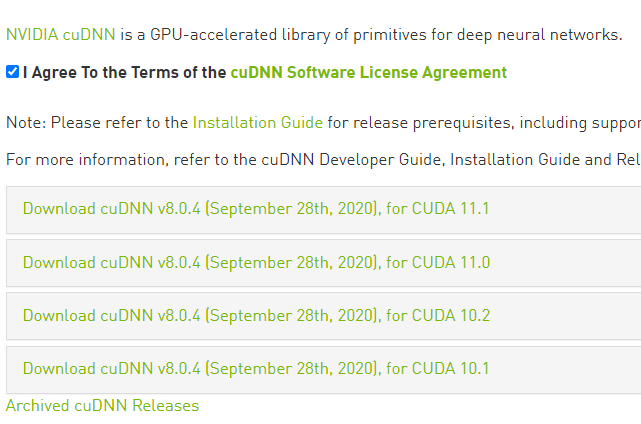

Navigate to the cuDNN download page on developer.nvidia.com and download the cuDNN version that is compatible with your CUDA version.

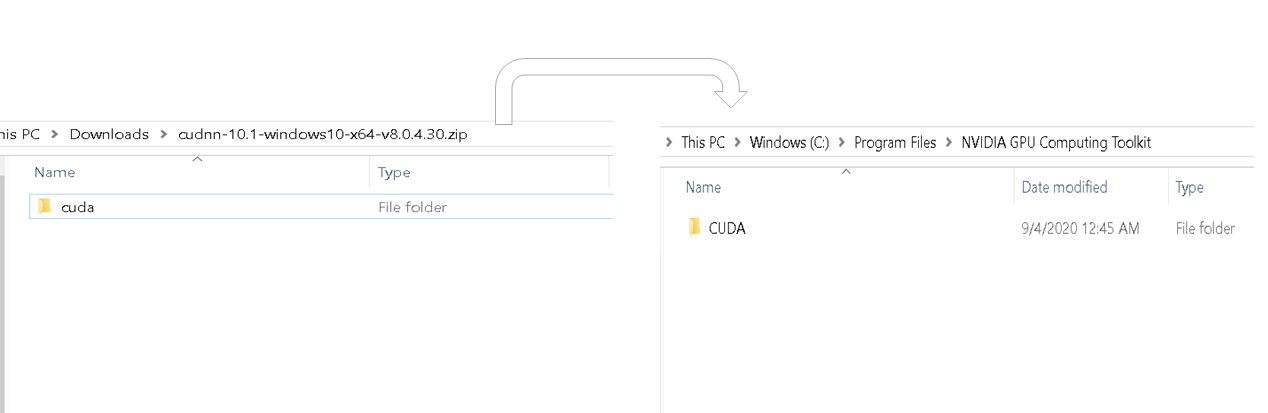

Once the download is complete, extract the downloaded archive. The extracted folder should contain a CUDA folder whose layout (bin, include, lib) matches the CUDA folder inside the NVIDIA GPU Computing Toolkit folder in Program Files. Please refer to the picture for the folder layout.

Copy the cudnn64_*.dll file (for example, cudnn64_8.dll for cuDNN 8) from the bin folder of the extracted folder and paste it into the matching bin folder inside the CUDA folder of the NVIDIA GPU Computing Toolkit.

Copy the cudnn.h file from the include subfolder of the extracted folder and paste it into the matching include folder inside the CUDA folder of the NVIDIA GPU Computing Toolkit.

Copy the cudnn.lib file from the lib > x64 subfolder of the extracted folder and paste it into the matching lib > x64 folder inside the CUDA folder of the NVIDIA GPU Computing Toolkit.
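
The three copy steps can be scripted so that no file is forgotten. This is a minimal sketch, assuming the usual cuDNN layout in which each file goes into the CUDA subfolder of the same name (bin to bin, include to include, lib/x64 to lib/x64). The function name and the copy plan are illustrative. The sketch copies every file matching `cudnn*`, which is a superset of the three files named in the steps.

```python
import shutil
from pathlib import Path

# (pattern inside the extracted cuDNN folder, destination subfolder inside CUDA)
CUDNN_COPY_PLAN = [
    ("bin/cudnn*.dll", "bin"),
    ("include/cudnn*.h", "include"),
    ("lib/x64/cudnn*.lib", "lib/x64"),
]


def copy_cudnn_files(cudnn_root, cuda_root):
    """Copy cuDNN DLLs, headers, and libs into the matching CUDA subfolders."""
    cudnn_root, cuda_root = Path(cudnn_root), Path(cuda_root)
    copied = []
    for pattern, destination in CUDNN_COPY_PLAN:
        target_dir = cuda_root / destination
        target_dir.mkdir(parents=True, exist_ok=True)
        for source in sorted(cudnn_root.glob(pattern)):
            shutil.copy2(source, target_dir / source.name)
            copied.append(target_dir / source.name)
    return copied
```

Call it with the extracted cuDNN CUDA folder as the first argument and the versioned CUDA folder under NVIDIA GPU Computing Toolkit as the second. Writing into Program Files typically requires a command prompt started as administrator.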

Now we have completed the download and installation of CUDA for the GPU. Let’s set up the environment.

Installing and setting up the GPU environment

Anaconda is a Python distribution that helps to set up virtual environments. Assuming that Anaconda is already installed, let’s start with creating a virtual environment.

Step 1: Create a virtual environment

Create a virtual environment from the command prompt by running: conda create -n [env_name] python=[python_version]

TensorFlow supports only a few versions of Python. Choose a Python version that TensorFlow supports when creating the environment.
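
A quick way to catch a version mismatch early is to compare the interpreter version against the supported range. In the sketch below, the bounds `MIN_PYTHON` and `MAX_PYTHON` are assumptions chosen for illustration; replace them with the range that the TensorFlow documentation gives for the release you plan to install.

```python
import sys

# Assumed inclusive range, for illustration only.
# Replace with the values from the TensorFlow docs for your release.
MIN_PYTHON = (3, 7)
MAX_PYTHON = (3, 10)


def python_is_supported(version=None, minimum=MIN_PYTHON, maximum=MAX_PYTHON):
    """Return True if the (major, minor) version falls inside the inclusive range."""
    if version is None:
        version = sys.version_info[:2]
    return minimum <= tuple(version) <= maximum


if __name__ == "__main__":
    current = sys.version_info[:2]
    status = "inside" if python_is_supported(current) else "outside"
    print("Python %d.%d is %s the assumed supported range" % (current[0], current[1], status))
```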

Next, activate the virtual environment by running: conda activate [env_name]

Inside the activated virtual environment, install the latest version of TensorFlow GPU by running: pip install --ignore-installed --upgrade tensorflow-gpu

Once TensorFlow GPU is installed, check whether the environment has the basic Python packages such as pandas, NumPy, Jupyter, and Keras. If any of them is missing, install it.
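
The package check can be done from Python without importing each package in full. This is a small sketch that uses the standard library's `importlib.util.find_spec`. The list of names mirrors the packages mentioned in the text, and `find_missing` is an illustrative helper name.

```python
import importlib.util

REQUIRED_PACKAGES = ["pandas", "numpy", "jupyter", "keras"]


def find_missing(packages):
    """Return the packages from the list that cannot be found in this environment."""
    return [name for name in packages if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing(REQUIRED_PACKAGES)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages are available")
```

Run it inside the activated environment, then install whatever it reports as missing with pip.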

Install ipykernel by running: pip install ipykernel

Let’s set the display name and link the kernel to the virtual environment by running: python -m ipykernel install --user --name [env_name] --display-name "any name"

Step 2: Set the Python kernel in Jupyter

Open Jupyter Notebook and, from the menu bar, click Kernel and change the kernel to the environment we just registered.
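
To confirm that the notebook really runs on the new environment, you can inspect the interpreter from a notebook cell. This is a minimal sketch; `describe_interpreter` is an illustrative name, and the expectation that the paths contain your environment name assumes a default Anaconda layout.

```python
import sys


def describe_interpreter():
    """Report which Python interpreter and environment prefix this kernel runs on."""
    return {"executable": sys.executable, "prefix": sys.prefix}


if __name__ == "__main__":
    for key, value in describe_interpreter().items():
        print(key + ":", value)
```

If the printed paths point to the base Anaconda installation instead of your environment's folder, the kernel was not switched.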

Testing and verifying the installation of the GPU

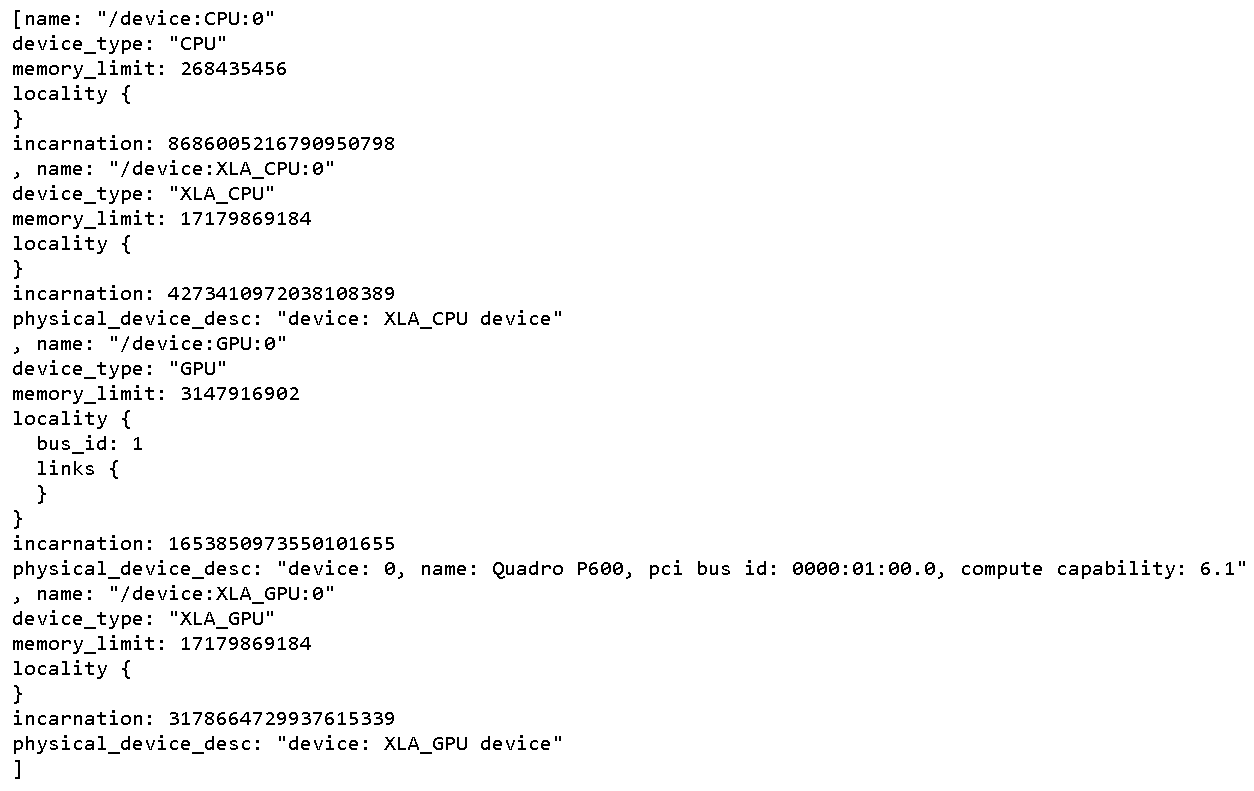

To check all the physical devices available to TensorFlow, run the code below:

from tensorflow.python.client import device_lib

print(device_lib.list_local_devices())

This prints all the devices available to TensorFlow.
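
In TensorFlow 2.x, the public `tf.config.list_physical_devices` function offers a shorter way to list only the GPUs. The sketch below assumes TensorFlow 2.1 or later. The import is wrapped in a try block so that the script reports a missing installation instead of crashing, and `list_gpus` is an illustrative name.

```python
def list_gpus():
    """Return the GPUs visible to TensorFlow, or None if TensorFlow is not installed."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    return tf.config.list_physical_devices("GPU")


if __name__ == "__main__":
    gpus = list_gpus()
    if gpus is None:
        print("TensorFlow is not installed in this environment")
    elif not gpus:
        print("TensorFlow is installed but sees no GPU")
    else:
        for gpu in gpus:
            print(gpu.name, gpu.device_type)
```

An empty list usually means that the driver, CUDA, or cuDNN versions do not match what the installed TensorFlow build expects.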

To check whether TensorFlow is built with CUDA support, run the code below:

import tensorflow as tf

print(tf.test.is_built_with_cuda())

The output is a Boolean value, which is True if TensorFlow is built with CUDA.
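
Beyond the Boolean, it is often useful to see which CUDA and cuDNN versions the installed TensorFlow binary was built against, so they can be compared with what is installed on the machine. The sketch below assumes TensorFlow 2.3 or later, where `tf.sysconfig.get_build_info()` is available. On a CPU-only build the version keys may be absent, so they are read with `.get`. The helper name is illustrative.

```python
def cuda_build_info():
    """Return CUDA build details of the installed TensorFlow, or None if unavailable."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    get_build_info = getattr(tf.sysconfig, "get_build_info", None)
    if get_build_info is None:
        return None  # TensorFlow version too old to expose build info
    info = get_build_info()
    return {
        "is_cuda_build": info.get("is_cuda_build", False),
        "cuda_version": info.get("cuda_version"),
        "cudnn_version": info.get("cudnn_version"),
    }


if __name__ == "__main__":
    print(cuda_build_info())
```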

To know whether the GPU is used at run time, start the execution of any complex neural network.
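
If no network is at hand, a large matrix multiplication is a simpler stand-in workload that also exercises the GPU. The following is a sketch, not a benchmark: it assumes TensorFlow 2.x in eager mode, falls back to the CPU when no GPU is visible, and uses the illustrative helper name `time_matmul`. The first call includes one-time initialization overhead, so the timing is only indicative.

```python
import time


def time_matmul(size=2000):
    """Multiply two random matrices; return (device string, seconds) or None without TensorFlow."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    # Use the first GPU if TensorFlow sees one, otherwise fall back to the CPU.
    device = "/GPU:0" if tf.config.list_physical_devices("GPU") else "/CPU:0"
    with tf.device(device):
        a = tf.random.normal((size, size))
        b = tf.random.normal((size, size))
        start = time.perf_counter()
        product = tf.matmul(a, b)
        # Converting to NumPy forces the computation to finish before the timer stops.
        product.numpy()
        elapsed = time.perf_counter() - start
    return product.device, elapsed
```

Calling `time_matmul()` in a loop while Task Manager is open should show the GPU load rising, and the returned device string should end in GPU:0 when the GPU was used.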

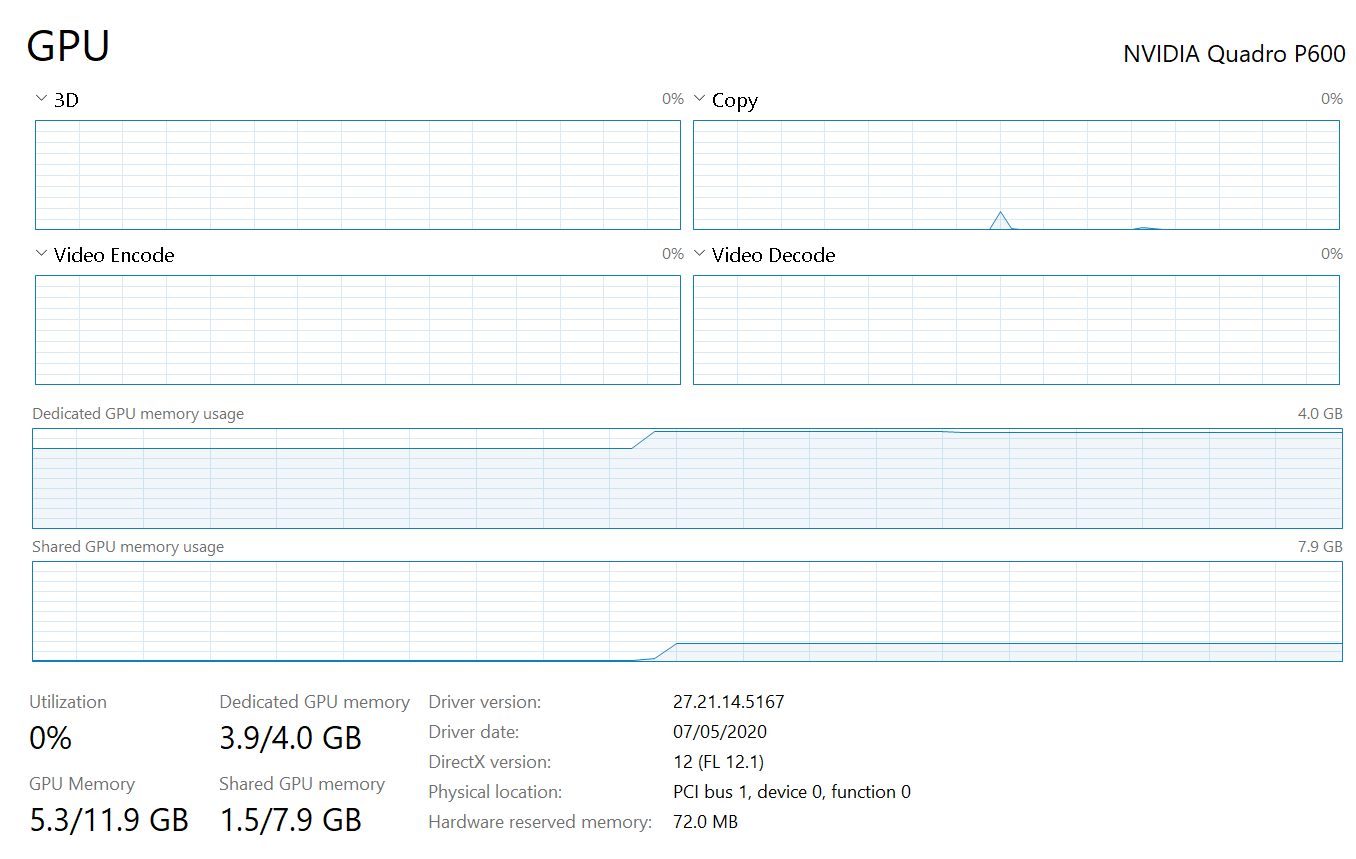

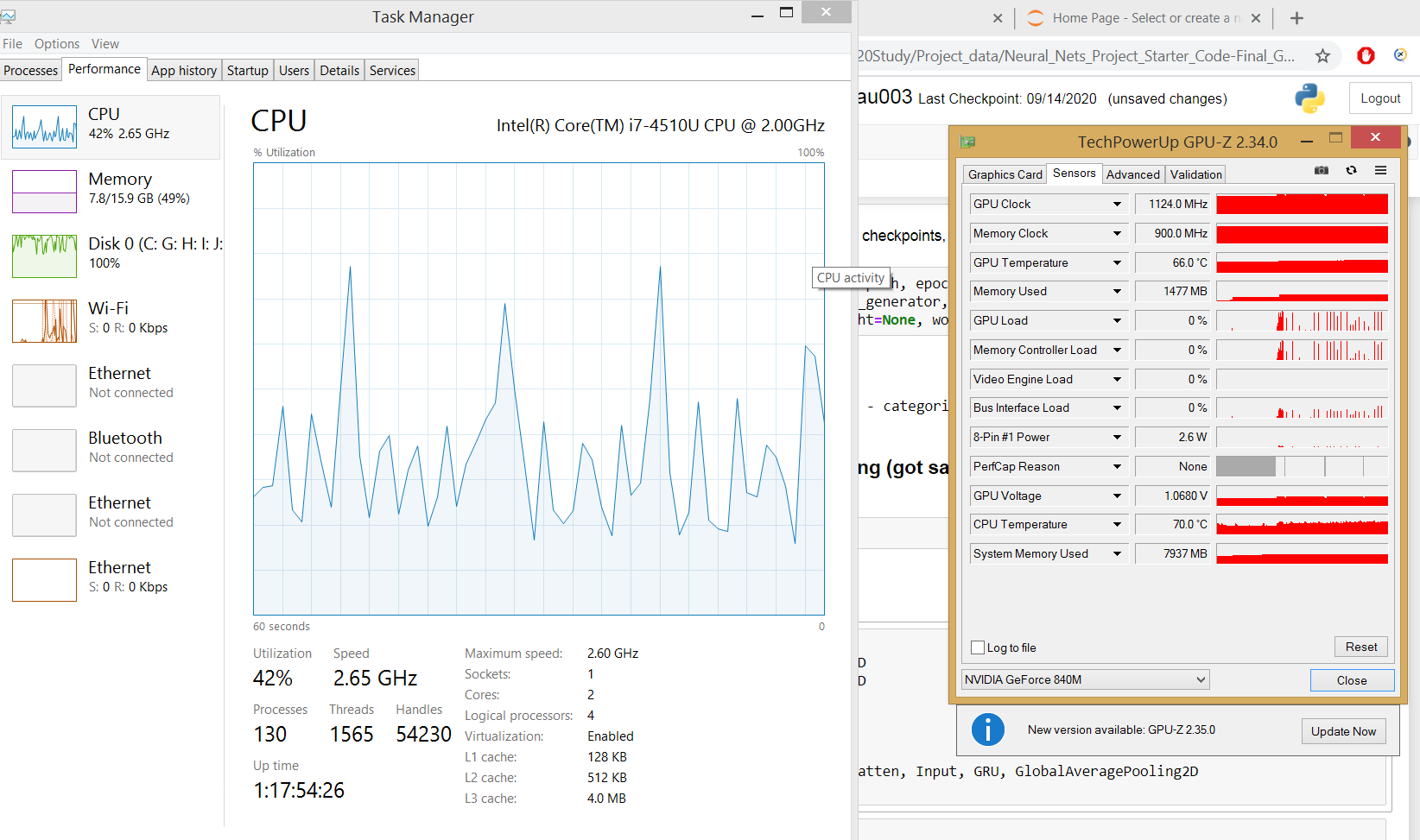

Task Manager displays the GPU utilization and the memory occupied by the TensorFlow program during execution.

If the Processes tab of Task Manager doesn’t display the GPU utilization, which is the case for machines running Windows 8.1, use a third-party tool like GPU-Z to observe the GPU utilization.
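
Another option is the `nvidia-smi` command-line tool that ships with the NVIDIA driver. The sketch below assumes that `nvidia-smi` is on the PATH (on some installations it is not, and the full path is needed) and polls it once for utilization and memory use. The function names are illustrative.

```python
import shutil
import subprocess


def parse_gpu_usage(csv_output):
    """Parse 'utilization %, memory used MiB' lines into a list of (int, int) tuples."""
    usage = []
    for line in csv_output.splitlines():
        parts = [part.strip() for part in line.split(",")]
        # Skip lines with unsupported fields such as "[N/A]".
        if len(parts) == 2 and all(part.isdigit() for part in parts):
            usage.append((int(parts[0]), int(parts[1])))
    return usage


def query_gpu_usage():
    """Return one (utilization %, memory MiB) tuple per GPU, or [] if nvidia-smi is missing."""
    nvidia_smi = shutil.which("nvidia-smi")
    if nvidia_smi is None:
        return []
    command = [
        nvidia_smi,
        "--query-gpu=utilization.gpu,memory.used",
        "--format=csv,noheader,nounits",
    ]
    result = subprocess.run(command, capture_output=True, text=True, check=False)
    return parse_gpu_usage(result.stdout)


if __name__ == "__main__":
    for index, (utilization, memory_mib) in enumerate(query_gpu_usage()):
        print("GPU %d: %d%% utilization, %d MiB used" % (index, utilization, memory_mib))
```

Run it while a TensorFlow program is training; non-zero utilization and growing memory use indicate that the GPU is in use.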

The installation and setup of the GPU are now complete.

Conclusion

- There are many free GPU computing cloud platforms that could make our GPU computations in deep neural networks faster. So give them a try if your machine does not contain a dedicated GPU.

- All the above installation steps are dependent on one another, so we need to follow the same sequence as mentioned above.

- It is hard to debug why a machine is not able to use its dedicated GPU, so don’t miss any of the steps.