This article was published as a part of the Data Science Blogathon

Wow! Some libraries out there pamper developers so much that there is hardly any work left to do. Well, not exactly no work, but definitely far less than we would have done without these magical libraries.

Today I have one of these magical libraries, which does the job of object detection in just one line. YESS!! One line. The library is cvlib.

About cvlib

cvlib is a simple, easy-to-use, high-level (you don’t need to worry about what’s underneath), open-source computer vision library for Python. The library was developed with a focus on enabling easy and fast experimentation. Most of cvlib’s guiding principles are heavily inspired by the famous Keras library (a deep learning library used on top of TensorFlow).

A few of these principles are:

- simplicity

- user-friendliness

- modularity and

- extensibility

Installation

The library is powered by 2 very capable libraries, which need to be installed before we can use cvlib without any issues.

- OpenCV: installed through pip install opencv-python

- TensorFlow: installed through pip install tensorflow

Once these are installed successfully, simply run pip install cvlib, and that’s it for the installation.
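To confirm that the three packages are visible to your interpreter before moving on, a quick check like the one below can help. This is only a sketch: the helper name `missing_modules` is made up for illustration, and it assumes the packages are imported under the names `cv2`, `tensorflow`, and `cvlib`.

```python
from importlib.util import find_spec


def missing_modules(module_names):
    """Return the names from module_names that cannot be found for import."""
    return [name for name in module_names if find_spec(name) is None]


# These are import names, not pip names: opencv-python is imported as cv2.
required = ["cv2", "tensorflow", "cvlib"]
missing = missing_modules(required)

if missing:
    print(f"Missing modules: {missing}")
else:
    print("All required modules were found.")
```

The check only looks the modules up; it does not import them, so it stays fast even though TensorFlow is slow to load.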

Now, just so we are all on the same page: YOLO is a very popular computer vision algorithm used for object detection tasks. It stands for You Only Look Once, which hints at the reason for its popularity: really quick detection results. There is a lot going on inside these algorithms that we will not cover in this article. Today, you will learn how easy it is to detect objects in images without knowing any of the backend processes. Let’s get started (for demonstration I am using Jupyter Notebooks).

1. Inspecting the Image

First, let us look at the images that we will pass as input to our YOLOv3 model. This gives us some idea of what to expect from the output, so we can judge the results on that basis.

So that you can copy and learn from exactly what I am doing, download these 2 images and save them in a new directory (folder) called ‘images’ inside the parent directory where you are writing the code: Apple Image & Clock Image

from IPython.display import Image, display

# Some example images
image_files = [
    'apple1.jpg',
    'clock_wall.jpg'
]

for image_file in image_files:
    print(f"\nDisplaying image with Image Name: {image_file}")
    display(Image(filename=f"images/{image_file}"))

2. Model Overview

Now that we have a sense of the images in which we want to detect apples and clocks, it’s time to see if the model is able to detect and classify them correctly. We know that we will use cvlib; to be more precise, we will use the cvlib.detect_common_objects function for the detection. This function takes an image formatted as a NumPy array as input and returns 3 things:

- bbox: a list of bounding box coordinates for the detected objects.

- label: a list of labels for the detected objects, in the same order as the list of bounding box coordinates.

- conf: a list of confidence scores for the detections, showing how confident the model is that each prediction is correct.
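Because the three lists are aligned by index, entry i of each list describes the same detection. A minimal sketch of pairing them up is shown here. The helper `pair_detections` is hypothetical, the sample values are made up purely for illustration (they are not real model output), and the sketch assumes each bounding box is a list of four integers.

```python
def pair_detections(bbox, label, conf):
    """Combine the three index-aligned lists into one list of dictionaries."""
    if not (len(bbox) == len(label) == len(conf)):
        raise ValueError("bbox, label and conf must have the same length")
    return [
        {"label": l, "confidence": c, "box": b}
        for b, l, c in zip(bbox, label, conf)
    ]


# Made-up values for illustration only, not real model output.
sample_bbox = [[10, 20, 110, 140], [200, 40, 320, 180]]
sample_label = ["apple", "apple"]
sample_conf = [0.91, 0.72]

for detection in pair_detections(sample_bbox, sample_label, sample_conf):
    print(detection)
```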

One last thing before we create the function for detection: we need a directory to store the images that we generate with bounding boxes and labels on them.

import os

dir_name = "images_with_boxes"

if not os.path.exists(dir_name):
    os.mkdir(dir_name)

Before looking at the code, let me walk you through the input arguments of the detect_and_draw_box function that we are about to write. The last two are passed straight on to detect_common_objects.

1. filename: the name of the input image in your file system.

2. model: this parameter chooses among the trained YOLO models available in the library. By default ‘yolov3’ is selected (this may differ between cvlib versions), but for faster inference and lower memory usage, we will be using ‘yolov3-tiny’. This does reduce the model’s accuracy somewhat, but for a demonstration it is a good trade-off between accuracy and speed.

3. confidence: a threshold; only bounding boxes whose confidence is greater than this value are kept.

In our case, we will use model: ‘yolov3-tiny’ and confidence: 0.6 as the defaults.
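The effect of the confidence threshold can be pictured in plain Python. The sketch below is not how cvlib implements it internally; `keep_confident` is a hypothetical helper and the scores are invented for illustration.

```python
def keep_confident(labels, scores, threshold=0.6):
    """Keep only the (label, score) pairs whose score is greater than threshold."""
    return [(l, s) for l, s in zip(labels, scores) if s > threshold]


# Invented scores for illustration only, not real model output.
labels = ["apple", "apple", "orange"]
scores = [0.93, 0.56, 0.31]

print(keep_confident(labels, scores, threshold=0.6))
# [('apple', 0.93)]
print(keep_confident(labels, scores, threshold=0.2))
# [('apple', 0.93), ('apple', 0.56), ('orange', 0.31)]
```

A higher threshold gives fewer but more trustworthy boxes, while a lower one lets weaker guesses through.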

import cv2
import cvlib as cv
from cvlib.object_detection import draw_bbox


def detect_and_draw_box(filename, model="yolov3-tiny", confidence=0.6):
    """Detects common objects on an image and creates a new image with bounding boxes.

    Args:
        filename (str): Filename of the image.
        model (str): Either "yolov3" or "yolov3-tiny". Defaults to "yolov3-tiny".
        confidence (float, optional): Desired confidence level. Defaults to 0.6.
    """
    # Images are stored under the images/ directory
    img_filepath = f'images/{filename}'

    # Read the image into a numpy array
    img = cv2.imread(img_filepath)

    # Perform the object detection
    bbox, label, conf = cv.detect_common_objects(img, confidence=confidence, model=model)

    # Print current image's filename
    print(f"========================\nImage processed: {filename}\n")

    # Print detected objects with confidence level
    for l, c in zip(label, conf):
        print(f"Detected object: {l} with confidence level of {c}\n")

    # Create a new image that includes the bounding boxes
    output_image = draw_bbox(img, bbox, label, conf)

    # Save the image in the directory images_with_boxes
    cv2.imwrite(f'images_with_boxes/{filename}', output_image)

    # Display the image with bounding boxes
    display(Image(f'images_with_boxes/{filename}'))

That’s the whole process: take the image as input, run model inference on it, get the output, draw the bounding boxes and labels onto the image, and save the result in a directory.
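One thing the function does not handle is a missing file: cv2.imread returns None instead of raising an error when it cannot read an image, so the failure only surfaces later in a less obvious place. A small guard, sketched below with a hypothetical helper name `image_path_or_raise`, could be called before reading the image. It assumes the same images/ directory layout used in this article.

```python
from pathlib import Path


def image_path_or_raise(filename, image_dir="images"):
    """Return the path to the image as a string, or raise if the file is missing."""
    path = Path(image_dir) / filename
    if not path.is_file():
        raise FileNotFoundError(f"Image not found: {path}")
    return str(path)
```

Inside detect_and_draw_box, the line that builds img_filepath could then become img_filepath = image_path_or_raise(filename), so a typo in the filename fails early with a clear message.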

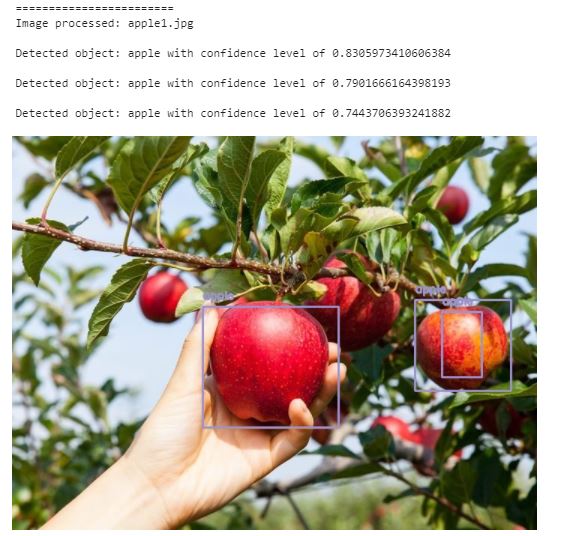

3. Looking at the Results

So far we have only created a function to do all these tasks. It is time to run the code and see it in action.

for image_file in image_files:
    detect_and_draw_box(image_file)

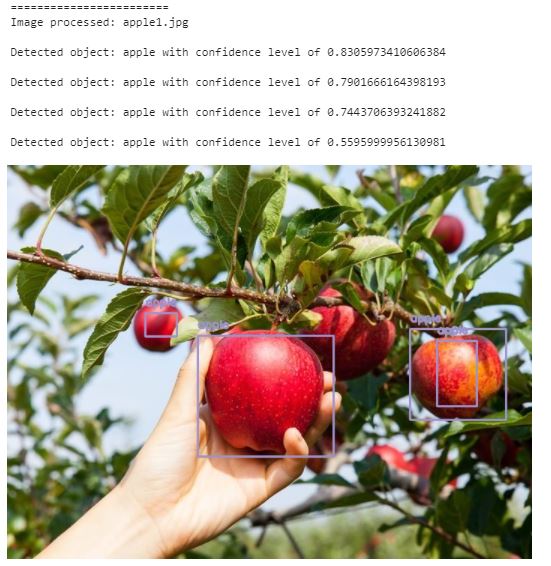

Look, this is not a bad model at all, and because we chose yolov3-tiny, a small trade-off is expected. I am satisfied with these outputs. But before we wrap up, let us do a small experiment and see what happens if we lower the confidence threshold, so that detections which couldn’t clear the confidence value of 0.6 are no longer filtered out.

detect_and_draw_box("apple1.jpg", confidence=0.2)

Observe carefully: we now have one more detection, with a confidence score of 0.56. It was filtered out earlier by the threshold, but now its bounding box shows up as the leftmost detection box in the image.

Well, that’s it for this article. I hope you are excited after getting to know such a simple and amazing library; I know I was when I first found out about it.

Gargeya Sharma

B.Tech in Computer science (4th year)

Specialized in Data Science and Deep learning

Data Scientist Intern at Upswing Cognitive Hospitality Solutions

For more information, check out my GitHub Home Page.