This article was published as a part of the Data Science Blogathon.

The field of deep learning has advanced considerably over the past few decades, because it can efficiently tackle massive datasets and make computer systems capable of solving complex computational problems.

Hidden layers have ushered in a new era, with older techniques proving inefficient, particularly for problems like pattern recognition, object detection, image segmentation, and other image processing tasks. The CNN is one of the most widely deployed deep learning neural networks.


Background of CNNs

CNNs were first developed and deployed around the 1980s. At the time, a CNN could only recognize handwritten digits, and it was used mainly to read zip codes, pin codes, and the like.

Like most A.I. models, a CNN requires a massive amount of data to train. This was one of the biggest problems CNNs faced at the time, and because of it they were used only in the postal industry. Yann LeCun introduced convolutional neural networks in their modern form, trained with backpropagation.

His work built on that of Kunihiko Fukushima, a renowned Japanese scientist who had earlier invented the neocognitron, a very simple neural network used for image identification.

What is CNN?

In deep learning, a convolutional neural network (CNN) is a class of deep neural network that is mostly used for analyzing and recognizing images.

A convolutional neural network uses a special kind of operation known as convolution.

Mathematically, convolution is an operation on two functions that produces a third function, which shows how the shape of one function is modified by the other.
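For finite sequences, the definition above becomes the sum (f * g)[n] = Σ_k f[k] · g[n − k]. The snippet below is a minimal sketch of that sum in plain Python; the function name `convolve_1d` and the sample values are made up for illustration.

```python
def convolve_1d(f, g):
    """Full discrete convolution of two finite sequences."""
    out = [0] * (len(f) + len(g) - 1)
    for i, f_value in enumerate(f):
        for j, g_value in enumerate(g):
            # f[i] * g[j] contributes to output position n = i + j
            out[i + j] += f_value * g_value
    return out


signal = [1, 2, 3, 4]   # illustrative input sequence
kernel = [1, 0, -1]     # illustrative second sequence
result = convolve_1d(signal, kernel)
print(result)  # [1, 2, 2, 2, -3, -4]
```

The output is a third sequence whose shape depends on both inputs, which is the "one function modified by the other" idea in the definition.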

A convolutional neural network (CNN) consists of several layers of artificial neurons. Artificial neurons, loosely modelled on the neuron cells the human brain uses to pass sensory input signals and other responses, are mathematical functions that compute a weighted sum of their inputs and output an activation value.

The behaviour of each CNN neuron is defined by the values of its weights. When fed pixel values, the artificial neurons of a CNN recognize various visual features.

When we feed an input image into a CNN, each of its inner layers generates several activation maps. Activation maps highlight the relevant features of the input image. Each CNN neuron generally takes a patch of pixels as input, multiplies their values (colours) by its weights, adds them up, and passes the sum through its activation function.

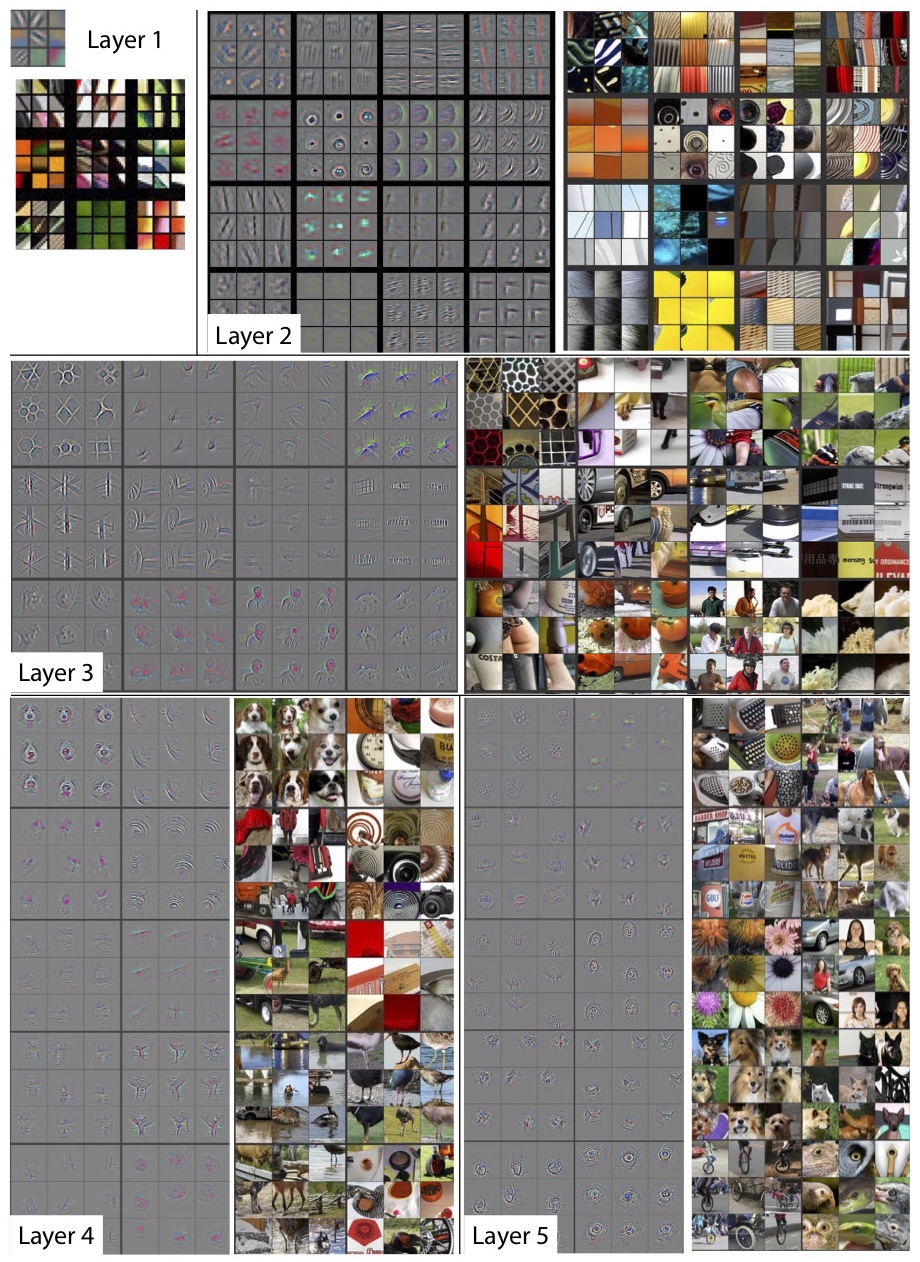
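The multiply, add, and activate steps of a single neuron can be sketched in a few lines. This is only an illustration: the 3x3 patch, the weights, and the choice of ReLU as the activation function are assumptions, not values from a trained network.

```python
def neuron_activation(patch, weights, bias=0.0):
    """Weighted sum of a pixel patch followed by a ReLU activation."""
    total = bias
    for patch_row, weight_row in zip(patch, weights):
        for pixel, weight in zip(patch_row, weight_row):
            total += pixel * weight
    return max(0.0, total)  # ReLU: negative sums become 0


# Illustrative 3x3 patch that gets brighter from left to right
patch = [
    [0.0, 0.5, 1.0],
    [0.0, 0.5, 1.0],
    [0.0, 0.5, 1.0],
]
# Illustrative weights that respond to a dark-to-bright transition
weights = [
    [-1.0, 0.0, 1.0],
    [-1.0, 0.0, 1.0],
    [-1.0, 0.0, 1.0],
]
activation = neuron_activation(patch, weights)
print(activation)  # 3.0
```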

The first (or bottom) layer of the CNN usually recognizes basic features of the input image, such as horizontal, vertical, and diagonal edges.

The output of the first layer is fed as input to the next layer, which in turn extracts more complex features of the input image, such as corners and combinations of edges.

The deeper one moves into the convolutional neural network, the more the layers detect higher-level features such as objects, faces, etc.

CNN’s Basic Architecture

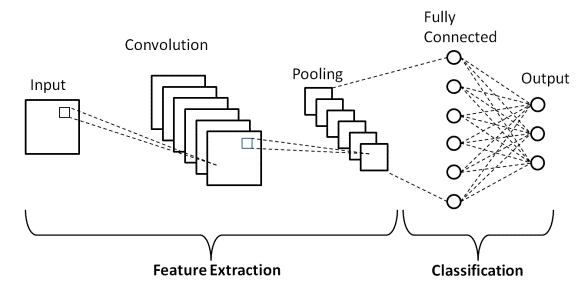

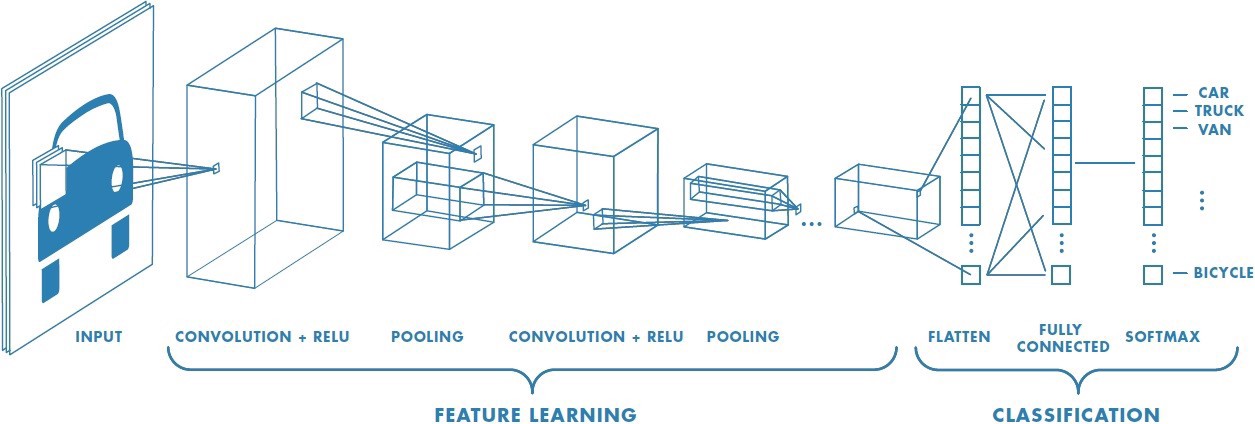

A CNN architecture consists of two key components:

• A convolution tool that separates and identifies the distinct features of an image for analysis, in a process known as Feature Extraction.

• A fully connected layer that takes the output of the convolution process and predicts the image’s class based on the features retrieved earlier.

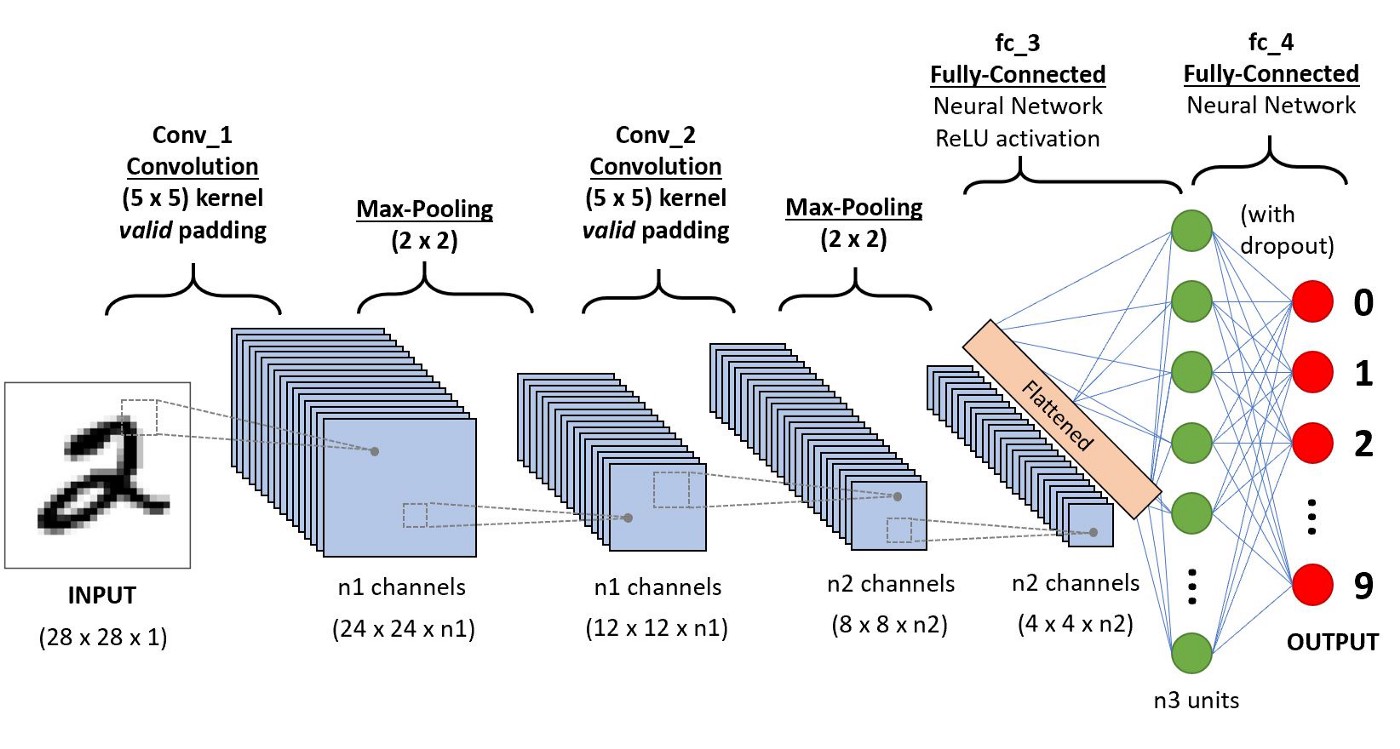

The CNN is made up of three types of layers: convolutional layers, pooling layers, and fully-connected (FC) layers.

Convolution Layers

This is the first layer in the CNN and is responsible for extracting features from the input images. In this layer, the convolution operation is performed between the input image and a filter of a specific size M x M: the filter slides over the image, and at each position the dot product between the filter and the image patch under it is taken.
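As a rough sketch of what this layer computes, the code below slides an M x M filter over a small image with stride 1 and no padding. The function name `conv2d_valid`, the toy image, and the edge filter are illustrative assumptions. Like most deep learning libraries, the sketch does not flip the filter, so strictly speaking it computes a cross-correlation.

```python
def conv2d_valid(image, kernel):
    """Slide an M x M kernel over the image (stride 1, no padding)."""
    m = len(kernel)
    out_height = len(image) - m + 1
    out_width = len(image[0]) - m + 1
    feature_map = []
    for r in range(out_height):
        row = []
        for c in range(out_width):
            total = 0
            for i in range(m):
                for j in range(m):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Toy 4x6 image: dark on the left, bright on the right
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]
# Illustrative 3x3 filter that responds to vertical edges
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
feature_map = conv2d_valid(image, vertical_edge)
print(feature_map)  # [[0, 3, 3, 0], [0, 3, 3, 0]]
```

The feature map is largest where the filter sits across the dark-to-bright boundary and zero over the flat regions. A 4x6 input with a 3x3 filter gives a 2x4 output, that is (4 − 3 + 1) by (6 − 3 + 1).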

Fully Connected Layer

The Fully Connected (FC) layer consists of weights and biases along with the neurons, and it connects the neurons between two different layers. FC layers are usually placed before the output layer and form the last few layers of a CNN architecture.
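In code, a fully connected layer can be sketched as one weighted sum plus a bias per output neuron, with every input connected to every output. The names and numbers below are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
def fully_connected(inputs, weights, biases):
    """Compute one output per neuron: sum(input * weight) + bias."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = bias
        for value, weight in zip(inputs, neuron_weights):
            total += value * weight
        outputs.append(total)
    return outputs


features = [1.0, 2.0, 3.0]      # flattened features from earlier layers
weights = [
    [0.5, 0.0, -0.5],           # weights of output neuron 0
    [1.0, 1.0, 1.0],            # weights of output neuron 1
]
biases = [0.0, -1.0]
scores = fully_connected(features, weights, biases)
print(scores)  # [-1.0, 5.0]
```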

Pooling layer

The pooling layer is responsible for reducing the spatial size of the convolved feature. Reducing the dimensions significantly decreases the computing power required to process the data.

There are two common types of pooling:

1. Average pooling

2. Max pooling

A Pooling Layer is usually applied after a Convolutional Layer. This layer’s major goal is to lower the size of the convolved feature map to reduce computational expenses. This is accomplished by reducing the connections between layers and operating independently on each feature map. There are numerous sorts of Pooling operations, depending on the mechanism utilised.

In Max Pooling, the largest element is taken from each window of the feature map. Average Pooling calculates the average of the elements in an image section of a predefined size. Sum Pooling calculates the total sum of the elements in the predefined section. The Pooling Layer typically serves as a bridge between the Convolutional Layer and the FC Layer.
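The three pooling variants differ only in how each window is reduced to a single number. A minimal sketch, assuming non-overlapping 2x2 windows and a made-up 4x4 feature map (`pool2d` and `mean` are hypothetical helper names):

```python
def pool2d(feature_map, size, reduce_fn):
    """Apply reduce_fn to each non-overlapping size x size window."""
    pooled = []
    for r in range(0, len(feature_map) - size + 1, size):
        row = []
        for c in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[r + i][c + j]
                      for i in range(size) for j in range(size)]
            row.append(reduce_fn(window))
        pooled.append(row)
    return pooled


def mean(values):
    return sum(values) / len(values)


feature_map = [
    [1, 3, 2, 0],
    [5, 7, 4, 2],
    [0, 2, 9, 1],
    [4, 6, 3, 3],
]
max_pooled = pool2d(feature_map, 2, max)   # [[7, 4], [6, 9]]
avg_pooled = pool2d(feature_map, 2, mean)  # [[4.0, 2.0], [3.0, 4.0]]
sum_pooled = pool2d(feature_map, 2, sum)   # [[16, 8], [12, 16]]
print(max_pooled, avg_pooled, sum_pooled)
```

In every case the 4x4 map shrinks to 2x2, which is where the savings in computation come from.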

Dropout

To avoid overfitting (when a model performs well on training data but not on new data), a dropout layer is used, in which a few neurons are randomly dropped from the neural network during the training phase, so that each training step effectively uses a smaller model.
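A minimal sketch of the idea, assuming the common "inverted dropout" formulation in which the surviving activations are scaled up during training so that nothing needs to change at prediction time. The function name, the rate of 0.5, and the seed are illustrative choices.

```python
import random


def dropout(activations, rate, training=True, rng=random):
    """Randomly zero activations during training (inverted dropout)."""
    if not training or rate == 0.0:
        return list(activations)  # at prediction time nothing is dropped
    keep_prob = 1.0 - rate
    # Keep each activation with probability keep_prob and rescale it,
    # otherwise replace it with 0.0
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


rng = random.Random(0)  # seeded so the example is repeatable
activations = [1.0, 2.0, 3.0, 4.0]
train_out = dropout(activations, rate=0.5, rng=rng)
test_out = dropout(activations, rate=0.5, training=False)
print(train_out)
print(test_out)
```

During training each value is either zeroed or doubled (divided by 0.5); with `training=False` the activations pass through unchanged.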

Activation Functions

Activation functions are used to learn and approximate any kind of continuous and complex relationship between the variables of the network.

They give the network non-linearity. ReLU, Softmax, and tanh are some of the most commonly used activation functions.
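The three functions named above are short enough to write out directly. This is a plain-Python sketch for illustration; the input scores are made up.

```python
import math


def relu(x):
    """Pass positive values through, clamp negatives to 0."""
    return max(0.0, x)


def tanh(x):
    """Squash any real number into the range (-1, 1)."""
    return math.tanh(x)


def softmax(scores):
    """Turn a list of scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])
print(relu(-3.0), relu(2.5), tanh(0.0))
print(probs)
```

Softmax is typically placed on the output layer of a classifier, since its outputs can be read as class probabilities, while ReLU and tanh are applied to individual neurons in the hidden layers.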

Training the convolutional neural network

The process of adjusting the value of the weights is defined as the “training” of the neural network.

The CNN starts with random weights. During training, the network is fed a large dataset of images labelled with their corresponding classes (cat, dog, horse, etc.). The CNN processes each image with its current, initially random, weights and then compares its output with the class label of the input image.

If the output does not match the class label (which happens most of the time at the beginning of the training process), the network makes a small adjustment to the weights of its neurons so that the output moves closer to the correct class label.

The corrections to the weights are made through a technique known as backpropagation. Backpropagation works out how much each weight contributed to the error, which makes it easier to adjust the weights for better accuracy. Every full pass over the training image dataset is called an “epoch.”

The CNN goes through many epochs during training, adjusting its weights by small amounts each time.

After each epoch, the neural network becomes a bit better at classifying the training images. As the CNN improves, the adjustments made to the weights become smaller and smaller.

After training the CNN, we use a test dataset to verify its accuracy. The test dataset is a set of labelled images that were not included in the training process. Each image is fed to the CNN, and the output is compared to the actual class label of the test image. Essentially, the test dataset evaluates the prediction performance of the CNN.
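The same loop of random initial weights, a prediction, a comparison with the label, and a small correction can be sketched on something much smaller than a CNN. The example below is an assumption-laden toy: a single sigmoid neuron learning the logical OR of two inputs, where backpropagation reduces to one gradient step per sample. All names, the learning rate, and the number of epochs are illustrative.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train(samples, labels, epochs=200, learning_rate=0.5, seed=0):
    rng = random.Random(seed)
    weights = [rng.uniform(-0.5, 0.5) for _ in samples[0]]  # random start
    bias = 0.0
    history = []  # average loss per epoch
    for _ in range(epochs):
        epoch_loss = 0.0
        for x, y in zip(samples, labels):
            pred = predict(weights, bias, x)
            # Cross-entropy loss between the prediction and the label
            epoch_loss -= y * math.log(pred) + (1 - y) * math.log(1 - pred)
            error = pred - y  # gradient of the loss w.r.t. the weighted sum
            weights = [w - learning_rate * error * xi
                       for w, xi in zip(weights, x)]
            bias -= learning_rate * error
        history.append(epoch_loss / len(samples))
    return weights, bias, history


samples = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
labels = [0, 1, 1, 1]  # logical OR, a toy stand-in for class labels
weights, bias, history = train(samples, labels)
predictions = [1 if predict(weights, bias, x) >= 0.5 else 0 for x in samples]
print(history[0], history[-1])
print(predictions)
```

The average loss in `history` should fall from the first epoch to the last, mirroring the description above: large corrections early on, smaller ones as the model improves.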

If a CNN’s accuracy is good on its training data but bad on the test data, it is said to be “overfitting.” This often happens when the training dataset is too small.
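One simple way to spot this is to compute accuracy on both datasets and look at the gap. The predictions and labels below are invented purely to show the calculation; they are not results from any model.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)


# Made-up predictions and labels, for illustration only
train_accuracy = accuracy([0, 1, 1, 0, 1], [0, 1, 1, 0, 1])
test_accuracy = accuracy([0, 1, 0, 0, 1], [1, 1, 1, 0, 0])
gap = train_accuracy - test_accuracy
print(train_accuracy, test_accuracy)  # 1.0 0.4
```

A large positive gap between training and test accuracy, as in this toy case, is the pattern described above as overfitting.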

Limitations

CNNs use massive computing power and resources to recognize visual patterns and trends, including ones that are very hard for the human eye to pick out.

Training a convolutional neural network usually takes a very long time, especially with large image datasets.

Training generally requires specialized hardware (like a GPU).

Python Code Implementation for Classification

Importing Relevant Libraries

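import numpy as np
import matplotlib.image as mpimg
import matplotlib.pyplot as plt
import seaborn as sn  # used later for the confusion-matrix heatmap
import tensorflow as tf
from tensorflow import keras
%matplotlib inline
tf.compat.v1.set_random_seed(2019)  # fix the random seed so runs are repeatable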

Loading MNIST Dataset

(X_train,Y_train),(X_test,Y_test) = keras.datasets.mnist.load_data()

Scaling The Data

X_train = X_train / 255  # scale pixel values from [0, 255] to [0, 1]
X_test = X_test / 255

X_train_flattened = X_train.reshape(len(X_train), 28*28)  # flatten each 28x28 image into 784 values
X_test_flattened = X_test.reshape(len(X_test), 28*28)

Designing The Neural Network

model = keras.Sequential([
    keras.layers.Dense(10, input_shape=(784,), activation='sigmoid')  # single dense layer, 10 outputs (one per digit); no convolutional layers
])
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])
model.fit(X_train_flattened, Y_train, epochs=5)

Output:

Epoch 1/5
1875/1875 [==============================] - 8s 4ms/step - loss: 0.7187 - accuracy: 0.8141
Epoch 2/5
1875/1875 [==============================] - 6s 3ms/step - loss: 0.3122 - accuracy: 0.9128
Epoch 3/5
1875/1875 [==============================] - 6s 3ms/step - loss: 0.2908 - accuracy: 0.9187
Epoch 4/5
1875/1875 [==============================] - 6s 3ms/step - loss: 0.2783 - accuracy: 0.9229
Epoch 5/5
1875/1875 [==============================] - 6s 3ms/step - loss: 0.2643 - accuracy: 0.9262

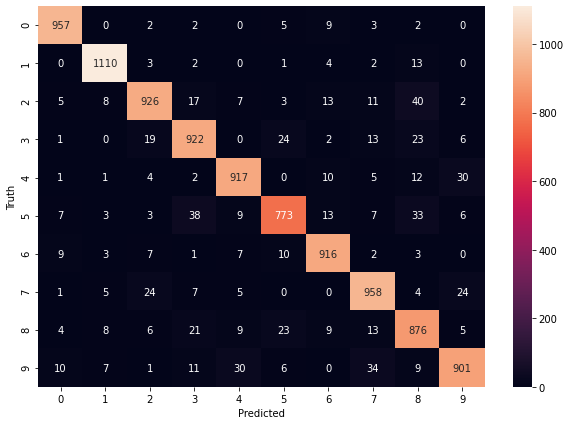

Confusion Matrix for visualization of predictions

Y_predict = model.predict(X_test_flattened)
Y_predict_labels = [np.argmax(i) for i in Y_predict]  # most probable digit for each test image
cm = tf.math.confusion_matrix(labels=Y_test, predictions=Y_predict_labels)
plt.figure(figsize=(10, 7))
sn.heatmap(cm, annot=True, fmt='d')
plt.xlabel('Predicted')
plt.ylabel('Truth')

Output
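To make the heatmap easier to read, here is a small plain-Python sketch of the counting behind a confusion matrix: rows are indexed by the true label and columns by the predicted label, the same layout `tf.math.confusion_matrix` uses. The scores and labels are made up, and the helper names are hypothetical.

```python
def argmax(values):
    """Index of the largest value, i.e. the predicted class."""
    return max(range(len(values)), key=lambda i: values[i])


def confusion_matrix(labels, predictions, num_classes):
    """matrix[t][p] counts samples with true class t predicted as p."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for truth, predicted in zip(labels, predictions):
        matrix[truth][predicted] += 1
    return matrix


# Made-up class scores for four samples and three classes
scores = [
    [0.1, 0.7, 0.2],
    [0.8, 0.1, 0.1],
    [0.3, 0.3, 0.4],
    [0.2, 0.5, 0.3],
]
truth = [1, 0, 2, 2]
predicted = [argmax(row) for row in scores]
cm = confusion_matrix(truth, predicted, num_classes=3)
print(predicted)  # [1, 0, 2, 1]
print(cm)         # [[1, 0, 0], [0, 1, 0], [0, 1, 1]]
```

Correct predictions land on the diagonal. The single off-diagonal entry in row 2, column 1 is the sample of class 2 that was predicted as class 1.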

Frequently Asked Questions

A. A Convolutional Neural Network (CNN) is a deep learning architecture designed for image analysis and recognition. It employs specialized layers to automatically learn features from images, capturing patterns of increasing complexity. These features are then used to classify objects or scenes. CNNs have revolutionized computer vision tasks, exhibiting high accuracy and efficiency in tasks like image classification, object detection, and image generation.

A. The fundamental principle of Convolutional Neural Networks (CNNs) is hierarchical feature learning. CNNs process input data, often images, by applying a series of convolutional and pooling layers. Convolutional layers employ small filters to convolve across the input, detecting spatial patterns. Pooling layers downsample the output, retaining important information. This enables the network to progressively learn hierarchical features, from simple edges to complex object parts. The learned features are then used for classification or other tasks. CNNs’ ability to automatically learn and abstract features from data has made them exceptionally effective in image analysis, with applications spanning various fields.

Conclusion

So in this article, we covered a basic introduction to CNN architecture and a basic implementation for a practical scenario, classification. We also covered other key terminology related to CNNs, like pooling, activation functions, dropout, etc. Finally, we covered the limitations of CNNs and how a CNN is trained.

With this, I finish this blog.

Hello Everyone, Namaste

My name is Pranshu Sharma and I am a Data Science Enthusiast

Thank you so much for taking your precious time to read this blog. Feel free to point out any mistakes (I’m a learner after all) and provide feedback or leave a comment.

Dhanyvaad!!


The media shown in this article is not owned by Analytics Vidhya and are used at the Author’s discretion