Introduction

The human brain is a complex system made of billions of neurons, and every discovery about it opens up new mysteries. Attempts to mimic its structure and function led to a field of study called Deep Learning. Artificial Neural Networks, also known as Neural Networks, are inspired by the neural networks of the human brain and are a component of Artificial Intelligence. With hundreds of applications in day-to-day life, the field has grown rapidly in the last few years. From spell check and machine translation to facial recognition, neural networks find applications across the real world.

Structure of Artificial Neural Network vs Biological Neural Network

Intended to mimic the neural networks of the human brain, artificial neural networks have a structure similar to that of biological neural networks. The human brain is a highly complex, nonlinear network of billions of densely connected neurons with trillions of synapses. A biological neuron mainly consists of dendrites, an axon, a cell body (soma) with its nucleus, and synapses. Dendrites receive input from other neurons, and the axon transmits the output from one neuron to the next.

The molecular and biological machinery of neural networks is based on electrochemical signaling. Neurons fire electrical impulses only when certain conditions are met. Some of the neural structure of the brain is present at birth, while other parts are developed through learning, especially in early stages of life to adapt to the environment (new inputs).

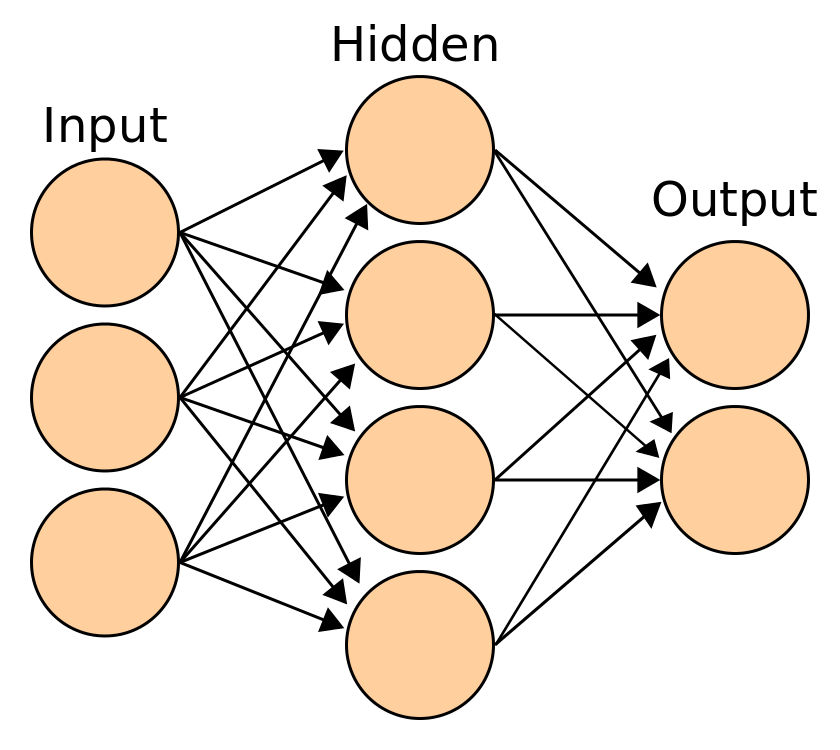

Artificial Neural Networks are made up of layers of connected units called neurons, arranged as an input layer, an output layer and, optionally, one or more hidden layers in between. A single-layer neural network is called a perceptron. An artificial neuron is made up of input units (receptors), connection weights, a summing function, a computation (activation) step and output units (effectors).

Even though biological neurons are slower than silicon logic gates, their massive interconnection makes up for the slow rate. In an artificial network, the weight of a connection represents the strength of that connection between two neurons. Weights are typically initialized randomly and then adjusted by an optimization algorithm so that the network maps combinations of inputs to the desired output.
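The random initialization and step-by-step adjustment of weights can be illustrated with a small sketch. The article does not name a specific optimization algorithm, so the example below assumes the classic perceptron learning rule and a toy AND-gate dataset. The function names, learning rate and seed are illustrative choices, not part of any library.

```python
import random

def predict(weights, bias, inputs):
    """Weighted sum of the inputs plus a bias, passed through a 0/1 threshold."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total >= 0 else 0

def train_perceptron(samples, learning_rate=0.1, max_epochs=1000, seed=0):
    """Start from random weights and nudge them after every wrong prediction."""
    rng = random.Random(seed)
    n_inputs = len(samples[0][0])
    weights = [rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
    bias = rng.uniform(-0.5, 0.5)
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:  # every sample classified correctly: stop early
            break
    return weights, bias

# Toy dataset (assumed for illustration): the logical AND of two inputs.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
predictions = [predict(weights, bias, inputs) for inputs, _ in AND_SAMPLES]
print(weights, bias, predictions)
```

Because AND is linearly separable, this rule settles on weights that classify all four samples correctly. Networks with hidden layers are usually trained with gradient-based methods instead.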

Neural network architectures come in many types, including: Perceptron, Feed Forward Neural Network, Multilayer Perceptron, Convolutional Neural Network, Radial Basis Function Neural Network, Recurrent Neural Network, LSTM (Long Short-Term Memory), Sequence to Sequence models and Modular Neural Network. Learning can be supervised, unsupervised or reinforcement-based.

How does the Biological Neural Network work?

As mentioned above, the dendrites are nerve fibers that carry electrical signals to the cell body. The cell body then computes a nonlinear function of its inputs, and the cell fires only if it receives sufficient input. The axon, a single nerve fiber, then carries the output of that function to other neurons.

A dendrite of another neuron receives this output as its input, and the process continues. The point of contact between the axon of one neuron and a dendrite of another is called a synapse. It regulates a chemical connection whose strength (weight) affects the input to the receiving cell. Each neuron has several dendrites and thus receives input from many neighboring neurons.

Every neuron has one axon, which passes its output on as input to the dendrites of the next neurons. Owing to their large number of synapses, biological neural networks can tolerate ambiguity in the data.

The working mechanism of Artificial Neural Network

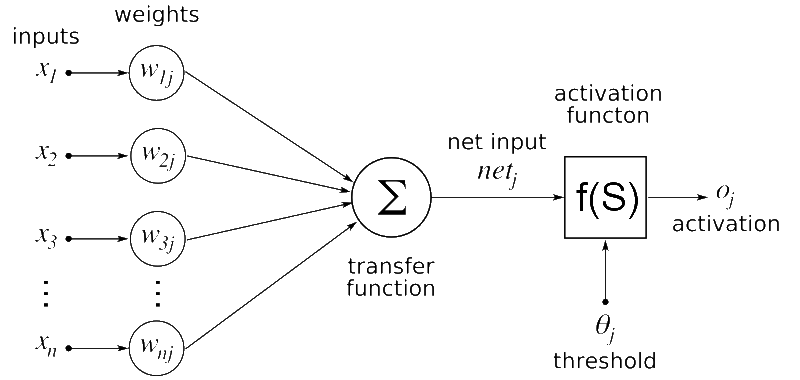

Artificial Neural Networks work in a way similar to that of their biological inspiration. They can be considered weighted directed graphs in which the neurons are the nodes and the connections between neurons are the weighted edges. The processing element of a neuron receives many signals, both from other neurons and as input signals from the external world.
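The weighted directed graph view can be made concrete with a plain dictionary. The sketch below is only an illustration: the node names (`x1`, `h1`, `y`, and so on) and the weight values are hypothetical, chosen to show how edges and weights could be stored and queried.

```python
# Hypothetical network with 2 inputs, 2 hidden neurons and 1 output,
# stored as an adjacency map: edges[source][target] = connection weight.
edges = {
    "x1": {"h1": 0.5, "h2": -1.0},
    "x2": {"h1": 0.8, "h2": 0.3},
    "h1": {"y": 1.2},
    "h2": {"y": -0.7},
    "y": {},
}

def incoming(node):
    """Return {source: weight} for every edge that points at `node`."""
    return {src: targets[node] for src, targets in edges.items() if node in targets}

def weighted_input(node, signals):
    """Sum the signals arriving at `node`, each scaled by its edge weight."""
    return sum(weight * signals[src] for src, weight in incoming(node).items())

# Signals coming from the external world into the two input nodes.
signals = {"x1": 1.0, "x2": 2.0}

print(incoming("h1"))                 # edges that feed the hidden neuron h1
print(weighted_input("h1", signals))  # 0.5 * 1.0 + 0.8 * 2.0
```

Keeping the weights on the edges rather than on the nodes mirrors the graph description: a neuron is just a node, and everything the network has learned lives in the edge weights.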

Signals are sometimes modified at the receiving synapse, and the weighted inputs are summed at the processing element. If the sum crosses a threshold, the neuron's output goes as input to other neurons (or as output to the external world), and the process repeats.
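The sum-then-threshold behaviour, and the way one neuron's output becomes another neuron's input, can be sketched in a few lines. The weights and thresholds below are hand-picked assumptions that make three threshold neurons compute XOR. They are illustrative only and are not taken from the figures in this article.

```python
def fires(inputs, weights, threshold):
    """Sum the weighted inputs; output 1 only if the sum reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0

def xor_network(a, b):
    """Two first-stage neurons feed a third one, so the process repeats once."""
    h_or = fires([a, b], weights=[1, 1], threshold=1)    # fires if a OR b
    h_and = fires([a, b], weights=[1, 1], threshold=2)   # fires if a AND b
    # The outputs of the first two neurons are the inputs of the last one.
    return fires([h_or, h_and], weights=[1, -1], threshold=1)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

A single threshold neuron cannot represent XOR, which is why the example chains neurons together instead of using just one.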

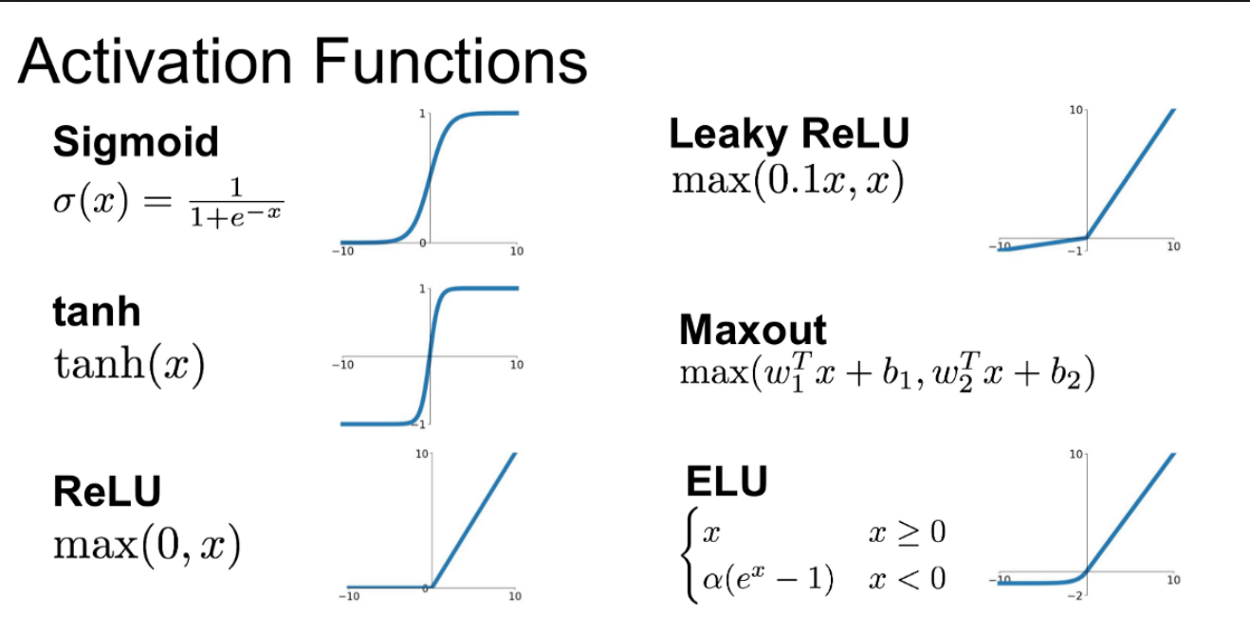

Usually, the weights represent the interconnection strength between the neurons. The activation function is a transfer function that shapes the neuron's output to suit the problem at hand. For example, a binary classifier needs an output of zero or one, so a sigmoid function, whose output lies between 0 and 1, could be used as the activation function.
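As a minimal sketch of the binary-classifier case, the snippet below passes a weighted sum through a sigmoid and turns the result into a 0 or 1 label. The weights, the bias and the 0.5 cutoff are assumed values for illustration. A real classifier would learn the weights from data.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1), avoiding overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # safe: z is negative, so exp(z) cannot overflow
    return e / (1.0 + e)

def classify(inputs, weights, bias, cutoff=0.5):
    """Return (score, label): the sigmoid score and the resulting 0/1 class."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    score = sigmoid(z)
    return score, int(score >= cutoff)

# Hypothetical weights and bias, chosen only to show both outcomes.
weights, bias = [1.5, -0.5], -1.0
print(classify([2.0, 1.0], weights, bias))  # weighted sum is 1.5, label 1
print(classify([0.0, 1.0], weights, bias))  # weighted sum is -1.5, label 0
```

The sigmoid score can be read as a confidence between 0 and 1, and the cutoff decides where that confidence becomes a class label.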

There are many activation functions. To name a few: the identity (linear) function, the logistic (binary sigmoid) function, the bipolar step function, the bipolar sigmoid, the hyperbolic tangent and ReLU. An artificial neural network is typically designed and trained for a particular task, such as binary classification, multi-class classification or pattern recognition. In both biological and artificial neural networks, the weights of the synaptic connections adjust during learning.
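Several of the activation functions named above are short enough to write out directly. The definitions below follow the usual textbook forms. The function names are illustrative, and the bipolar function is assumed to mean a step that outputs -1 or +1.

```python
import math

def identity(z):
    return z                              # output equals the summed input

def bipolar_step(z):
    return 1 if z >= 0 else -1            # hard threshold with outputs -1 / +1

def binary_sigmoid(z):
    # Output in (0, 1). math.exp overflows for z below roughly -709.
    return 1.0 / (1.0 + math.exp(-z))

def bipolar_sigmoid(z):
    return 2.0 * binary_sigmoid(z) - 1.0  # output in (-1, 1)

def hyperbolic_tangent(z):
    return math.tanh(z)                   # output in (-1, 1)

def relu(z):
    return max(0.0, z)                    # zero for negative input, else z

ACTIVATIONS = [identity, bipolar_step, binary_sigmoid,
               bipolar_sigmoid, hyperbolic_tangent, relu]

# Compare the functions on a few sample inputs.
for z in (-2.0, 0.0, 2.0):
    row = ", ".join(f"{f.__name__}={f(z):.3f}" for f in ACTIVATIONS)
    print(f"z={z:+.1f}: {row}")
```

The bipolar sigmoid is the hyperbolic tangent with its input rescaled, since `bipolar_sigmoid(z)` equals `math.tanh(z / 2)`.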

While the biological neural network contains about 86 billion neurons and trillions of synapses, artificial neural networks are usually far smaller in terms of neuron count. Several other factors, such as the number of layers and the data involved, must also be considered when comparing their capabilities. Artificial neural networks require a lot of computational power and give off a large amount of heat compared with biological neural networks.

Each mystery that the biological neural network reveals helps improve the artificial neural network. The more that is revealed, the more there is left to untangle about the brain. Even though the artificial neural network is inspired by its biological counterpart, its workings are more mathematics than biology.

The media shown in this article are not owned by Analytics Vidhya and are used at the author's discretion.