This article was published as a part of the Data Science Blogathon.

Introduction

Have you ever wondered how the human brain functions? There’s a good chance you learned about it in school. An artificial neural network (ANN) is loosely modelled on the way neurons work in the human nervous system. In this article, we’ll take a quick look at ANNs.

Artificial Neural Networks (ANNs) are among the most widely used Machine Learning techniques today. The first neural network models date back to the 1940s and 1950s, but thanks to recent increases in computing power they have become extremely popular and are now found almost everywhere. Neural networks power many of the intelligent features that keep you engaged in the programs you use.

An artificial neural network is a computational network inspired by the biological neural networks that make up the human brain. Like the human brain, an artificial neural network has neurons that are connected to each other across the different layers of the network.

In the field of machine learning, artificial neural networks are among the most important learning models. They can accomplish many of the tasks that the human brain can, although they work quite differently from a real human brain.

What is an Artificial Neural Network?

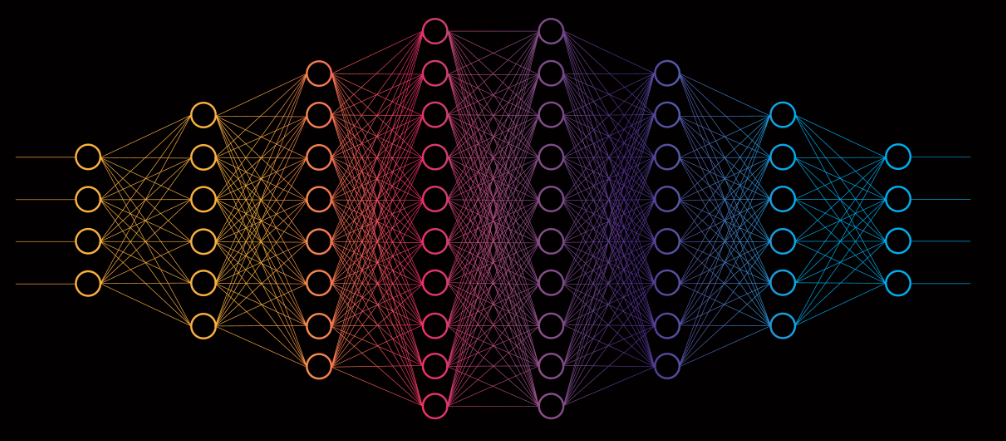

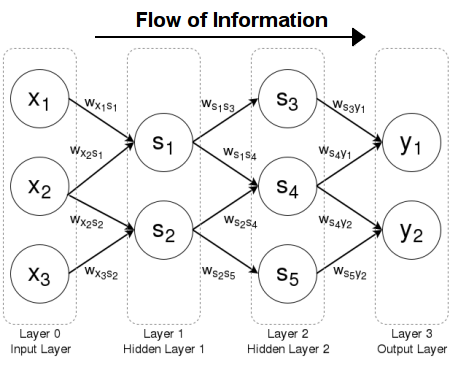

The term “Artificial Neural Network” is derived from biological neural networks, which define the structure of the human brain. Artificial neural networks, like the human brain, have neurons in multiple layers that are connected to one another. These neurons are referred to as nodes.

In the analogy between the two, the dendrites of a biological neuron correspond to the inputs of an ANN, the cell nucleus to a node, the synapses to the weights, and the axon to the output.

ANNs are nonlinear statistical models that capture complex relationships between inputs and outputs in order to uncover patterns. Artificial neural networks are used for a range of applications, including image recognition, speech recognition, machine translation, and medical diagnosis.

The fact that an ANN learns from sample data sets is a significant advantage. The most typical application of ANNs is approximating arbitrary functions. Such tools offer a cost-effective way to arrive at solutions that describe the underlying distribution of the data. An ANN can also produce an output based on a sample of the data rather than the complete dataset. Because of their strong predictive capabilities, ANNs can be used to improve existing data analysis methods.

Artificial Neural Networks Architecture

An ANN is made up of layers of nodes: an input layer, one or more hidden layers, and an output layer. Each node, or artificial neuron, is connected to others and has its own weights and threshold. When a node’s output exceeds its threshold, the node is activated and sends data to the next layer of the network. Otherwise, no data is passed on to the next layer.

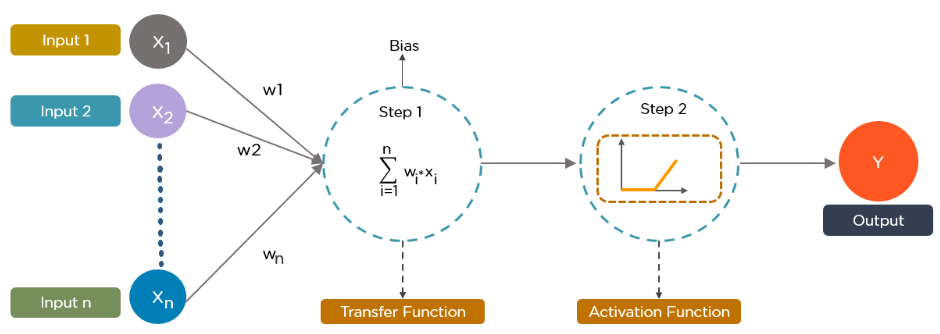
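The layered, threshold-based structure described above can be sketched in a few lines of plain Python. This is only an illustration: the function names (`step`, `tiny_network`), the weights, and the thresholds are hypothetical values picked by hand so that the network reproduces the XOR truth table. Nothing here is learned.

```python
def step(z, threshold=0.0):
    # A node "fires" (outputs 1) only when its weighted input exceeds the threshold.
    return 1 if z > threshold else 0


def tiny_network(x1, x2):
    # Hidden layer: two threshold nodes with hand-picked (hypothetical) weights.
    h_or = step(1.0 * x1 + 1.0 * x2, threshold=0.5)   # fires if at least one input is 1
    h_and = step(1.0 * x1 + 1.0 * x2, threshold=1.5)  # fires only if both inputs are 1
    # Output layer: fires when the OR node fired but the AND node did not.
    return step(1.0 * h_or - 1.0 * h_and, threshold=0.5)


# Evaluate the network on every combination of binary inputs.
results = {(a, b): tiny_network(a, b) for a in (0, 1) for b in (0, 1)}
print(results)
```

Each hidden node passes a signal forward only when its weighted input crosses its threshold, which is the behaviour the architecture paragraph describes.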

The performance of a neural network is influenced by a number of parameters and hyperparameters, and the output of an ANN is largely determined by them. Weights and biases are parameters learned during training, while the learning rate, batch size, and similar settings are hyperparameters chosen beforehand. Each connection into a node carries its own weight.

A weight is assigned to each connection in the network. A transfer function computes the weighted sum of a node’s inputs and adds the bias. This weighted sum is then passed to an activation function, which produces the node’s output and determines whether, and how strongly, the node fires. Only the signals from nodes that fire are passed on towards the output layer. Several activation functions are available, and the choice depends on the type of task. Sigmoid, ReLU, Softmax, and tanh are some of the most commonly used activation functions in Artificial Neural Networks.
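To make the weighted sum and the activation step concrete, here is a minimal NumPy sketch of a single neuron. The input values, weights, and bias are made-up numbers for illustration, and the helper names (`neuron_output`, `sigmoid`, `relu`, `softmax`) are our own rather than part of any library.

```python
import numpy as np


def sigmoid(z):
    # Squashes any real number into the range (0, 1).
    return 1.0 / (1.0 + np.exp(-z))


def relu(z):
    # Passes positive values through unchanged and clips negatives to 0.
    return np.maximum(0.0, z)


def softmax(z):
    # Turns a vector of scores into values that sum to 1.
    # Subtracting the max keeps the exponentials numerically stable.
    e = np.exp(z - np.max(z))
    return e / e.sum()


def neuron_output(inputs, weights, bias, activation):
    # Transfer function: weighted sum of the inputs plus the bias.
    z = np.dot(weights, inputs) + bias
    # Activation function: turns the weighted sum into the node's output.
    return activation(z)


# Hypothetical values chosen only for illustration.
inputs = np.array([0.5, -1.0, 2.0])
weights = np.array([0.4, 0.3, -0.2])
bias = 0.1

weighted_sum = np.dot(weights, inputs) + bias  # 0.2 - 0.3 - 0.4 + 0.1 = -0.4
print("weighted sum:", weighted_sum)
print("sigmoid:", neuron_output(inputs, weights, bias, sigmoid))
print("relu:", neuron_output(inputs, weights, bias, relu))
print("tanh:", neuron_output(inputs, weights, bias, np.tanh))
print("softmax over three scores:", softmax(np.array([1.0, 2.0, 3.0])))
```

With a negative weighted sum, ReLU outputs zero (the node does not fire), while sigmoid and tanh still produce small non-zero values. This is one reason the choice of activation function matters.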

How Artificial Neural Networks Work

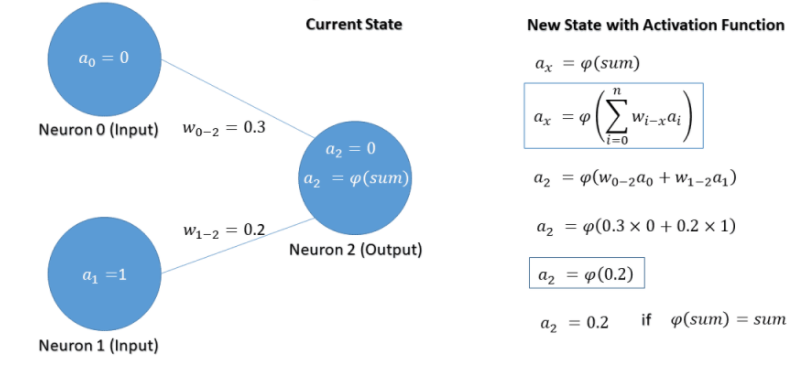

A neuron is essentially a node with numerous inputs and one output, while a neural network is made up of many interconnected neurons. To do their job, neural networks must first go through a ‘learning phase’, in which they learn to associate incoming signals with the correct outgoing signals. They are then put to work, receiving input data and generating output signals based on what they have learned.

The input nodes take in the information in numerical form. Each node holds an activation value, represented by a number: the higher the number, the stronger the activation. The activation value is passed on to the next node according to the connection weights and the activation function. Each node computes the weighted sum of its inputs (the transfer function) and then applies its activation function to that sum. Based on the result, the neuron decides whether or not to pass the signal on. How far a signal travels is determined by the weights, which the ANN adjusts during training.

The activation travels through the network until it reaches the output nodes. The output layer presents the result in an understandable form. The network compares the actual output with the expected output using a cost function, which measures the discrepancy between the predicted and the true values. The lower the value of the cost function, the closer the result is to the desired one.
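The article does not name a specific cost function, so as one common choice, the sketch below uses the mean squared error. The function name `mse_cost` and the sample predictions are hypothetical and only serve to show that a closer prediction yields a lower cost.

```python
import numpy as np


def mse_cost(predicted, expected):
    # Mean squared error: average of the squared differences
    # between the network's outputs and the expected outputs.
    predicted = np.asarray(predicted, dtype=float)
    expected = np.asarray(expected, dtype=float)
    return float(np.mean((predicted - expected) ** 2))


# Hypothetical expected outputs and two sets of network outputs.
expected = [0.0, 1.0, 1.0, 0.0]
close_guess = [0.1, 0.9, 0.8, 0.2]
poor_guess = [0.6, 0.4, 0.5, 0.7]

cost_close = mse_cost(close_guess, expected)
cost_poor = mse_cost(poor_guess, expected)
print("cost of the close guess:", cost_close)
print("cost of the poor guess:", cost_poor)
```

The close guess produces the smaller cost, which is the signal the network uses during training to judge whether a change to its weights was an improvement.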

Training a network to minimize the cost function involves two phases that work together:

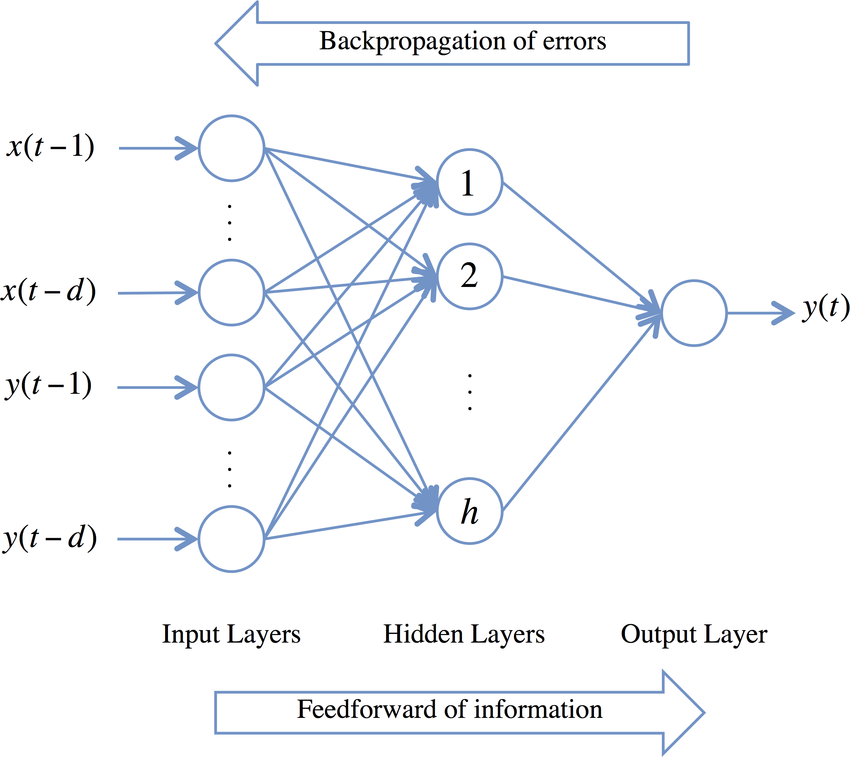

- Forward Propagation: The data enters the input layer and travels across the network to produce an output value. That value is then compared with the expected result. The next stage, calculating the errors and transmitting them backwards, is the job of backpropagation.

- Back Propagation: Backpropagation is at the heart of neural network training and is the most important way for neural networks to learn. After the forward pass, the cost function compares the output with the intended output. If the value of the cost function is high, the error is sent back through the network, and the network learns to reduce the cost by modifying its weights. Because the algorithm is structured, it determines how much each weight is responsible for the error, so all the weights can be adjusted simultaneously. When the weights are properly adjusted, the error rate falls and the model becomes more reliable.
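The interplay of the two phases can be sketched as a small training loop in NumPy. This is a minimal illustration under several assumptions of our own: one hidden layer with three sigmoid nodes, a mean squared error cost, plain gradient descent with a learning rate of 0.5, and the XOR truth table as data. It is not meant as a definitive implementation, and it makes no claim about how well the network ends up solving the task.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


# Toy data: the XOR truth table (4 samples, 2 inputs, 1 output).
x = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

# Assumed architecture: 2 inputs -> 3 hidden nodes -> 1 output.
rng = np.random.default_rng(0)
w1 = rng.normal(size=(2, 3))
b1 = np.zeros((1, 3))
w2 = rng.normal(size=(3, 1))
b2 = np.zeros((1, 1))
learning_rate = 0.5  # assumed value

losses = []
for step in range(2000):
    # Forward propagation: inputs flow through the layers to the output.
    hidden = sigmoid(x @ w1 + b1)
    output = sigmoid(hidden @ w2 + b2)

    # Cost: mean squared error between output and expected output.
    error = output - y
    losses.append(float(np.mean(error ** 2)))

    # Back propagation: apply the chain rule from the output layer backwards.
    grad_z2 = (2.0 / len(x)) * error * output * (1.0 - output)
    grad_w2 = hidden.T @ grad_z2
    grad_b2 = grad_z2.sum(axis=0, keepdims=True)
    grad_z1 = (grad_z2 @ w2.T) * hidden * (1.0 - hidden)
    grad_w1 = x.T @ grad_z1
    grad_b1 = grad_z1.sum(axis=0, keepdims=True)

    # Update all weights simultaneously, moving against the gradient.
    w1 -= learning_rate * grad_w1
    b1 -= learning_rate * grad_b1
    w2 -= learning_rate * grad_w2
    b2 -= learning_rate * grad_b2

print("initial cost:", losses[0])
print("final cost:", losses[-1])
```

Each gradient tells the loop how much a given weight contributed to the error, which is what allows every weight to be adjusted in the same step.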

Types of Artificial Neural Network

There are two important types of ANNs –

1) FeedForward Neural Network:

In feedforward ANNs, information flows in one direction only: from the input layer through the hidden layer(s) to the output layer. There are no feedback loops. These neural networks are commonly employed in supervised learning for tasks like classification and image recognition. We use them when the data is not sequential. Convolutional neural networks (CNNs) are a well-known kind of feedforward network.

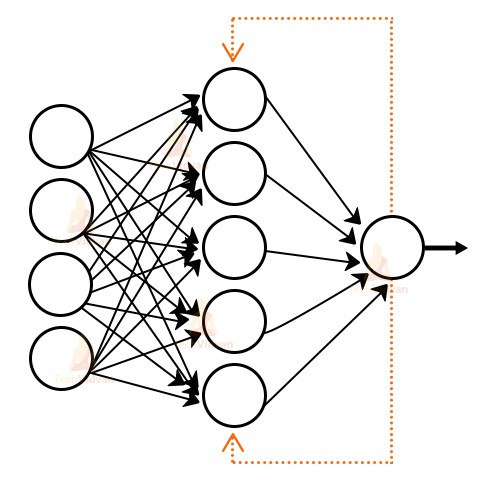

2) Feedback Neural Network:

Feedback ANNs contain feedback loops. Such networks, of which recurrent neural networks (RNNs) are the best-known example, can retain a memory of earlier inputs. They are best used in situations where the data is sequential or time-dependent.
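The difference between the two types can be illustrated with a small NumPy sketch. The layer sizes, the random weights, and the function names (`feedforward_step`, `recurrent_step`) are assumptions made for this example. The recurrent update shown is the simple textbook form, in which the previous hidden state is fed back in alongside the current input.

```python
import numpy as np


def feedforward_step(x, w_in, b):
    # The output depends only on the current input.
    return np.tanh(w_in @ x + b)


def recurrent_step(x, h_prev, w_in, w_rec, b):
    # The previous hidden state is fed back in, so the output
    # depends on the current input and on what came before.
    return np.tanh(w_in @ x + w_rec @ h_prev + b)


# Hypothetical sizes: 2 input features, 3 hidden nodes.
rng = np.random.default_rng(0)
w_in = rng.normal(size=(3, 2))
w_rec = rng.normal(size=(3, 3))
b = np.zeros(3)

# A short sequence in which the first and last inputs are identical.
sequence = [np.array([1.0, 0.0]), np.array([0.0, 1.0]), np.array([1.0, 0.0])]

h = np.zeros(3)  # the recurrent network starts with an empty memory
recurrent_states = []
for x_t in sequence:
    h = recurrent_step(x_t, h, w_in, w_rec, b)
    recurrent_states.append(h)

feedforward_outputs = [feedforward_step(x_t, w_in, b) for x_t in sequence]

print("feedforward, steps 1 and 3:", feedforward_outputs[0], feedforward_outputs[2])
print("recurrent, steps 1 and 3:", recurrent_states[0], recurrent_states[2])
```

The feedforward layer gives the same output for the identical first and third inputs, whereas the recurrent layer gives different outputs because its state carries information from the earlier steps.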

ANN Learning Techniques

- Supervised Learning: In this learning method, the user trains the model with labelled data, meaning that the data has already been tagged with the correct answers. Learning that takes place in the presence of a supervisor is referred to as supervised learning.

- Unsupervised Learning: In this learning method, the model does not require supervision. It usually deals with data that hasn’t been labelled. The model is left to categorize the data on its own, organizing it based on similarities and patterns without any prior training on labelled examples.

- Reinforcement Learning: The correct output value is not given in this case, but the network receives feedback indicating whether its output was right or wrong. It should not be confused with semi-supervised learning, which trains on a mix of labelled and unlabelled data.

Artificial Neural Network Applications

Following are some important ANN Applications –

- Speech Recognition: Speech recognition relies heavily on artificial neural networks (ANNs). Earlier speech recognition systems used statistical models such as Hidden Markov Models. With the introduction of deep learning, several forms of neural networks have become the dominant way to achieve accurate recognition.

- Handwritten Character Recognition: ANNs are used to recognize handwritten characters. Handwritten characters can be in the form of letters or digits, and neural networks have been trained to recognize them.

- Signature Classification: When developing signature-based authentication systems, we employ artificial neural networks to recognize signatures and assign each one to the person it belongs to. Furthermore, neural networks can determine whether or not a signature is genuine.

- Medical: ANNs can be used to detect cancer cells and to analyze MRI images in order to provide detailed results.

Create a Neural Network Using Keras

According to the Keras documentation, the Sequential model is a linear stack of layers. You can create a Sequential model by passing a list of layer objects to its constructor, or by adding layers one at a time with add(). The Dense layer is the regular fully connected neural network layer, and it is the most popular and frequently used layer. We’ll begin by importing the Python libraries.

    # Import python libraries required:
    from keras.models import Sequential
    from keras.layers import Dense, Activation
    import numpy as np

    # numpy array to store inputs (x) and outputs (y):
    x = np.array([[0,0], [0,1], [1,0], [1,1]])
    y = np.array([[0], [1], [1], [0]])

    # Define network model and its arguments.
    # Set the no. of neurons/nodes for each layer:
    model = Sequential()
    model.add(Dense(2, input_shape=(2,)))
    model.add(Activation('sigmoid'))
    model.add(Dense(1))
    model.add(Activation('sigmoid'))

    # Compile the model and its accuracy calculation:
    model.compile(loss='mean_squared_error', optimizer='sgd', metrics=['accuracy'])

    # Print summary of the model:
    model.summary()


Conclusion

- An artificial neural network (ANN) is a data processing paradigm based on how biological nervous systems, such as the brain, process data.

- Because of their universal approximation capabilities and flexible structure, ANNs are effective data-driven tools for the dynamic modelling and identification of nonlinear systems.

- ANNs provide a framework in which different machine learning algorithms can work together to process large amounts of data. Without task-specific instructions, a neural network can “learn” to execute tasks by examining examples.

The media shown in this article is not owned by Analytics Vidhya and are used at the Author’s discretion.