This article was published as a part of the Data Science Blogathon.

Introduction

Software as a Service (SaaS), Infrastructure as a Service (IaaS), and Platform as a Service (PaaS) are common services that everyone in the tech world has heard of. But what about Artificial Intelligence as a Service? It is a third-party AI offering that is cost-effective and an alternative to developing AI software in-house. AIaaS is relatively new, and its emergence is driven by the rising popularity of Artificial Intelligence in the IT industry.

Artificial Intelligence as a Service (AIaaS) makes AI technology accessible to everyone. It provides fast, cost-effective, ready-to-use solutions with minimal effort. With AIaaS, you don’t have to worry much about data or model training, because these services are ML/DL models that have already been trained on very large datasets to accomplish specific tasks, such as image processing (using computer vision deep learning algorithms) or text and audio processing (using NLP deep learning algorithms). To get predictions on our own data, we only need to call an API, without worrying about what happens in the background. It is that simple.

Top Cloud AIaaS Providers in 2020

- Amazon AWS

- Microsoft Azure

- Google Cloud ML

- IBM Watson

Overview:

In this blog, I will show you the outputs of some very interesting AI services from AWS, which can be obtained with simple API calls and deployed on a serverless architecture. The blog only tries to demonstrate the power of AIaaS and how quickly we can get predictions from deep learning models (computer vision, NLP) without having to build and train the models ourselves. We can then use these predictions in our own business logic for further processing.

- Face Detection from a given Image

- Labels and Keypoints Detection from Images

- Interpretation of unformatted text (medical reports)

Amazon Rekognition is a cloud-based, software-as-a-service computer vision platform that was launched in 2016. It can detect faces in images and report where each face is located, facial landmarks such as the position of the eyes, and detected emotions (for example, appearing happy or sad). When you provide an image that contains a face, Amazon Rekognition detects the face, analyzes its facial attributes, and returns a percentage confidence score for the face and for each facial attribute it detects. In this blog, I extracted the facial attributes from an image of the God of Cricket, Sachin Tendulkar, using the Amazon Rekognition DetectFaces API.
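The call behind the output below can be sketched in Python with boto3, the AWS SDK for Python. This is a minimal sketch, assuming AWS credentials and a region are already configured and that the image is a local JPEG or PNG file; `detect_face_attributes` and `summarize_face` are hypothetical helper names, not part of any AWS API. Passing `Attributes=["ALL"]` asks DetectFaces for the full attribute set (age range, emotions, eyeglasses, and so on) rather than the default subset.

```python
from typing import Any, Dict


def detect_face_attributes(image_path: str) -> Dict[str, Any]:
    """Send a local image to Amazon Rekognition DetectFaces (assumes configured AWS credentials)."""
    import boto3  # imported lazily so summarize_face works without the AWS SDK installed

    client = boto3.client("rekognition")
    with open(image_path, "rb") as f:
        # Attributes=["ALL"] requests age range, emotions, smile, eyeglasses, etc.
        return client.detect_faces(Image={"Bytes": f.read()}, Attributes=["ALL"])


def summarize_face(face_detail: Dict[str, Any]) -> Dict[str, Any]:
    """Condense one entry of response["FaceDetails"] into a few readable fields."""
    # Emotions is a list of {"Type", "Confidence"} dicts; keep the most confident one.
    top_emotion = max(face_detail["Emotions"], key=lambda e: e["Confidence"])
    age = face_detail["AgeRange"]
    return {
        "age_range": (age["Low"], age["High"]),
        "gender": face_detail["Gender"]["Value"],
        "top_emotion": top_emotion["Type"],
        "smiling": face_detail["Smile"]["Value"],
    }
```

Based on the output below, `summarize_face(response["FaceDetails"][0])` would report an age range of (31, 47), gender "Male", top emotion "HAPPY", and smiling as True.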

Input Image (Face Detection)

Output:

{'FaceDetails': [{'BoundingBox': {'Width': 0.20541706681251526, 'Height': 0.5380652546882629, 'Left': 0.44415491819381714, 'Top': 0.24033501744270325}, 'AgeRange': {'Low': 31, 'High': 47}, 'Smile': {'Value': True, 'Confidence': 96.30479431152344}, 'Eyeglasses': {'Value': True, 'Confidence': 99.83201599121094}, 'Sunglasses': {'Value': True, 'Confidence': 98.6719741821289}, 'Gender': {'Value': 'Male', 'Confidence': 99.00096893310547}, 'Beard': {'Value': False, 'Confidence': 69.7818832397461}, 'Mustache': {'Value': False, 'Confidence': 98.1515884399414}, 'EyesOpen': {'Value': True, 'Confidence': 99.99970245361328}, 'MouthOpen': {'Value': True, 'Confidence': 96.32877349853516}, 'Emotions': [{'Type': 'HAPPY', 'Confidence': 98.26586151123047}, {'Type': 'SURPRISED', 'Confidence': 0.6169241666793823}, {'Type': 'DISGUSTED', 'Confidence': 0.275738000869751}, {'Type': 'CONFUSED', 'Confidence': 0.243975430727005}, {'Type': 'ANGRY', 'Confidence': 0.19858835637569427}, {'Type': 'FEAR', 'Confidence': 0.18878471851348877}, {'Type': 'CALM', 'Confidence': 0.1781758964061737}, {'Type': 'SAD', 'Confidence': 0.03194674849510193}], 'Landmarks': [{'Type': 'eyeLeft', 'X': 0.47624024748802185, 'Y': 0.451119601726532}, {'Type': 'eyeRight', 'X': 0.5555426478385925, 'Y': 0.44501790404319763}, {'Type': 'mouthLeft', 'X': 0.48754286766052246, 'Y': 0.634062647819519}, {'Type': 'mouthRight', 'X': 0.5532474517822266, 'Y': 0.6297339200973511}, {'Type': 'nose', 'X': 0.4920603930950165, 'Y': 0.5352466106414795}, {'Type': 'leftEyeBrowLeft', 'X': 0.4541913866996765, 'Y': 0.4153725802898407}, {'Type': 'leftEyeBrowRight', 'X': 0.46305912733078003, 'Y': 0.38742440938949585}, {'Type': 'leftEyeBrowUp', 'X': 0.48185744881629944, 'Y': 0.39301180839538574}, {'Type': 'rightEyeBrowLeft', 'X': 0.5279744863510132, 'Y': 0.38867324590682983}, {'Type': 'rightEyeBrowRight', 'X': 0.5559390187263489, 'Y': 0.3791316747665405}, {'Type': 'rightEyeBrowUp', 'X': 0.5934171080589294, 'Y': 0.40411877632141113}, {'Type': 'leftEyeLeft', 'X': 0.46517136693000793, 'Y': 0.45265403389930725}, {'Type': 'leftEyeRight', 'X': 0.49202463030815125, 'Y': 0.4516719579696655}, {'Type': 'leftEyeUp', 'X': 0.47422394156455994, 'Y': 0.44147491455078125}, {'Type': 'leftEyeDown', 'X': 0.47658079862594604, 'Y': 0.45885252952575684}, {'Type': 'rightEyeLeft', 'X': 0.5400394797325134, 'Y': 0.44783228635787964}, {'Type': 'rightEyeRight', 'X': 0.5731584429740906, 'Y': 0.44445860385894775}, {'Type': 'rightEyeUp', 'X': 0.5541402101516724, 'Y': 0.43517470359802246}, {'Type': 'rightEyeDown', 'X': 0.5553746819496155, 'Y': 0.452958881855011}, {'Type': 'noseLeft', 'X': 0.49367624521255493, 'Y': 0.5608895421028137}, {'Type': 'noseRight', 'X': 0.5235978960990906, 'Y': 0.5586907863616943}, {'Type': 'mouthUp', 'X': 0.5075159072875977, 'Y': 0.601891279220581}, {'Type': 'mouthDown', 'X': 0.5127723813056946, 'Y': 0.65743088722229}, {'Type': 'leftPupil', 'X': 0.47624024748802185, 'Y': 0.451119601726532}, {'Type': 'rightPupil', 'X': 0.5555426478385925, 'Y': 0.44501790404319763}, {'Type': 'upperJawlineLeft', 'X': 0.46838507056236267, 'Y': 0.47455382347106934}, {'Type': 'midJawlineLeft', 'X': 0.4850492775440216, 'Y': 0.6637623906135559}, {'Type': 'chinBottom', 'X': 0.5270727872848511, 'Y': 0.7539381980895996}, {'Type': 'midJawlineRight', 'X': 0.6289422512054443, 'Y': 0.6527353525161743}, {'Type': 'upperJawlineRight', 'X': 0.6444846391677856, 'Y': 0.4598093032836914}], 'Pose': {'Roll': -7.582068920135498, 'Yaw': -27.886552810668945, 'Pitch': 13.909193992614746}, 'Quality': {'Brightness': 83.0195541381836, 'Sharpness': 78.64350128173828}, 'Confidence': 99.99861907958984}], 'ResponseMetadata': {'RequestId': '7c7b8384-d5a8-40a3-b0d3-1db5f65198fc', 'HTTPStatusCode': 200, 'HTTPHeaders': {'content-type': 'application/x-amz-json-1.1', 'date': 'Thu, 14 Jan 2021 14:47:20 GMT', 'x-amzn-requestid': '7c7b8384-d5a8-40a3-b0d3-1db5f65198fc', 'content-length': '3347', 'connection': 'keep-alive'}, 'RetryAttempts': 0}}

We can see that the model extracted multiple facial attributes, including the bounding box of the face (expressed as ratios of the image width and height) and attributes such as age range, emotions, and gender. It also identified that the person in the image is wearing sunglasses. Each Confidence value is the model’s confidence score for that attribute, as a percentage. Next, let us try to detect objects and scenes in an image.
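Rekognition reports `BoundingBox` values as ratios of the overall image width and height, not as pixels, so drawing or cropping the face requires scaling them by the image dimensions. A minimal sketch, assuming the image size is already known (for example from an imaging library); `box_to_pixels` is a hypothetical helper:

```python
from typing import Dict, Tuple


def box_to_pixels(box: Dict[str, float], image_width: int, image_height: int) -> Tuple[int, int, int, int]:
    """Convert a Rekognition BoundingBox (ratios of image size) to pixel (left, top, right, bottom)."""
    left = box["Left"] * image_width
    top = box["Top"] * image_height
    right = left + box["Width"] * image_width
    bottom = top + box["Height"] * image_height
    # Round to whole pixels and clamp to the image, in case a box extends past an edge.
    return (
        max(0, round(left)),
        max(0, round(top)),
        min(image_width, round(right)),
        min(image_height, round(bottom)),
    )
```

With the face box from the output above and a hypothetical 1000 x 800 pixel image, `box_to_pixels` returns (444, 192, 650, 623).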

Input Image (Object Recognition)

This image is from the recent third Test match between India and Australia in Sydney. Let us see what the model identified in it.

Output:

{'Labels': [{'Name': 'Person', 'Confidence': 99.94894409179688, 'Instances': [{'BoundingBox': {'Width': 0.11527907848358154, 'Height': 0.7512875199317932, 'Left': 0.7737820148468018, 'Top': 0.23506903648376465}, 'Confidence': 99.94894409179688}, {'BoundingBox': {'Width': 0.1395261287689209, 'Height': 0.7048695683479309, 'Left': 0.3460443615913391, 'Top': 0.29426464438438416}, 'Confidence': 99.93523406982422}, {'BoundingBox': {'Width': 0.10976755619049072, 'Height': 0.7354522943496704, 'Left': 0.8721725940704346, 'Top': 0.24789121747016907}, 'Confidence': 99.93440246582031}, {'BoundingBox': {'Width': 0.16669583320617676, 'Height': 0.7431305646896362, 'Left': 0.48980849981307983, 'Top': 0.24040062725543976}, 'Confidence': 99.9211654663086}, {'BoundingBox': {'Width': 0.13125979900360107, 'Height': 0.7153438925743103, 'Left': 0.6609324216842651, 'Top': 0.280178040266037}, 'Confidence': 99.90505981445312}, {'BoundingBox': {'Width': 0.1306787133216858, 'Height': 0.6836469173431396, 'Left': 0.19070139527320862, 'Top': 0.30579841136932373}, 'Confidence': 99.89260864257812}, {'BoundingBox': {'Width': 0.11481481790542603, 'Height': 0.7306142449378967, 'Left': 0.08764462172985077, 'Top': 0.2639639377593994}, 'Confidence': 99.87141418457031}, {'BoundingBox': {'Width': 0.08894997835159302, 'Height': 0.5478061437606812, 'Left': 0.28502774238586426, 'Top': 0.2700440287590027}, 'Confidence': 99.86509704589844}, {'BoundingBox': {'Width': 0.05594509840011597, 'Height': 0.46266835927963257, 'Left': 0.4419107139110565, 'Top': 0.3235691487789154}, 'Confidence': 99.41387176513672}, {'BoundingBox': {'Width': 0.0792531669139862, 'Height': 0.6266587376594543, 'Left': 0.01880905032157898, 'Top': 0.2961716949939728}, 'Confidence': 99.31836700439453}, {'BoundingBox': {'Width': 0.07466930150985718, 'Height': 0.6673304438591003, 'Left': 0.06281328201293945, 'Top': 0.2985752522945404}, 'Confidence': 97.95123291015625}, {'BoundingBox': {'Width': 0.050719618797302246, 'Height': 0.15526556968688965, 'Left': 0.7476598620414734, 'Top': 0.283884733915329}, 'Confidence': 89.93550109863281}, {'BoundingBox': {'Width': 0.9160513877868652, 'Height': 0.2598652243614197, 'Left': 0.03499102592468262, 'Top': 0.005872916430234909}, 'Confidence': 74.86686706542969}], 'Parents': []}, {'Name': 'Human', 'Confidence': 99.94894409179688, 'Instances': [], 'Parents': []}, {'Name': 'Shoe', 'Confidence': 99.1314697265625, 'Instances': [{'BoundingBox': {'Width': 0.07123875617980957, 'Height': 0.05580293759703636, 'Left': 0.8770506978034973, 'Top': 0.9347420334815979}, 'Confidence': 99.1314697265625}, {'BoundingBox': {'Width': 0.037609100341796875, 'Height': 0.05298950895667076, 'Left': 0.9378718137741089, 'Top': 0.9069159626960754}, 'Confidence': 98.84042358398438}, {'BoundingBox': {'Width': 0.025996685028076172, 'Height': 0.051444340497255325, 'Left': 0.7660526037216187, 'Top': 0.9468560814857483}, 'Confidence': 96.88949584960938}, {'BoundingBox': {'Width': 0.029384374618530273, 'Height': 0.04353249445557594, 'Left': 0.7718676328659058, 'Top': 0.759616494178772}, 'Confidence': 90.52886962890625}, {'BoundingBox': {'Width': 0.0338703989982605, 'Height': 0.045315880328416824, 'Left': 0.3213094174861908, 'Top': 0.7607503533363342}, 'Confidence': 90.32197570800781}, {'BoundingBox': {'Width': 0.037531375885009766, 'Height': 0.042367592453956604, 'Left': 0.6709437966346741, 'Top': 0.956001341342926}, 'Confidence': 86.78009796142578}, {'BoundingBox': {'Width': 0.051809702068567276, 'Height': 0.047557536512613297, 'Left': 0.04164793714880943, 'Top': 0.8836102485656738}, 'Confidence': 86.4990234375}, {'BoundingBox': {'Width': 0.047059208154678345, 'Height': 0.04854234308004379, 'Left': 0.07918156683444977, 'Top': 0.9307251572608948}, 'Confidence': 79.03814697265625}, {'BoundingBox': {'Width': 0.04683256149291992, 'Height': 0.043805379420518875, 'Left': 0.48746615648269653, 'Top': 0.9553288221359253}, 'Confidence': 75.76573944091797}, {'BoundingBox': {'Width': 0.024056553840637207, 'Height': 0.05118529871106148, 'Left': 0.30561891198158264, 'Top': 0.7718438506126404}, 'Confidence': 73.63504791259766}, {'BoundingBox': {'Width': 0.041503190994262695, 'Height': 0.04735144227743149, 'Left': 0.8406999707221985, 'Top': 0.9409265518188477}, 'Confidence': 71.9170913696289}], 'Parents': [{'Name': 'Footwear'}, {'Name': 'Clothing'}]}, {'Name': 'Footwear', 'Confidence': 99.1314697265625, 'Instances': [], 'Parents': [{'Name': 'Clothing'}]}, {'Name': 'Clothing', 'Confidence': 99.1314697265625, 'Instances': [], 'Parents': []}, {'Name': 'Apparel', 'Confidence': 99.1314697265625, 'Instances': [], 'Parents': []}, {'Name': 'Building', 'Confidence': 95.78233337402344, 'Instances': [], 'Parents': []}, {'Name': 'Shorts', 'Confidence': 94.52140045166016, 'Instances': [], 'Parents': [{'Name': 'Clothing'}]}, {'Name': 'Field', 'Confidence': 89.95359802246094, 'Instances': [], 'Parents': []}, {'Name': 'Hat', 'Confidence': 89.91564178466797, 'Instances': [{'BoundingBox': {'Width': 0.09435784816741943, 'Height': 0.10483504086732864, 'Left': 0.5526021718978882, 'Top': 0.2231374979019165}, 'Confidence': 89.91564178466797}], 'Parents': [{'Name': 'Clothing'}]}, {'Name': 'People', 'Confidence': 88.79578399658203, 'Instances': [], 'Parents': [{'Name': 'Person'}]}, {'Name': 'Arena', 'Confidence': 88.33050537109375, 'Instances': [], 'Parents': [{'Name': 'Building'}]}, {'Name': 'Stadium', 'Confidence': 86.9452896118164, 'Instances': [], 'Parents': [{'Name': 'Arena'}, {'Name': 'Building'}]}, {'Name': 'Helmet', 'Confidence': 81.95767211914062, 'Instances': [{'BoundingBox': {'Width': 0.056189119815826416, 'Height': 0.1054595559835434, 'Left': 0.3773099184036255, 'Top': 0.2766309082508087}, 'Confidence': 81.95767211914062}, {'BoundingBox': {'Width': 0.03357630968093872, 'Height': 0.06521259993314743, 'Left': 0.44274526834487915, 'Top': 0.32206523418426514}, 'Confidence': 55.274818420410156}], 'Parents': [{'Name': 'Clothing'}]}], 'LabelModelVersion': '2.0', 'ResponseMetadata': {'RequestId': 'aa76f817-1ec0-4380-a676-6730ab36f3cc', 'HTTPStatusCode': 200, 'HTTPHeaders': {'content-type': 'application/x-amz-json-1.1', 'date': 'Thu, 14 Jan 2021 15:53:34 GMT', 'x-amzn-requestid': 'aa76f817-1ec0-4380-a676-6730ab36f3cc', 'content-length': '5541', 'connection': 'keep-alive'}, 'RetryAttempts': 0}}

Again, we get a lot of detail, most of it with high confidence scores. The model recognizes objects and scenes such as:

- Persons (multiple bounding boxes for different players)

- Human

- Shoe (the players’ shoes)

- Footwear

- Helmet

- Clothing

- Sports

- Field

- Hat

- Stadium
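The list above is a summary of the `Labels` array in the DetectLabels response. A minimal sketch of how such a summary could be produced with boto3, again assuming configured AWS credentials; `detect_scene_labels` and `confident_labels` are hypothetical helper names, and the 80% threshold is an arbitrary choice for illustration:

```python
from typing import Any, Dict, List, Tuple


def detect_scene_labels(image_path: str, max_labels: int = 25) -> Dict[str, Any]:
    """Call Amazon Rekognition DetectLabels on a local image (assumes configured AWS credentials)."""
    import boto3  # lazy import: confident_labels below does not need the AWS SDK

    client = boto3.client("rekognition")
    with open(image_path, "rb") as f:
        return client.detect_labels(Image={"Bytes": f.read()}, MaxLabels=max_labels)


def confident_labels(response: Dict[str, Any], threshold: float = 80.0) -> List[Tuple[str, int]]:
    """Return (label name, number of located instances) for labels at or above the threshold."""
    summary = []
    for label in response.get("Labels", []):
        if label["Confidence"] >= threshold:
            # Instances holds one bounding box per located object; scene labels such as "Stadium" have none.
            summary.append((label["Name"], len(label.get("Instances", []))))
    return summary
```

Applied to the output above, `confident_labels` would list ("Person", 13) first, one instance per detected person box, and include scene-level labels such as ("Stadium", 0).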

We can also get all of these outputs as a JSON file:

{"Labels": [{"Name": "Person","Confidence": 99.94894409179688,"Instances": [{"BoundingBox": {"Width": 0.11527907848358154,"Height": 0.7512875199317932,"Left": 0.7737820148468018,"Top": 0.23506903648376465},"Confidence": 99.94894409179688},{"BoundingBox": {"Width": 0.1395261287689209,"Height": 0.7048695683479309,"Left": 0.3460443615913391,"Top": 0.29426464438438416},"Confidence": 99.93523406982422},{"BoundingBox": {"Width": 0.10976755619049072,"Height": 0.7354522943496704,"Left": 0.8721725940704346,"Top": 0.24789121747016907},"Confidence": 99.93440246582031},{"BoundingBox": {"Width": 0.16669583320617676,"Height": 0.7431305646896362,"Left": 0.48980849981307983,"Top": 0.24040062725543976},"Confidence": 99.9211654663086},{"BoundingBox": {"Width": 0.13125979900360107,"Height": 0.7153438925743103,"Left": 0.6609324216842651,"Top": 0.280178040266037},"Confidence": 99.90505981445312},{"BoundingBox": {"Width": 0.1306787133216858,"Height": 0.6836469173431396,"Left": 0.19070139527320862,"Top": 0.30579841136932373},"Confidence": 99.89260864257812},{"BoundingBox": {"Width": 0.11481481790542603,"Height": 0.7306142449378967,"Left": 0.08764462172985077,"Top": 0.2639639377593994},"Confidence": 99.87141418457031},{"BoundingBox": {"Width": 0.08894997835159302,"Height": 0.5478061437606812,"Left": 0.28502774238586426,"Top": 0.2700440287590027},"Confidence": 99.86509704589844},{"BoundingBox": {"Width": 0.05594509840011597,"Height": 0.46266835927963257,"Left": 0.4419107139110565,"Top": 0.3235691487789154},"Confidence": 99.41387176513672},{"BoundingBox": {"Width": 0.0792531669139862,"Height": 0.6266587376594543,"Left": 0.01880905032157898,"Top": 0.2961716949939728},"Confidence": 99.31836700439453},{"BoundingBox": {"Width": 0.07466930150985718,"Height": 0.6673304438591003,"Left": 0.06281328201293945,"Top": 0.2985752522945404},"Confidence": 97.95123291015625},{"BoundingBox": {"Width": 0.050719618797302246,"Height": 0.15526556968688965,"Left": 0.7476598620414734,"Top": 0.283884733915329},"Confidence": 89.93550109863281},{"BoundingBox": {"Width": 0.9160513877868652,"Height": 0.2598652243614197,"Left": 0.03499102592468262,"Top": 0.005872916430234909},"Confidence": 74.86686706542969}],"Parents": []},{"Name": "Human","Confidence": 99.94894409179688,"Instances": [],"Parents": []},{"Name": "Shoe","Confidence": 99.1314697265625,"Instances": [{"BoundingBox": {"Width": 0.07123875617980957,"Height": 0.05580293759703636,"Left": 0.8770506978034973,"Top": 0.9347420334815979},"Confidence": 99.1314697265625},{"BoundingBox": {"Width": 0.037609100341796875,"Height": 0.05298950895667076,"Left": 0.9378718137741089,"Top": 0.9069159626960754},"Confidence": 98.84042358398438},{"BoundingBox": {"Width": 0.025996685028076172,"Height": 0.051444340497255325,"Left": 0.7660526037216187,"Top": 0.9468560814857483},"Confidence": 96.88949584960938},{"BoundingBox": {"Width": 0.029384374618530273,"Height": 0.04353249445557594,"Left": 0.7718676328659058,"Top": 0.759616494178772},"Confidence": 90.52886962890625},{"BoundingBox": {"Width": 0.0338703989982605,"Height": 0.045315880328416824,"Left": 0.3213094174861908,"Top": 0.7607503533363342},"Confidence": 90.32197570800781},{"BoundingBox": {"Width": 0.037531375885009766,"Height": 0.042367592453956604,"Left": 0.6709437966346741,"Top": 0.956001341342926},"Confidence": 86.78009796142578},{"BoundingBox": {"Width": 0.051809702068567276,"Height": 0.047557536512613297,"Left": 0.04164793714880943,"Top": 0.8836102485656738},"Confidence": 86.4990234375},{"BoundingBox": {"Width": 0.047059208154678345,"Height": 0.04854234308004379,"Left": 0.07918156683444977,"Top": 0.9307251572608948},"Confidence": 79.03814697265625},{"BoundingBox": {"Width": 0.04683256149291992,"Height": 0.043805379420518875,"Left": 0.48746615648269653,"Top": 0.9553288221359253},"Confidence": 75.76573944091797},{"BoundingBox": {"Width": 0.024056553840637207,"Height": 0.05118529871106148,"Left": 0.30561891198158264,"Top": 0.7718438506126404},"Confidence": 73.63504791259766},{"BoundingBox": {"Width": 0.041503190994262695,"Height": 0.04735144227743149,"Left": 0.8406999707221985,"Top": 0.9409265518188477},"Confidence": 71.9170913696289}],"Parents": [{"Name": "Footwear"},{"Name": "Clothing"}]},{"Name": "Footwear","Confidence": 99.1314697265625,"Instances": [],"Parents": [{"Name": "Clothing"}]},{"Name": "Clothing","Confidence": 99.1314697265625,"Instances": [],"Parents": []},{"Name": "Apparel","Confidence": 99.1314697265625,"Instances": [],"Parents": []},{"Name": "Building","Confidence": 95.49980163574219,"Instances": [],"Parents": []},{"Name": "Shorts","Confidence": 94.40264129638672,"Instances": [],"Parents": [{"Name": "Clothing"}]},{"Name": "Field","Confidence": 90.48631286621094,"Instances": [],"Parents": []},{"Name": "Hat","Confidence": 89.91564178466797,"Instances": [{"BoundingBox": {"Width": 0.09435784816741943,"Height": 0.10483504086732864,"Left": 0.5526021718978882,"Top": 0.2231374979019165},"Confidence": 89.91564178466797}],"Parents": [{"Name": "Clothing"}]},{"Name": "People","Confidence": 88.85064697265625,"Instances": [],"Parents": [{"Name": "Person"}]},{"Name": "Arena","Confidence": 87.73716735839844,"Instances": [],"Parents": [{"Name": "Building"}]},{"Name": "Stadium","Confidence": 86.18683624267578,"Instances": [],"Parents": [{"Name": "Arena"},{"Name": "Building"}]},{"Name": "Helmet","Confidence": 81.95767211914062,"Instances": [{"BoundingBox": {"Width": 0.056189119815826416,"Height": 0.1054595559835434,"Left": 0.3773099184036255,"Top": 0.2766309082508087},"Confidence": 81.95767211914062},{"BoundingBox": {"Width": 0.03357630968093872,"Height": 0.06521259993314743,"Left": 0.44274526834487915,"Top": 0.32206523418426514},"Confidence": 55.274818420410156}],"Parents": [{"Name": "Clothing"}]},{"Name": "Crowd","Confidence": 62.106300354003906,"Instances": [],"Parents": [{"Name": "Person"}]},{"Name": "Sport","Confidence": 58.99622344970703,"Instances": [],"Parents": [{"Name": "Person"}]},{"Name": "Sports","Confidence": 58.99622344970703,"Instances": [],"Parents": [{"Name": "Person"}]},{"Name": "Team Sport","Confidence": 57.865882873535156,"Instances": [],"Parents": [{"Name": "Sport"},{"Name": "Team"},{"Name": "Person"},{"Name": "People"}]},{"Name": "Team","Confidence": 57.865882873535156,"Instances": [],"Parents": [{"Name": "People"},{"Name": "Person"}]}],"LabelModelVersion": "2.0"}
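Saving a response like this as a JSON file takes only a few lines with Python’s standard `json` module. In this minimal sketch (`save_labels_json` is a hypothetical name), the `ResponseMetadata` block, which only describes the HTTP request, is dropped, matching the JSON shown above:

```python
import json
from typing import Any, Dict


def save_labels_json(response: Dict[str, Any], path: str) -> None:
    """Write a Rekognition response to disk as JSON, without the HTTP ResponseMetadata."""
    payload = {key: value for key, value in response.items() if key != "ResponseMetadata"}
    with open(path, "w", encoding="utf-8") as f:
        json.dump(payload, f, indent=2)
```

Unlike the Python dictionary printed earlier (single quotes, `True`/`False`), the file written this way is valid JSON that other tools can load directly.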

As we can see, the service returns a whole gamut of attribute values, which we can use as our needs dictate. Imagine what it would take to build the same kind of model from scratch: data collection, cleaning, and transformation, then building the model and training it on high-end servers for weeks. All of these stages are bypassed here, because an out-of-the-box, ready-made solution is already available. Even if we used pre-trained models such as YOLOv3 or Detectron2, we would still need to go through much of this pipeline (preparing our own data, fine-tuning, and hosting the model) before it was ready for prediction.

How can we use these services in real-world use cases? What we have seen so far is just the raw AI output, and even that is powerful. We can make it even more useful by combining it with other services to fit our business cases. A few high-level use cases I can think of are:

- Contactless Entry into Office Buildings: employees’ faces, along with their employee IDs, are matched against HR records when they sign in to or out of the building.

- Restricted Entry into Meeting Rooms/Conference Halls: a person’s face is checked against the faces of the meeting invitees before entry is allowed.
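For the contactless-entry case, one possible design (an assumption, not something tried in this article) is to index each employee’s HR photo into a Rekognition face collection with the IndexFaces operation, storing the employee ID as `ExternalImageId`, and then search that collection with the photo taken at the door. The collection ID, the thresholds, and the helper names `search_employee_face` and `verify_employee` are hypothetical:

```python
from typing import Any, Dict


def search_employee_face(image_bytes: bytes, collection_id: str) -> Dict[str, Any]:
    """Search a Rekognition face collection for the face in a door-camera image (assumes AWS credentials)."""
    import boto3  # lazy import so verify_employee can be used without the AWS SDK

    client = boto3.client("rekognition")
    return client.search_faces_by_image(
        CollectionId=collection_id,
        Image={"Bytes": image_bytes},
        FaceMatchThreshold=90,
        MaxFaces=5,
    )


def verify_employee(response: Dict[str, Any], badge_emp_id: str, min_similarity: float = 95.0) -> bool:
    """Allow entry only if a sufficiently similar matched face belongs to the employee ID on the badge."""
    for match in response.get("FaceMatches", []):
        face = match.get("Face", {})
        if face.get("ExternalImageId") == badge_emp_id and match.get("Similarity", 0.0) >= min_similarity:
            return True
    return False
```

Requiring both the face match and the badge ID is a deliberate choice in this sketch: a face that resembles a different employee is rejected, not silently accepted under another identity.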

Next, let us look at one of the most interesting AI services from Amazon.

Amazon Comprehend – Natural Language Processing and Text Analytics

Here I will show how Amazon Comprehend Medical extracts a patient’s health data in real time and presents it in a human-understandable format, starting from medical text that is not ordered or structured in any particular fashion. Such text could be a doctor’s note, a clinical record, or a plain lab test report.
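With boto3, this kind of analysis is a single `detect_entities_v2` call on the `comprehendmedical` client. A minimal sketch, assuming configured AWS credentials in a region where Amazon Comprehend Medical is available; `extract_medical_entities` and `group_by_category` are hypothetical helpers that sort the returned entities by category (for example `MEDICAL_CONDITION`, `TEST_TREATMENT_PROCEDURE`, or `PROTECTED_HEALTH_INFORMATION`):

```python
from collections import defaultdict
from typing import Any, Dict, List


def extract_medical_entities(text: str) -> Dict[str, Any]:
    """Run Amazon Comprehend Medical DetectEntitiesV2 on free text (assumes configured AWS credentials)."""
    import boto3  # lazy import so group_by_category works without the AWS SDK

    client = boto3.client("comprehendmedical")
    return client.detect_entities_v2(Text=text)


def group_by_category(response: Dict[str, Any], min_score: float = 0.5) -> Dict[str, List[str]]:
    """Map each entity category to the entity texts found for it, skipping low-confidence entities."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for entity in response.get("Entities", []):
        # Comprehend Medical scores range from 0 to 1, unlike Rekognition's 0-100 confidences.
        if entity["Score"] >= min_score:
            grouped[entity["Category"]].append(entity["Text"])
    return dict(grouped)
```

The 0.5 cut-off is an arbitrary illustration; for clinical use, the threshold would need to be chosen and validated against real reports.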

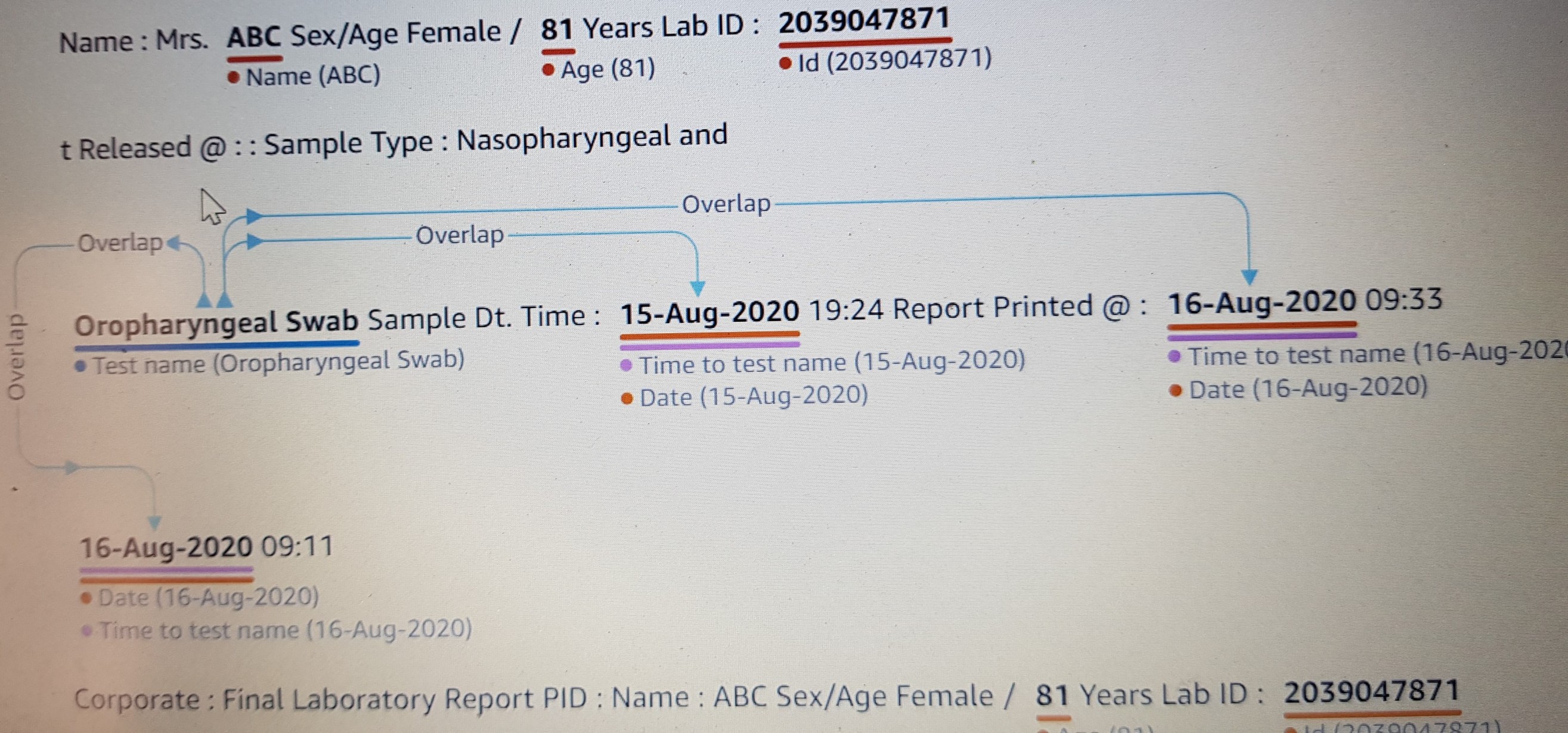

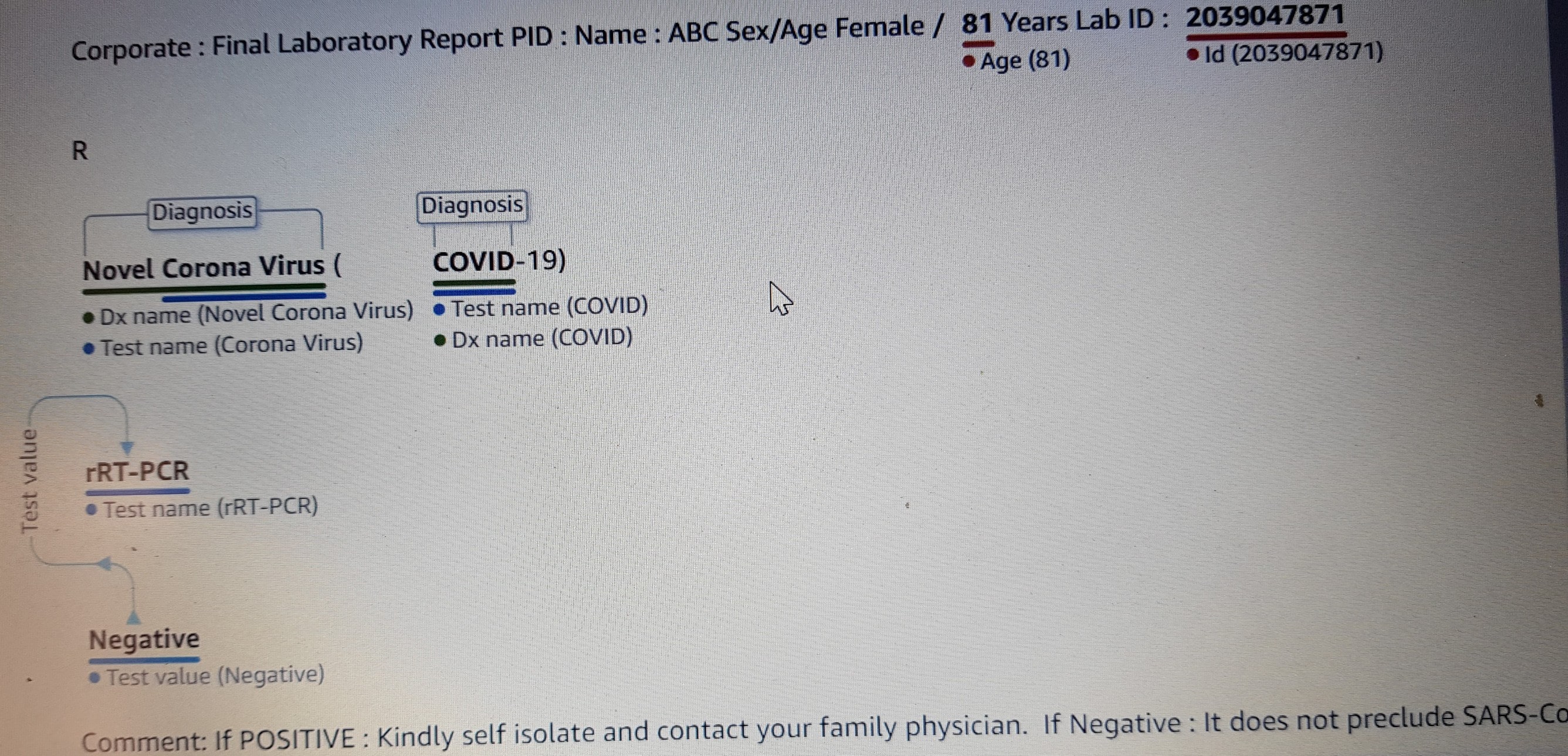

Input Text of Patient (name masked) – plain text, lab report

Name : Mrs. ABC Sex/Age Female / 81 Years Lab ID : 2039047871t Released @:: Sample Type : Nasopharyngeal andOropharyngeal Swab Sample Dt. Time : 15-Aug-2020 19:24 Report Printed @ : 16-Aug-2020 09:3316-Aug-2020 09:11Corporate:Final Laboratory Report PID :Name : ABC Sex/Age Female / 81 Years Lab ID : 2039047871RNovel Corona Virus (COVID-19)rRT-PCRNegativeComment:If POSITIVE : Kindly self isolate and contact your family physician.If Negative : It does not preclude SARS-CoV-2 and should not be used as the sole basis for patient managementdecisions. In clinically suspected patients and those who had contact with a known positive patient, it is advisableto retest in a fresh sample after 48 to 72 hoursICMR Registration number NEUBE002.

Output 1 – Plain Text

Name : Mrs. ABC Sex/Age Female / 81 Years Lab ID : 2039047871t Released @ : : Sample Type : Nasopharyngeal and Oropharyngeal Swab Sample Dt. Time : 15-Aug-2020 19:24 Report Printed @ : 16-Aug-2020 09:33 16-Aug-2020 09:11 Corporate : Final Laboratory Report PID : Name : ABC Sex/Age Female / 81 Years Lab ID : 2039047871 RNovel Corona VirusDx name (Novel Corona Virus)(COVID-19)DiagnosisrRT-PCRNegativeComment:If POSITIVE : Kindly self isolate and contact your family physician.If Negative : It does not preclude SARS-CoV-2 and should not be used as the sole basis for patientmanagementdecisions. In clinically suspected patients and those who had contact with a known positive patient, itis advisableto retest in a fresh sample after 48 to 72 hoursICMR Registration number NEUBE002.

Output 2 – Entity Relationships

We can see how the model relates the different entities, so that we can easily identify, among other things, the:

- Diagnosis

- Test conducted

- Test results

- Date of the test

- Patient name

- Patient age

Conclusion

In this blog, I tried to highlight the power of AIaaS and the kind of impact it can have on our business. These services are specialized: each one is built to carry out the particular task for which it was created. Often, our business may demand solutions that cannot be mapped directly to any of these pre-built services, and in those cases we will still fall back on our own models. AIaaS will co-exist with our own ML/DL models at least until we reach the stage of full AGI (Artificial General Intelligence).

The media shown in this article are not owned by Analytics Vidhya and are used at the Author’s discretion.