This article was published as a part of the Data Science Blogathon.

Introduction

Deep learning is a branch of machine learning inspired by the brain’s ability to learn. It is a data-driven approach to learning that can automatically extract features from data and build models to make predictions.

Deep learning has revolutionized many areas of machine learning, such as image classification, object detection, and natural language processing. It has also made real progress on problems that were long considered intractable, such as machine translation.

Deep learning is still an emerging field, and much research remains to be done to unlock its full potential. However, the results so far have been encouraging, and it is clear that deep learning is here to stay.

What Do Deep Learning Companies Do?

Deep learning companies are in the business of creating algorithms that can learn from data. They do this by building models that extract features from data and then use those features to make predictions. The goal is to create algorithms that generalize well to unseen data and can learn new tasks as they are presented. There are several ways to build deep learning models, and each company has its own approach. Popular architectures include convolutional neural networks (CNNs), recurrent neural networks (RNNs), and Long Short-Term Memory (LSTM) networks, which are a type of RNN.

Importance of Asking the Right Question in an Interview

Asking the right questions during an interview is essential for several reasons. First, it shows that you’re genuinely interested in the position and the company. It also allows you to gather information that can help you determine if the job is a good fit for you. Finally, asking questions will enable you to sell yourself and demonstrate why you’re the best candidate for the job.

When preparing for an interview, take some time to brainstorm questions that will show your interest in the position and the company. Avoid asking questions that can be answered with a quick Google search. Instead, focus on questions that require the interviewer to think about the answer and give you more information.

Below are some of the most common advanced questions asked in deep learning interviews. This article assumes a basic knowledge of deep learning.

Deep Learning Interview Questions

Below are the questions with detailed answers.

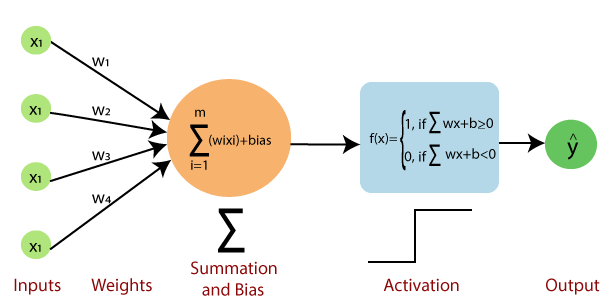

Q1. What is a perceptron in Deep Neural Networks?

A perceptron is an artificial neuron that simulates a biological neuron’s workings. It is the basic building block of a neural network. A perceptron consists of a set of input nodes and a single output node. Each input node is connected to the output node by a weight. The perceptron calculates the weighted sum of the input signals and outputs a signal if the sum is greater than a threshold value.
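The weighted-sum-and-threshold rule described above can be sketched in a few lines of plain Python. This is only an illustration: the function name `perceptron_output` and the AND-gate weights and threshold are assumptions chosen for the example, not values from any library.

```python
def perceptron_output(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum > threshold else 0


# Hypothetical weights and threshold that make the perceptron behave like a logical AND.
and_weights = [1.0, 1.0]
and_threshold = 1.5

# Evaluate the perceptron on every combination of two binary inputs.
and_table = {
    (a, b): perceptron_output([a, b], and_weights, and_threshold)
    for a in (0, 1)
    for b in (0, 1)
}
# Only the input (1, 1) produces a weighted sum (2.0) above the threshold (1.5).
```

In practice the weights are learned from data rather than set by hand, but the forward computation is the same.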

Frank Rosenblatt introduced the perceptron in the 1950s to simulate the workings of the human brain. It was one of the earliest artificial neural networks and remains the simplest form of a neural network.

The perceptron is used in various applications, including pattern recognition, data classification, and artificial intelligence.

Types of Perceptron:

1. A single-layer perceptron (SLP) is a supervised learning algorithm for classification. It consists of a single layer of neurons that connects the inputs directly to the outputs, so it can only learn linearly separable patterns.

2. A multi-layer perceptron (MLP) is a supervised learning algorithm for binary or multiclass classification. It consists of multiple layers of neurons: an input layer, one or more hidden layers, and an output layer.

The basic idea behind the operation of single-layer and multi-layer perceptrons is the same. In an MLP, each neuron in one layer is connected to every neuron in the next layer.

Q2. What are activation functions?

Activation functions are essential components of deep learning models. They are applied to the output of each neuron and introduce the non-linearity that lets a network learn more than a simple linear mapping.

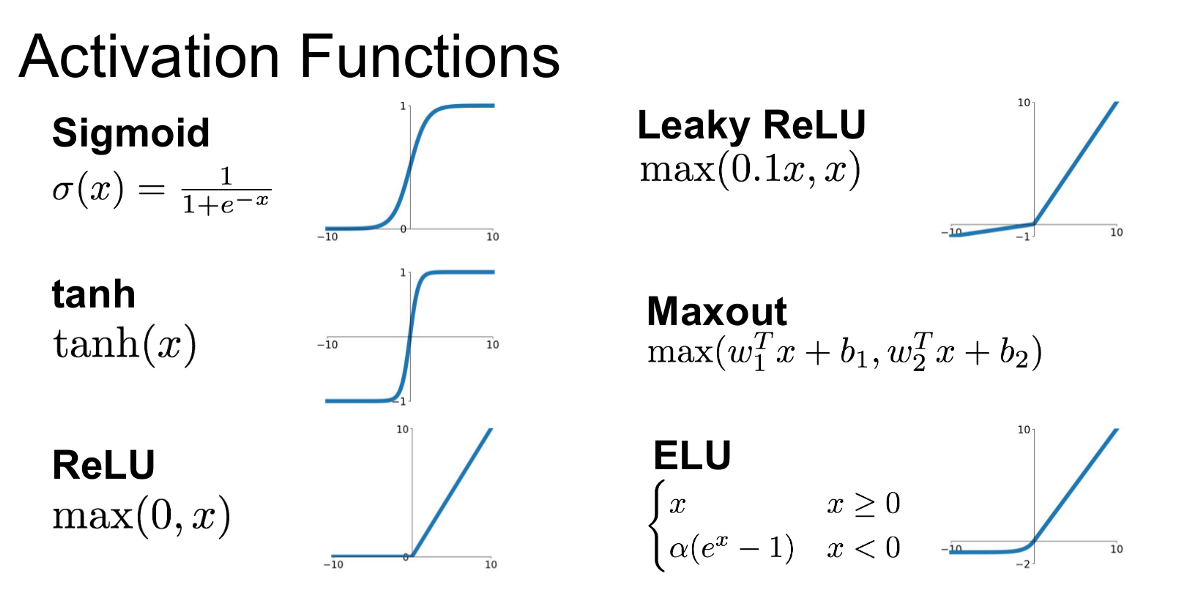

There are many different activation functions, but the most common ones are sigmoid, tanh, and ReLU.

Sigmoid activation functions are used in logistic regression models. They map input values to output values between 0 and 1.

Tanh activation functions are used in many types of neural networks. They are similar to sigmoid activation functions but map input values to output values between -1 and 1.

ReLU activation functions are used in many types of neural networks and are among the most popular choices for hidden layers. ReLU stands for rectified linear unit. A ReLU passes the input through unchanged when it is positive and outputs zero when it is negative.
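The three functions are short enough to write out directly. The sketch below uses only Python's `math` module and is meant to show the shapes of the functions, not a numerically hardened implementation (for example, `sigmoid` as written can overflow for very large negative inputs).

```python
import math


def sigmoid(x):
    """Squash x into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Squash x into the open interval (-1, 1)."""
    return math.tanh(x)


def relu(x):
    """Pass positive inputs through unchanged; output 0 for negative inputs."""
    return max(0.0, x)


# Compare the three functions on a few illustrative inputs.
sample_inputs = [-2.0, 0.0, 2.0]
activations = {
    "sigmoid": [sigmoid(x) for x in sample_inputs],
    "tanh": [tanh(x) for x in sample_inputs],
    "relu": [relu(x) for x in sample_inputs],
}
```

Note that sigmoid and tanh flatten out for large positive or negative inputs, while ReLU keeps growing linearly on the positive side.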

Q3. Difference between Supervised and Unsupervised learning.

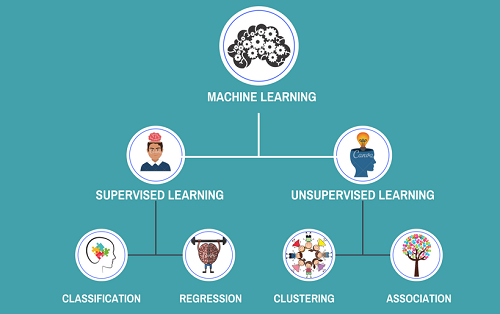

Deep learning algorithms can be broadly split into supervised and unsupervised categories. Supervised learning algorithms are trained using labeled data, where each example is a pair of an input and an output value. The goal is to learn a mapping from the input to the output. Unsupervised learning algorithms are trained using unlabeled data, where the goal is to learn some structure or intrinsic relationship in the data.

Supervised learning is the most common type of machine learning and has been successful in a wide variety of tasks, such as image classification, speech recognition, and natural language processing. Unsupervised learning is less commonly used but has been successful in tasks such as clustering and dimensionality reduction.

Q4. What are loss functions?

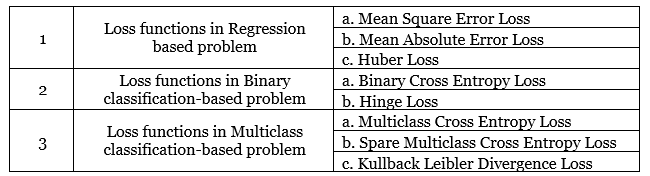

Mean squared error: This loss function is used for regression problems and measures the average of the squared differences between the predicted and actual values.

Binary cross entropy: This loss function is used for binary classification problems and measures the cross entropy between the predicted probabilities and the actual labels.

Categorical cross entropy: This loss function is used for multiclass classification problems and measures the cross entropy between the predicted class probabilities and the actual labels.
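To make the three definitions concrete, here is a minimal pure-Python sketch. The function names and the small `eps` clipping constant are assumptions made for this example; deep learning frameworks ship their own, more robust versions.

```python
import math


def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """y_true holds 0/1 labels; p_pred holds predicted probabilities of class 1."""
    total = 0.0
    for t, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)


def categorical_cross_entropy(y_true_one_hot, p_pred, eps=1e-12):
    """Each row of y_true_one_hot is a one-hot label; each row of p_pred sums to 1."""
    total = 0.0
    for true_row, pred_row in zip(y_true_one_hot, p_pred):
        total += -sum(t * math.log(max(p, eps)) for t, p in zip(true_row, pred_row))
    return total / len(y_true_one_hot)


mse_example = mean_squared_error([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
bce_example = binary_cross_entropy([1, 0], [0.5, 0.5])
cce_example = categorical_cross_entropy([[1, 0, 0]], [[0.5, 0.25, 0.25]])
```

In both cross-entropy examples the model assigns probability 0.5 to the correct class, so the loss equals ln 2 in each case.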

Q5. What are autoencoders in deep learning?

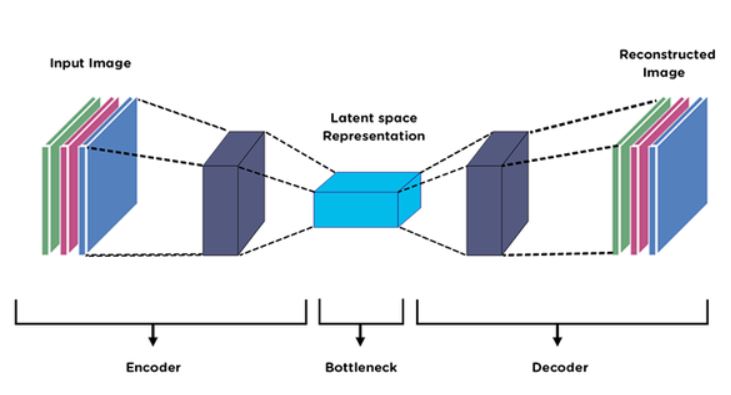

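An autoencoder is a neural network trained to reconstruct its input after passing it through a compressed internal representation. There are several types of autoencoders in deep learning, each with advantages and disadvantages. The most common types are: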

1. Denoising autoencoders: These are trained to reconstruct the original input from a corrupted version. This makes them robust to noise and able to learn features that are stable under small changes in the input.

2. Sparse autoencoders: These are trained to learn a sparse representation, i.e., one in which only a few units are active for any given input. This encourages them to learn local features with few dependencies.

3. Variational autoencoders: These are trained to maximize a lower bound on the likelihood of the data under the model. This enables them to learn complex distributions and generate new data by sampling from the learned distribution.

4. Adversarial autoencoders: These are trained with an adversarial objective, in the style of a generative adversarial network, that pushes the encoded representation toward a chosen prior distribution.

Q6. What is meant by data normalization?

1. Normalization can help improve the performance of machine learning algorithms.

2. Normalization can make it easier to compare different data sets.

3. Normalization can help you find patterns in your data that you might not have noticed.

4. Normalization can improve the stability of machine learning models.

You can use several methods to normalize your data; the right choice depends on your data and goals. Common techniques include min-max scaling and z-score scaling (also called standardization).
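As a rough illustration, two widely used normalization techniques, min-max scaling and z-score scaling, can be written with Python's standard library. The function names and the `heights` data are made up for this example, and both functions assume the values are not all identical (otherwise they would divide by zero).

```python
import statistics


def min_max_scale(values):
    """Rescale values linearly so the smallest maps to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def z_score_scale(values):
    """Shift and rescale values to have mean 0 and (population) standard deviation 1."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]


# Hypothetical feature column, e.g. heights in centimetres.
heights = [150.0, 160.0, 170.0, 180.0, 190.0]

heights_min_max = min_max_scale(heights)  # [0.0, 0.25, 0.5, 0.75, 1.0]
heights_z = z_score_scale(heights)
```

Min-max scaling is sensitive to outliers because a single extreme value sets the range, which is one reason z-score scaling is often preferred for inputs to neural networks.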

Q7. What is forward propagation?

Q8. What is backward propagation?

Q9. What are hyperparameters in Deep Learning?

Q10. What are the different layers in a deep learning network?

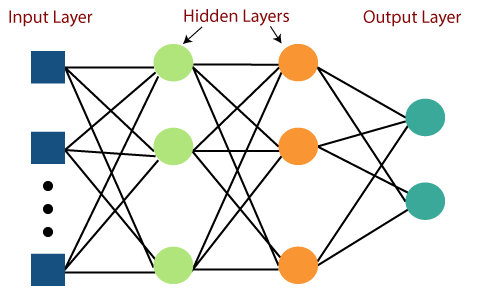

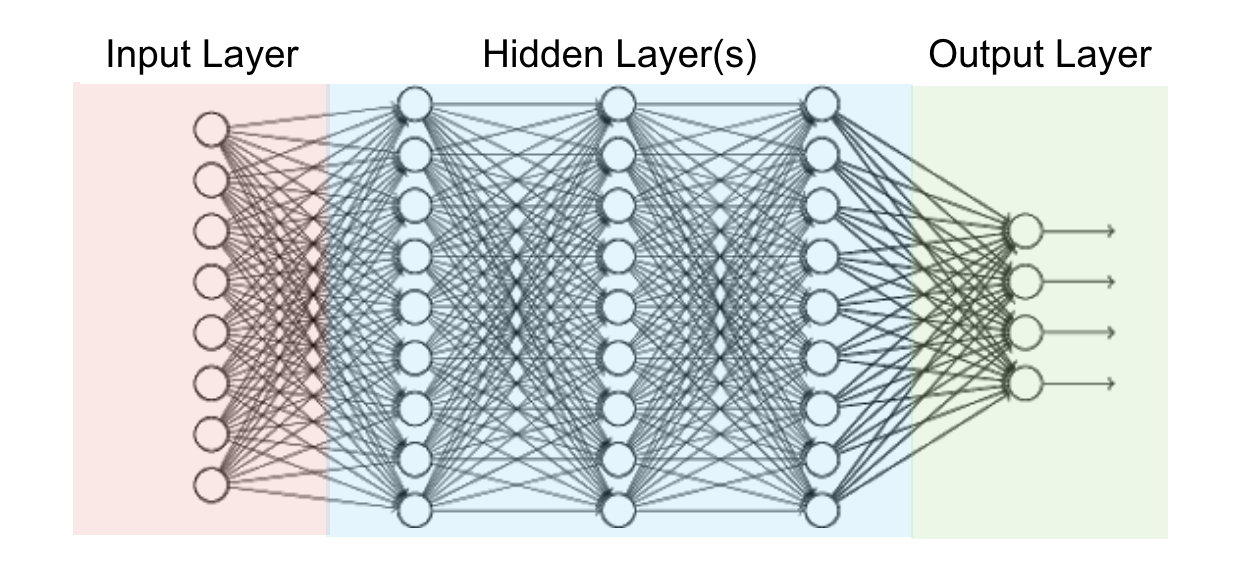

Deep learning networks are often described as being composed of multiple layers. These layers are made up of interconnected processing nodes, or neurons, each performing a simple operation on the data it receives. The output of one layer becomes the input of the next layer, and the network’s final output can be used to make predictions or decisions. The simplest networks contain just two layers: an input layer and an output layer. However, most deep learning networks have multiple hidden layers between the input and output layers. The number of hidden layers and the number of neurons in each layer can vary, and the specific configuration of a network is determined by the problem it is trying to solve.

The input layer of a deep learning network is where the data enters the network. This data can be images, text, or any other type of data that can be represented numerically. The output layer is where the network produces its predictions or decisions.
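The idea that each layer's output feeds the next layer can be sketched as a tiny forward pass. Everything here is illustrative: the helper `dense_layer`, the layer sizes, and the hand-picked weights and biases are assumptions for the example, not a trained model.

```python
import math


def relu(x):
    return max(0.0, x)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: each neuron applies activation(w . x + b)."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


# Hypothetical parameters: 2 inputs -> 2 hidden neurons -> 1 output neuron.
hidden_weights = [[0.5, -0.5], [1.0, 1.0]]
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, -1.0]]
output_biases = [0.0]


def forward(inputs):
    """Input layer -> hidden layer (ReLU) -> output layer (sigmoid)."""
    hidden = dense_layer(inputs, hidden_weights, hidden_biases, relu)
    return dense_layer(hidden, output_weights, output_biases, sigmoid)


prediction = forward([2.0, 1.0])
```

For the input `[2.0, 1.0]`, the hidden layer produces `[0.5, 2.0]`, and the output neuron applies a sigmoid to `0.5 - 2.0 = -1.5`.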

Q11. What is the Convolutional layer in deep learning?

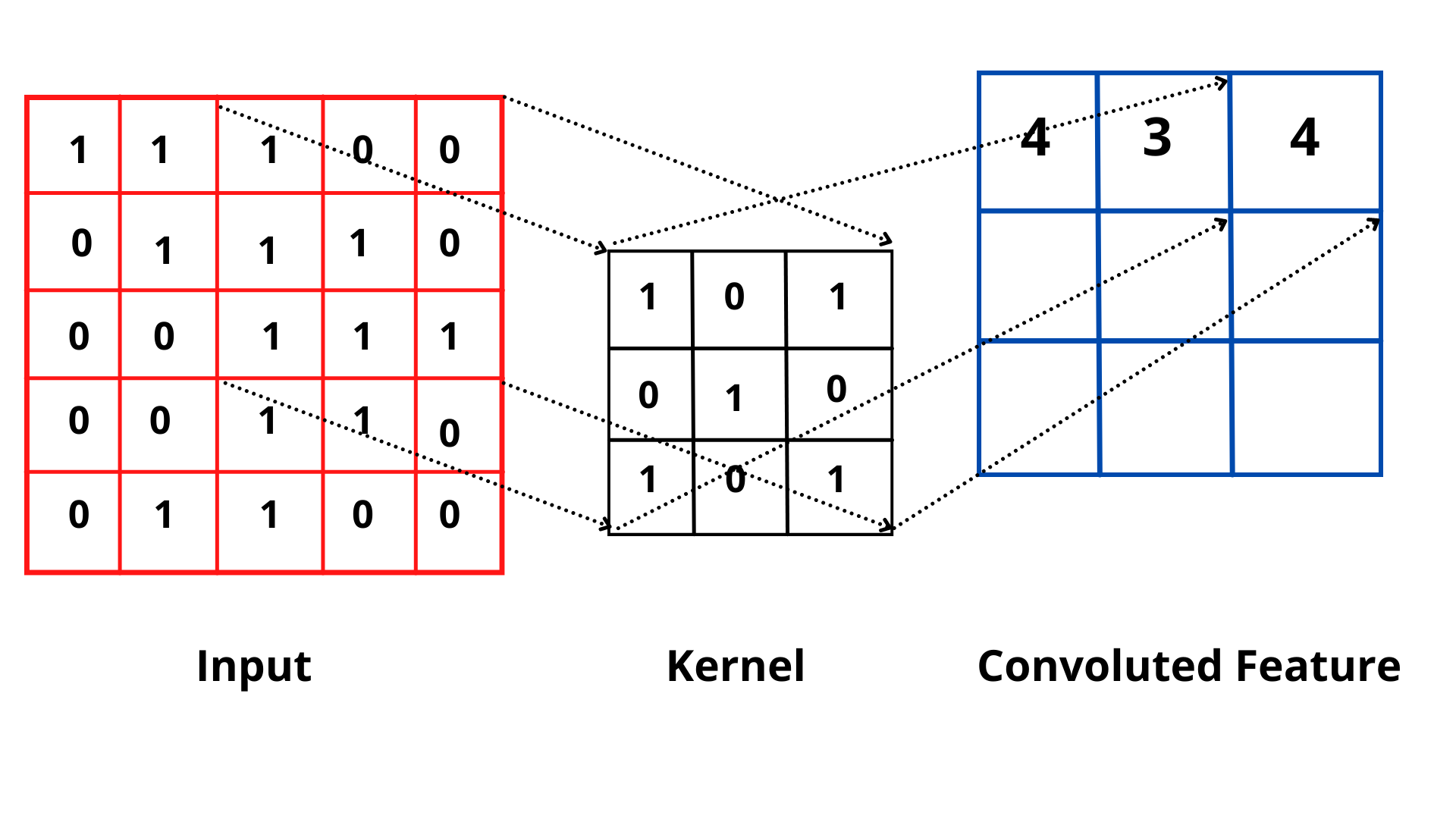

A convolutional layer is a critical component of a convolutional neural network (CNN), a type of deep learning model. It comprises a set of neurons that each have a small receptive field, meaning each neuron looks at only a small patch of the input. The receptive fields are tiled so that they overlap and together cover the whole input, and the same weights (the filter, or kernel) are shared across all positions. This lets the layer detect a local feature wherever it appears in the input. For example, a convolutional layer might learn to detect the presence of an eye anywhere in an image.
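A minimal sketch of the sliding-window computation behind a convolutional layer is shown below, assuming a single-channel input, stride 1, and no padding. The function name `conv2d_valid` and the hand-written edge-detecting kernel are assumptions for illustration; in a real CNN the kernel values are learned.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (stride 1, no padding) and, at each
    position, sum the element-wise products of the kernel and the patch.
    As in most deep learning libraries, the kernel is not flipped."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A tiny image that is dark (0) on the left and bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hypothetical kernel that responds to a dark-to-bright vertical edge.
vertical_edge_kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d_valid(image, vertical_edge_kernel)  # [[0, 2, 0], [0, 2, 0]]
```

The same four kernel weights are reused at every position, and the output is non-zero only where the edge sits under the window.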

Q12. What is the Dropout layer in deep learning?

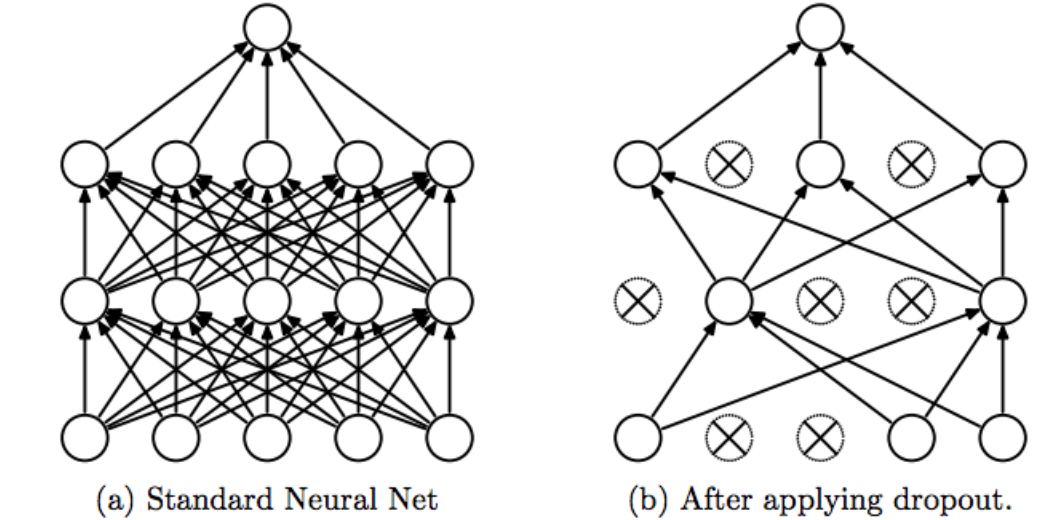

In deep learning, a dropout layer randomly “drops out” (ignores) a fraction of its input neurons during training. The goal of dropout is to prevent overfitting by stopping neurons from relying too heavily on one another. When using dropout, it is essential to remember that the dropped-out neurons are not removed from the network. They are only ignored for that training step, and all neurons are active at inference time. A dropout layer also does not change the shape of the data: its output has the same number of units as its input.

Dropout is typically used with other regularization techniques, such as weight decay and early stopping.
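One common way to implement this is "inverted" dropout, where the surviving activations are scaled up during training so that nothing needs to change at inference time. The sketch below assumes that variant; the function name, the `rng` argument, and the drop probability of 0.5 are choices made for the example, and `drop_prob` must be less than 1.

```python
import random


def dropout(activations, drop_prob, training, rng):
    """Inverted dropout: during training, zero each activation with probability
    drop_prob and scale the survivors by 1 / (1 - drop_prob). At inference
    time the activations pass through unchanged."""
    if not training or drop_prob == 0.0:
        return list(activations)
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


rng = random.Random(0)  # fixed seed so the example is repeatable
hidden = [1.0] * 10

train_out = dropout(hidden, drop_prob=0.5, training=True, rng=rng)
eval_out = dropout(hidden, drop_prob=0.5, training=False, rng=rng)
```

With a drop probability of 0.5, every entry of `train_out` is either 0.0 (dropped) or 2.0 (kept and rescaled), while `eval_out` is identical to `hidden`.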

Q13. What is the Flattening layer in deep learning?

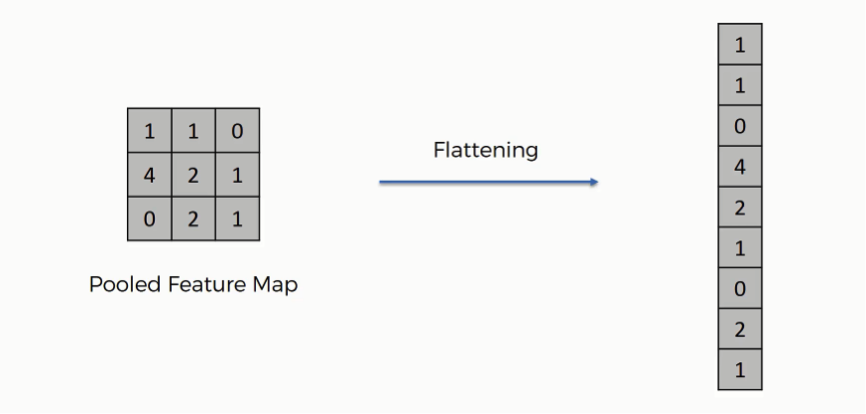

The flattening layer reshapes multi-dimensional data, such as the feature maps produced by convolutional and pooling layers, into a one-dimensional vector. It has no learnable parameters.

The main benefit of a flattening layer is that it lets the output of convolutional layers be fed into fully connected layers, which expect a one-dimensional input. Flattening does not reduce the amount of data or the number of parameters to be learned: the vector contains exactly the same values, only rearranged.
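In code, flattening is nothing more than reading the values out in a fixed order. Here is a small sketch assuming the data is a nested list laid out as (channels, height, width); the function name and the sample values are illustrative only.

```python
def flatten(feature_maps):
    """Turn a (channels, height, width) nested list into a single flat list,
    reading channel by channel and row by row."""
    return [value for channel in feature_maps for row in channel for value in row]


# Two hypothetical 2x2 feature maps.
feature_maps = [
    [[1, 2], [3, 4]],
    [[5, 6], [7, 8]],
]

flat = flatten(feature_maps)  # [1, 2, 3, 4, 5, 6, 7, 8]
```

The flat list has 2 x 2 x 2 = 8 entries, the same number of values as the input, which is why the layer needs no parameters.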

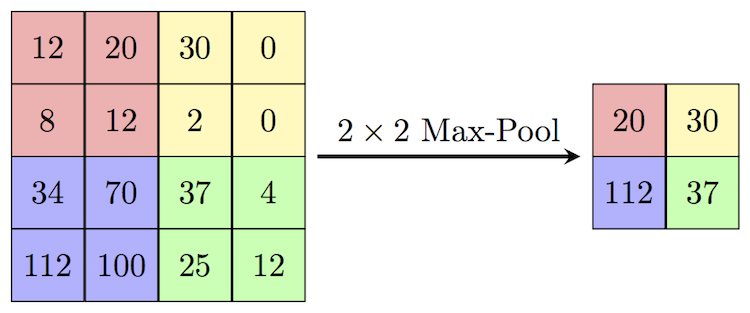

Q14. What is the Max Pooling layer in deep learning?

Max pooling is a layer typically used in convolutional neural networks. It operates on a feature map by sliding a window over it and taking the maximum value in each window, which results in a new, smaller feature map.

Max pooling has several benefits. First, although the pooling layer itself has no learnable parameters, it shrinks the feature maps, which reduces computation and the number of parameters in later layers and can help reduce overfitting. Second, it can make the model more robust to small shifts in the input.

There are a few things to keep in mind when using max pooling. First, the window size should be smaller than the input size. Second, the stride (the distance between one window and the next) is usually set equal to the window size so that the windows do not overlap, although overlapping windows are also possible.
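The window-and-maximum operation can be sketched as follows, assuming a single-channel feature map with a 2x2 window and a stride of 2 as defaults. The function name `max_pool2d` and the sample values are assumptions for this example.

```python
def max_pool2d(feature_map, window=2, stride=2):
    """Slide a window x window square over the feature map in steps of
    `stride` and keep only the largest value from each window."""
    out_h = (len(feature_map) - window) // stride + 1
    out_w = (len(feature_map[0]) - window) // stride + 1
    return [
        [
            max(
                feature_map[i * stride + di][j * stride + dj]
                for di in range(window)
                for dj in range(window)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [3, 2, 4, 6],
]

pooled = max_pool2d(feature_map)  # [[4, 5], [3, 7]]
```

A 4x4 map becomes a 2x2 map, so each pooling step with these settings keeps one value out of every four.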

Q15. What is the learning rate in deep learning?

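The learning rate controls how large a step the optimizer takes each time it updates the weights. Common learning rate strategies include:

1. Constant learning rate: The learning rate stays the same for every iteration.

2. Exponential learning rate: The learning rate is multiplied by a fixed decay factor each iteration, so it shrinks exponentially.

3. Step learning rate: The learning rate is held constant and then reduced by a fixed factor after every set number of iterations.

4. Adaptive learning rate: The learning rate is adjusted automatically during training, typically based on the gradients seen so far.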
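The first three schedules are simple enough to write as functions of the iteration number. The sketch below shows one common way to define them; the function names and the default decay values (0.9, 0.5, every 10 steps) are assumptions picked for illustration, and frameworks offer their own schedulers with different parameterizations.

```python
def constant_lr(initial_lr, step):
    """The learning rate never changes."""
    return initial_lr


def exponential_lr(initial_lr, step, decay_rate=0.9):
    """Multiply the learning rate by decay_rate once per step."""
    return initial_lr * decay_rate ** step


def step_lr(initial_lr, step, drop_factor=0.5, steps_per_drop=10):
    """Hold the learning rate, then multiply it by drop_factor every steps_per_drop steps."""
    return initial_lr * drop_factor ** (step // steps_per_drop)


# Learning rate at a few selected steps under each schedule.
schedule_preview = {
    step: (constant_lr(0.1, step), exponential_lr(0.1, step), step_lr(0.1, step))
    for step in (0, 9, 10, 25)
}
```

Adaptive schemes are not shown here because they depend on the gradients observed during training rather than on the step count alone.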

Q16. What is gradient descent in deep learning?

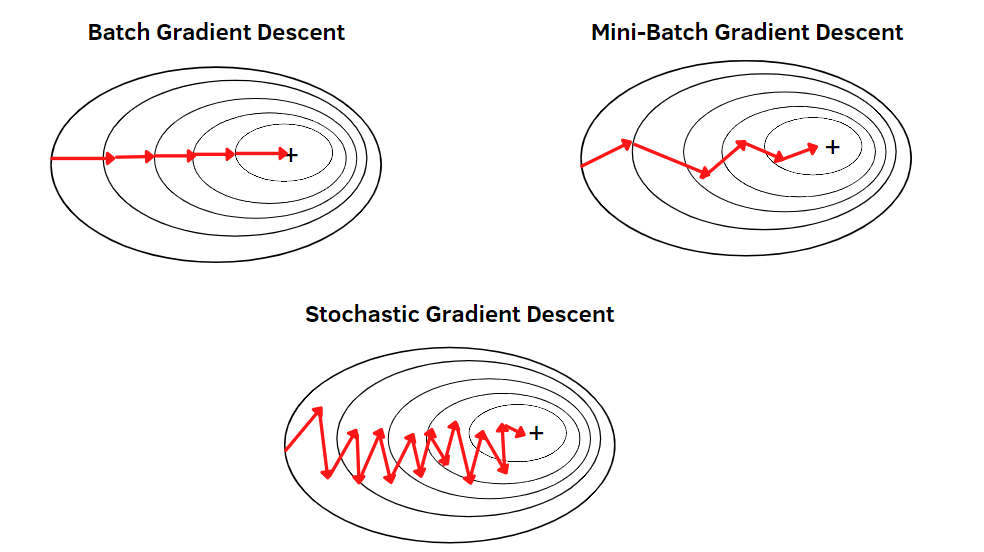

Gradient descent is a key algorithm in deep learning. It is an optimization algorithm used to minimize a cost function, which measures how poorly the model is performing and is typically a function of the model’s weights. The goal of gradient descent is to find the values of the weights that minimize the cost function.

Gradient descent is an iterative algorithm. It starts with random values for the weights and computes the cost for those weights. It then computes the gradient of the cost with respect to the weights, moves the weights a small step in the opposite direction, and repeats the process. The algorithm continues until the cost converges to a minimum.

There are different variants of gradient descent. The most common variant is called stochastic gradient descent. In stochastic gradient descent, the cost and its gradient are computed for a single training example at a time, and the weights are updated after each example.
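To see the iteration in action, here is a minimal sketch that minimizes a one-dimensional toy cost, (w - 3)^2, whose minimum is at w = 3. The cost function, the starting point, and the learning rate of 0.1 are all assumptions chosen to keep the example small.

```python
def cost(w):
    """Toy cost function with its minimum at w = 3."""
    return (w - 3.0) ** 2


def gradient(w):
    """Derivative of the toy cost with respect to w."""
    return 2.0 * (w - 3.0)


def gradient_descent(w_start, learning_rate=0.1, num_steps=100):
    """Repeatedly move w a small step against the gradient of the cost."""
    w = w_start
    for _ in range(num_steps):
        w -= learning_rate * gradient(w)
    return w


w_final = gradient_descent(w_start=0.0)
```

With this learning rate, each step shrinks the distance to the minimum by a factor of 0.8, so after 100 steps `w_final` is very close to 3. In a real network the gradient is computed by backpropagation over many weights, but the update rule has the same form.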

Variants of gradient descent:

A few variants of gradient descent are commonly used in deep learning. The most popular ones are stochastic gradient descent (SGD), mini-batch gradient descent, and adaptive gradient descent.

1. Stochastic Gradient Descent:

Stochastic gradient descent (SGD) is a simple yet efficient approach to fitting models, from linear models to deep networks. It is particularly useful when the number of training examples is large. The cost of one pass over the data scales linearly with the number of samples, so SGD can be used to train models on massive datasets.

SGD works by iteratively updating the model weights in a direction that reduces the cost function. The cost function is a measure of how well the model predicts the labels of the training examples.

2. Mini Batch Gradient Descent:

Mini-batch gradient descent is a variation of the gradient descent algorithm that splits the training data into small batches and performs a gradient descent update on each batch. The advantage of mini-batch gradient descent over stochastic gradient descent is that averaging over a batch gives less noisy gradient estimates, which often leads to more stable convergence. The disadvantage is that each update is more computationally expensive than a single-example SGD update.
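The difference between the variants comes down to how many examples contribute to each update. The sketch below fits a one-parameter model, y = w * x, to a tiny noiseless dataset; the function name, the dataset, and the hyperparameters are assumptions made for illustration. Setting `batch_size=1` gives stochastic gradient descent, and setting it to the dataset size gives full-batch gradient descent.

```python
import random


def mini_batch_gradient_descent(xs, ys, batch_size, learning_rate=0.05,
                                epochs=200, seed=0):
    """Fit y = w * x by minimizing squared error, one mini-batch at a time."""
    rng = random.Random(seed)
    w = 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the examples in a new order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Average gradient of (w * x - y)^2 over the examples in the batch.
            grad = sum(2.0 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)
            w -= learning_rate * grad
    return w


# Toy data generated from y = 2x, so the best weight is 2.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.0, 2.0, 4.0, 6.0]

w_sgd = mini_batch_gradient_descent(xs, ys, batch_size=1)
w_mini = mini_batch_gradient_descent(xs, ys, batch_size=2)
w_full = mini_batch_gradient_descent(xs, ys, batch_size=4)
```

On this noiseless toy problem all three settings recover a weight close to 2; the trade-offs between them only become visible on larger, noisier datasets.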

3. Adaptive Gradient Descent:

One of the critical challenges in deep learning is that the data is often noisy and heterogeneous, making it challenging to train a model that can generalize well to new data. A popular approach to overcome this challenge is to use adaptive gradient descent methods, which adapt the learning rate, often separately for each parameter, based on the gradients observed during training. Well-known examples include AdaGrad, RMSProp, and Adam.

There are several different adaptive gradient descent methods, but they all share the same goal: to choose step sizes that let the model learn from the data as efficiently as possible.

There are several benefits to using adaptive gradient descent methods. One is that they can help the model converge faster to a good solution. Another is that they usually need less manual tuning of the learning rate. In general, adaptive gradient descent methods are a powerful tool for deep learning and can help improve your model’s performance.
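As one concrete example of an adaptive method, here is a minimal sketch of AdaGrad, which divides each parameter's step by the square root of the sum of its past squared gradients. The toy cost function x^2 + 100 * y^2, the starting point, and the hyperparameters are assumptions chosen for illustration; other adaptive methods such as RMSProp and Adam use different update rules.

```python
import math


def adagrad(gradient_fn, start, learning_rate=0.5, num_steps=100, eps=1e-8):
    """AdaGrad: each parameter gets its own effective step size, which
    shrinks as that parameter's squared gradients accumulate."""
    params = list(start)
    squared_grad_sums = [0.0] * len(params)
    for _ in range(num_steps):
        grads = gradient_fn(params)
        for i, g in enumerate(grads):
            squared_grad_sums[i] += g * g
            params[i] -= learning_rate * g / (math.sqrt(squared_grad_sums[i]) + eps)
    return params


def quadratic_gradient(params):
    """Gradient of the toy cost x^2 + 100 * y^2, which is far steeper in y than in x."""
    x, y = params
    return [2.0 * x, 200.0 * y]


final_params = adagrad(quadratic_gradient, start=[1.0, 1.0])
```

Plain gradient descent with a single learning rate of 0.5 would diverge along the steep `y` direction of this cost, whereas the per-parameter scaling lets both coordinates move toward the minimum at (0, 0).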

Conclusion

There is a lot of debate these days about machine learning vs. deep learning. Both are hot topics in the field of artificial intelligence (AI) and have a lot of potential applications. So, what’s the difference between the two? Machine learning is a branch of AI that focuses on creating algorithms that can learn from data and improve over time. Deep learning is a subset of machine learning that uses multi-layer neural networks to learn representations directly from data.

Both machine learning and deep learning are powerful tools that can be used to solve complex problems. However, deep learning is often seen as the more powerful tool because it can learn more complex patterns than traditional machine learning methods.

One significant difference between traditional machine learning and deep learning is the amount of data required. Traditional machine learning can often work with smaller data sets, while deep learning usually requires large data sets.

Major points of this article:

1. First, we discussed deep learning and its use in current technology, as well as the importance of asking the right questions in an interview.

2. After that, we covered many questions related to deep learning, such as activation functions, layer architecture, and gradient descent.

3. Finally, we concluded the article by discussing the key differences between machine learning and deep learning.

That is all for today. I hope you enjoyed reading this article. In the future, I will try to cover more deep learning questions that are important from a data science interview perspective.

Thanks for reading.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.