“The development of full artificial intelligence could spell the end of the human race. It would take off on its own and re-design itself at an ever-increasing rate. Humans, limited by slow biological evolution, couldn’t compete and would be superseded.” (Stephen Hawking)

Introduction

This quote from one of the most prominent scientists of the century resonates with, and currently haunts, many of the top AI practitioners in the industry. So let us see what prompted this line of thinking.

This worry stems from the recent surge in the popularity and adoption of Generative Artificial Intelligence (Gen AI) and the paradigm shift it has brought to our everyday lives. Some people feel that, if left unregulated, this technology could be misused and manipulated to cause harm to the human race. So today’s blog is all about the nitty-gritty of Gen AI and how we can both benefit and suffer from it.

This article was published as a part of the Data Science Blogathon.


What is Gen AI?

Gen AI is a type of Artificial Intelligence that generates synthetic content in the form of written text, images, audio, or video. It does so by recognizing the inherent patterns in existing data and then using this knowledge to generate new and unique outputs. Although Gen AI has only recently entered everyday use, the technology has existed since the 1960s, when it was first used in chatbots. In the past decade, the introduction of GANs in 2014 convinced people that Gen AI could create authentic-looking images, videos, and audio of real people.

Traditional Machine Learning converts logic problems into statistical problems, allowing algorithms to learn patterns and solve them. Instead of relying on explicitly coded logic, an image classifier, for example, is trained on millions of labeled pictures of cats and dogs. However, this approach gives the model no structural understanding of the objects. Gen AI reverses this concept: it learns the patterns in the data and generates new content that fits those patterns. Although it can create more pictures of cats and dogs, it does not possess conceptual understanding the way humans do. It simply matches, recreates, or remixes patterns to generate similar outputs.
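The learn-then-generate idea can be sketched with the simplest generative model imaginable: a single Gaussian distribution. This is a toy under stated assumptions, not how production Gen AI works (those models are deep neural networks), and the training numbers and function names below are hypothetical. Here, “learning the pattern” means estimating the mean and spread of the data, and “generating” means drawing new values from that fitted distribution.

```python
import random
import statistics

def fit_gaussian(data):
    # "Learn the pattern": estimate the mean and (population) standard deviation.
    return statistics.fmean(data), statistics.pstdev(data)

def generate(mu, sigma, n, seed=None):
    # "Generate new content": draw fresh samples from the learned distribution.
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(n)]

# Hypothetical training data, e.g. the weights (kg) of a few cats.
training_data = [3.9, 4.2, 4.8, 5.1, 4.5, 3.7, 4.4, 5.0]
mu, sigma = fit_gaussian(training_data)
new_samples = generate(mu, sigma, n=5, seed=42)
print(f"learned mean={mu:.2f}, std={sigma:.2f}")
print("generated:", [round(x, 2) for x in new_samples])
```

The generated values resemble the training data statistically, yet the model knows nothing about what a cat is, which mirrors the point above about pattern matching without conceptual understanding.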

Since 2022, Gen AI has taken the world by storm, so much so that you are sure to hear the term at least once in almost every business meeting. Big Think called it the “Technology of the Year,” a claim borne out by the amount of venture capital (VC) funding that Generative AI startups are attracting. Tech experts expect the technology to grow rapidly over the next five to ten years, breaking boundaries and conquering new fields.

Types of Gen AI Models

Generative Adversarial Networks (GANs)

Features of GANs

- Two Neural Networks: GANs consist of two neural networks pitted against each other: the generator and the discriminator. The generator network takes random noise as input and generates synthetic data, such as images or text. On the other hand, the discriminator network tries to distinguish between the generated data and actual data from a training set.

- Adversarial Training: The two networks engage in a competitive, iterative training process. The generator aims to produce synthetic data indistinguishable from real data, while the discriminator seeks to accurately classify the real and generated data. As training progresses, the generator learns to create more realistic samples, and the discriminator improves its ability to distinguish between real and fake data.

- Mutual Improvement: The adversarial nature of GANs leads to a mutual improvement between the generator and discriminator. As the generator learns to create more realistic data, it becomes harder for the discriminator to distinguish between actual and generated samples. This prompts the discriminator to become more discerning, pushing the generator to produce more convincing examples. GANs can achieve high-quality generation of complex and realistic data through this feedback loop.
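To make the generator/discriminator loop concrete, here is a deliberately tiny sketch in plain Python, assuming one-dimensional data. The “real” data is drawn from a normal distribution with mean 4, the generator is a hypothetical linear map x = a * z + b, and the discriminator is a logistic-regression classifier. Real GANs use deep networks and a framework with automatic differentiation, so the hand-written gradients here are purely illustrative.

```python
import math
import random

def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

class Generator:
    """Toy generator: turns noise z ~ N(0, 1) into x = a * z + b."""
    def __init__(self):
        self.a, self.b = 1.0, 0.0

    def sample(self, z):
        return self.a * z + self.b

class Discriminator:
    """Toy discriminator: D(x) = sigmoid(w * x + c), read as P(x is real)."""
    def __init__(self):
        self.w, self.c = 0.0, 0.0

    def prob_real(self, x):
        return sigmoid(self.w * x + self.c)

def discriminator_step(d, real, fake, lr=0.05):
    # Gradient descent on -log D(real) - log(1 - D(fake)), averaged over the batch.
    gw = gc = 0.0
    for xr, xf in zip(real, fake):
        pr, pf = d.prob_real(xr), d.prob_real(xf)
        gw += -(1.0 - pr) * xr + pf * xf
        gc += -(1.0 - pr) + pf
    d.w -= lr * gw / len(real)
    d.c -= lr * gc / len(real)

def generator_step(g, d, noise, lr=0.05):
    # Non-saturating generator loss -log D(G(z)), differentiated through x = a * z + b.
    ga = gb = 0.0
    for z in noise:
        pf = d.prob_real(g.sample(z))
        dloss_dx = -(1.0 - pf) * d.w
        ga += dloss_dx * z
        gb += dloss_dx
    g.a -= lr * ga / len(noise)
    g.b -= lr * gb / len(noise)

def train(steps=200, batch=64, seed=0):
    rng = random.Random(seed)
    g, d = Generator(), Discriminator()
    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]  # "real" data: N(4, 1)
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [g.sample(z) for z in noise]
        discriminator_step(d, real, fake)  # discriminator learns to tell real from fake
        generator_step(g, d, noise)        # generator learns to fool the updated discriminator
    return g, d

g, d = train()
print(f"generator offset b={g.b:.2f}, scale a={g.a:.2f}")
```

Training typically pushes the generator’s offset b toward the real mean of 4, but, as in full-scale GANs, the two players can keep oscillating around that point instead of settling. Because this discriminator can only draw a single threshold on the number line, it mostly reacts to differences in the mean, which is one reason practical GANs need far more expressive networks.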

Variational Auto Encoders (VAEs)

- Variational Autoencoders (VAEs) are generative models that aim to learn a compressed and continuous representation of input data. VAEs consist of an encoder network that maps input data, such as images or text, to a lower-dimensional latent space. This latent space captures the underlying structure and features of the input data in a continuous and probabilistic manner.

- VAEs employ a probabilistic approach to encoding and decoding data. Instead of producing a single point in the latent space, the encoder generates a probability distribution over the latent variables. The decoder network then takes a sample from this distribution and reconstructs the original input data. This probabilistic nature allows VAEs to capture the uncertainty and diversity present in the data.

- VAEs are trained using a combination of reconstruction loss and a regularization term called the Kullback-Leibler (KL) divergence. The reconstruction loss encourages the decoder to reconstruct the original input data accurately. Simultaneously, the KL divergence term regularizes the latent space by encouraging the learned latent distribution to match a prior distribution, usually a standard Gaussian distribution. This regularization promotes the smoothness and continuity of the latent representation.
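The two loss terms, plus the sampling step between encoder and decoder, can be sketched in a few lines of plain Python. This assumes, as is common, that the encoder outputs a mean vector mu and a log-variance vector log_var for each input, and it uses squared error as the reconstruction loss (binary cross-entropy is another frequent choice). Sampling uses the reparameterization trick, z = mu + sigma * eps, which keeps the step differentiable with respect to mu and log_var. The encoder and decoder networks are omitted, and the function names are illustrative.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    # z = mu + sigma * eps with eps ~ N(0, 1); sigma = exp(0.5 * log_var).
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    # Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) ).
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))

def reconstruction_loss(x, x_hat):
    # Squared error between the input and the decoder's reconstruction.
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))

def vae_loss(x, x_hat, mu, log_var):
    # Total loss = reconstruction term + KL regularizer.
    return reconstruction_loss(x, x_hat) + kl_to_standard_normal(mu, log_var)

rng = random.Random(0)
mu, log_var = [0.3, -0.2], [-1.0, 0.5]  # hypothetical encoder outputs for one input
z = reparameterize(mu, log_var, rng)    # latent sample passed to the decoder
print("z:", z, "KL:", kl_to_standard_normal(mu, log_var))
```

With mu and log_var all zeros, the KL term is exactly 0, because the learned distribution already equals the standard Gaussian prior; any deviation makes it positive, which is the regularizing pressure described above.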

Transformer-Based Models

- Self-Attention: The core component of the Transformer architecture is the self-attention mechanism. It allows the model to capture dependencies and relationships between words or tokens in the input sequence. Self-attention computes attention weights for each token by considering its interactions with all other tokens in the sequence. This mechanism enables the model to weigh the importance of different words based on their relevance to each other, allowing for comprehensive context understanding.

- Encoder-Decoder Structure: The original Transformer architecture consists of an encoder and a decoder, although many generative models, such as GPT, use only the decoder. The encoder processes the input sequence and encodes it into representations that capture the contextual information. The decoder, in turn, generates an output sequence by attending to the encoder’s output representations and using self-attention within the decoder itself. This encoder-decoder structure is particularly effective for tasks like machine translation, where the model needs to understand the source sequence and generate a target sequence.

- Positional Encoding and Feed-Forward Networks: Transformers incorporate positional encoding to provide information about the order of the tokens in the input sequence. Since self-attention is order-agnostic, positional encoding helps the model differentiate the positions of the tokens. This is achieved by adding sinusoidal functions of different frequencies to the input embeddings. Additionally, Transformers use feed-forward networks to process the encoded representations. These networks consist of fully connected layers with non-linear activation functions, which enable the model to capture complex patterns and dependencies in the data.
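Self-attention and sinusoidal positional encoding can both be sketched with plain Python lists. This follows the scaled dot-product formulation softmax(QK^T / sqrt(d_k))V from the original Transformer design; the projection matrices w_q, w_k, and w_v would be learned in a real model, and here they are simply passed in (identity matrices in the example). Multi-head attention, masking, and batching are left out, and the toy embeddings are hypothetical.

```python
import math

def matmul(a, b):
    # (n x m) @ (m x p) -> (n x p), using plain nested lists.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(q, k, v):
    # weights[i][j] = softmax_j(q_i . k_j / sqrt(d_k)); output_i = sum_j weights[i][j] * v_j
    d_k = len(k[0])
    scores = [[sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k] for qi in q]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights

def self_attention(x, w_q, w_k, w_v):
    # Queries, keys, and values are all projections of the same input sequence x.
    return scaled_dot_product_attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))

def sinusoidal_positional_encoding(seq_len, d_model):
    # PE[pos][2i] = sin(pos / 10000^(2i/d_model)), PE[pos][2i+1] = cos(same angle)
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe

# Three hypothetical 2-dimensional token embeddings and identity projections.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
pe = sinusoidal_positional_encoding(len(x), 2)
x_with_pos = [[a + b for a, b in zip(tok, p)] for tok, p in zip(x, pe)]
output, attn = self_attention(x_with_pos, identity, identity, identity)
```

Adding the positional encodings to the embeddings before attention, as in the last lines, is what gives the model the order information that attention alone lacks; each row of attn is a probability distribution over the tokens being attended to.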

Some Prominent Gen AI Products

Some prominent Gen AI products that have sparked public interest include Dall-E, ChatGPT, and Bard.

Dall-E

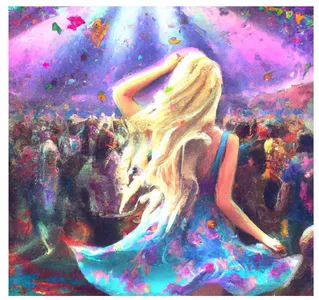

Dall-E is a GenAI model developed by Open AI, that allows you to create unique and creative images from textual descriptions. Below is an example of an image created by Dall-E with the prompt “a woman at a music festival twirling her dress, in front of a crowd with glitter falling from the top, long colorful wavy blonde hair, wearing a dress, digital painting.”

ChatGPT

ChatGPT is a conversational AI model developed by OpenAI. It engages in dynamic, natural-sounding conversations, providing intelligent responses to user queries across a wide range of topics. The image below illustrates how ChatGPT is built to provide intelligent solutions to your queries.

Bard

Bard is a conversational AI service developed by Google and initially powered by its LaMDA language model. It was hastily released in response to Microsoft’s integration of GPT into Bing search. Unfortunately, Bard’s debut was flawed, but at Google I/O 2023, Google broadened its availability to 180 countries and territories.

Applications of Gen AI

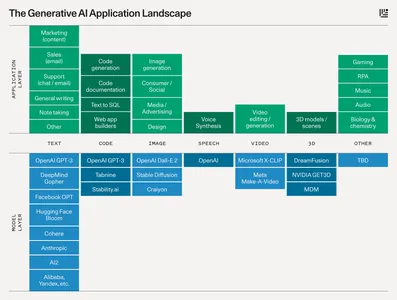

Since its emergence, Gen AI has never lost relevance. People have been embracing its applicability in newer and newer fields with the passing days. Now it has marked its presence in most of the activities in our daily life. The image below shows the Gen AI products available in each domain, from text, speech, audio, and video to writing computer codes.

Gen AI finds applications in the fields below, although this list is not exhaustive.

- Content Generation: Automatically generate text, images, and videos across various domains.

- Data Augmentation: Use synthetic data to enhance training datasets for machine learning models.

- Virtual Reality and Gaming: Create immersive virtual worlds and realistic game environments.

- Image and Video Editing: Automatically edit and enhance images and videos.

- Design and Fashion: Generate new clothing, furniture, or architecture designs.

- Music and Sound Generation: Create personalized music compositions and sound effects.

- Personal Assistants and Chatbots: Develop intelligent virtual assistants and chatbots for various applications.

- Simulation and Training: Simulate realistic scenarios or generate synthetic data for training purposes.

- Anomaly Detection: Identify and flag anomalies in datasets or systems.

- Medical Imaging and Diagnosis: Aid in medical image analysis and assist in diagnosis.

- Language Translation: Translate text or speech between different languages.

- Style Transfer: Apply artistic styles to images or videos.

- Data Generation for Testing: Generate diverse data for testing and evaluating algorithms or systems.

- Storytelling and Narrative Generation: Create interactive and dynamic narratives.

- Drug Discovery: Assist in the discovery and design of new drugs.

- Financial Modeling: Generate financial models and perform risk analysis.

- Sentiment Analysis and Opinion Mining: Analyze and classify sentiments from text data.

- Generative Advertising: Create personalized and engaging advertisements.

- Weather Prediction: Improve weather forecasting models by generating simulated weather data.

- Game AI: Develop intelligent and adaptable AI opponents in games.

How Will Gen AI Impact Jobs?

As the popularity of Gen AI keeps soaring, this question keeps looming. Personally, I believe that AI will never replace humans; rather, people who use AI intelligently will replace those who don’t. So it is unwise to ignore developments in AI. Here, I would like to compare Gen AI with email. When email was first introduced, everybody feared that it would take away the postman’s job. Yet decades later, postal services still exist, even though email’s impact has penetrated much deeper. Gen AI is likely to have similar implications.

One profession that has drawn a lot of attention in this debate is that of the artist. Gen AI may reduce the total number of artists required, while those who remain are expected to become more creative and productive with its help.

Some Gen AI Companies

Below are some pioneering companies operating in the domain of Gen AI.

Synthesia

Synthesia is a UK-based company and one of the earliest pioneers of video synthesis technology. Founded in 2017, it focuses on using synthetic media technology to revolutionize visual content creation while reducing the cost and skills required.

Mostly AI

Mostly AI develops ways to generate realistic synthetic data at scale. It has created state-of-the-art generative technology that automatically learns patterns, structures, and variations from existing data.

Genie AI

The company employs machine learning experts whose technology shares and organizes reliable, relevant information within a law firm, team, or organization, empowering lawyers to draft documents with the collective intelligence of the entire firm.

Gen AI Statistics

- Generative AI is projected to account for 10% of all data generated by 2025.

- According to Gartner, 71% of respondents said that the ROI of intelligent automation within their organizations is high.

- The AI market is projected to grow at an annual rate of 33.2% from 2020 to 2027.

- It is estimated that, by 2030, AI could add US $15.7 trillion, or about 14%, to global GDP.

Limitations of Gen AI

From what we have covered so far, Gen AI may seem all good and glorious, but like any other technology, it has its limitations.

Data Dependence

Generative AI models heavily rely on the quality and quantity of training data. Insufficient or biased data can lead to suboptimal results and potentially reinforce existing biases present in the training data.

Lack of Interpretability

Generative AI models can be complex and difficult to interpret. Understanding the underlying decision-making process or the reasoning behind a generated output can be challenging, which makes it harder to identify and rectify potential errors or biases.

Mode Collapse

In the context of generative adversarial networks (GANs), mode collapse refers to the generator producing limited or repetitive outputs, failing to capture the full diversity of the target distribution. This can result in generated samples that lack variation and creativity.
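One simple way to spot mode collapse in experiments is to train on data whose modes are known in advance, such as a few Gaussian clusters on a plane, and count how many of those clusters the generated samples actually reach. The helper below is a hypothetical sketch of that idea; the cluster centers, sample points, and radius threshold are assumptions you would choose for your own data.

```python
import math

def mode_coverage(samples, mode_centers, radius=1.0):
    # Fraction of known modes with at least one generated sample within `radius`.
    covered = set()
    for point in samples:
        for idx, center in enumerate(mode_centers):
            if math.dist(point, center) <= radius:
                covered.add(idx)
    return len(covered) / len(mode_centers)

# Hypothetical target: four clusters arranged around the origin.
centers = [(5.0, 0.0), (0.0, 5.0), (-5.0, 0.0), (0.0, -5.0)]

collapsed = [(5.1, 0.2), (4.9, -0.1), (5.0, 0.3)]           # every sample in one cluster
diverse = [(5.1, 0.2), (0.1, 4.8), (-4.9, 0.0), (0.2, -5.2)]  # one sample per cluster

print(mode_coverage(collapsed, centers))  # 0.25
print(mode_coverage(diverse, centers))    # 1.0
```

A collapsed generator can produce perfectly realistic samples and still score low here, which is exactly the lack of variation described above.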

Computational Requirements

Training and running generative AI models can be computationally intensive and require substantial resources, including powerful hardware and significant time. This limits their accessibility for individuals or organizations with limited computational capabilities.
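A quick back-of-the-envelope calculation shows why. Just storing a model’s weights takes roughly (number of parameters) × (bytes per parameter). The sketch below assumes a hypothetical 7-billion-parameter model and common storage precisions; it ignores activations, optimizer state during training, and other runtime overhead, so real requirements are higher.

```python
# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}

def weight_memory_gb(num_params, dtype="float16"):
    # Memory needed only for the weights, in gigabytes (1 GB = 1e9 bytes).
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

params = 7e9  # hypothetical 7-billion-parameter model
for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gb(params, dtype):.0f} GB")
```

At float32 this comes to 28 GB for the weights alone, more than a typical consumer GPU offers, which is why lower-precision formats are popular for running such models.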

Ethical and Legal Considerations

The use of generative AI raises ethical concerns, particularly in areas such as deepfakes and synthetic content creation. Misuse of generative AI technology can spread misinformation, violate privacy, or cause harm to individuals or society.

Lack of Control

Users may have limited control over what generative AI models produce, especially when those models run inside autonomous systems. This can result in unexpected or undesirable outputs, limiting the reliability and trustworthiness of the generated content.
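Sampling temperature illustrates both the problem and one partial remedy. Text generators typically score every candidate next token and then sample from the resulting probabilities, so the same prompt can yield different, sometimes unexpected, outputs. Dividing the scores (logits) by a temperature before the softmax sharpens or flattens that distribution. The sketch below assumes the logits have already been produced by some model; the function name and the example scores are hypothetical.

```python
import math
import random

def sample_with_temperature(logits, temperature, rng):
    # Sample an index from softmax(logits / temperature).
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [value / temperature for value in logits]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]  # subtract max for numerical stability
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

logits = [1.0, 2.0, 0.5]  # hypothetical scores for three candidate tokens
rng = random.Random(0)
cautious = [sample_with_temperature(logits, 0.1, rng) for _ in range(10)]
adventurous = [sample_with_temperature(logits, 2.0, rng) for _ in range(10)]
print("T=0.1:", cautious)
print("T=2.0:", adventurous)
```

Lower temperatures make the output more predictable, which restores some control, but they do not make the model’s content any more accurate.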

Limited Context Understanding

While generative AI models have made significant progress in capturing contextual information, they may still struggle with nuanced understanding, semantic coherence, and the ability to grasp complex concepts. This can lead to generating outputs that are plausible but lack deeper comprehension.

Conclusion

So, we have covered Generative Artificial Intelligence at length. Starting with the basic concept of Gen AI, we delved into the various models that can generate new output, along with the opportunities and limitations of this technology.

Key Takeaways:

- What Gen AI is at its core

- The main Gen AI models: GANs, VAEs, and Transformer-based models, with particular attention to their architectures

- Some popular Gen AI products, such as Dall-E, ChatGPT, and Bard

- The applications of Gen AI

- Some of the companies that operate in this domain

- Limitations of Gen AI

I hope you found this blog informative. You will now have something to contribute to the discussions on Generative AI that you are sure to come across with your friends and colleagues. See you in the next blog; till then, Happy Learning!

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.

Frequently Asked Questions

Q. What is the future of generative AI?

A. Generative AI has vast future potential. It can create realistic virtual environments, generate art, enhance content creation, enable personalized user experiences, aid drug discovery, advance robotics, and even simulate scenarios for training purposes.

Q. What comes after generative AI?

A. After generative AI, the next frontier could involve refining its capabilities, improving interpretability, ensuring ethical usage, and exploring applications in fields like medicine, education, entertainment, and scientific research. Continual advancements will likely expand its impact and potential.

Q. What is the danger of generative AI?

A. The danger of generative AI lies in potential misuse or malicious intent, such as generating deepfakes, spreading misinformation, or producing deceptive content. Strict ethical guidelines, responsible development, and robust safeguards are necessary to mitigate these risks.

Q. What are the benefits of generative AI?

A. Generative AI offers numerous benefits, including enhanced creativity, improved design workflows, automated content generation, personalized user experiences, efficient data augmentation, accelerated innovation, and new avenues for exploration in fields like art, gaming, marketing, and research. It empowers users with powerful tools for generating novel and impactful content.

Q. Why do we need generative AI?

A. We need generative AI because it unlocks new possibilities for creativity, problem-solving, and innovation. It can automate tedious tasks, accelerate design iterations, generate realistic simulations, facilitate data augmentation, assist in content generation, and provide valuable insights, enhancing productivity and efficiency across various domains.