Introduction

Welcome to the practical side of machine learning, where the concept of vector norms quietly guides algorithms and shapes predictions. In this exploration, we simplify the complexities to understand the essence of vector norms—basic yet effective tools for measuring, comparing, and manipulating data with precision. Whether you’re new or familiar with the terrain, grasping L1 and L2 norms offers a clearer intuition for models and the ability to transform data into practical insights. Join us on this journey into the core of machine learning, where the simplicity of vector norms reveals the key to your data-driven potential.

What are Vector Norms?

Vector norms are mathematical functions that assign a non-negative value to a vector, representing its magnitude or size. Applied to the difference between two vectors, a norm also gives the distance between them, which is why norms appear throughout machine-learning tasks such as clustering, classification, and regression. This gives us a quantitative measure of how similar or dissimilar two vectors are.

Importance of Vector Norms in Machine Learning

Vector norms are fundamental in machine learning as they enable us to quantify the magnitude of vectors and measure the similarity between them. They serve as a basis for many machine learning algorithms, including clustering algorithms like K-means, classification algorithms like Support Vector Machines (SVM), and regression algorithms like Linear Regression. Understanding and utilizing vector norms enables us to make informed decisions in model selection, feature engineering, and regularization techniques.

L1 Norms

Definition and Calculation of L1 Norm

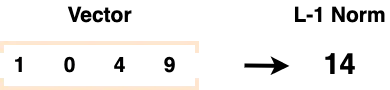

The L1 norm, also known as the Manhattan norm or the Taxicab norm, calculates the sum of the absolute values of the vector elements. Mathematically, the L1 norm of a vector x with n elements can be defined as:

||x||₁ = |x₁| + |x₂| + … + |xₙ|

where |xᵢ| represents the absolute value of the i-th element of the vector.
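The formula above translates almost directly into code. The following is a minimal sketch in plain Python; the function name `l1_norm` and the sample vector are illustrative choices, not part of any library.

```python
def l1_norm(x):
    """Return the L1 (Manhattan) norm: the sum of absolute values."""
    return sum(abs(xi) for xi in x)


x = [3, -4, 1]
print(l1_norm(x))  # |3| + |-4| + |1| = 8
```

For a one-dimensional NumPy array, the same value is usually obtained with `numpy.linalg.norm(x, ord=1)`.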

Properties and Characteristics of L1 Norm

The L1 norm has several properties that make it unique. One of its key characteristics is that it promotes sparsity in solutions. This means that when using the L1 norm, some of the coefficients in the solution tend to become exactly zero, resulting in a sparse representation. This property makes the L1 norm useful in feature selection and model interpretability.

Applications of L1 Norm in Machine Learning

The L1 norm finds applications in various machine learning tasks. One prominent application is in L1 regularization, also known as Lasso regression. L1 regularization adds a penalty term to the loss function of a model, encouraging the model to select a subset of features by driving some of the coefficients to zero. This helps in feature selection and prevents overfitting. L1 regularization has been widely used in linear regression, logistic regression, and support vector machines.

L2 Norms

Definition and Calculation of L2 Norm

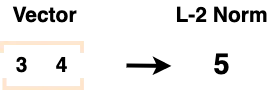

The L2 norm, also known as the Euclidean norm, calculates the square root of the sum of the squared values of the vector elements. Mathematically, the L2 norm of a vector x with n elements can be defined as:

||x||₂ = √(x₁² + x₂² + … + xₙ²)

where xᵢ represents the i-th element of the vector.
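As a quick illustration of the formula, here is a minimal sketch in plain Python. The function name `l2_norm` and the sample vector are illustrative choices only.

```python
import math


def l2_norm(x):
    """Return the L2 (Euclidean) norm: sqrt of the sum of squares."""
    return math.sqrt(sum(xi * xi for xi in x))


x = [3, -4]
print(l2_norm(x))  # sqrt(9 + 16) = 5.0
```

This is the familiar straight-line length of the vector: the point (3, -4) lies 5 units from the origin.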

Properties and Characteristics of L2 Norm

The L2 norm has several desirable properties, making it widely used in machine learning. It is differentiable everywhere except at the origin, and its square, which is what L2 regularization typically penalizes, is smooth everywhere, so it works well with gradient-based optimization. Unlike the L1 norm, the L2 norm does not promote sparsity in solutions. Instead, it distributes the penalty across all coefficients, shrinking them toward zero without setting them exactly to zero.

Applications of L2 Norm in Machine Learning

The L2 norm finds extensive applications in machine learning. It is commonly used in L2 regularization, also known as Ridge regression. L2 regularization adds a penalty term to a model’s loss function, encouraging the model to have smaller and more evenly distributed coefficients. This helps prevent overfitting and improves the model’s generalization ability. L2 regularization is widely used in linear regression, logistic regression, neural networks, and support vector machines.

Comparison of L1 and L2 Norms

Differences in Calculation and Interpretation

The L1 norm and L2 norm differ in their calculation and interpretation. The L1 norm calculates the sum of the absolute values of the vector elements, while the L2 norm calculates the square root of the sum of the squared values of the vector elements. The L1 norm promotes sparsity in solutions, leading to some coefficients becoming exactly zero. On the other hand, the L2 norm provides a more balanced solution by distributing the penalty across all coefficients.
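The difference in behaviour can be seen in a simplified special case. Assuming the features are orthonormal, the lasso objective (1/2)·||y − Xw||₂² + λ·||w||₁ is minimized by soft-thresholding each unregularized coefficient, while the ridge objective (1/2)·||y − Xw||₂² + (λ/2)·||w||₂² is minimized by dividing each one by (1 + λ). The sketch below applies both rules; the function names, the coefficient values, and `lam` are hypothetical and chosen only for illustration.

```python
def soft_threshold(w, lam):
    """L1 shrinkage: move w toward zero by lam, clipping at exactly zero."""
    if w > lam:
        return w - lam
    if w < -lam:
        return w + lam
    return 0.0


def ridge_shrink(w, lam):
    """L2 shrinkage: scale w toward zero; it stays nonzero unless w is zero."""
    return w / (1.0 + lam)


# Hypothetical unregularized coefficients, chosen only for illustration.
ols_coefs = [3.0, -0.5, 0.2, -2.0]
lam = 1.0

l1_coefs = [soft_threshold(w, lam) for w in ols_coefs]
l2_coefs = [ridge_shrink(w, lam) for w in ols_coefs]

print(l1_coefs)  # the two small coefficients become exactly 0.0
print(l2_coefs)  # every coefficient is halved, none is exactly zero
```

With general, correlated features the solutions no longer have this simple form, but the qualitative pattern (exact zeros under L1, uniform shrinkage under L2) is the same one described above.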

Impact on Machine Learning Models

The choice between L1 and L2 norms can significantly impact machine learning models. The L1 norm is effective in feature selection and model interpretability, as it drives some coefficients to zero. This makes it suitable for situations where we want to identify the most important features or variables. The L2 norm, on the other hand, keeps every feature in the model while shrinking all coefficients, which is useful in preventing overfitting and improving the model’s generalization ability.

Choosing between L1 and L2 Norms

The choice between L1 and L2 norms depends on the specific requirements of the machine learning task. The L1 norm (Lasso regularization) should be preferred if feature selection and interpretability are crucial. On the other hand, if most features are expected to contribute and the main goal is to shrink coefficients to curb overfitting, the L2 norm (Ridge regularization) is usually the better choice. In some cases, a combination of both norms, known as Elastic Net regularization, can be used to leverage the advantages of both approaches.

Regularization Techniques Using L1 and L2 Norms

L1 Regularization (Lasso Regression)

L1 regularization, also known as Lasso regression, adds a penalty term to the loss function of a model, which is proportional to the L1 norm of the coefficient vector. This penalty term encourages the model to select a subset of features by driving some of the coefficients to zero. L1 regularization therefore performs feature selection and can help reduce the model’s complexity.
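To make the penalty term concrete, here is a minimal sketch of a lasso-style loss for a linear model without an intercept. It assumes one common convention, in which the squared error is averaged and halved before the penalty `lam * ||w||₁` is added; other texts scale the terms differently. The function name `lasso_loss` and the tiny dataset are hypothetical.

```python
def lasso_loss(X, y, w, lam):
    """Squared-error loss plus an L1 penalty on the coefficients w."""
    n = len(y)
    # Residual for each row: observed value minus the linear prediction.
    residuals = [
        yi - sum(xij * wj for xij, wj in zip(xi, w))
        for xi, yi in zip(X, y)
    ]
    data_term = sum(r * r for r in residuals) / (2 * n)
    penalty = lam * sum(abs(wj) for wj in w)
    return data_term + penalty


# Tiny made-up dataset, for illustration only.
X = [[1.0, 0.0], [0.0, 1.0]]
y = [1.0, 2.0]
w = [1.0, 1.0]

loss = lasso_loss(X, y, w, lam=0.5)
print(loss)  # 0.25 (data term) + 1.0 (penalty) = 1.25
```

Raising `lam` makes nonzero coefficients more expensive, which is what pushes an optimizer toward sparse solutions.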

Simple Explanation:

Imagine you are a chef creating a recipe. L1 regularization is like saying, “Use only the essential ingredients and skip the ones that don’t add flavour.” In the same way, L1 regularization encourages the model to pick only the most crucial features for making predictions.

Example:

For a simple model predicting house prices with features like size and location, L1 regularization might say, “Focus on either the size or location and skip the less important one.”

L2 Regularization (Ridge Regression)

L2 regularization, also known as Ridge regression, adds a penalty term to the loss function of a model, which is proportional to the squared L2 norm of the coefficient vector. This penalty term encourages the model to have smaller and more evenly distributed coefficients. L2 regularization helps prevent overfitting and improve the model’s generalization ability.
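The shrinking effect is easiest to see with a single feature and no intercept. Assuming the objective Σ(yᵢ − w·xᵢ)² + λ·w², setting the derivative to zero gives the closed form w = Σxᵢyᵢ / (Σxᵢ² + λ). The sketch below evaluates it for a few values of λ; the function name `ridge_slope` and the data are hypothetical.

```python
def ridge_slope(x, y, lam):
    """Closed-form ridge coefficient for one feature and no intercept."""
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    sxx = sum(xi * xi for xi in x)
    return sxy / (sxx + lam)


# Made-up data lying exactly on the line y = 2x.
x = [1.0, 2.0, 3.0]
y = [2.0, 4.0, 6.0]

for lam in (0.0, 14.0, 126.0):
    # Slopes are 2.0, 1.0 and 0.2: smaller as lam grows, but never zero.
    print(lam, ridge_slope(x, y, lam))
```

Because λ only enlarges the denominator, the coefficient shrinks smoothly toward zero without reaching it, in contrast to the exact zeros produced by an L1 penalty.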

Simple Explanation:

Imagine you are a student studying for exams, and each book represents a feature in your study routine. L2 regularization is like saying, “Don’t let any single book take up all your study time; distribute your time more evenly.” Similarly, L2 regularization prevents any single feature from having too much influence on the model.

Example:

For a model predicting student performance with features like study hours and sleep quality, L2 regularization might say, “Don’t let one factor, like study hours, completely determine the prediction; keep both study hours and sleep quality in the model, each with a moderate weight.”

Elastic Net Regularization

Elastic Net regularization combines the L1 and L2 regularization techniques. It adds a penalty term to a model’s loss function, which is a weighted combination of the L1 norm and the squared L2 norm of the coefficient vector. Elastic Net regularization provides a balance between feature selection and coefficient shrinkage, making it suitable for situations where both sparsity and balance are desired.
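As a rough sketch, the combined penalty can be written with two knobs: an overall strength and a mixing ratio between the L1 and squared-L2 parts. The parameterization below (with the names `alpha` and `l1_ratio`, and a factor of 0.5 on the squared-L2 term) is one common convention and is an assumption here, not the only way to write it.

```python
def elastic_net_penalty(w, alpha, l1_ratio):
    """Weighted mix of the L1 norm and half the squared L2 norm of w."""
    l1 = sum(abs(wj) for wj in w)
    l2_squared = sum(wj * wj for wj in w)
    return alpha * (l1_ratio * l1 + 0.5 * (1.0 - l1_ratio) * l2_squared)


w = [3.0, -4.0]
print(elastic_net_penalty(w, alpha=1.0, l1_ratio=1.0))  # 7.0, pure L1
print(elastic_net_penalty(w, alpha=1.0, l1_ratio=0.0))  # 12.5, pure squared L2
print(elastic_net_penalty(w, alpha=1.0, l1_ratio=0.5))  # 9.75, a mix of both
```

Setting `l1_ratio` to 1 recovers the lasso penalty and setting it to 0 recovers the ridge penalty, so the two earlier techniques are the endpoints of this family.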

Simple Explanation:

Imagine you are a gardener trying to grow a beautiful garden. Elastic Net regularization is like saying, “Include the most important flowers, but also make sure no single weed takes over the entire garden.” It strikes a balance between simplicity and preventing dominance.

Example:

For a model predicting crop yield with features like sunlight and water, Elastic Net regularization might say, “Focus on the most crucial factor (sunlight or water), but ensure that neither sunlight nor water completely overshadows the other.”

Advantages and Disadvantages of L1 and L2 Norms

Advantages of L1 Norm

- Promotes sparsity in solutions, leading to feature selection and model interpretability.

- Helps reduce the model’s complexity by driving some coefficients to zero.

- Suitable for situations where identifying the most important features is crucial.

Advantages of L2 Norm

- Provides a more balanced solution by distributing the penalty across all coefficients.

- Helps in preventing overfitting and improving the generalization ability of the model.

- Widely used in various machine learning algorithms, including linear regression, logistic regression, and neural networks.

Disadvantages of L1 Norm

- Can result in a sparse solution with many coefficients becoming exactly zero, which may lead to information loss.

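- Computationally more expensive than the L2 norm: the penalty is not differentiable at zero, so there is generally no closed-form solution and iterative solvers such as coordinate descent are needed.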

Disadvantages of L2 Norm

- Does not promote sparsity in solutions, which may not be desirable in situations where feature selection is crucial.

- Keeps every feature in the model, so it may not be suitable for situations where interpretability is a primary concern.

Conclusion

In conclusion, vector norms, particularly L1 and L2 norms, play a vital role in machine learning. They provide a mathematical framework to measure the magnitude or size of vectors and enable us to compare vectors with one another. The L1 norm promotes sparsity in solutions and is useful in feature selection and model interpretability. The L2 norm provides a more balanced solution and helps in preventing overfitting. The choice between L1 and L2 norms depends on the specific requirements of the machine learning task, and in some cases, a combination of both can be used. By understanding and utilizing vector norms, we can enhance our understanding of machine learning algorithms and make informed decisions in model development and regularization techniques.
