Artificial intelligence (AI) can now generate media content that is nearly indistinguishable from content produced by humans. While this technology opens up new possibilities for creativity and innovation, it also poses a significant threat: deepfakes. Deepfakes are AI-manipulated images, videos, or audio files that can be hard to identify as fake, which poses a serious challenge to truth and authenticity in media. In recent years, people have used deepfakes to spread propaganda, manipulate public opinion, and scam others out of sensitive information. Synthetic media built with machine learning algorithms is changing the face of AI.

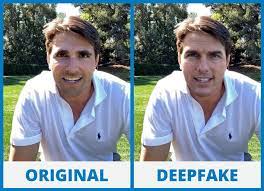

What Are Deepfakes?

Machine learning algorithms create synthetic media known as deepfakes, which superimpose one person’s face onto another’s body or make someone say or do something they didn’t say or do. The term “deepfake” comes from the combination of “deep learning,” a type of machine learning used to create the media, and “fake.”

While deepfakes have been around for several years, they have become more sophisticated with advancements in AI. Today, anyone can create deepfakes relatively quickly using open-source software and just a few hours of training data. The consequences of deepfakes can be severe, including manipulating public opinion, spreading propaganda, and even identity theft.

How Are Deepfakes Created?

At its core, deepfake technology uses machine learning algorithms to synthesize new data based on existing data. The process involves training a computer model to learn what certain people or objects look like in images or videos and then generating new ones based on that knowledge.

One of the most common ways to generate deepfakes is with Generative Adversarial Networks (GANs). A GAN pits two neural networks against each other: a generator that produces fake images, and a discriminator that tries to identify which images are fake. Each network is trained against the other's output, so over time the generator learns to create increasingly realistic images that can fool the discriminator.
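To make the generator-versus-discriminator competition concrete, here is a minimal sketch of the two loss functions in plain Python. It is not a full GAN: there are no networks or training loop, and the discriminator outputs below are made-up numbers chosen for illustration. It assumes the discriminator outputs the probability that a sample is real, and it uses the common non-saturating form of the generator loss.

```python
import math

def bce(prediction: float, target: float) -> float:
    """Binary cross-entropy for a single probability."""
    eps = 1e-12  # guard against log(0)
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """The discriminator wants D(real) near 1 and D(fake) near 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake: list[float]) -> float:
    """The generator wants the discriminator to score its fakes near 1."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)

# Hypothetical discriminator scores ("probability this sample is real").
reals = [0.90, 0.95]
early_fakes = [0.05, 0.10]  # early in training: fakes are easily caught
later_fakes = [0.45, 0.55]  # later: the discriminator is close to guessing

# As the fakes improve, the generator's loss falls and the discriminator's rises.
print(generator_loss(early_fakes) > generator_loss(later_fakes))
print(discriminator_loss(reals, early_fakes) < discriminator_loss(reals, later_fakes))
```

In a real GAN, each loss would be used to update its own network in alternating steps, which is what drives the arms race described above.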

Once someone generates an image or video using deepfake technology, they can distribute it through social media, websites, or other platforms. Due to the sophistication of the technology, these images or videos can be challenging to distinguish from authentic content.

Technical Solutions for Detecting Deepfakes

There are several technical solutions available to detect deepfakes, including the following:

- Software For Detecting AI Output: This type of software analyzes the digital fingerprints left by AI-generated content to determine whether an image, video, or audio file has been manipulated.

- AI-Powered Watermarking: This technique involves adding a unique identifier to an image or text that identifies its origin. This makes it easier to track and trace the source of a piece of media, which can help determine its authenticity.

- Content Provenance: This strategy aims to clarify where digital media – both natural and synthetic – comes from. Maintaining a record of a piece of media’s sources and history helps people detect if someone has tampered with it.
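As a rough illustration of the content provenance idea, the sketch below keeps a hash-linked history of a media file using only Python's standard library. The record fields (`action`, `media_hash`, `prev`, `record_hash`) and the function names are assumptions made for this example, not part of any particular provenance standard. Production schemes would also cryptographically sign each record so the log itself cannot be forged; signing is omitted here.

```python
import hashlib
import json

def content_hash(data: bytes) -> str:
    """SHA-256 fingerprint of some bytes."""
    return hashlib.sha256(data).hexdigest()

def append_record(chain: list[dict], action: str, media: bytes) -> list[dict]:
    """Return a new chain with one more record linked to the previous one."""
    prev = chain[-1]["record_hash"] if chain else ""
    body = {"action": action, "media_hash": content_hash(media), "prev": prev}
    record_hash = content_hash(json.dumps(body, sort_keys=True).encode("utf-8"))
    return chain + [{**body, "record_hash": record_hash}]

def verify(chain: list[dict], media: bytes) -> bool:
    """Check every link, then check the media against the latest record."""
    prev = ""
    for record in chain:
        body = {key: record[key] for key in ("action", "media_hash", "prev")}
        expected = content_hash(json.dumps(body, sort_keys=True).encode("utf-8"))
        if record["prev"] != prev or record["record_hash"] != expected:
            return False
        prev = record["record_hash"]
    return bool(chain) and chain[-1]["media_hash"] == content_hash(media)

# Hypothetical media bytes standing in for real image files.
original = b"raw camera bytes"
cropped = b"cropped image bytes"

chain = append_record([], "captured", original)
chain = append_record(chain, "cropped", cropped)

print(verify(chain, cropped))            # media matches its recorded history
print(verify(chain, b"tampered bytes"))  # media was changed outside the log
```

Because each record includes the hash of the one before it, editing any earlier entry breaks every later link, which is what makes tampering detectable.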

Despite these technical solutions, some limitations should be taken into account, including:

- Lack of universal standards for identifying real or fake content

- Detectors don’t catch everything.

- Open-source AI models may not include watermarks.

Therefore, while technical solutions play an essential role in detecting deepfakes, they should be combined with other measures to minimize the risks posed by this kind of media manipulation.

Limitations of Technical Solutions

Despite the usefulness of technical solutions, they have several limitations that must also be considered. Some of these limitations include:

1. Lack of Universal Standards: No universal standard for identifying real or fake content exists. Different detectors may identify deepfakes differently, making it difficult to achieve consistency across platforms.

2. Detectors Don’t Catch Everything: As AI technology advances, it becomes harder to distinguish between natural and synthetic media. This means that even the most advanced detectors may not recognize some deepfakes.

3. Open-Source AI Models May Not Include Watermarks: While watermarking is a valuable technique for identifying the origin of media, open-source AI models may not include this step, making it difficult to trace the source of some synthesized media.
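The watermarking limitation can be seen in a toy example. The sketch below hides a short bit pattern in the least significant bits of a list of pixel values, then checks for it. This is a deliberately simple scheme chosen for illustration, not how any particular AI vendor watermarks output, and the pixel values and `WATERMARK` pattern are made up.

```python
def embed_watermark(pixels: list[int], bits: list[int]) -> list[int]:
    """Write each watermark bit into the least significant bit of a pixel."""
    if len(bits) > len(pixels):
        raise ValueError("watermark is longer than the image")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # clear the lowest bit, then set it
    return marked

def has_watermark(pixels: list[int], bits: list[int]) -> bool:
    """Report whether the leading pixels carry the expected bit pattern."""
    if len(pixels) < len(bits):
        return False
    return [p & 1 for p in pixels[:len(bits)]] == bits

WATERMARK = [1, 0, 1, 1, 0, 0, 1, 0]                   # hypothetical identifier
image = [200, 201, 198, 197, 202, 203, 199, 196, 190]  # hypothetical grayscale pixels

marked = embed_watermark(image, WATERMARK)

print(has_watermark(marked, WATERMARK))  # generator that embeds the mark
print(has_watermark(image, WATERMARK))   # generator that skips the step
```

If a model never runs the embedding step, there is simply nothing for the detector to find. A scheme this simple is also fragile: re-encoding or resizing the image would likely destroy the pattern, and an unmarked image can match a short pattern by chance.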

It’s important to note that technical solutions should be used with other measures to minimize the risks posed by deepfakes. Laws and regulations, individual awareness, and verification of authenticity before sharing media are all crucial components of an effective response to this form of media manipulation.

A Mix of Responses is Needed

Given the limitations of technical solutions, a mix of responses is necessary to combat deepfakes effectively. Some of these responses include:

1. Laws and Regulations: Governing bodies can be essential in addressing high-risk areas where deepfakes can cause the most harm. For example, laws barring deepfakes targeting candidates for office have been enacted in Texas and California.

2. Individual Awareness: Individuals must be mindful of the source and nature of media, look for inconsistencies, examine metadata, and use AI detection tools to verify authenticity before sharing.

3. Verification of Authenticity: Verifying the authenticity of media before sharing is crucial to minimizing the risk of spreading deepfakes. This can involve running a reverse image search, checking metadata, or using AI detection tools to analyze images and videos.

4. Copyright Law: In some cases, copyright law can be used to take down deepfakes. Companies like Universal Music Group use this strategy to pull impersonation songs from streaming platforms.

5. Education And Research: As AI technology advances, there is a need for continuous education and research into new methods for detecting and handling deepfakes. Collaboration between researchers, tech companies, and governing bodies can help protect individuals from the harmful effects of deepfakes.

Need for a Multifaceted Approach to Tackle Deepfakes in the Era of AI

It’s important to note that governing bodies must also be sensitive to the issue of deepfakes and aware of common ways to minimize the risks of encountering them. The Biden administration and Congress have signaled their intentions to regulate AI. As with other tech policy matters, the European Union is leading the way with the forthcoming AI Act, which puts guardrails on how AI can be used, including provisions for transparency and accountability.

In conclusion, a mix of responses is essential to detect and handle deepfakes in the age of AI. Technical solutions, laws and regulations, individual awareness, verification of authenticity before sharing, copyright law, education, and research all have a role to play in minimizing the risks posed by deepfakes.

Common Ways to Minimize the Risk of Deepfakes

Individuals can take several steps to minimize the risk of encountering deepfakes. Some common ways include:

1. Reverse Image Search: Use a search engine like Google Images or TinEye to check whether an image has been posted online before. If it’s a brand-new image with no earlier history, it may be suspicious.

2. Checking For Inconsistencies: Look for distortions or inconsistencies in the image or video. For example, shadows may be missing or inconsistent, or the lighting may not match across the scene.

3. Examining Metadata: Most images and videos carry metadata, including the date, time, and location of capture. Checking whether this data matches the image’s content can help determine its authenticity.

4. Analyzing The Source of Media: Check the website or publisher of the media to see whether it comes from a reputable and reliable source. Media from an unfamiliar or unverified source is more likely to be fake.

5. Using AI Detection Tools: Various tools, such as Forensically and Izitru, can analyze an image to determine if someone has altered it.

It’s important to remember that these methods may not be foolproof, and some fake media may be challenging to detect. Therefore, it’s always best to verify the authenticity of the media before sharing it.
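The metadata check from the list above can be sketched in a few lines of Python. This example assumes the metadata has already been read into a dictionary; the field names (`captured_at`, `camera_model`, `software`), the one-day tolerance, and the sample values are all hypothetical. Metadata is often stripped when media is uploaded and can be edited, so the flags are prompts for a closer look, not proof of manipulation.

```python
from datetime import datetime, timedelta

def metadata_flags(
    metadata: dict,
    claimed_time: datetime,
    tolerance: timedelta = timedelta(days=1),
) -> list[str]:
    """List reasons the metadata might not fit the story told about the media."""
    flags = []
    captured = metadata.get("captured_at")
    if captured is None:
        flags.append("no capture timestamp")
    elif abs(captured - claimed_time) > tolerance:
        flags.append("capture time does not match the claimed event")
    if not metadata.get("camera_model"):
        flags.append("no camera model recorded")
    if metadata.get("software"):
        flags.append(f"processed with {metadata['software']}")
    return flags

# Hypothetical example: a photo said to show an event on 1 July 2023.
claimed = datetime(2023, 7, 1, 12, 0)
suspicious = {
    "captured_at": datetime(2021, 3, 5, 9, 30),
    "software": "ExampleEditor 1.0",
}
consistent = {
    "captured_at": datetime(2023, 7, 1, 11, 45),
    "camera_model": "ExampleCam X",
}

for flag in metadata_flags(suspicious, claimed):
    print("check:", flag)
print(metadata_flags(consistent, claimed))
```

An empty list does not mean the media is authentic, only that this particular check found nothing to question.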

Our Say

Deepfakes threaten truth and authenticity in media, and detecting and handling them has become more critical than ever. This synthetic media is made with machine learning algorithms. While technical solutions such as AI detection tools, watermarking, and content provenance exist, they have limitations and should be combined with other measures to minimize the risks posed by deepfakes.

A mix of responses is necessary to effectively combat deepfakes, including laws and regulations, individual awareness, verification of authenticity before sharing media, copyright law, education, and research. Combining responses and techniques can minimize the risks posed by deepfakes and maintain trust in media and information in the digital age.