Introduction

I remember the early days of my Machine Learning (ML) projects. I had put a lot of effort into building a really good model. I took expert advice on how to improve it, I thought about feature engineering, and I talked to domain experts to make sure their insights were captured. But then I came across a problem!

How do I implement this model in real life? I had no idea. All the literature I had studied until then focussed on improving models, and I didn’t know what the next step was.

This is why I have created this guide: so that you don’t have to struggle with the question as I did. By the end of this article, you will know how to implement a machine learning model using the Flask framework in Python.

Table of Contents

- Options to implement Machine Learning models

- What are APIs?

- Python Environment Setup & Flask Basics

- Creating a Machine Learning Model

- Saving the Machine Learning Model: Serialization & Deserialization

- Creating an API using Flask

1. Options to implement Machine Learning models

Most of the time, the real use of our Machine Learning model lies at the heart of a product, which may be a small component of an automated mailer system or a chatbot. These are the times when the barriers seem insurmountable.

For example, the majority of ML folks use R or Python for their experiments, but the consumers of those ML models are software engineers who use a completely different stack. There are two ways to solve this problem:

- Option 1: Rewrite the whole code in the language that the software engineering folks work in. This seems like a good idea, but the time and energy required to replicate those intricate models would be an utter waste. Many languages, JavaScript among them, do not have great libraries for ML. One would be wise to stay away from this option.

- Option 2: Take an API-first approach. Web APIs have made it easy for cross-language applications to work well. If a frontend developer needs to use your ML model to create an ML-powered web application, they just need the URL endpoint from which the API is being served.

2. What are APIs?

In simple words, an API is a (hypothetical) contract between two pieces of software: if the user software provides input in a pre-defined format, the latter will extend its functionality and provide the outcome to the user software.
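To make the idea of a contract concrete, here is a minimal sketch in plain Python with no web framework involved. The function name `handle_request` and the `numbers` / `total` fields are made up for illustration; the only point is that both sides agree on the input and output format up front.

```python
import json


def handle_request(body: str) -> str:
    """Hypothetical provider: expects {"numbers": [...]}, returns {"total": ...}."""
    payload = json.loads(body)
    if "numbers" not in payload:
        # The caller broke the contract, so say so in the agreed format.
        return json.dumps({"error": "expected a 'numbers' field"})
    return json.dumps({"total": sum(payload["numbers"])})


# The "user software" only needs to know the format, not the implementation.
response = json.loads(handle_request(json.dumps({"numbers": [1, 2, 3]})))
print(response)
```

A Web API is the same agreement, with HTTP carrying the request and the response between the two programs.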

You can read this article to understand why APIs are a popular choice amongst developers:

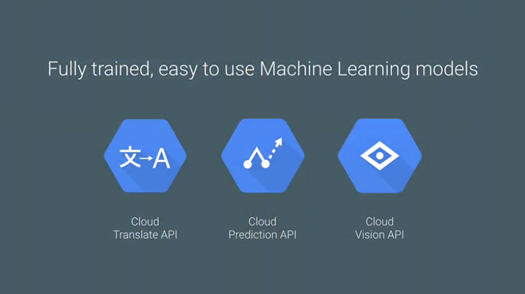

The majority of the big cloud providers and smaller Machine Learning focussed companies provide ready-to-use APIs. They cater to the needs of developers and businesses that don’t have expertise in ML but want to implement ML in their processes or product suites.

One such example of the Web APIs on offer is the Google Vision API.

All you need is a simple REST call to the API via the SDKs (Software Development Kits) provided by Google. Click here to get an idea of what can be done using Google Vision API.

Sounds marvellous, right? In this article, we’ll understand how to create our own Machine Learning API using Flask, a web framework in Python.

NOTE: Flask isn’t the only web framework available. There are Django, Falcon, Hug and many more. For R, we have a package called plumber.

3. Python Environment Setup & Flask Basics

- Creating a virtual environment using Anaconda. If you need to create your workflows in Python and keep the dependencies separated out or share the environment settings, Anaconda distributions are a great option.

- You’ll find a miniconda installation for Python here

wget https://repo.continuum.io/miniconda/Miniconda3-latest-Linux-x86_64.sh

bash Miniconda3-latest-Linux-x86_64.sh

- Follow the sequence of questions, then reload your shell configuration:

source .bashrc

- If you run conda, you should be able to get the list of commands & help.

- To create a new environment, run:

conda create --name <environment-name> python=3.6

- Follow the steps & once done run:

source activate <environment-name>

- Install the Python packages you need; the two important ones are flask & gunicorn.

- We’ll try out a simple Flask Hello-World application and serve it using gunicorn:

- Open up your favourite text editor and create a hello-world.py file in a folder.

- Write the code below:

"""Filename: hello-world.py
"""
from flask import Flask

app = Flask(__name__)

@app.route('/users/<string:username>')
def hello_world(username=None):
    return "Hello {}!".format(username)

- Save the file and return to the terminal.

- To serve the API (to start running it), execute:

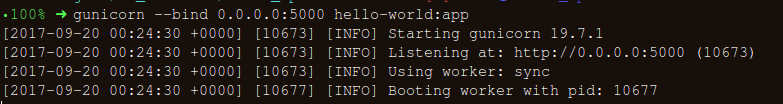

gunicorn --bind 0.0.0.0:8000 hello-world:appon your terminal. - If you get the repsonses below, you are on the right track:

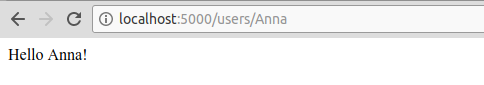

- In your browser, try out:

http://localhost:8000/users/any-name

Voilà! You wrote your first Flask application. As you have now seen, with a few simple steps we were able to create web endpoints that can be accessed locally.

Using Flask, we can wrap our Machine Learning models and serve them as Web APIs easily. Also, if we want to create more complex web applications (that include JavaScript *gasps*), we just need a few modifications.
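If you would rather check the route without starting gunicorn or opening a browser, Flask ships a test client that calls the application in-process. The sketch below repeats the Hello-World app from above so that it runs on its own; the username `any-name` is just an example value.

```python
from flask import Flask

app = Flask(__name__)


@app.route('/users/<string:username>')
def hello_world(username=None):
    return "Hello {}!".format(username)


# test_client() lets us call the route in-process, without gunicorn.
client = app.test_client()
resp = client.get('/users/any-name')
print(resp.status_code, resp.get_data(as_text=True))
```

This is handy for quick checks while developing; serving real traffic still goes through gunicorn as shown earlier.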

4. Creating a Machine Learning Model

- We’ll be taking up a Machine Learning competition: the Loan Prediction Competition. The main objective is to set up a pre-processing pipeline and create ML models, with the goal of making ML predictions easy at deployment time.

Python Code:

- Finding out the null / NaN values in the columns:

for _ in data.columns:
    print("The number of null values in:{} == {}".format(_, data[_].isnull().sum()))

- The next step is creating training and testing datasets:

from sklearn.model_selection import train_test_split

pred_var = ['Gender','Married','Dependents','Education','Self_Employed','ApplicantIncome','CoapplicantIncome',
            'LoanAmount','Loan_Amount_Term','Credit_History','Property_Area']

X_train, X_test, y_train, y_test = train_test_split(data[pred_var], data['Loan_Status'],
                                                    test_size=0.25, random_state=42)

- To make sure that the pre-processing steps are followed religiously even after we are done experimenting, and that we do not miss them at prediction time, we’ll create a custom pre-processing Scikit-learn estimator.

To see how we ended up with this estimator, refer to this notebook

from sklearn.base import BaseEstimator, TransformerMixin

class PreProcessing(BaseEstimator, TransformerMixin):
    """Custom Pre-Processing estimator for our use-case
    """

    def __init__(self):
        pass

    def transform(self, df):
        """Regular transform() that is a help for training, validation & testing datasets
           (NOTE: The operations performed here are the ones that we did prior to this cell)
        """
        pred_var = ['Gender','Married','Dependents','Education','Self_Employed','ApplicantIncome',
                    'CoapplicantIncome','LoanAmount','Loan_Amount_Term','Credit_History','Property_Area']

        # Work on a copy so that the caller's dataframe is left untouched
        df = df[pred_var].copy()

        df['Dependents'] = df['Dependents'].fillna(0)
        df['Self_Employed'] = df['Self_Employed'].fillna('No')
        df['Loan_Amount_Term'] = df['Loan_Amount_Term'].fillna(self.term_mean_)
        df['Credit_History'] = df['Credit_History'].fillna(1)
        df['Married'] = df['Married'].fillna('No')
        df['Gender'] = df['Gender'].fillna('Male')
        df['LoanAmount'] = df['LoanAmount'].fillna(self.amt_mean_)

        gender_values = {'Female' : 0, 'Male' : 1}
        married_values = {'No' : 0, 'Yes' : 1}
        education_values = {'Graduate' : 0, 'Not Graduate' : 1}
        employed_values = {'No' : 0, 'Yes' : 1}
        property_values = {'Rural' : 0, 'Urban' : 1, 'Semiurban' : 2}
        dependent_values = {'3+': 3, '0': 0, '2': 2, '1': 1}

        df.replace({'Gender': gender_values, 'Married': married_values, 'Education': education_values,
                    'Self_Employed': employed_values, 'Property_Area': property_values,
                    'Dependents': dependent_values}, inplace=True)

        # as_matrix() was removed in pandas 1.0; to_numpy() is its replacement
        return df.to_numpy()

    def fit(self, df, y=None, **fit_params):
        """Fitting the Training dataset & calculating the required values from train
           e.g: We will need the mean of X_train['Loan_Amount_Term'] that will be used in
                transformation of X_test
        """
        self.term_mean_ = df['Loan_Amount_Term'].mean()
        self.amt_mean_ = df['LoanAmount'].mean()
        return self

- Convert y_train & y_test to np.array:

y_train = y_train.replace({'Y':1, 'N':0}).to_numpy()
y_test = y_test.replace({'Y':1, 'N':0}).to_numpy()
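The reason the custom estimator computes its means in fit() and only applies them in transform() is that the fill values must come from the training data, even when the estimator is later used on test or production data. Here is a stripped-down sketch of that pattern. The class `MeanFiller` and the tiny dataframes are invented for illustration and are not part of the competition code.

```python
import pandas as pd
from sklearn.base import BaseEstimator, TransformerMixin


class MeanFiller(BaseEstimator, TransformerMixin):
    """Toy transformer (hypothetical): fills NaNs in one column with the training mean."""

    def __init__(self, column="LoanAmount"):
        self.column = column

    def fit(self, df, y=None):
        # Learn the statistic from the training data only.
        self.mean_ = df[self.column].mean()
        return self

    def transform(self, df):
        df = df.copy()  # never modify the caller's frame
        df[self.column] = df[self.column].fillna(self.mean_)
        return df


train = pd.DataFrame({"LoanAmount": [100.0, 200.0, None]})
test = pd.DataFrame({"LoanAmount": [None, 50.0]})

filler = MeanFiller().fit(train)
filled_test = filler.transform(test)
print(filled_test)
```

The missing value in `test` is filled with the mean learned from `train`, not with anything computed from `test` itself, which is exactly what we want once the model is serving new requests.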

We’ll create a pipeline so that all the pre-processing steps and the model form a single scikit-learn estimator.

from sklearn.ensemble import RandomForestClassifier
from sklearn.pipeline import make_pipeline

pipe = make_pipeline(PreProcessing(),
                     RandomForestClassifier())
pipe
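A detail worth knowing before tuning: make_pipeline names each step after its class, in lowercase, and a step's parameters are addressed as `<step name>__<parameter>`. That is where the `randomforestclassifier__` prefix in the parameter grid comes from. The sketch below uses `StandardScaler` purely as a stand-in for the custom PreProcessing step so that it runs on its own.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# StandardScaler is only a stand-in for the custom PreProcessing step.
demo_pipe = make_pipeline(StandardScaler(), RandomForestClassifier())

step_names = [name for name, _ in demo_pipe.steps]
print(step_names)

# Parameters of a step are addressed as "<step name>__<parameter>".
demo_pipe.set_params(randomforestclassifier__n_estimators=20)
print(demo_pipe.get_params()["randomforestclassifier__n_estimators"])
```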

To search for the best hyper-parameters (number of trees, maximum depth, maximum leaf nodes and impurity threshold of the Random Forest), we’ll do a Grid Search:

- Defining param_grid:

# NOTE: min_impurity_split was removed in scikit-learn 1.0;
# on newer versions, tune min_impurity_decrease instead
param_grid = {"randomforestclassifier__n_estimators" : [10, 20, 30],
              "randomforestclassifier__max_depth" : [None, 6, 8, 10],
              "randomforestclassifier__max_leaf_nodes": [None, 5, 10, 20],
              "randomforestclassifier__min_impurity_split": [0.1, 0.2, 0.3]}

- Running the Grid Search:

from sklearn.model_selection import GridSearchCV

grid = GridSearchCV(pipe, param_grid=param_grid, cv=3)

- Fitting the training data on the pipeline estimator:

grid.fit(X_train, y_train)

- Let’s see which parameters the Grid Search selected:

print("Best parameters: {}".format(grid.best_params_))

- Let’s score:

print("Validation set score: {:.2f}".format(grid.score(X_test, y_test)))

- Load the test set:

test_df = pd.read_csv('../data/test.csv', encoding="utf-8-sig")
test_df = test_df.head()

grid.predict(test_df)

Our pipeline is looking pretty swell and is good enough to move on to the most important step of the tutorial: serializing the Machine Learning model.

5. Saving the Machine Learning Model: Serialization & Deserialization

In computer science, in the context of data storage, serialization is the process of translating data structures or object state into a format that can be stored (for example, in a file or memory buffer, or transmitted across a network connection link) and reconstructed later in the same or another computer environment.

In Python, pickling is a standard way to store objects and retrieve them in their original state. To give a simple example:

list_to_pickle = [1, 'here', 123, 'walker']

#Pickling the list

import pickle

list_pickle = pickle.dumps(list_to_pickle)

list_pickle

When we load the pickle back:

loaded_pickle = pickle.loads(list_pickle)

loaded_pickle

We can save the pickled object to a file as well and use it later. This method is similar to creating .rda files, for folks who are familiar with R programming.
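As a minimal sketch of the file-based variant, the snippet below writes the same list to disk and reads it back. The file name `list.pk` and the temporary directory are arbitrary choices made so the example cleans up after itself.

```python
import os
import pickle
import tempfile

list_to_pickle = [1, 'here', 123, 'walker']

# A temporary directory keeps the example from leaving files behind.
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, 'list.pk')  # hypothetical file name

    with open(path, 'wb') as f:   # pickle files are binary
        pickle.dump(list_to_pickle, f)

    with open(path, 'rb') as f:
        restored = pickle.load(f)

print(restored == list_to_pickle)
```

Keep in mind that unpickling can execute arbitrary code, so only load pickle files that come from a source you trust.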

NOTE: Some people also argue against using pickle for serialization [1]. h5py could also be an alternative.

We have a custom class that we need to import while running our training, hence we’ll be using the dill module to pack up the estimator class with our grid object.

It is advisable to create a separate training.py file that contains all the code for training the model (See here for example).

- To install dill:

!pip install dill

import dill as pickle

filename = 'model_v1.pk'

with open('../flask_api/models/'+filename, 'wb') as file:
    pickle.dump(grid, file)

So our model will be saved in the location above. Now that the model is pickled, creating a Flask wrapper around it would be the next step.

Before that, to be sure that our pickled file works fine – let’s load it back and do a prediction:

with open('../flask_api/models/'+filename ,'rb') as f:
    loaded_model = pickle.load(f)

loaded_model.predict(test_df)

Since we already have the pre-processing steps required for new incoming data as part of the pipeline, we just have to run predict(). While working with scikit-learn, it is always easier to work with pipelines.

Estimators and pipelines save you time and headaches, even if the initial implementation seems ridiculous. A stitch in time saves nine!

6. Creating an API using Flask

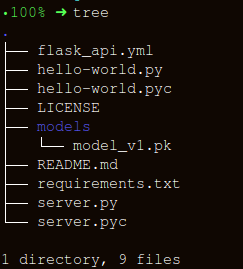

We’ll keep the folder structure as simple as possible:

There are three important parts in constructing our wrapper function, apicall():

- Getting the request data (for which predictions are to be made)

- Loading our pickled estimator

- jsonify our predictions and send the response back with status code: 200

HTTP messages are made of a header and a body. As a standard, the majority of the body content sent across is in JSON format. We’ll be sending the incoming data as a batch (POST url-endpoint/) to get predictions.

(NOTE: You can send plain text, XML, CSV or an image directly, but for the sake of interchangeability of the format, it is advisable to use JSON.)
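To picture what such a batch looks like on the wire, here is a small sketch using only the standard library. A batch is a JSON list with one object per row, which is the same layout that pandas produces with `to_json(orient='records')`. The two applications and their values are made up for illustration.

```python
import json

# Two made-up loan applications; the values are illustrative only.
batch = [
    {"Loan_ID": "LP-A", "Gender": "Male", "ApplicantIncome": 5000},
    {"Loan_ID": "LP-B", "Gender": "Female", "ApplicantIncome": 3000},
]

body = json.dumps(batch)          # what travels in the HTTP body
rows = json.loads(body)           # what the server gets back after parsing
loan_ids = [row["Loan_ID"] for row in rows]
print(loan_ids)
```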

"""Filename: server.py

"""

import os
from io import StringIO

import dill as pickle
import pandas as pd
from flask import Flask, jsonify, request

app = Flask(__name__)

@app.route('/predict', methods=['POST'])
def apicall():
    """API Call

    Pandas dataframe (sent as a payload) from API Call
    """
    try:
        # The client sends a JSON-encoded string, so get_json() returns a str here
        test_json = request.get_json()
        test = pd.read_json(StringIO(test_json), orient='records')

        #To resolve the issue of TypeError: Cannot compare types 'ndarray(dtype=int64)' and 'str'
        test['Dependents'] = [str(x) for x in list(test['Dependents'])]

        #Getting the Loan_IDs separated out
        loan_ids = test['Loan_ID']

    except Exception as e:
        raise e

    clf = 'model_v1.pk'

    if test.empty:
        return(bad_request())
    else:
        #Load the saved model
        print("Loading the model...")
        loaded_model = None
        with open('./models/'+clf,'rb') as f:
            loaded_model = pickle.load(f)

        print("The model has been loaded...doing predictions now...")
        predictions = loaded_model.predict(test)

        """Add the predictions as Series to a new pandas dataframe
                                OR
           Depending on the use-case, the entire test data appended with the new files
        """
        prediction_series = list(pd.Series(predictions))

        final_predictions = pd.DataFrame(list(zip(loan_ids, prediction_series)))

        """We can be as creative in sending the responses.
           But we need to send the response codes as well.
        """
        responses = jsonify(predictions=final_predictions.to_json(orient="records"))
        responses.status_code = 200

        return (responses)

# Minimal 400 handler used by apicall() above (an assumption: any JSON error body would do)
@app.errorhandler(400)
def bad_request(error=None):
    message = {'status': 400, 'message': 'Bad Request: please check your data payload'}
    resp = jsonify(message)
    resp.status_code = 400
    return resp

Once done, run: gunicorn --bind 0.0.0.0:8000 server:app

Let’s generate some prediction data and query the API running locally at http://0.0.0.0:8000/predict

import json

import pandas as pd
import requests

"""Setting the headers to send and accept json responses
"""
header = {'Content-Type': 'application/json',
          'Accept': 'application/json'}

"""Reading test batch
"""
df = pd.read_csv('../data/test.csv', encoding="utf-8-sig")
df = df.head()

"""Converting Pandas Dataframe to json
"""
data = df.to_json(orient='records')
data

"""POST <url>/predict
"""
# data is already a JSON string; json.dumps() wraps it once more, which is
# what the server's pd.read_json() call on request.get_json() expects
resp = requests.post("http://0.0.0.0:8000/predict",
                     data = json.dumps(data),
                     headers= header)
resp.status_code

"""The final response we get is as follows:
"""
resp.json()
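While developing, it can be convenient to exercise a predict-style endpoint without gunicorn, the pickled model or the CSV file. The sketch below is one way to do that under a few assumptions: `StubModel` is a made-up stand-in for the unpickled pipeline, the payload is sent as a plain JSON list (not the double-encoded string used above), and the model is created once at start-up instead of on every request.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)


class StubModel:
    """Stand-in for the unpickled pipeline: approves every application."""

    def predict(self, rows):
        return [1 for _ in rows]


model = StubModel()  # loaded once at start-up, not on every request


@app.route('/predict', methods=['POST'])
def apicall():
    rows = request.get_json(silent=True)
    if not rows:
        # Empty or unparseable payload: answer with 400 Bad Request.
        resp = jsonify(message='Bad Request: please check your data payload')
        resp.status_code = 400
        return resp
    predictions = model.predict(rows)
    result = [{'Loan_ID': row['Loan_ID'], 'prediction': p}
              for row, p in zip(rows, predictions)]
    return jsonify(predictions=result)


client = app.test_client()
ok = client.post('/predict', json=[{'Loan_ID': 'LP-A'}, {'Loan_ID': 'LP-B'}])
bad = client.post('/predict', json=[])
print(ok.status_code, ok.get_json())
print(bad.status_code)
```

Swapping the stub for the real pickled pipeline would also mean converting the rows to a dataframe before calling predict(), as server.py does.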

End Notes

There are a few things to keep in mind when adopting an API-first approach:

- Creating APIs out of spaghetti code is next to impossible, so approach your Machine Learning workflow as if you need to create a clean, usable API as a deliverable. It will save you from jumping through a lot of hoops later.

- Try to use version control for models and the API code; Flask doesn’t provide great support for versioning. Saving and keeping track of ML models is difficult, so find the least messy way that suits you. This article talks about ways to do it.

- Specific to sklearn models (as done in this article): if you are using custom estimators for pre-processing or any other related task, make sure you keep the estimator and training code together so that the pickled model has the estimator class tagged along.
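On the point about keeping track of models, one low-tech option is to put the version in the file name and store a small metadata file next to it. The helper `save_versioned` below is a hypothetical sketch of that idea, not something Flask or scikit-learn provides, and a plain dict stands in for a trained estimator.

```python
import hashlib
import json
import os
import pickle
import tempfile


def save_versioned(model, directory, version):
    """Hypothetical helper: writes model_v<version>.pk plus a JSON sidecar."""
    model_path = os.path.join(directory, 'model_v{}.pk'.format(version))
    payload = pickle.dumps(model)
    with open(model_path, 'wb') as f:
        f.write(payload)

    # The checksum lets the API verify it is loading the file it expects.
    meta = {'version': version, 'sha256': hashlib.sha256(payload).hexdigest()}
    with open(model_path + '.json', 'w') as f:
        json.dump(meta, f)
    return model_path, meta


with tempfile.TemporaryDirectory() as tmp_dir:
    # A dict stands in for a trained estimator here.
    path, meta = save_versioned({'weights': [0.1, 0.2]}, tmp_dir, version=1)
    with open(path, 'rb') as f:
        on_disk = f.read()
    matches = hashlib.sha256(on_disk).hexdigest() == meta['sha256']

print(os.path.basename(path), matches)
```

The file naming mirrors the `model_v1.pk` convention used earlier in this article; anything more elaborate (experiment tracking, a model registry) is a matter of taste and team size.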

The next logical step would be creating a workflow to deploy such APIs on a small VM. There are various ways to do it, and we’ll be looking into those in the next article.

Code & Notebooks for this article: pratos/flask_api

Sources & Links:

[1]. Don’t Pickle your data.

[2]. Building Scikit Learn compatible transformers.

[3]. Using jsonify in Flask.

[4]. Flask-QuickStart.

About the Author

Prathamesh Sarang works as a Data Scientist at Lemoxo Technologies. Data Engineering is his latest love, turned towards the *nix faction recently. Strong advocate of “Markdown for everyone”.
