This article was published as a part of the Data Science Blogathon

Introduction

Before the sudden rise of neural networks, Support Vector Machines (SVMs) were widely considered among the most powerful machine learning algorithms. In many settings they are still less computationally demanding than neural networks, and they are used extensively in industry. In this article, we will discuss the most important questions on SVMs, from the fundamentals to more complex concepts, to give you a clear understanding of SVMs and to help you prepare for Data Science interviews.

Let’s get started!

1. What are Support Vector Machines (SVMs)?

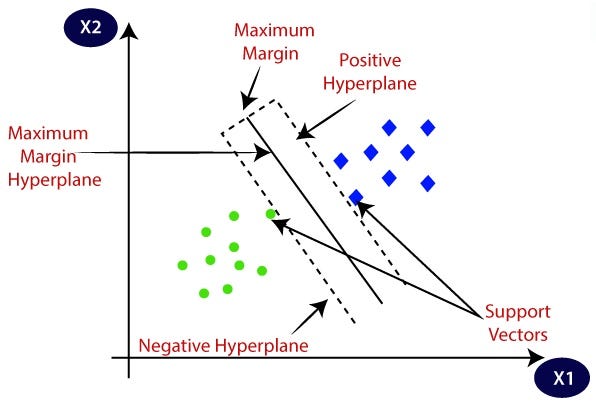

👉 SVM is a supervised machine learning algorithm that can be used for both classification and regression problems.

👉 For classification problems, it separates data points of different classes by finding the hyperplane that maximizes the margin between the classes in the training data.

👉 In simple words, SVM chooses the hyperplane that separates the data points as widely as possible, because maximizing this margin tends to improve the model’s generalization to test or unseen data.

Image Source: link
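To make "as widely as possible" concrete, here is a minimal sketch that measures the geometric margin of a candidate hyperplane w·x + b = 0, i.e. the smallest signed distance from any training point to it. The toy data and the two candidate hyperplanes are hypothetical, and labels are assumed to be +1/−1. Note that this only compares two fixed candidates; an actual SVM solves an optimization problem to find the hyperplane with the largest margin over all possible (w, b).

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def geometric_margin(w: Point, b: float, X: Sequence[Point], y: Sequence[int]) -> float:
    """Smallest signed distance from any training point to the hyperplane w.x + b = 0.

    Labels are assumed to be +1 / -1. A negative result means that at least one
    point lies on the wrong side of the hyperplane.
    """
    norm_w = math.hypot(*w)
    return min(
        label * (w[0] * x[0] + w[1] * x[1] + b) / norm_w
        for x, label in zip(X, y)
    )


# Hypothetical toy data: two linearly separable classes in 2-D.
X = [(1.0, 0.0), (3.0, 1.0), (2.0, -1.0), (-1.0, 0.0), (-2.0, 1.0), (-3.0, -2.0)]
y = [+1, +1, +1, -1, -1, -1]

# Two hypothetical candidate separating hyperplanes, given as (w, b).
candidates = {"A": ((1.0, 0.0), 0.0), "B": ((1.0, 1.0), 0.0)}

margins = {name: geometric_margin(w, b, X, y) for name, (w, b) in candidates.items()}
best = max(margins, key=margins.get)  # the SVM criterion prefers the wider margin
print(margins, "-> prefer", best)
```

Both candidates separate the classes, but "A" leaves a wider gap to the closest points, so it is the one the margin-maximization criterion would favour.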

2. What are Support Vectors in SVMs?

👉 Support vectors are the training instances located on the margin boundaries (and, for a soft-margin SVM, also those that fall inside the margin or are misclassified). For SVMs, the decision boundary is entirely determined by the support vectors.

👉 Any instance that is not a support vector has no influence whatsoever; you could remove it, add more such instances, or move them around, and as long as they stay outside the margin they won’t affect the decision boundary.

👉 To compute predictions, only the support vectors are involved, not the whole training set.
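The sketch below illustrates why only support vectors matter at prediction time. It assumes the standard dual form of the decision function, f(x) = Σ αᵢ yᵢ K(xᵢ, x) + b, with a linear kernel. The three-point dataset is hypothetical and was chosen so its hard-margin solution can be worked out by hand (w = (1, 0), b = 0, α = (0.5, 0.5, 0)); the multipliers are not produced by a solver here.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def linear_kernel(a: Point, b: Point) -> float:
    """K(a, b) = a . b"""
    return a[0] * b[0] + a[1] * b[1]


# Hypothetical toy problem solved by hand: the margin boundaries are x1 = +1 and x1 = -1.
X = [(1.0, 0.0), (-1.0, 0.0), (3.0, 0.0)]
y = [+1, -1, +1]
# Lagrange multipliers of that solution: (3, 0) lies outside the margin, so its alpha is 0.
alpha = [0.5, 0.5, 0.0]
bias = 0.0


def decision_function(x: Point, X: Sequence[Point], y: Sequence[int],
                      alpha: Sequence[float], bias: float) -> float:
    """f(x) = sum_i alpha_i * y_i * K(x_i, x) + b, summed over support vectors only."""
    return sum(
        a * label * linear_kernel(xi, x)
        for xi, label, a in zip(X, y, alpha)
        if a > 0  # only support vectors (alpha_i > 0) contribute
    ) + bias


full = decision_function((2.0, 0.0), X, y, alpha, bias)
# Drop the non-support vector entirely: the prediction does not change.
svs_only = decision_function((2.0, 0.0), X[:2], y[:2], alpha[:2], bias)
print(full, svs_only)
```

The sign of f(x) gives the predicted class; swapping `linear_kernel` for a non-linear kernel keeps the same structure, which is why kernelized SVMs also predict using only their support vectors.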

3. What is the basic principle of a Support Vector Machine?

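An SVM aims to find the optimal hyperplane, the one that separates the classes with the maximum margin. When a dataset is not linearly separable in its original space, the SVM extends this formulation by mapping the data into a higher-dimensional feature space where a separating hyperplane may exist; the kernel trick lets it work in that space without computing the mapping explicitly.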
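A minimal sketch of the kernel trick for one specific case, assuming 2-D inputs and the degree-2 polynomial kernel K(a, b) = (a·b)². For this kernel, the explicit feature map φ(x) = (x₁², √2·x₁x₂, x₂²) gives φ(a)·φ(b) = (a·b)², so the kernel returns the dot product in the 3-D feature space while only ever computing in the original 2-D space. The function names are illustrative.

```python
import math
from typing import Sequence, Tuple


def phi(x: Tuple[float, float]) -> Tuple[float, float, float]:
    """Explicit degree-2 feature map: (x1^2, sqrt(2)*x1*x2, x2^2)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(ui * vi for ui, vi in zip(u, v))


def poly2_kernel(a: Sequence[float], b: Sequence[float]) -> float:
    """K(a, b) = (a . b)^2, computed without leaving the original 2-D space."""
    return dot(a, b) ** 2


a, b = (2.0, 3.0), (4.0, 1.0)
explicit = dot(phi(a), phi(b))   # map both points to 3-D, then take the dot product
via_kernel = poly2_kernel(a, b)  # same value, no mapping performed
print(explicit, via_kernel)
```

For kernels such as the Gaussian RBF, the corresponding feature space is infinite-dimensional, so computing φ explicitly is not even possible; the kernel is the only practical route.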

4. What are hard-margin and soft-margin SVMs?

5. What do you mean by Hinge loss?

Image Source: link

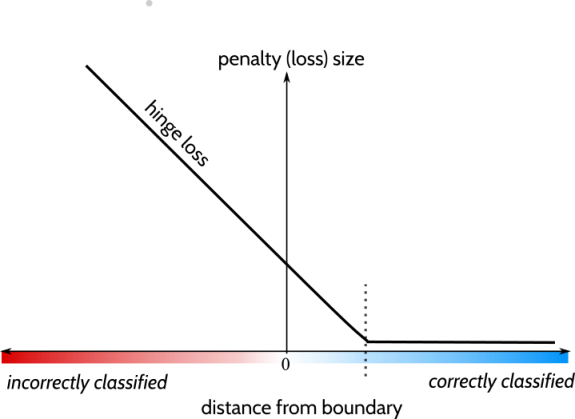

Properties of the hinge loss function, max(0, 1 – t):

👉 It is equal to 0 when t ≥ 1.

👉 Its derivative (slope) is equal to –1 if t < 1 and 0 if t > 1.

👉 It is not differentiable at t = 1.

👉 It penalizes the model not only for misclassified instances but also for correctly classified instances that fall inside the margin (t < 1), and the penalty grows linearly the farther an instance lies from the correct side of the margin.
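The properties above can be checked with a few lines of code. This sketch assumes the common convention t = y·(w·x + b), with labels y ∈ {−1, +1}, since the original figure defining t is not reproduced here. At t = 1 the function has no derivative, so `hinge_slope` returns `None` there (in practice, optimizers use a subgradient, any value in [−1, 0]).

```python
from typing import Optional


def hinge_loss(t: float) -> float:
    """Hinge loss max(0, 1 - t), where t = y * f(x) is the signed score (assumed convention)."""
    return max(0.0, 1.0 - t)


def hinge_slope(t: float) -> Optional[float]:
    """Derivative of the hinge loss: -1 for t < 1, 0 for t > 1, undefined (None) at t = 1."""
    if t < 1:
        return -1.0
    if t > 1:
        return 0.0
    return None  # not differentiable at t = 1


# t = 2.0: correct and outside the margin    -> no penalty
# t = 0.5: correct but inside the margin     -> small penalty
# t = -1.0: misclassified                    -> larger penalty
for t in (2.0, 0.5, -1.0):
    print(t, hinge_loss(t), hinge_slope(t))
```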

6. What is the “Kernel trick”?

Image Source: link

7. What is the role of the C hyper-parameter in SVM? Does it affect the bias/variance trade-off?

8. Explain different types of kernel functions.

Some of the kernel functions are as follows:

👉 Polynomial kernel: It represents the similarity of vectors in a feature space over polynomials of the original variables, which lets the model learn polynomial (non-linear) decision boundaries.

👉 Gaussian Radial Basis Function (RBF) kernel: The Gaussian RBF kernel corresponds to mapping each training instance into an infinite-dimensional feature space, so it is fortunate that the kernel trick lets you compute it without ever performing that mapping.
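As a sketch, the two kernels can be written directly from their usual formulas: the polynomial kernel K(a, b) = (γ·a·b + r)^d and the Gaussian RBF kernel K(a, b) = exp(−γ‖a − b‖²). The parameter names `degree`, `gamma` and `coef0` follow scikit-learn's naming for these hyperparameters, but the default values below are arbitrary choices for illustration, not any library's defaults.

```python
import math
from typing import Sequence


def polynomial_kernel(a: Sequence[float], b: Sequence[float],
                      degree: int = 3, gamma: float = 1.0, coef0: float = 1.0) -> float:
    """K(a, b) = (gamma * a.b + coef0) ** degree."""
    dot = sum(ai * bi for ai, bi in zip(a, b))
    return (gamma * dot + coef0) ** degree


def rbf_kernel(a: Sequence[float], b: Sequence[float], gamma: float = 1.0) -> float:
    """K(a, b) = exp(-gamma * ||a - b||^2).

    Equals 1 for identical points and decays toward 0 as the points move apart;
    a larger gamma makes it decay faster.
    """
    sq_dist = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-gamma * sq_dist)


print(polynomial_kernel((1.0, 2.0), (3.0, 4.0), degree=2))
print(rbf_kernel((0.0, 0.0), (1.0, 1.0), gamma=0.5))
```

The RBF output behaves like a similarity score, which is why γ controls how local the influence of each training instance is.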

Image Source: link

9. How do you formulate an SVM for a regression problem?

10. What affects the decision boundary in SVM?

11. What is a slack variable?

Fig. Picture showing the slack variables

Image Source: link

12. What are the primal and dual problems, and how are they relevant to SVMs?

👉 Under certain conditions, the dual problem has the same solution as the primal problem. Fortunately, the SVM problem meets these conditions, so you can choose to solve either the primal problem or the dual problem; both will have the same solution.
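A small numeric check of this equivalence, as a sketch. It assumes the standard hard-margin formulations: the primal minimizes ½‖w‖² subject to yᵢ(w·xᵢ + b) ≥ 1, and the dual maximizes Σαᵢ − ½ΣᵢΣⱼ αᵢαⱼyᵢyⱼ(xᵢ·xⱼ) subject to αᵢ ≥ 0 and Σαᵢyᵢ = 0. The three-point dataset and both solutions (w = (1, 0), b = 0 and α = (0.5, 0.5, 0)) are hypothetical values worked out by hand, not solver output.

```python
from typing import Sequence, Tuple

# Hypothetical hard-margin toy problem, solved by hand.
X = [(1.0, 0.0), (-1.0, 0.0), (3.0, 0.0)]
y = [+1, -1, +1]
w = (1.0, 0.0)           # primal solution (b = 0)
alpha = [0.5, 0.5, 0.0]  # dual solution (Lagrange multipliers)


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(ui * vi for ui, vi in zip(u, v))


def primal_objective(w: Sequence[float]) -> float:
    """(1/2) * ||w||^2"""
    return 0.5 * dot(w, w)


def dual_objective(alpha: Sequence[float], X, y) -> float:
    """sum_i alpha_i - (1/2) * sum_i sum_j alpha_i alpha_j y_i y_j (x_i . x_j)"""
    n = len(alpha)
    quad = sum(alpha[i] * alpha[j] * y[i] * y[j] * dot(X[i], X[j])
               for i in range(n) for j in range(n))
    return sum(alpha) - 0.5 * quad


def w_from_alpha(alpha: Sequence[float], X, y) -> Tuple[float, ...]:
    """Recover the primal weights from the dual solution: w = sum_i alpha_i y_i x_i."""
    return tuple(sum(a * label * xi[k] for a, label, xi in zip(alpha, y, X))
                 for k in range(len(X[0])))


# Both problems reach the same optimal value, and w can be recovered from alpha.
print(primal_objective(w), dual_objective(alpha, X, y), w_from_alpha(alpha, X, y))
```

The dual form is the one that involves the data only through dot products xᵢ·xⱼ, which is what makes the kernel trick possible.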

13. Can an SVM classifier output a confidence score when it classifies an instance? What about a probability?

14. Suppose you train an SVM classifier with an RBF kernel and it seems to underfit the training dataset. Should you increase or decrease the hyper-parameter γ (gamma)? What about the C hyper-parameter?

15. Are SVMs sensitive to feature scaling?

End Notes

Thanks for reading!

I hope you enjoyed the questions and were able to test your knowledge about Support Vector Machines (SVM).

If you liked this and want to know more, check out my other articles on Data Science and Machine Learning.

Please feel free to contact me on LinkedIn or by email.

Something not mentioned, or want to share your thoughts? Feel free to comment below and I’ll get back to you.

About the author

Chirag Goyal

I am currently pursuing my Bachelor of Technology (B.Tech) in Computer Science and Engineering at the Indian Institute of Technology Jodhpur (IITJ). I am very enthusiastic about Machine Learning, Deep Learning, and Artificial Intelligence.

The media shown in this article are not owned by Analytics Vidhya and are used at the Author’s discretion.