Overview

- Here is a list of Top 15 Datasets for 2020 that we feel every data scientist should practice on

- The article contains 5 datasets each for machine learning, computer vision, and NLP

- By no means is this list exhaustive. Feel free to add other datasets in the comments below

Introduction

For the things we have to learn before we can do them, we learn by doing them

-Aristotle

I am sure everyone can attest to this saying. No matter what your task is, practice makes you better at it. In my Machine Learning journey, I have observed nothing different. In fact, I would go so far as to say that understanding a model itself, say, Logistic regression is less challenging than understanding where it should be applied as its application differs from dataset to dataset.

Therefore, it is highly important that we practice the end-to-end Machine Learning process on different kinds of data and datasets. The more diverse datasets we use to build our models, the more we understand the model. This is also a great way to keep challenging yourself and explore some interesting data being collected around the world!

I will be covering the top 15 open-source datasets in 2020. To make it easier, I have classified them into 3 types: Machine Learning, Computer Vision, and Natural Language Processing. Additionally, all these datasets are freely available – you don’t even need to sign up for getting these.

I have also listed some great resources as you read along where you can get more datasets to explore.

Machine Learning Datasets

Let us first cover a few structured datasets that you can use some of the simpler Machine Learning models on – like kNN, SVM, Linear regression, and the like. As the world wakes up to the need of collecting and maintaining data, there are literally thousands of really interesting datasets that are being released daily – across the industry, academia, and even governments!

For instance, the Harvard public dataset resource also includes datasets of assignment submissions by students. Also, NASA maintains freely available real-time datasets of most of its ongoing projects. I have highlighted the most popular datasets used this year.

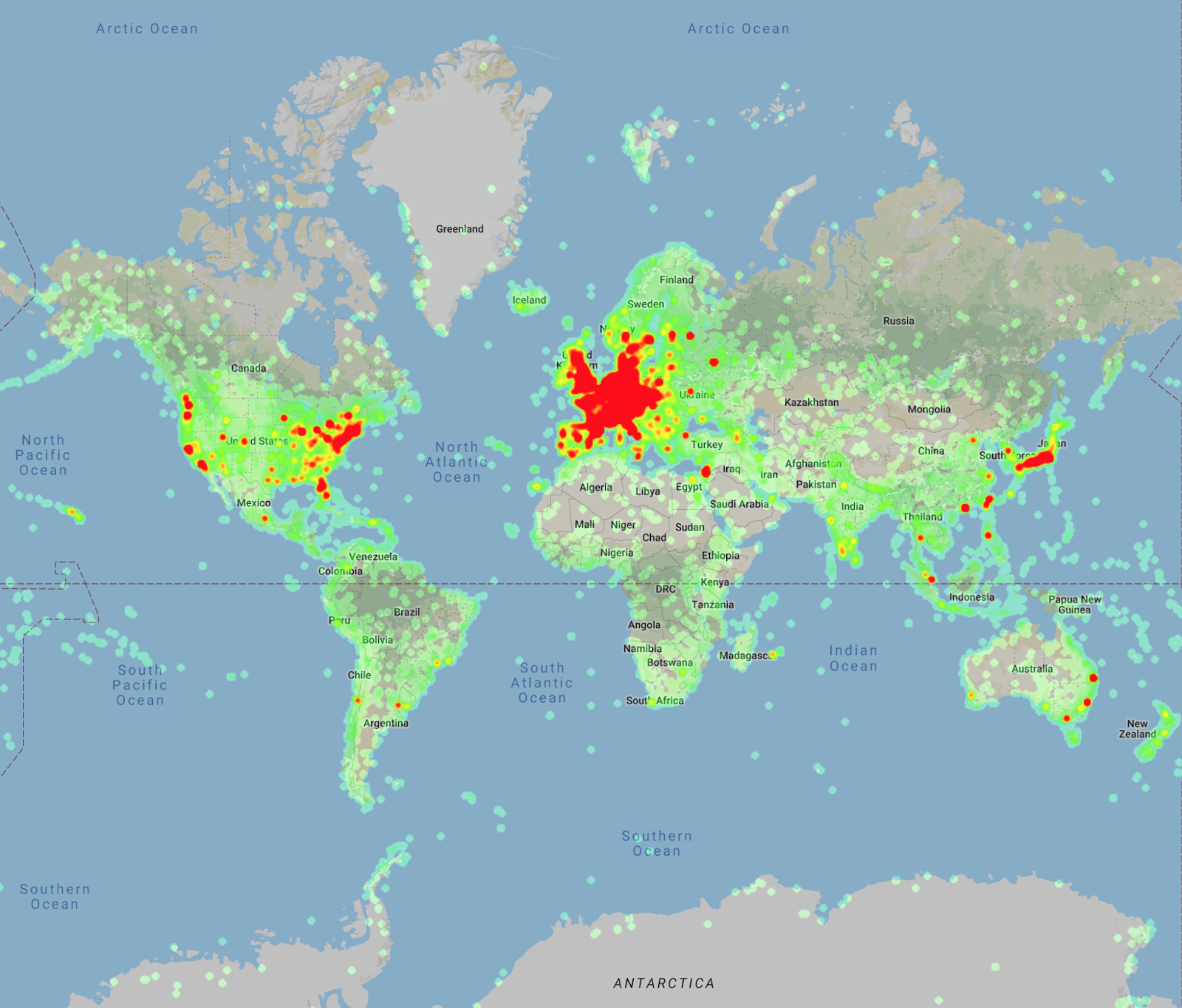

1. John Hopkins COVID-19 dataset

One cannot look back at 2020 and ignore the ‘Rona. While the COVID-19 Pandemic has brought the world to a standstill, it has also made us realize the importance of collecting and maintaining accurate data. Companies, Governments, and hospitals have now released a lot of data about how they faced the Pandemic, but the first among this list is the widely popular and highly-rated global COVID dataset by John Hopkins University.

It’s praiseworthy that they started aggregating the data right from the last week of January and have been updating it every day since. The data has been collected from multiple resources including news portals, official public agencies right down the county level where such data has been made public.

You can download the entire dataset or choose datasets for a particular date on this link.

You can use this dataset for multiple purposes – clustering, classification, time series forecasting, etc. This dataset is also being used widely to generate interactive near real-time dashboards

2. Amphibians Dataset

Source – Wikipedia

This is a really simple dataset consisting of data on amphibians and their presence near water bodies. The data has been collected from GIS and satellite imagery, as well as already available data on the previous amphibian populations around the area. The dataset itself is small with about 189 rows and 23 columns. What I really liked about this dataset is that the columns are of all possible types: Continuous, Categorical, Ordinal, etc.

The Categorical columns have a relatively large number of distinct values and even the target variable has more than 5 distinct values. Thus, this dataset is a great practice for multi-class classification in the case of smaller datasets.

You can download the dataset from here.

3. Seoul Bikesharing Demand

The bike-sharing data of big cities like New York, Chicago, etc. have been in the public domain for many years and have been extensively used for multiple purposes. This year, Seoul has been added to this list of cities sharing the bike-sharing rentals data.

Seoul is one of the most populous cities in the world and thus faces increasing transportation issues despite having a robust public transportation system. Renting public bikes is fast becoming a popular mode of transport that is cheap, less-polluting and fast as well. However, there is now a need to optimize the available rental bikes so that more and more people can use them. Thus, the city of Seoul released this data earlier this year.

You can download the data from the UCI Machine Learning Repository from this link.

(Please also note the dataset of public holidays provided in the same link above)

You can use this data for regression problems and also hone your EDA skills.

4. Cricsheet

Sports analytics is one of the most popular domains that uses Machine Learning models. Data from sporting events all throughout the year(and for previous years) is being regularly collected and released for open access. This data is very useful for judging team performances, individual player performances, etc.

Data from big international tournaments like the NBA, NFL, and the English Premier League has been available for many years and even the data that is not available readily can be created from scraping websites like ESPNCricInfo, or goal.com

However, for those who want a ready repository of all cricket matches, across all leagues, tournaments, and types of matches, Cricsheet is an awesome resource. It has all the above data from the year 2009 and is updated after every match that is played.

Do check out this cool repository with extensive data and hone your Regression and Classification models link.

5. Voice Call Quality

While this list is all about the top datasets, I do want to mention lesser-known data that might flow under your radar. As I was exploring various dataset resources while writing this article, I came across India’s own Open Government Data Platform. The Platform is a great resource for nationwide and statewide data across various Government Departments. Some datasets are even updated daily or monthly.

For instance, take this example of crowd-sourced Voice Call Ratings. All telecom subscribers in India have an option to provide their opinion on the quality of calls via an App and submit it to TRAI(Telecom Regulatory Authority of India)

The OGD Platform hosts monthly data in the form of CSV files on the Network Operator, Type of Network, Location, Call quality, etc., from April 2018 onwards till October of this year. This provides a great opportunity to practice feature engineering, classification, and regression problems.

You can download the monthly data for October 2020(or for any other month) from here.

Deep Learning Datasets

The past decade was the decade of Deep Learning. Marked by pathbreaking advancements, large neural networks have been able to achieve a nearly-human understanding of languages and images. Particularly where NLP and CV are concerned, we now have datasets with billions of parameters being used to train deep learning models. This ranges from real-time datasets consisting of videos to corpora of texts available in several languages.

There are various datasets that still form the benchmark for CV and NLP models. These have withstood the test of time and are still widely used and updated. Some examples include ImageNet, SQuAD, CIFAR-10, IMDb Reviews, etc. Therefore, I have included the newer datasets released this year. The best part of these datasets is that they have been released keeping in mind the various developments that have happened over the years. Thus, they present interesting challenges for those of us who want to put our deep learning skills through the grinder.

Let us start with Computer Vision.

Computer Vision Datasets

6. DeepMind Kinetics

Ever since DeepMind was founded in 2010, it has been at the forefront of AI research. Being acquired by Google in 2014 only enhanced the pathbreaking research put out by Deep Mind, and the DeepMind Kinetics dataset collection is just of these.

This dataset consists of a whopping 650K video clips of human actions and human-human interactions. The most interesting aspect is that these clips are of humans performing uncommon actions like picking apples, taking photos, doing laundry, and many more. There are 700 actions in total, thus having around 900 clips of each class.

Though the first version of this dataset was released way back in 2010, it has been constantly updated until October 2020 to include more and more actions.

You can download the latest version of this dataset from here.

This dataset can be used for multiple tasks such as video classification, object detection, facial recognition, etc.

7. MaskedFaceNet

It is not surprising that COVID-related data features in the CV category as well. Till the time a vaccine is not in sight and not available for large-scale use, the only effective methods to prevent the spread are social distancing and wearing masks. However, while social distancing can be enforced, it has been very difficult to enforce wearing masks.

Oftentimes, I have noticed people wearing masks, but they do not cover the nose or are not worn properly. This defeats the purpose of wearing them and increases the risk of the spread.

For this purpose, there have been quite a few datasets collected of people wearing face masks- both correctly and incorrectly for the purpose of recognition and early-warning. In fact, one can also scrape such images from the internet and create their own dataset.

However, one of the best datasets, that is readily available is the MaskedFace-Net dataset. This well-annotated dataset consists of at least 60K images, each of the people wearing masks correctly and incorrectly. Not only this, even the incorrectly masked faces are divided into types such as the uncovered nose, chin, etc.

This is the only global resource that

- shows realistic faces

- provides further classification on how the mask is being worn.

Thus, it can be used for facial detection, object detection, image classification, etc.

You can download the dataset from here.

8. Objectron

Released by Google earlier this year, Objectron is an annotated dataset of video clips and images that have objects in them. However, the annotation is incredibly extensive:

- the objects are captured from various camera angles in each video

- the 3D bounding boxes for each object have also been annotated.

These bounding boxes contain information on the object’s dimensions, position, etc.

There are a total of 15K annotated video clips and 4M annotated images of objects belonging to any of these categories: bikes, books, bottles, cameras, chairs, cereal boxes, laptops, cups, and shoes.

Staying true to Google’s excellent standards, these are collected from various countries to ensure diversity in data. Not only this, these records can be sued directly in Tensorflow or PyTorch.

This is an extremely useful dataset for edge detection, image classification, object detection, etc.

You can download this dataset from here.

9. Google Landmarks Dataset v2

Google features for the 2nd time in this list, and again, in the domain of Computer Vision. The Landmarks Dataset v2 is an upgraded version of Landmarks v1 which was released in 2019. The Landmarks Dataset basically consists of natural and man-made landmarks across the world.

These images of landmarks have been collected from Wikimedia Commons. Researchers at Google Research team claim that this is the largest dataset offering real-world images(5 million images of over 200K landmarks). The challenges presented by this dataset are also similar to what we come across in the industry – like extremely imbalanced classes and a very high diversity of images.

Here is an example of the landmark images:

A very interesting point is that some of these mages include historical images as well so that models can learn image recognition over time. It can also be used for Instance-level recognition, Image Retrieval, etc.

You can download the dataset from here.

10. Berkley Deep Drive

Autonomous Driving has been the buzzword in the field of AI for the last few years and it is evident in the different ongoing self-driving car projects in the industry and in academia as well. It is really amazing to see the progress that has been made in self-driving vehicles and even witnessing an autonomous vehicle on the road is a sight that will never get old for me.

The risks and safety restrictions associated with unmanned vehicles have created the need to have specialized datasets for such purposes and the Berkley deep drive dataset fills this void perfectly. Also called the BDD100K, it is the largest dataset consisting of 100K annotated video clips and images of vehicles being driven.

These images and clips are also diverse:

- the type of roads as well – like highways, residential streets

- highly-populated regions in the US.

- at different times of the day

- for different weather conditions

The highlight of this dataset is that it can be used for multitask learning such as multi-object detection, semantic segmentation, image tagging, lane detection, etc.

You can download the dataset from here.

NLP Datasets

11) CORD-19

Just like Computer Vision, COVID-19 features primarily in text data as well. When we look at the sheer volume of data that was collected for the purposes of the pandemic, we understand how useful it has been and helped us in dealing with it. The data has been used across various domains and across academia as well.

One must give full credit to the scientific community as well, for getting to work on it almost immediately and publishing research papers dealing with various aspects of the COVID-19 pandemic.

The CORD-19 dataset is a collection of research papers and articles not only about COVID-19 but also about the various related coronaviruses across peer-review medical journals. It is maintained daily by the famous Allen Institute for AI. It has been specifically maintained for the purpose of extracting important and new insights from all the research that is happening across the world. CORD-19 contains text from over 144K papers with 72K of them having full texts. It has been widely used for building many text mining tools and has been downloaded over 200K times.

You can use this dataset for a variety of NLP tasks such as NER, Text Classification, Text Summarization, and many more.

You can download the dataset from here.

12) TaskMaster-2

At this point, it should come as no surprise to you when a dataset from Google features in this list. It just goes to show the sheer variety of high-quality datasets being released by Google for open access through the years. The latest in this list is the TaskMaster-2.

The dataset consists of over 17K two-person spoken dialogues across various domains like restaurants, movies, flights, sports, etc.

These conversations are centered on searches and recommendations. The most interesting part is how these dialogues were recorded. Humans interacted with humans across a web-interface under the impression that they were interacting with a chatbot. These conversations are also annotated to mark proper nouns, times, quantities, etc.

The original Taskmaster-1 was released back in 2019 and the TaskMaster-2 is an updated version of it with more diversity of dialogues. It can be used for a variety of tasks such as Sentiment Analysis, Question-Answering, NER, text generation, etc.

You can download the dataset from here.

13) DoQA

While we are on the topic of Question-Answering, there is another dataset released in 2020 that adds to the famous list of Question-Answering datasets like the Quora Question-Answer Pairs, SquAD, etc.

The DoQA dataset exclusively caters to domain-specific Question-Answer conversations.

More precisely, it contains over 10K questions asking information across 3 domains: cooking, movies, and traveling. These questions and answers have been sourced from Stack Exchange, and thus, the answers have been provided by domain experts who can answer the questions to the best of their abilities. It also includes some unanswerable questions and some conversations centered around the questions as well.

Such datasets can be used to train chatbots and can also be used for text classification, summarization, etc.

You can download the dataset from here.

14) Recipe NLG

Text Generation is one of the most exciting fields in the NLP domain in recent years. Large Deep learning models like GPT-2 have been able to write entirely new Shakespeare-like texts when trained on the appropriate data. Apart from these fun and innovative ideas, it is also being used to restore damaged manuscripts and documents.

However, most of these models are used to being trained in completely unstructured data. Semi-structured data, where parts of the data are structured and parts of it aren’t, presents new challenges of its own. Thus, instead of training models on generic datasets, it is more efficient to create models for specific domains that would be trained on data specific to that domain.

One such domain is that of cooking and recipes. There have been several Computer Vision models dealing with recipes, such as identifying the ingredients from the image of the cooked dish or vice versa. However, not much data exists for tasks specifically related to NLP. The RecipeNLP dataset attempts to fill this void by maintaining recipes in text-form only and focussing on the structure and logic of the recipe instructions.

The dataset consist of over 2 million recipes scraped from various cooking websites on the internet and then cleaned and annotated. Along with the dataset, the creators have also tested a Natural Language Generation(NLG) model based on GPT-2; you provide the ingredients and quantities, and the model generates a new recipe for them. Thus, not only can you use it for tasks like NER, text classification, this dataset is also tailored for complicated tasks like text generation.

You can download the RecipeNLG dataset from here: https://recipenlg.cs.put.poznan.pl/

15) CC100

I would like to conclude this article with one of the largest corpora I have come upon. originally created by Facebook for their latest Transforme-based Language Model, XLM-R, this corpus consists of tokens from over 100 languages. The English corpus itself is over 80GB in size and some languages like Hindi and Chinese include their romanized versions as well.

The data has been crawled from the Common Crawl database. The Common Crawl project is a huge database of webpages in various languages. it is updated every month and has been cleaned by Facebook to extract tokens from the available languages. Every month, about 24TB of data is released and it is commendable that Facebook has extracted information from many months-worth of data and released it for public use. This extracted data is off around 2.5TB

This data can be used for multiple purposes like Machine Translation, NER, Text Classification, etc, for all these languages.

You can download this data from here.

End Notes

Thus, we saw some of the top datasets of 2020. 2020 also marks the end of the 2nd decade of the 21st Century which has already thrown curveballs at the human-race. However, these datasets go to show that researchers, data scientists across all domains have put in the efforts to collect and maintain user data that would shape the research in AI for years to come.

I encourage all of you to explore these datasets and enhance your data cleaning, feature engineering, and model-building skills. Each dataset represents its own set of challenges. By practicing with them, we can get an idea of how datasets actually are in the industry.

What are some of the datasets that you came to know about in 2020? Do share them below!