Machine learning is a branch of artificial intelligence in which a machine learns from data, with the help of models we create, to identify patterns and make predictions. If you’re considering a career in data science, one of the most important things to know is probability theory. It plays a crucial role in data science, and you should aim to master it. Have you ever come across the word “probability”? Don’t worry! We’ll discuss it here, and by the end of this lecture you will be confident with the basics. We’ll cover the important things you should know without wasting any time.


Introduction to Basics of Probability Theory

Probability describes how likely an event is to occur, and its value always lies between 0 and 1 (inclusive). For example, suppose you have two bags, named A and B, each containing 10 red balls and 10 black balls. If you pick a ball at random from either bag (without looking inside), you cannot know in advance which ball you will get. This is where probability comes in: it tells us how likely you are to pick a black ball or a red ball. Note that we’ll denote probability by P from now on; P(X) means the probability that an event X occurs.

P(Red ball) = P(Bag A) × P(Red ball | Bag A) + P(Bag B) × P(Red ball | Bag B)

This equation gives the probability of drawing a red ball. Here I have introduced the concept of conditional probability (the probability of an event when a condition is given). P(Bag A) = 1/2 because we have 2 bags, out of which we select Bag A. P(Red ball | Bag A) should be read as “the probability of drawing a red ball given Bag A”; the word “given” specifies the condition, which is Bag A in this case, so it is 10 red balls out of 20 balls, i.e. 10/20. The same values hold for Bag B. So let’s solve:

P(Red ball) = 1/2 × 10/20 + 1/2 × 10/20 = 1/2
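The calculation above can be checked with a few lines of Python. This is only a minimal sketch: the dictionary names and the helper `total_probability` are made up for illustration, and the numbers are the ones from the two-bag example.

```python
from fractions import Fraction

# Probability of choosing each bag (two bags, picked at random).
p_bag = {"A": Fraction(1, 2), "B": Fraction(1, 2)}

# Conditional probability of a red ball given the bag: 10 red out of 20 balls.
p_red_given_bag = {"A": Fraction(10, 20), "B": Fraction(10, 20)}

def total_probability(prior, conditional):
    """Sum P(bag) * P(event | bag) over all bags."""
    return sum(prior[bag] * conditional[bag] for bag in prior)

p_red = total_probability(p_bag, p_red_given_bag)
print(p_red)  # 1/2
```

Using `Fraction` instead of floats keeps the result exact, so it matches the hand calculation digit for digit.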

Similarly, try to find the probability of drawing a black ball. As a further exercise, find the probability of drawing two consecutive red balls from a bag after transferring one black ball from Bag A to Bag B.

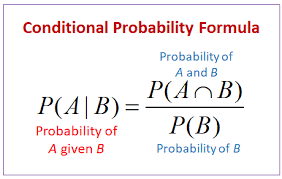

Now, if you look at the image above, you may be wondering what it shows. I have not yet introduced “intersection” from set theory, but the concept itself was already discussed above, so there is nothing new in the image. Here we are finding the probability that an event A occurs given that event B has already occurred. The right-hand side of the equation is the probability that both events occur, divided by the probability that event B occurs. The numerator has an inverted-U symbol (∩) between A and B, which we call “intersection” in set theory.
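To make the definition P(A | B) = P(A ∩ B) / P(B) concrete, here is a small sketch. The fair six-sided die and the two events are assumptions chosen for illustration; they do not come from the bag example, and the function names are hypothetical.

```python
from fractions import Fraction

# Hypothetical example: one roll of a fair six-sided die.
die_outcomes = {1, 2, 3, 4, 5, 6}
A = {2, 4, 6}  # event A: the roll is even
B = {4, 5, 6}  # event B: the roll is greater than 3

def prob(event):
    """Probability of an event when all die outcomes are equally likely."""
    return Fraction(len(event), len(die_outcomes))

def conditional(a, b):
    """P(a | b) = P(a ∩ b) / P(b); only defined when P(b) > 0."""
    return prob(a & b) / prob(b)

p_a_given_b = conditional(A, B)
print(p_a_given_b)  # 2/3
```

The set operator `&` plays the role of the intersection symbol: of the three outcomes in B, two are also in A.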

Basic concepts of probability theory

There are a few key concepts that are important to understand in probability theory. These include:

- Sample space: The sample space is the collection of all potential outcomes of an experiment. For example, the sample space of flipping a coin is {heads, tails}.

- Event: An event is a collection of outcomes within the sample space. For example, the event of flipping a head is {heads}.

- Probability: The probability of an event is a number between 0 and 1 that represents the likelihood of the event occurring. A probability of 0 means that the event is impossible, and a probability of 1 means that the event is certain.
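The three concepts above map naturally onto Python sets. The sketch below assumes a fair coin, so every outcome is equally likely; the function name `probability` is an illustrative choice.

```python
from fractions import Fraction

# Sample space of a single coin flip.
sample_space = {"heads", "tails"}

def probability(event, space):
    """P(event) when every outcome in `space` is equally likely."""
    if not event <= space:
        raise ValueError("an event must be a subset of the sample space")
    return Fraction(len(event), len(space))

p_heads = probability({"heads"}, sample_space)        # the event {heads}
p_impossible = probability(set(), sample_space)       # no outcome at all
p_certain = probability(sample_space, sample_space)   # any outcome
print(p_heads, p_impossible, p_certain)  # 1/2 0 1
```

The empty event and the full sample space give the two extremes, 0 and 1.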

Introduction to Bayes Theorem

So far we’ve discussed just the basics of probability theory. Before we proceed, let me introduce the “random variable”, a variable whose possible values are the outcomes of a random phenomenon (usually encoded as numbers). In the example above, Bag is a random variable that can take the values Bag A and Bag B. Ball is also a random variable, which can take the values red and black.

Now consider the same setup with a different question: what is the probability that the ball was drawn from Bag A, given that the ball is red? Notice that here we are already told the color of the ball, and we have to find the probability that it came from Bag A. Earlier, by contrast, we found the probability of drawing a red ball given Bag A. The probability of a bag before we observe the ball, such as P(Bag A), is called the prior probability. The probability of a bag after we observe the ball’s color, such as P(Bag A | red ball), is called the posterior probability. So the answer to the question at the beginning of this paragraph is:

P(Bag A | red ball) = [P(red ball | Bag A) × P(Bag A)] / [P(red ball | Bag A) × P(Bag A) + P(red ball | Bag B) × P(Bag B)]

The above equation is what we call Bayes’ theorem, one of the most important theorems in probability, named after the Reverend Thomas Bayes.
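Bayes’ theorem for the two-bag example can be sketched in Python as follows. The function `posterior` and the dictionary names are illustrative, not part of any library. The second calculation uses a made-up bag composition, labeled as hypothetical, only to show that the posterior moves away from the prior when the bags differ.

```python
from fractions import Fraction

def posterior(prior, likelihood, hypothesis):
    """Bayes' theorem: P(hypothesis | evidence) from priors and likelihoods."""
    # Denominator: total probability of the evidence over all hypotheses.
    evidence = sum(prior[h] * likelihood[h] for h in prior)
    return prior[hypothesis] * likelihood[hypothesis] / evidence

# Values from the article: each bag has 10 red balls out of 20.
prior = {"A": Fraction(1, 2), "B": Fraction(1, 2)}
red_given_bag = {"A": Fraction(10, 20), "B": Fraction(10, 20)}
p_a_given_red = posterior(prior, red_given_bag, "A")
print(p_a_given_red)  # 1/2

# Hypothetical variation (not from the article): Bag B has 5 red, 15 black.
red_given_bag_alt = {"A": Fraction(10, 20), "B": Fraction(5, 20)}
p_a_given_red_alt = posterior(prior, red_given_bag_alt, "A")
print(p_a_given_red_alt)  # 2/3
```

With identical bags, seeing a red ball tells us nothing, so the posterior equals the prior of 1/2. In the hypothetical variation, red balls are more common in Bag A, so a red ball makes Bag A more likely.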

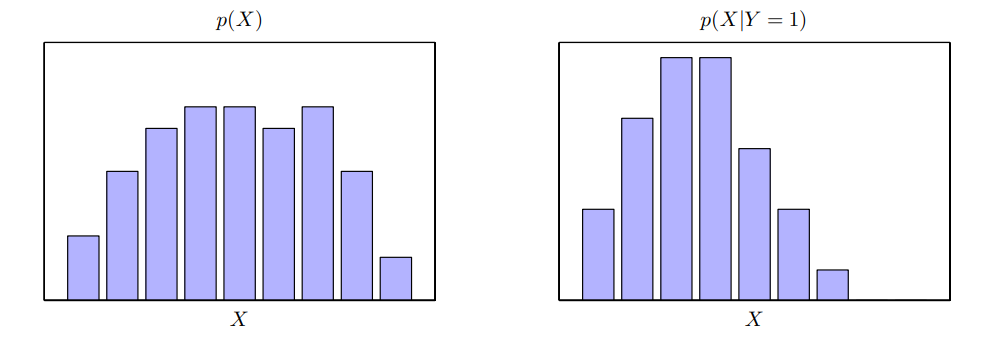

Note that everything we have studied so far concerned discrete events. In machine learning, we often need continuous variables instead of discrete ones. What do we do then? Look at the image given below and try to understand the difference between the two types that I just mentioned!

What is Probability Density?

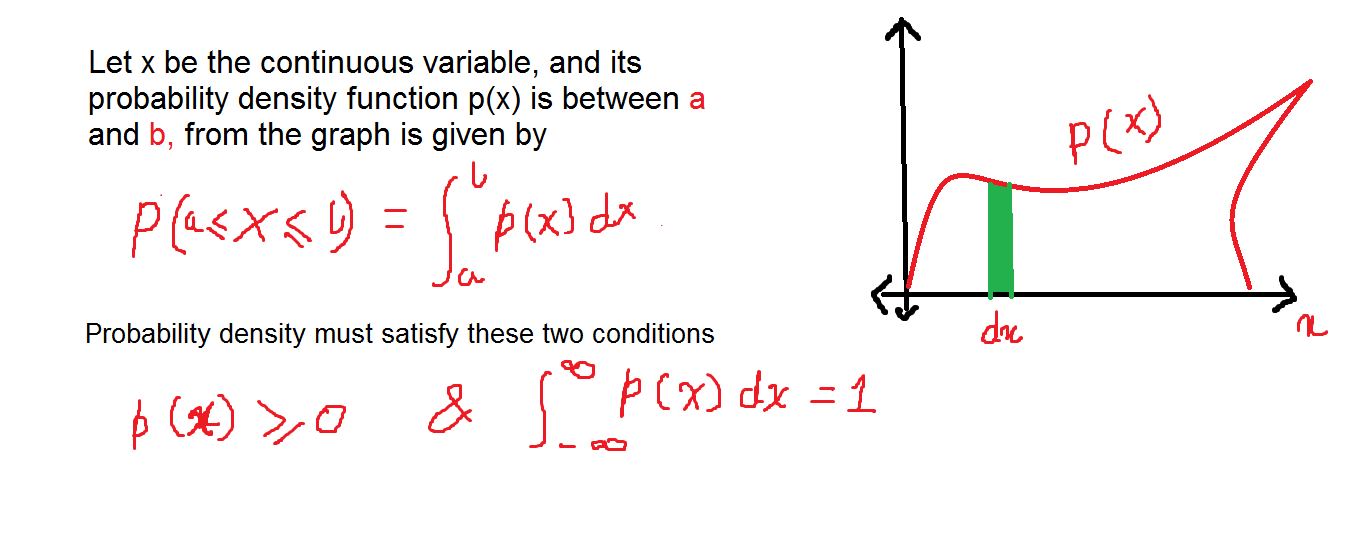

As I said, we have been considering the probabilities of discrete events, but we also need to handle continuous ones. To do this, I would like to introduce the concept of “probability density”. If the probability that a real-valued variable x falls in the interval (x, x + dx) is given by p(x) dx as dx → 0, then p(x) is called the probability density over x. The probability that x lies in the interval [a, b] is then given by the integral of p(x) dx from a to b. This is shown in the accompanying image.

The image also lists the two conditions that a probability density must satisfy: p(x) ≥ 0 for all x, and the integral of p(x) dx over the whole real line equals 1.
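As a rough numerical illustration, the sketch below takes the standard normal density as an example of p(x) (an assumption; the article does not name a specific density) and checks the two standard conditions on a density: it is never negative, and it integrates to 1. The helper `integrate` is a simple midpoint rule written for this example, not a library function.

```python
import math

def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of a normal distribution (chosen here only as an example)."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def integrate(f, a, b, steps=100_000):
    """Approximate the integral of f over [a, b] with the midpoint rule."""
    width = (b - a) / steps
    return width * sum(f(a + (i + 0.5) * width) for i in range(steps))

# Condition 1: the density is never negative (checked on a grid of points).
non_negative = all(normal_pdf(x / 10) >= 0 for x in range(-100, 101))

# Condition 2: the density integrates to 1 over the real line
# (approximated on [-10, 10], where almost all of the mass lies).
total_mass = integrate(normal_pdf, -10.0, 10.0)

# P(a <= x <= b) is the area under p(x) between a and b.
p_within_one_sigma = integrate(normal_pdf, -1.0, 1.0)

print(non_negative, round(total_mass, 6), round(p_within_one_sigma, 4))
# True 1.0 0.6827
```

Note that p(x) itself is not a probability: only the area under it over an interval is.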

Great! You have just learned a difficult idea in a much easier way, haven’t you?

How does it relate to machine learning?

So we have learned the basics of probability theory, but you may still be wondering how this relates to machine learning. We use probability whenever we have to make predictions. Given data and a model trained on it, we can predict outcomes that we have not yet observed. Consider a dataset of temperatures recorded in a region on different dates. With the help of a model, we can predict how many water bottles need to be stocked in that region.

I have tried to cover as much as I can in this article, but there is a lot more to learn in probability theory; these are just the basics. So far we have discussed the definition of probability, conditional probability, Bayes’ theorem, and probability density. Good luck!

Conclusion

In summary, we’ve explored the foundational aspects of probability theory, including key concepts and an introduction to Bayes’ theorem. The discussion of probability density showed how probabilities are defined for continuous variables. Finally, the connection to machine learning shows the role probability plays in making predictions from data.

FAQs

Probability theory is like a special math tool that helps us deal with things that might happen but aren’t for sure. It lets us figure out how likely different outcomes are, which is useful for making decisions, understanding data, and even building machines that learn from experience.

Probability theory is used in many areas, including flipping coins, rolling dice, predicting exam scores, estimating weather, modeling disease outbreaks, designing gambling games, assessing engineering risks, and conducting statistical sampling.

The media shown in this article are not owned by Analytics Vidhya and are used at the Author’s discretion.